XR World Weekly 009

Editor’s Note

After the long National Day holiday (yes, the editorial team took a week off too), here is XR World Weekly 009, covering the highlights of the past three weeks. Ready? 😉

This issue introduces a new section: SmallNews. It collects items that may be “interesting” but are best taken with a grain of salt for now.

For example, this issue files “Immersed has released more information about Visor and no longer offers the 2.5K version” under SmallNews. Why does it belong there?

Until now, we have been very cautious about including this kind of content in the newsletter unless we could dig up deeper information. When we started XR World Weekly, our goal was to give you substantive, practical content: ideally, every item should spark some inspiration or help you in your upcoming work.

Although Visor looks impressive, at present we know nothing about it beyond “there will be such a headset soon”. ThrillSeeker also voiced a concern in his latest video: an amazing product that never actually ships would have a very negative impact on the industry.

After some internal discussion, we concluded that we cannot assume nobody wants this kind of news; after all, it is easy to read. But we do need to be careful about how we recommend it. So the SmallNews section will hold items that make for light reading but may not help you in practice yet.

Enough preamble. Make yourself a cup of tea 🍵 and take your time with XR World Weekly 009~

Table of Contents

BigNews

- Meta released Quest 3 at the Meta Connect conference
- Come join the competition, PICO or Rokid are both welcome!
- Unity apologized.
- The updates brought by XRI 2.4.0 and 2.5.0
- Xcode 15.1 beta && visionOS 1.0 beta4 version has been released

Idea

- Where can I find the UI materials for practicing visionOS?
- What would it be like to use ChatGPT on Apple Vision Pro?
- Facetime updated the AR effect of gesture recognition.
- Using AI + AR, achieve seamless integration of virtual and real spaces.
- Perhaps the strangest name AR music App (one of them)?

Video

- The Principle of Spatial Video for Pre-Exposing iPhone 15 Pro
- Dilmer Valecillos Published Apple Native visionOS Tutorial

Article

- The Battle of 3D File Formats
- Awesome OpenUSD: Comprehensive Unofficial Documentation about USD
- AR/VR Guide
- Tutorial: Create an AI Robot NPC using Hugging Face Transformers and Unity Sentis

Code

- SwiftSplash: New Official Demo of visionOS

SmallNews

- Immersed has released more information about Visor and no longer offers the 2.5K version.
- Lex and Zuck had a very realistic remote conversation using Quest Pro.
- Persona may allow you to edit glasses
- The refresh rate of Apple Vision Pro seems to have been confirmed?

BigNews

Meta released Quest 3 at the Meta Connect conference

Keywords: Meta, Quest 3, MR, Passthrough, Augments, Multimodal Input

The annual XR event, Meta Connect, was held as scheduled on September 27th and 28th. At the event, Mark Zuckerberg announced Meta’s next-generation headset, Meta Quest 3.

Perhaps influenced by Apple’s Vision Pro announcement, Meta spent a good part of the conference explaining the mixed reality features of Quest 3. We have therefore put together a separate article, “What interesting updates are there at the Meta Connect conference in 2023?”, that walks through the notable technical updates from this year’s Connect.

Come join the competition, PICO or Rokid are both welcome!

Keywords: VR, PICO, Rokid, AR, Jam

If you have been studying VR/AR for a while and want to test what you have learned with some small projects, entering a competition is a great way to do it. In fact, articles such as How to Learn VR Development Online, Mostly Free, in One-Year, Week-by-Week suggest finding and entering a game jam around the sixth month to test yourself.

If you are interested in VR, you can try participating in the 2023 PICO Dev Jam organized by PICO!

Note that this competition does not require entries to be implemented in code: as long as you have a good idea and can present it in concrete form, you can take part and compete for the ¥60,000 (about $8,000) first prize.

The event is fully online; submit your entry before November 10, 2023, to take part. International users can register on the event homepage, and users in mainland China can sign up through the official website.

If you are more into AR, Rokid’s Second AR Application Development Competition might suit you better. It has two tracks, AR applications/games and Master Space mini programs, and the AR applications/games track is further split into social and campus groups. The first-place winners in these three categories receive ¥10,000, ¥30,000, and ¥100,000 respectively.

Compared with the PICO competition, the Rokid contest puts more weight on actual development results, so you need a program that really runs in order to take part in the judging. Participants must register before October 30, 2023, and submit preliminary entries before December 10.

If you are interested in the Rokid competition, you can sign up directly by filling out this form.

Unity apologized.

Keywords: Unity, Runtime Fee

The controversy over Unity’s install-based Runtime Fee seems to have come to a temporary end with Unity’s official letter of apology.

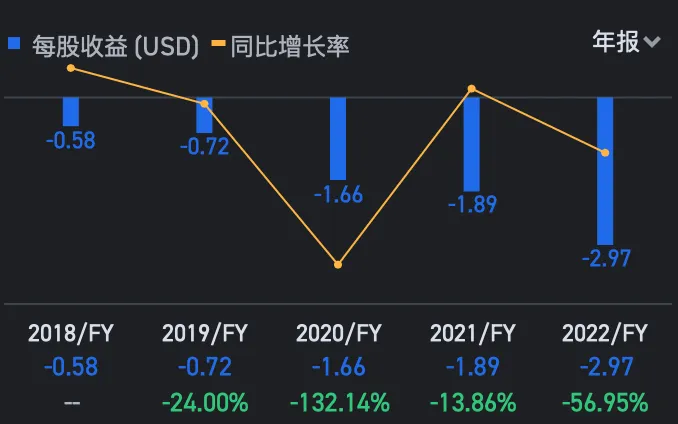

In the letter, Marc Whitten, President of Unity Create (the Unity division responsible for the engine), said that Unity had not talked with the community enough before announcing the Runtime Fee policy. Although the intent was to keep supporting developers with the game engine, the change was made without proper consultation. (Ruan Yifeng’s article also notes that Unity has not turned a profit in any year since going public in 2020.)

In response to developers’ criticism, Whitten announced the following pricing changes:

- Unity Personal remains free, and its revenue cap will rise to $200,000. The requirement to show the “Made with Unity” splash screen will also be dropped.

- The controversial Runtime Fee will only take effect with the next LTS version (presumably the 2023 LTS, due in 2024). You will not be charged the Runtime Fee unless you upgrade to that LTS version.

- For games that are subject to the Runtime Fee, Unity will charge whichever is lower: a 2.5% revenue share or the Runtime Fee amount. Both figures are self-reported by the developers.
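To make the “pay whichever is lower” rule concrete, here is a minimal Python sketch. The function name and the sample figures are hypothetical, and the Runtime Fee amount is simply passed in because how Unity calculates it is outside the scope of this item. It illustrates only the comparison described above, not Unity’s billing system.

```python
# Minimal sketch of the "pay whichever is lower" rule from Unity's letter.
# Both inputs are self-reported figures; the Runtime Fee amount is passed in
# directly because its calculation is not covered here (assumption).

REVENUE_SHARE_RATE = 0.025  # the 2.5% revenue-share option


def runtime_fee_due(gross_revenue: float, runtime_fee_amount: float) -> float:
    """Return the lower of a 2.5% revenue share and the Runtime Fee amount."""
    if gross_revenue < 0 or runtime_fee_amount < 0:
        raise ValueError("self-reported figures must be non-negative")
    revenue_share = gross_revenue * REVENUE_SHARE_RATE
    return min(revenue_share, runtime_fee_amount)


if __name__ == "__main__":
    # Hypothetical figures for one billing period.
    print(runtime_fee_due(100_000, 4_000))  # the 2.5% share (2500.0) is lower
    print(runtime_fee_due(100_000, 1_500))  # the Runtime Fee (1500) is lower
```

Because both numbers come from the developer, the rule acts as a ceiling: the amount owed never exceeds 2.5% of reported revenue.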

Either way, this is a positive signal. Dilmer Valecillos shared his view in a tweet:

https://x.com/Dilmerv/status/1705283411253002277

To see more of the community’s reaction to Unity’s new measures, check out the replies to Unity’s official tweet:

https://x.com/unity/status/1705270545002983657

The updates brought by XRI 2.4.0 and 2.5.0

Keywords: XRI, Unity

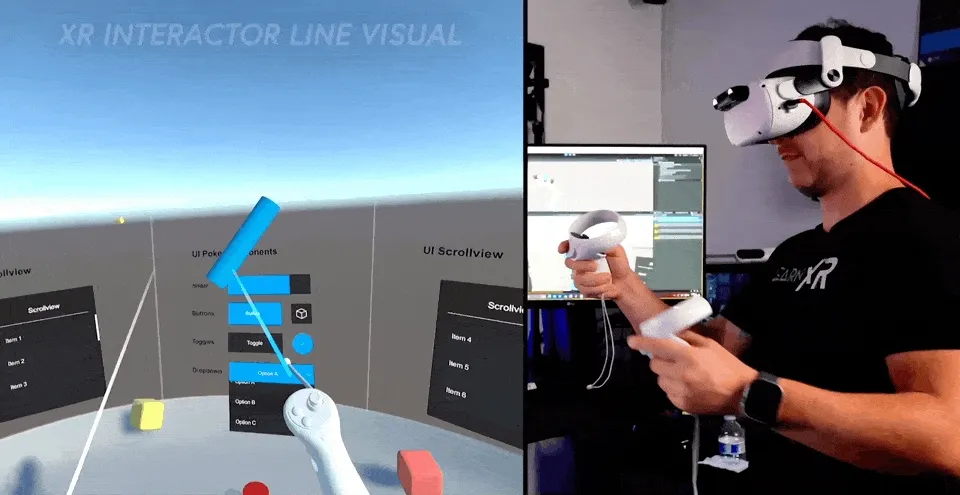

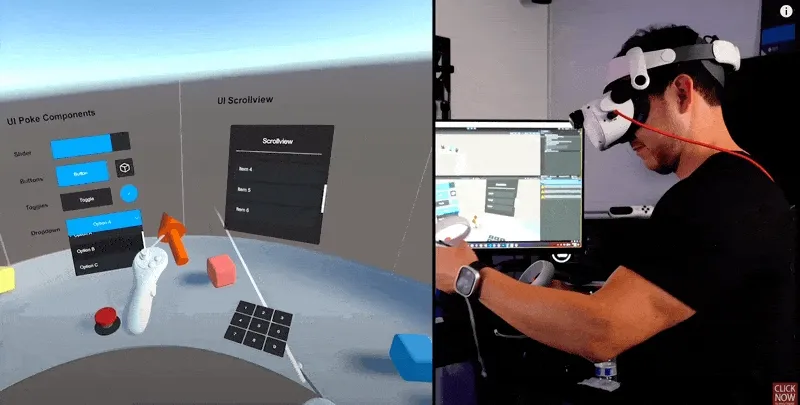

Two months ago, in Newsletter #002, we covered the XRI 2.3.0 update. Since then, XRI 2.4.0 and 2.5.0 have been released. Here, using some material from Dilmer Valecillos’ video, we give you an overview of the highlights.

These two versions bring a series of updates; some of the more notable ones are:

- The XR Interactor Line Visual now draws the end of the line as a curve by default, which looks more natural.

- The new XR Input Modality Manager switches between controllers and hand tracking: it monitors the tracking status of both to decide which input method to use.

- The new Climb Locomotion Provider helps developers let players climb on wall surfaces.
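The switching behavior of the XR Input Modality Manager can be pictured as a small per-hand decision. The Python sketch below is hypothetical and is not XRI’s C# implementation; in particular, the “controller first, then hand” priority and the shape of the tracking data are our assumptions.

```python
from enum import Enum


class InputMode(Enum):
    NONE = "none"
    CONTROLLER = "controller"
    HAND = "hand"


def select_mode(controller_tracked: bool, hand_tracked: bool) -> InputMode:
    """Choose the input mode for one side (assumed priority: controller, then hand)."""
    if controller_tracked:
        return InputMode.CONTROLLER
    if hand_tracked:
        return InputMode.HAND
    return InputMode.NONE


def update_modes(tracking: dict) -> dict:
    """Re-evaluate each side independently.

    `tracking` maps "left"/"right" to a (controller_tracked, hand_tracked) pair,
    a hypothetical stand-in for the tracking state the manager observes.
    """
    return {side: select_mode(*state) for side, state in tracking.items()}


if __name__ == "__main__":
    # The player puts down the left controller but keeps holding the right one.
    print(update_modes({"left": (False, True), "right": (True, False)}))
```

In an actual app, the chosen mode for each side would then enable the matching controller or hand visuals and interactors.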

Version 2.4.0 focuses on interaction, including gaze and hand interaction, while 2.5.0 mainly updates AR-related capabilities. For the details, install XRI 2.5.0 directly and explore the specific API changes in the samples that ship with XRI.

Xcode 15.1 beta && visionOS 1.0 beta4 version has been released

Keywords: Xcode, visionOS, Xcodes, Simulator

After nearly a month of waiting, Apple has finally released a new visionOS SDK: visionOS 1.0 beta 4, which ships together with Xcode 15.1 beta.

Tips

We highly recommend using the Xcodes app to manage all your Xcode beta versions.

And of course, whenever a new version comes out, read the Release Notes carefully. Nobody wants to spend an afternoon questioning their life over a bug Apple has already documented 🤨.

For example, these Release Notes kindly point out that an app whose initial scene is a volumetric window may not open in the correct position unless it is launched from the Home Screen:

Apps that use a volumetric style window as the initial scene do not launch at the correct position unless launched from the Home Screen. (116022772)

Workaround: Launch the app from the Home Screen. Alternatively, long press Digital Crown to re-center the app.

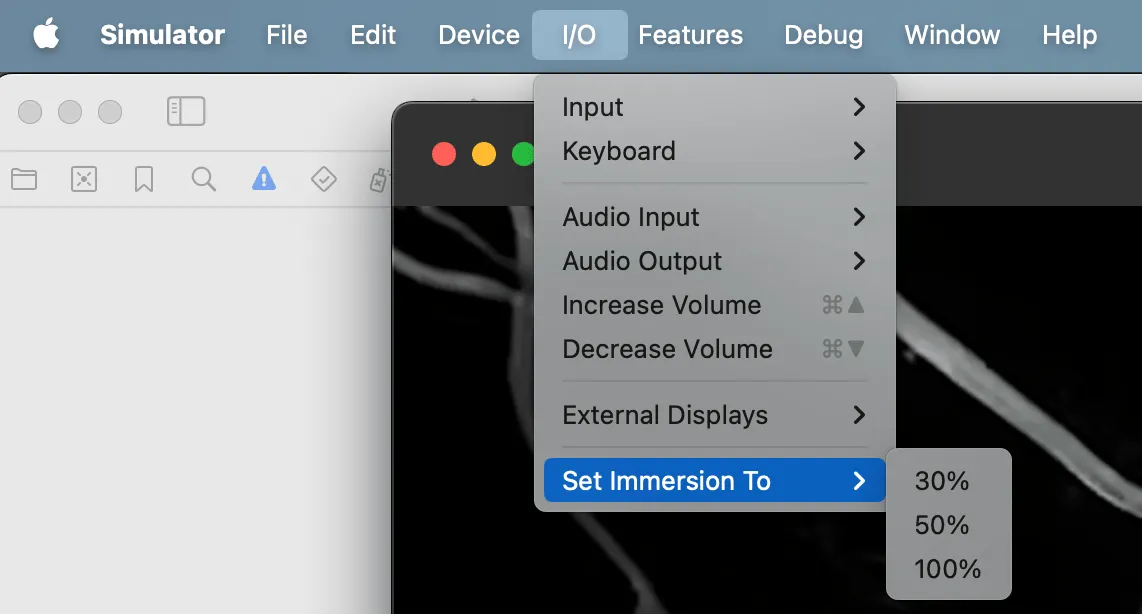

This update also adds some small simulator features. For example, you can now use I/O -> Set Immersion To to simulate adjusting the immersion level with the Digital Crown.

Idea

Where can I find the UI materials for practicing visionOS?

Keywords: visionOS, Dribbble

Front-end programmers are often teased as “UI boys”, but objectively speaking, building interfaces and designing UI elements is an essential part of front-end work.

With visionOS on the way, how can developers without a talent for design sharpen their skills?

After all, nobody wants to build an ugly interface, so let me share my learning approach!

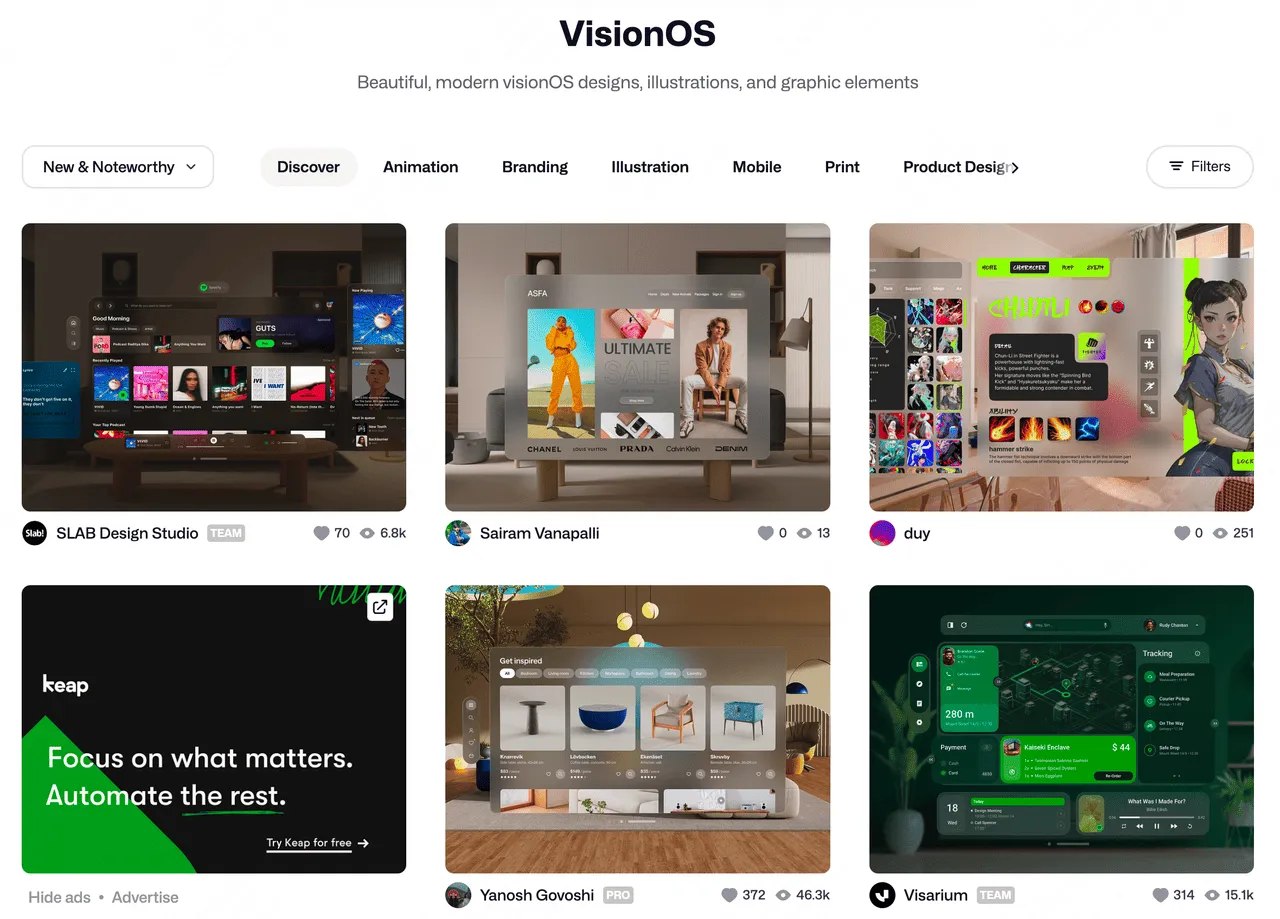

I usually search Dribbble for the keyword visionOS, which returns results like those in the screenshot.

Just like that, you have plenty of beautifully designed visionOS interfaces to learn from, and best of all, they cost nothing to browse!

Next, rebuild these interfaces in SwiftUI. Along the way, you will gradually get familiar with SwiftUI’s APIs and their quirks. For example, I have recently been recreating Oleg Frolov’s visionOS app design, which involves rotating objects in 3D space. Honestly, I had never fully understood these APIs, so in my first attempts the rotation was always a bit “unstable”.

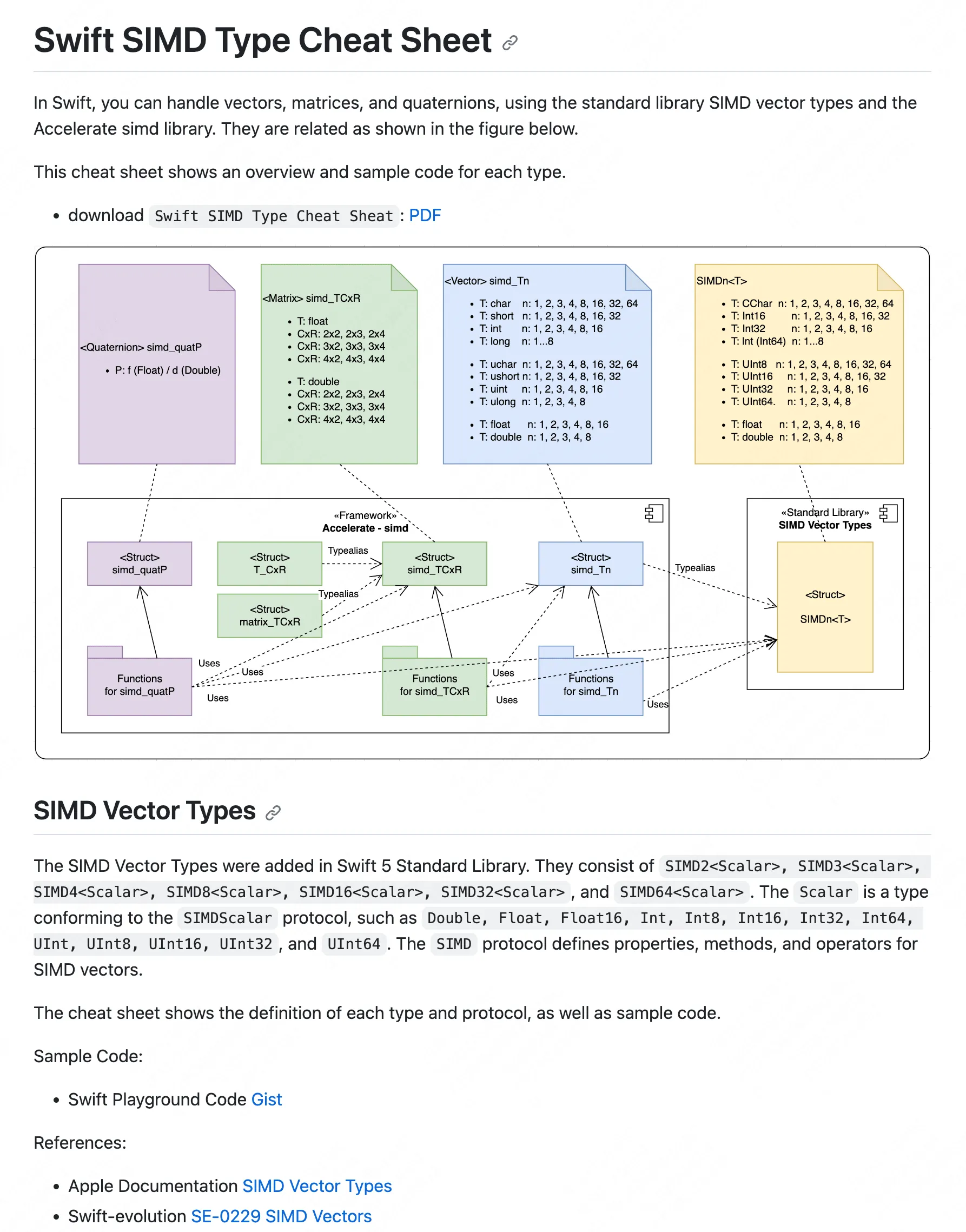

After some research, and with help from Yasuhito Nagatomo and his SIMD cheat sheet, I finally climbed out of that hole. If SIMD also intimidates you, give the project a star; it should save you a lot of time down the road.
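We can’t say exactly what made the rotation feel “unstable”, but a frequent culprit in 3D code is composing rotations in the wrong order. The minimal Python sketch below (our illustration, not code from the project) uses unit quaternions, the same representation as SIMD’s `simd_quatf`, and shows that swapping the order of two rotations changes the result.

```python
import math


def quat_from_axis_angle(axis, angle_rad):
    """Unit quaternion (w, x, y, z) rotating by angle_rad around axis."""
    x, y, z = axis
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0:
        raise ValueError("axis must be non-zero")
    s = math.sin(angle_rad / 2) / norm
    return (math.cos(angle_rad / 2), x * s, y * s, z * s)


def quat_mul(a, b):
    """Hamilton product a * b; as a rotation, b is applied first, then a."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate(q, v):
    """Rotate 3D vector v by unit quaternion q using q * (0, v) * conjugate(q)."""
    w, x, y, z = q
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return (rx, ry, rz)


if __name__ == "__main__":
    qx = quat_from_axis_angle((1, 0, 0), math.pi / 2)  # 90 degrees around X
    qz = quat_from_axis_angle((0, 0, 1), math.pi / 2)  # 90 degrees around Z
    up = (0.0, 1.0, 0.0)
    # Same two rotations, different order, different result.
    print([round(c, 6) for c in rotate(quat_mul(qz, qx), up)])  # X first, then Z
    print([round(c, 6) for c in rotate(quat_mul(qx, qz), up)])  # Z first, then X
```

In Swift, `simd_quatf(angle:axis:)` builds the same kind of quaternion, and multiplying two of them should follow the same “right-hand operand applies first” rule, so checking the multiplication order is a cheap first step when a rotation misbehaves.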

To sum up, the idea is simple: use the polished designs on Dribbble as targeted visionOS exercises. If design is not your strength, this lets you focus on implementation and on faithfully reproducing the effect (including details most people never notice), while ending up with an interface that is pleasing to the eye. It’s a win-win!

What are you waiting for? Come and practice with me!

What would it be like to use ChatGPT on Apple Vision Pro?

Keywords: visionOS, Apple Vision Pro, ChatGPT, Spatial Computing

In Newsletter #008, we excerpted some intriguing thoughts from Wenbo. This time, Wenbo proposes a way of using ChatGPT on Apple Vision Pro that lets the AI assist you like a companion at your side.

If the desktop arrangement of Apple Vision Pro is like this …

Keywords: AR, Apple Vision Pro, SnapAR, LensStudio, UI

SnapAR filter creator Inna Sparrow (Inna Horobchuk) shared on Twitter an alternative layout for the Apple Vision Pro home screen, built as a Snap AR filter in Lens Studio (combined with tools such as C4D and After Effects). She also showed it running on Spectacles, Snap’s AR glasses (available only to creators), and the filter can be previewed on a phone through Snapchat.

If Apple Vision Pro’s current home screen resembles the full-screen layout of iOS (filling the view from top to bottom), Inna’s design clearly takes its cue from the Mac’s Dock, placing the icons of major apps as 3D models along the bottom of the view.

visionOS’s 2.5D icons and full-screen app layout may better match the “familiarity” Apple says people need when moving to a new platform, and may also avoid the information overload and visual fatigue that a full screen of 3D icons could cause. Still, Inna’s design opens up another possibility. For example, it could inspire faster ways to launch apps on Apple Vision Pro (think about how many apps you open directly versus how many you find through iOS’ built-in search).

What makes this case interesting is that it shows how much more efficient communication becomes once you have a demo (imagine describing this idea in words alone), and that any handy tool will do for a quick prototype. Inna also revealed that the demo grew out of a small model she made casually back in 2020. Demos made long ago can come in handy later~

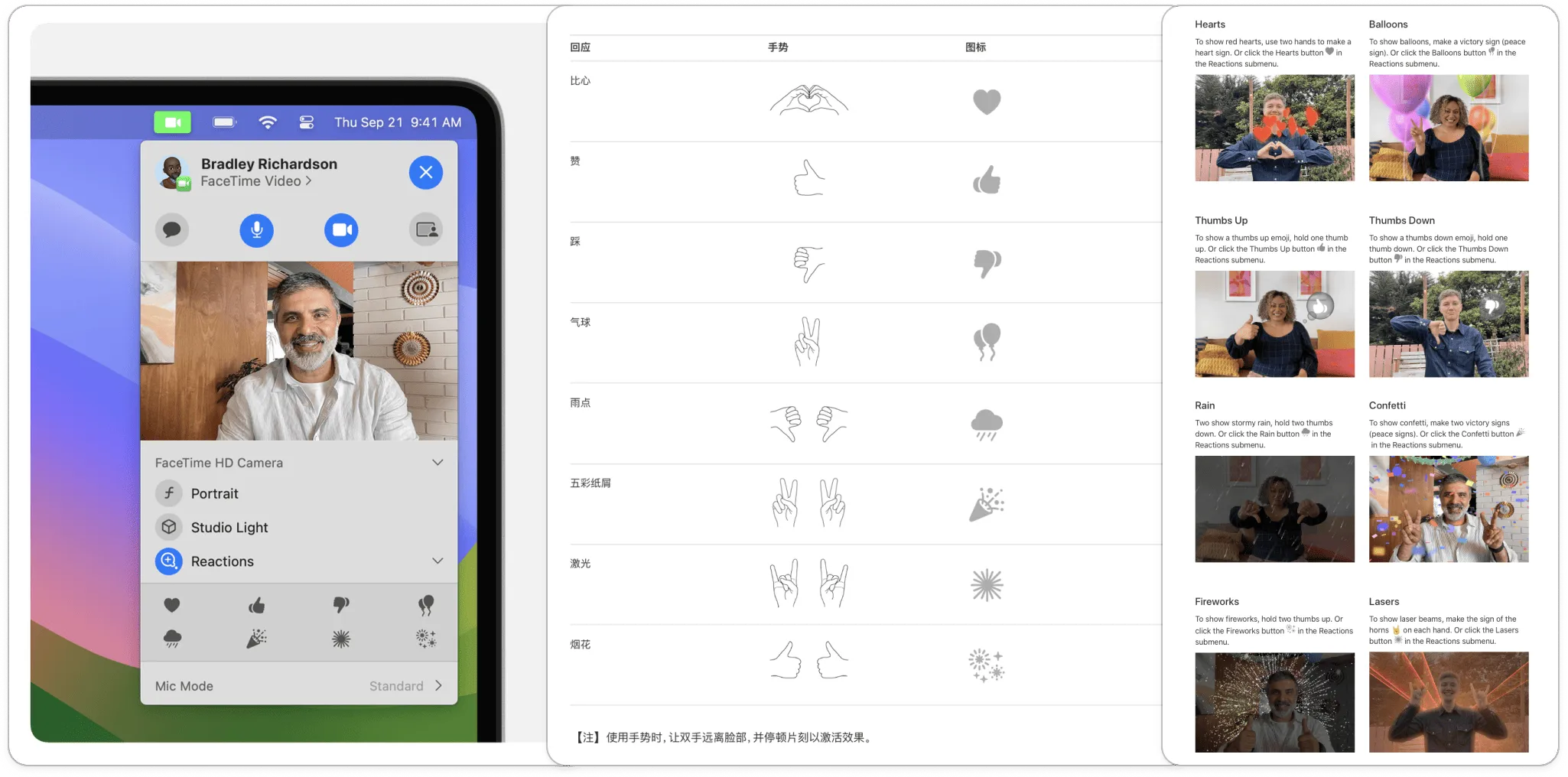

Facetime updated the AR effect of gesture recognition.

Keywords: AR, Apple, Facetime

Recently, X user Alexson Chu (also a SnapAR filter creator) noted that after updating to iOS 17 he discovered a hidden gem from Apple: when opening Snapchat (before applying any filter) or making a FaceTime call, hand gestures can trigger native AR effects.

Apple calls this feature “Reactions”. It is supported on Mac with macOS Sonoma or later and on iPhone with iOS 17 or later, and it also works in third-party apps such as Snapchat (which explains why the poster, a filter creator, was confused). During a FaceTime video call on an iPhone running iOS 17 or a Mac running macOS Sonoma, users can trigger on-screen effects such as hearts, balloons, confetti, and fireworks, each mapped to a specific gesture.

Using AI + AR, achieve seamless integration of virtual and real spaces.

Keywords: AR, AI, SnapAR, Spectacles, Instagram, Minecraft

As mentioned earlier, Meta recently released Quest 3, its first mainstream mixed reality headset, alongside new AI services. Coincidentally, 3D artist Enuriru (Denis Rossiev) also shared on X a set of reality-extending filters that combine AI and AR.

For example, his whimsical “Food Spirit” AR filter (a Snap AR preview is available) uses AI image recognition and transformation to turn everyday items like coffee and cookies into companions for your work… cookie spirits?

https://twitter.com/Enuriru/status/1582351912803790848

Another example is Snap’s creator-only AR glasses, Spectacles, which combine AI and AR to summon virtual pets and display AR text in real time.

https://twitter.com/Spectacles/status/1673327268532215812

All of these point to more interesting ways of combining AI/machine learning with AR. We hope they inspire you to build more interesting products 😉

Perhaps the strangest name AR music App (one of them)?

Keywords: AR, Aphex Twin, music

In Newsletter #005, we covered the special AR effects that the (now disbanded) electronic music duo Daft Punk created with Snapchat for a commemorative album release. Clearly, this won’t be the last time musicians use XR technology to present their music.

Recently, British electronic musician Aphex Twin teamed up with KALKUL, a Tokyo-based spatial app studio, and visual artist Weirdcore to create a companion AR app called YXBoZXh0d2lu for his latest release, “Blackbox Life Recorder 21f / in a room7 F760”. The strange-looking name is simply “aphextwin” encoded in Base64, and many of the visuals in both the music video and the app were generated with AI.
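You can check the app name’s meaning yourself with Python’s standard `base64` module; this tiny snippet just decodes and re-encodes the string.

```python
import base64

APP_NAME = "YXBoZXh0d2lu"

# Decoding the app name recovers the artist's name (lowercase, no space).
decoded = base64.b64decode(APP_NAME).decode("ascii")
print(decoded)  # aphextwin

# Encoding goes the other way: 9 ASCII bytes become 12 Base64 characters, no padding.
assert base64.b64encode(b"aphextwin").decode("ascii") == APP_NAME
```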

Before downloading the app, you will need the physical album: scanning its cover, labels, and other parts with the app unlocks a unique 3D experience. Open the stamp booklet that comes with the album and fold it out into a box; after you scan it, four floating boxes appear that you can interact with to reveal more visual effects.

You can download the application from the Apple App Store and Google Play Store.

Video

The Principle of Spatial Video for Pre-Exposing iPhone 15 Pro

Keywords: iPhone 15 Pro, iPhone 15 Pro Max, Spatial Video

https://www.youtube.com/watch?v=s-Dwm9e89mE&t=27s

In this video, AK from Digital Pill Technology walks through three approaches he believes Apple might use to capture spatial video on the iPhone 15 Pro/Pro Max:

- Use the main camera and the ultra-wide camera for the left-eye and right-eye images, respectively. This is straightforward, but because the ultra-wide lens has a different focal length from the main camera, its image needs some post-processing.

- Use the main camera to capture the foreground and the ultra-wide camera to capture the background. This yields high resolution, but visible “seams” may appear where foreground and background meet, making the result look stitched.

- Like approach 2, but use LiDAR to segment the foreground more accurately, so the seams are less noticeable than in approach 2. Complex foregrounds may still produce significant blending artifacts.
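Part of the post-processing in approach 1 is matching the two fields of view. For an ideal rectilinear lens, the region of the wider image that covers the narrower view is a centered crop whose linear size is the ratio of the two 35mm-equivalent focal lengths. The sketch below assumes 24mm for the main camera, 13mm for the ultra-wide, and a hypothetical 4000×3000 frame; it ignores lens distortion, rectification, and color matching, which a real pipeline would also need.

```python
# Assumed 35mm-equivalent focal lengths (illustrative): 24mm main, 13mm ultra-wide.
MAIN_EQ_MM = 24.0
ULTRA_WIDE_EQ_MM = 13.0


def crop_fraction(wide_eq_mm: float, narrow_eq_mm: float) -> float:
    """Linear fraction of the wider image that covers the narrower field of view.

    For an ideal rectilinear lens on the same (equivalent) sensor, the half-width
    of the view is proportional to tan(half-FOV) = (sensor / 2) / focal length,
    so matching the two views reduces to the ratio of the focal lengths.
    """
    if not 0 < wide_eq_mm <= narrow_eq_mm:
        raise ValueError("expected 0 < wide focal length <= narrow focal length")
    return wide_eq_mm / narrow_eq_mm


def center_crop_box(width: int, height: int, fraction: float):
    """Return (left, top, crop_width, crop_height) for a centered crop."""
    crop_w = round(width * fraction)
    crop_h = round(height * fraction)
    return ((width - crop_w) // 2, (height - crop_h) // 2, crop_w, crop_h)


if __name__ == "__main__":
    frac = crop_fraction(ULTRA_WIDE_EQ_MM, MAIN_EQ_MM)
    # Hypothetical 4000x3000 ultra-wide frame; the crop is then upscaled by 1 / frac.
    print(round(frac, 3), center_crop_box(4000, 3000, frac))
```

Under these assumptions only about 29% of the ultra-wide pixels (0.54 squared) survive the crop before being upscaled, which hints at why this eye’s image needs extra processing.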

AK also shared his views on the limitations of shooting spatial video with an iPhone:

- Limited recording time: the heavy processing consumes a lot of power, so recordings may be limited to a minute or two.

- Scale distortion: compared with footage from Apple Vision Pro, objects will look larger, because the phone’s two cameras are closer together than a person’s pupils. As a result, nearby things look especially large, much as the world might look to a small animal.
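AK’s second point matches a common rule of thumb for stereo capture: angular disparity is roughly baseline / distance, so when the camera baseline B is smaller than the viewer’s interpupillary distance (IPD), a point at distance Z produces the disparity the viewer’s own eyes would get from a point at Z × IPD / B. The scene reads as farther away and, at the same angular size, larger. A rough Python sketch, assuming a typical IPD of about 63 mm and a purely hypothetical 20 mm camera baseline (not a measured iPhone value):

```python
AVERAGE_IPD_MM = 63.0            # typical adult interpupillary distance (approximate)
HYPOTHETICAL_BASELINE_MM = 20.0  # illustrative only, not a measured iPhone value


def apparent_scale(ipd_mm: float, baseline_mm: float) -> float:
    """Rule-of-thumb scale factor when stereo shot with baseline_mm is viewed with ipd_mm."""
    if ipd_mm <= 0 or baseline_mm <= 0:
        raise ValueError("distances must be positive")
    return ipd_mm / baseline_mm


def perceived_distance(true_distance_m: float, ipd_mm: float, baseline_mm: float) -> float:
    """Distance at which the viewer's own eyes would see the captured disparity.

    Disparity is roughly baseline / distance, so matching it with the viewer's
    IPD gives true_distance * ipd / baseline.
    """
    return true_distance_m * apparent_scale(ipd_mm, baseline_mm)


if __name__ == "__main__":
    scale = apparent_scale(AVERAGE_IPD_MM, HYPOTHETICAL_BASELINE_MM)
    near = perceived_distance(0.5, AVERAGE_IPD_MM, HYPOTHETICAL_BASELINE_MM)
    print(f"scene appears ~{scale:.2f}x larger; a cup 0.5 m away reads as ~{near:.2f} m away")
```

Real perception also depends on display field of view and other depth cues, so treat this as an intuition aid rather than a prediction.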

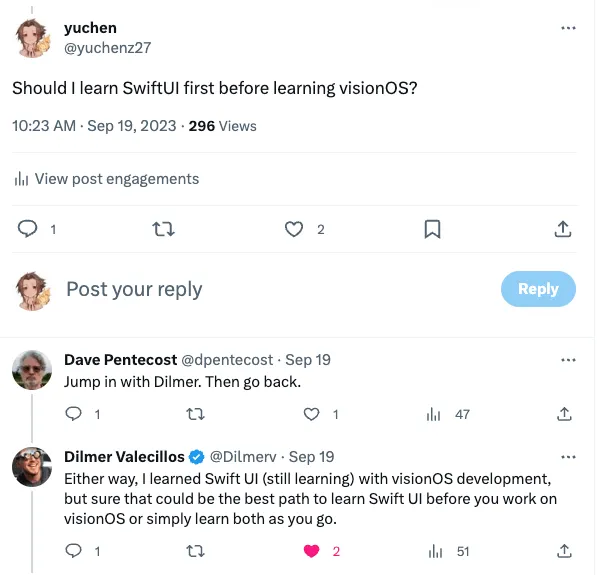

Dilmer Valecillos Published Apple Native visionOS Tutorial

Keywords: visionOS, SwiftUI, RealityKit, Xcode, Reality Composer Pro

This tutorial aims to demonstrate how to build a visionOS application from scratch using Apple’s native tools. The tutorial covers SwiftUI, RealityKit, Xcode, and Reality Composer Pro.

Dilmer Valecillos (DV), one of the top YouTubers in XR education, says that although he built native Apple apps in Objective-C many years ago, he has focused on Unity recently and was somewhat unfamiliar with Swift and the latest Xcode. To make this video, he spent several weeks studying Swift and getting reacquainted with Xcode’s development environment.

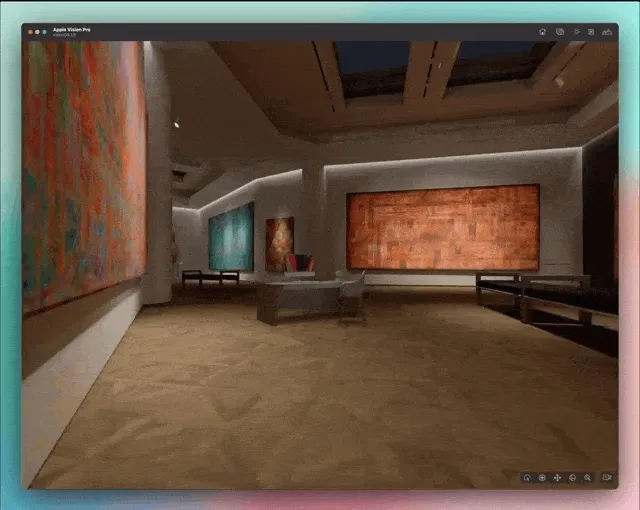

In the tutorial, DV builds a visionOS app from scratch that showcases SpaceX’s Inspiration4 mission. It covers building a 2D window in 3D space with SwiftUI, displaying a 3D rocket model in a visionOS volumetric window, and entering Full Immersive mode. DV chose Inspiration4 because he is a loyal SpaceX fan. The project’s source code can be downloaded from this GitHub repository.

The content is fairly basic, but if you want to get into visionOS development and don’t know where to start, it is worth a look. After creating the project, DV deletes all the code Xcode generated and builds everything from scratch.

Since native visionOS development builds on SwiftUI, a natural question is: “Do I need to learn SwiftUI before learning visionOS?” DV’s advice is that getting familiar with SwiftUI first may be the best path, although he himself is learning both at the same time. So if you want a solid foundation for future native Apple development, start with SwiftUI; if you want to build visionOS content right away, learning the two together also works.

Article

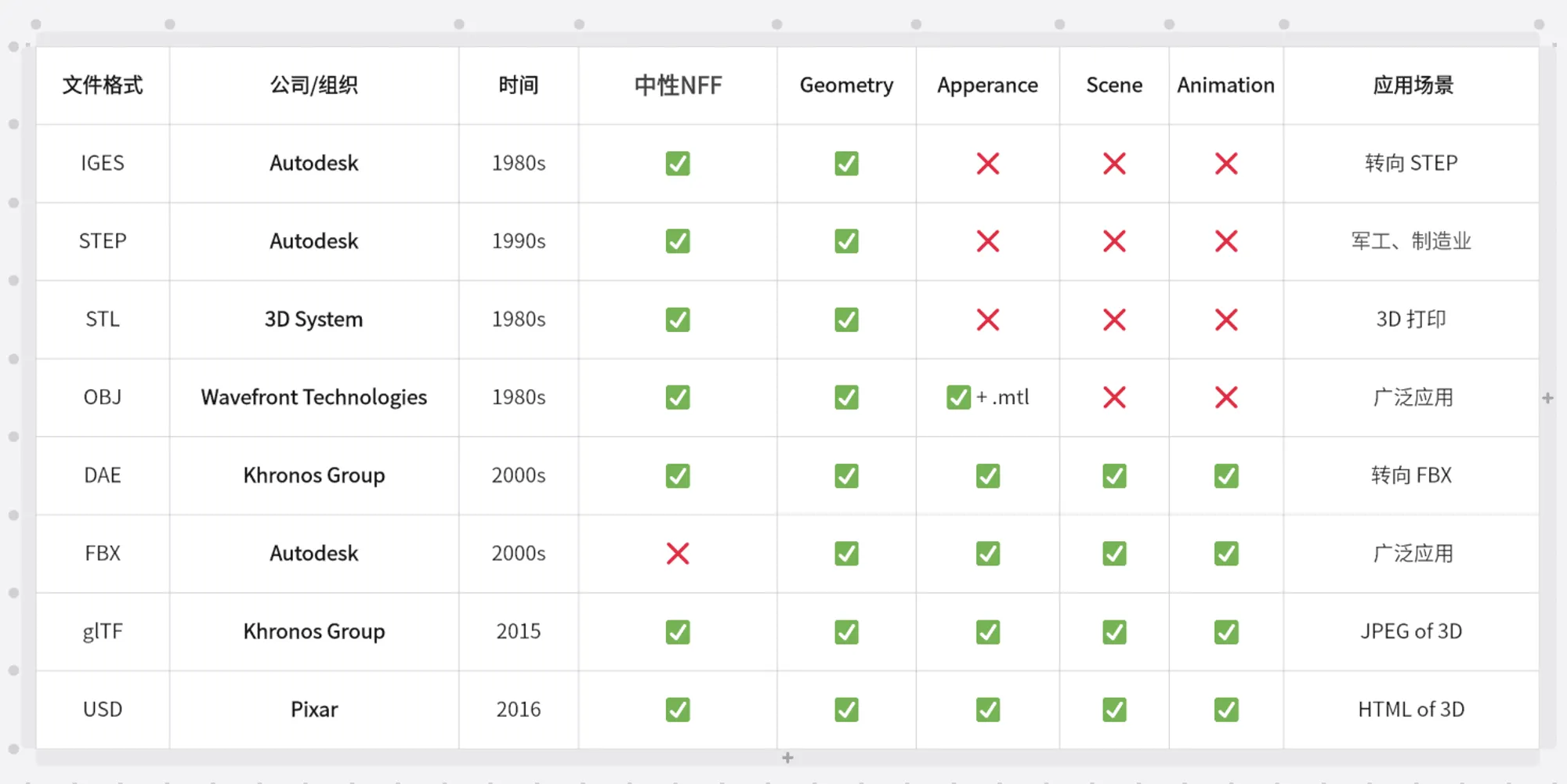

The Battle of 3D File Formats

Keywords: 3D, glTF, USDZ

This article recounts the rivalry among common 3D file formats. Starting from how 3D formats are classified, it covers early geometry-only formats (IGES, STEP, STL), formats with texture mapping (OBJ), formats supporting scenes and animation (DAE, FBX), and finally today’s competition between the two major open formats, glTF and USD.

glTF is backed by the Khronos Group, while USD is backed by the Alliance for OpenUSD (AOUSD). Both are open-standards consortia, and fierce competition between them in the AR/VR era looks likely.

glTF, created by the Khronos Group, is lighter and better suited to web distribution; its binary form is GLB. Khronos positions it as “the JPEG of 3D”. A rich web ecosystem has grown up around glTF, with members such as Microsoft, Google, and Meta.
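To make the GLB container concrete: a GLB file starts with a 12-byte little-endian header (the magic `glTF`, version 2, and total length), followed by chunks, the first of which holds the glTF JSON. This Python sketch (function names are ours) builds and parses a minimal JSON-only GLB and skips the optional binary chunk.

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # b"glTF" read as a little-endian uint32
CHUNK_JSON = 0x4E4F534A  # b"JSON" read as a little-endian uint32


def make_glb(document: dict) -> bytes:
    """Build a minimal JSON-only GLB (no binary chunk)."""
    payload = json.dumps(document).encode("utf-8")
    payload += b" " * (-len(payload) % 4)  # JSON chunk is padded with spaces to 4 bytes
    total_length = 12 + 8 + len(payload)   # header + chunk header + chunk data
    header = struct.pack("<III", GLB_MAGIC, 2, total_length)
    chunk_header = struct.pack("<II", len(payload), CHUNK_JSON)
    return header + chunk_header + payload


def read_glb_json(data: bytes) -> dict:
    """Validate the 12-byte GLB header and return the first (JSON) chunk."""
    if len(data) < 20:
        raise ValueError("too short to be a GLB file")
    magic, version, length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("missing glTF magic")
    if version != 2:
        raise ValueError(f"unsupported GLB version {version}")
    if length != len(data):
        raise ValueError("header length does not match data size")
    chunk_length, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != CHUNK_JSON:
        raise ValueError("first chunk must be JSON")
    return json.loads(data[20:20 + chunk_length].decode("utf-8"))


if __name__ == "__main__":
    glb = make_glb({"asset": {"version": "2.0"}})
    print(glb[:4], len(glb), read_glb_json(glb))
```

Because everything lives in one file with a fixed, tiny header, a GLB can be fetched and parsed in a single request, which is part of why glTF suits web distribution.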

USD (Universal Scene Description) was originally developed by Pixar and released as open source, mainly for 3D rendering and animation. At WWDC 2018, Apple announced that it had worked with Pixar on a new file format for AR called USDZ (alongside ARKit 2). USDZ is essentially an uncompressed zip package of USD files. Since then, companies such as NVIDIA and Adobe have adopted USDZ in their own products and collaborated on its development.
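USDZ’s “zero-compression” packaging is easy to inspect with Python’s `zipfile`. Besides requiring stored (uncompressed) entries, the USDZ specification asks for each file’s data to start on a 64-byte boundary and for the first file to be a USD layer. This sketch checks only those three rules; the function name is ours, and Pixar’s `usdchecker` tool is the proper validator.

```python
import io
import struct
import zipfile

USD_LAYER_EXTENSIONS = (".usd", ".usda", ".usdc")
LOCAL_HEADER_SIZE = 30  # fixed part of a zip local file header


def check_usdz_packaging(data: bytes) -> list:
    """Return problems found; an empty list means these basic USDZ rules hold."""
    problems = []
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        entries = archive.infolist()
        if not entries:
            return ["archive is empty"]
        if not entries[0].filename.lower().endswith(USD_LAYER_EXTENSIONS):
            problems.append("first entry is not a USD layer")
        for info in entries:
            if info.compress_type != zipfile.ZIP_STORED:
                problems.append(f"{info.filename}: compressed entry")
            # File-name and extra-field lengths sit at offsets 26 and 28 of the local header.
            name_len, extra_len = struct.unpack_from("<HH", data, info.header_offset + 26)
            data_start = info.header_offset + LOCAL_HEADER_SIZE + name_len + extra_len
            if data_start % 64:
                problems.append(f"{info.filename}: data not 64-byte aligned")
    return problems


if __name__ == "__main__":
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("scene.usda", "#usda 1.0\n")
    print(check_usdz_packaging(buffer.getvalue()))
```

A plain zip written this way fails both the compression and alignment checks, which is why USD ships dedicated packaging tools rather than relying on a generic zip utility.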

Awesome OpenUSD: Comprehensive Unofficial Documentation about USD

Keywords: USDZ, Hydra, OpenUSD, Awesome List

This is a list of OpenUSD resources maintained by Matias Codesal, the Developer Relations Manager at NVIDIA. It can be used as a supplement to the official OpenUSD documentation. It covers the following topics:

- What OpenUSD is, explained for both technical and non-technical audiences.

- USD-related libraries and tools: frameworks, converters, text editor plugins, and Hydra renderers. Converters such as guc were also covered in Newsletter #007.

- Sample assets, mainly USD sample asset collections from well-known organizations, such as Pixar Sample Assets and NVIDIA Sample Assets.

- Courses and reference material, including technical and non-technical books, courses, and tutorials.

AR/VR Guide

Keywords: AR, VR, XR, Unreal, Unity, Oculus, SteamVR, LiDAR

Maintained by mikeroyal, the Guide series covers many aspects of AR/VR and is divided into three major categories:

- Getting-started guides and development courses for AR/VR platforms.

- Specific AR/VR products and their accompanying software, such as Unreal, Unity, PlayStation VR, Quest, Apple Vision Pro, HTC Vive, SteamVR, and Nreal.

- Foundational knowledge and techniques related to Vulkan, Metal, DirectX, computer vision, LiDAR, linear algebra, and machine learning.

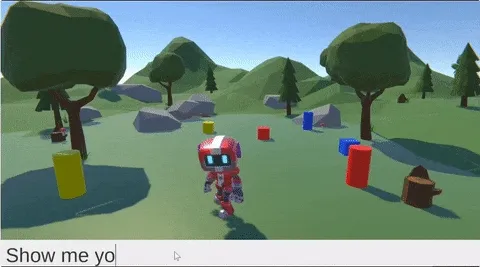

Tutorial: Create an AI Robot NPC using Hugging Face Transformers and Unity Sentis

Keywords: AI, Unity, Sentis

In Newsletter #005, we introduced Sentis, Unity’s new AI plugin. Its key feature is running user-provided neural network models locally on all Unity-supported devices, avoiding the latency and API costs of AI features that depend on cloud servers. Developers only need to import a neural network model in ONNX format into Unity to run it locally.

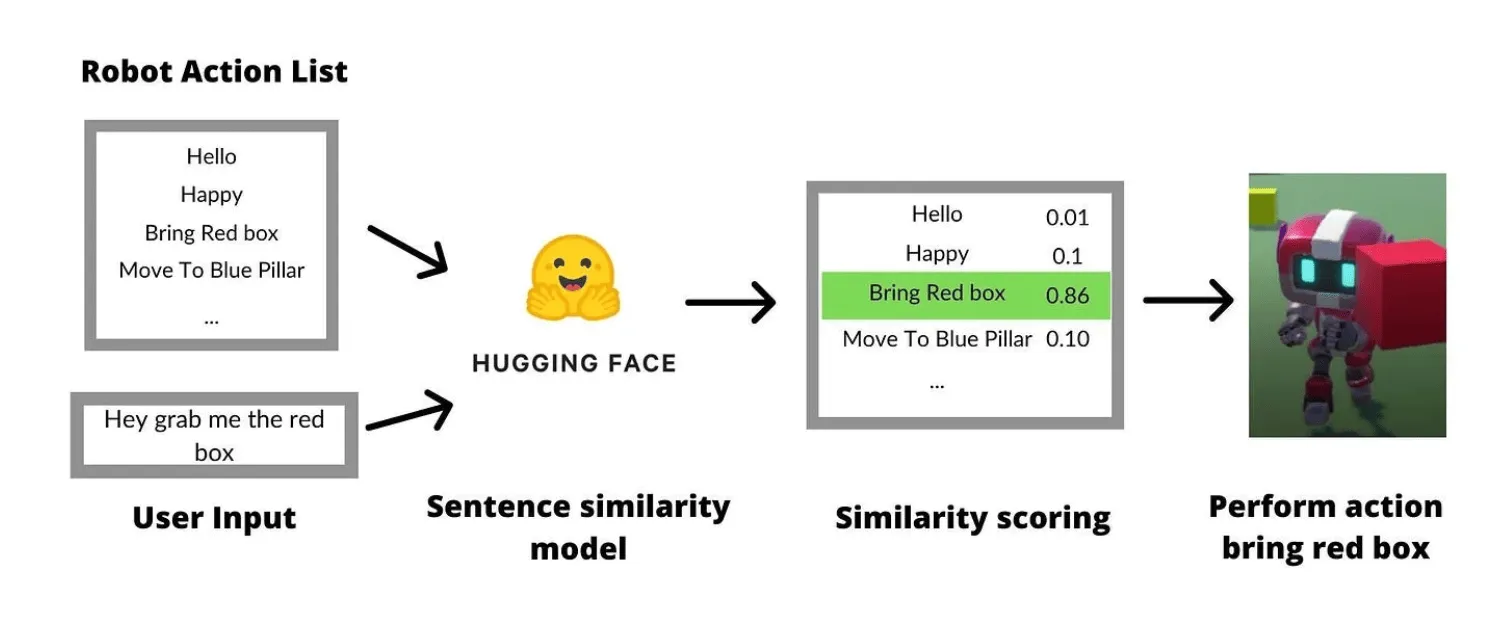

This tutorial lets the player control a robot’s behavior through text input. Instead of issuing instructions with buttons or other fixed controls, the player can type anything, and the AI interprets it and performs the action most relevant to the input. The image above shows the result.

This relies on a concept called sentence similarity, which measures how close two sentences are in meaning. A list of actions for the AI is defined in advance; when the player enters a text command, the AI scores the input against every action in the list and executes the one with the highest similarity.

Notably, when the command has low similarity to every action in the list, the robot plays a confused animation to let the player know it did not understand.
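The selection logic fits in a few lines of Python. The tutorial scores similarity with a Hugging Face sentence-similarity model running in Sentis; here we swap in a much cruder stand-in (word-count vectors compared by cosine similarity) so the example runs without any model. The action list and the 0.3 threshold are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical action list; the tutorial defines its own actions and animations.
ACTIONS = ["wave hello", "jump up", "sit down", "dance"]
CONFUSED = "confused"   # fallback animation when no action is close enough
THRESHOLD = 0.3         # hypothetical cut-off; a real model needs its own tuning


def embed(text: str) -> Counter:
    """Crude stand-in for a sentence-embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def choose_action(command: str, actions=ACTIONS, threshold=THRESHOLD) -> str:
    """Score the command against every action and return the best one, or CONFUSED."""
    query = embed(command)
    scores = {action: cosine_similarity(query, embed(action)) for action in actions}
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else CONFUSED


if __name__ == "__main__":
    for command in ["Please sit down", "Can you dance for me?", "Make me a sandwich"]:
        print(command, "->", choose_action(command))
```

A real embedding model would also match paraphrases such as “take a seat” to “sit down”, which word overlap cannot; the overall structure (score every action, take the best, fall back to a confused animation below a threshold) stays the same.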

Running AI models in Unity leaves a lot of room for imagination. This tutorial shows only a basic use of Sentis; combined with a speech recognition plugin, it could let players control game characters directly by voice. Perhaps NPCs that can hold any conversation with players will one day become the norm.

Code

SwiftSplash: New Official Demo of visionOS

Keywords: RealityKit, visionOS

Alongside the Xcode and visionOS SDK updates, Apple has released a new official sample, SwiftSplash.

It is a small building-block-style game: players arrange track pieces of various shapes so that a little fish slides into a pool.

Compared with earlier official samples, SwiftSplash uses more complex Reality Composer Pro scenes and combines several of them into the final experience.

SmallNews

Immersed has released more information about Visor and no longer offers the 2.5K version.

Keywords: Immersed, Visor

Immersed recently published more details about Visor’s design and hardware on its official hardware website. For example, it features 6DoF tracking, is 25% lighter than a smartphone, and works with Mac, PC, and Linux.

Visor has also started taking pre-orders. Because nearly everyone has been pre-ordering the 4K version, Immersed has cancelled pre-orders for the 2.5K version.

Lex and Zuck had a very realistic remote conversation using Quest Pro.

Keywords: Meta, Zuck, Lex, Quest Pro, Avatar

https://www.youtube.com/watch?v=EohIA7QPmmE

Lex Fridman (a very well-known podcast host) and Zuck held a remarkably realistic remote conversation using Meta Quest Pro. Impressive as it looks, the technology is not yet available to the rest of us, because creating such realistic avatars requires scanning participants’ faces with complex equipment.

Persona may allow you to edit glasses

Keywords: Apple Vision Pro, visionOS, Persona

X user M1 discovered that Apple Vision Pro may let users edit the glasses on their Persona.

https://twitter.com/m1astra/status/1708587875606892621

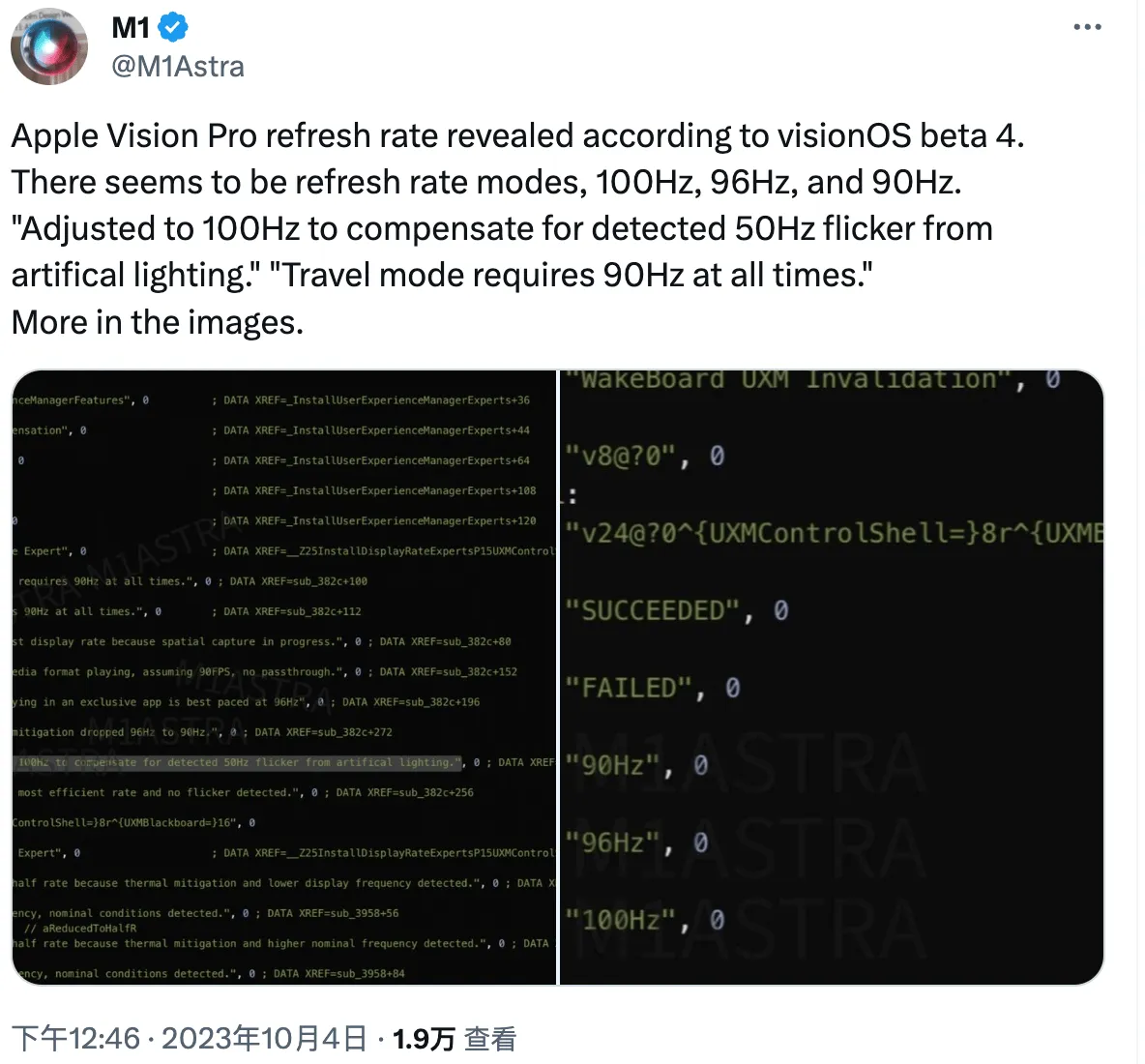

The refresh rate of Apple Vision Pro seems to have been confirmed?

Keywords: Apple Vision Pro, visionOS, Refresh Rate

Also spotted by M1: in the latest visionOS beta 4, Apple Vision Pro appears to support three refresh rates (90Hz, 96Hz, and 100Hz). The 100Hz mode seems intended for when the headset detects indoor artificial lighting on 50Hz mains power, since such lights flicker at 100Hz.
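Why would 100Hz help under 50Hz lighting? Lamps on 50Hz mains flicker at 100Hz, and in a simplified aliasing model a camera or display running at rate r sees that flicker as a beat at its distance from the nearest multiple of r. The Python sketch below is our illustration, not how visionOS actually decides; the 90Hz fallback is an assumption.

```python
CANDIDATE_RATES_HZ = (90, 96, 100)  # rates reportedly found in visionOS beta 4
DEFAULT_RATE_HZ = 90                # assumed fallback when no rate locks to the flicker


def flicker_hz(mains_hz: float) -> float:
    """Lamps on AC mains peak twice per cycle, so they flicker at twice the mains frequency."""
    return 2 * mains_hz


def beat_hz(flicker: float, refresh: float) -> float:
    """Simplified aliasing model: distance from the flicker to the nearest multiple of the refresh rate."""
    k = round(flicker / refresh)
    return abs(flicker - k * refresh)


def pick_refresh_rate(mains_hz: float, candidates=CANDIDATE_RATES_HZ, default=DEFAULT_RATE_HZ) -> float:
    """Prefer the highest candidate whose frames stay in phase with the lighting flicker."""
    flicker = flicker_hz(mains_hz)
    locked = [rate for rate in candidates if beat_hz(flicker, rate) < 1e-9]
    return max(locked) if locked else default


if __name__ == "__main__":
    for rate in CANDIDATE_RATES_HZ:
        print(f"{rate} Hz under 50 Hz mains: beat {beat_hz(flicker_hz(50), rate)} Hz")
    print("chosen:", pick_refresh_rate(50))
```

In this model, 90Hz under 50Hz lighting leaves a 10Hz beat (visible as pulsing brightness), while 100Hz stays in phase with the flicker.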

Afterword

Did Issue 009 feel much more substantial (with a richer variety of writing styles)? It should: whereas the editorial team used to collect and write everything on its own, many new contributors joined us for Issue 009, and together we completed this knowledge-packed “guided tour”. Starting with this issue, we will credit the contributors at the end of each issue.

Whether it’s high-quality information you found or polished content you wrote, you can contribute by submitting it as a GitHub issue. 😉

Contributors of this issue

- Puffinwalker.eth
- XanderXu
- Yuchen
- SketchK
- Onee


XReality.Zone
