XR World Weekly 031

Cover of This Issue

For this issue’s cover, we’re sharing NEXBOT by Max.

Opening Words

Hey, have you noticed that XRealityZone’s official website (https://xreality.zone/) has quietly changed recently?

That’s right: over the past two weeks, we’ve renovated XRealityZone’s official website to make it look more vibrant! The renovation also includes many small detail updates, such as smoother language switching that no longer jumps back to the homepage, and article recommendations at the bottom of each page.

Most importantly, we have officially launched email subscriptions. If you want to get new issues of XR World Weekly as soon as they come out, you’re welcome to subscribe by email in your preferred language.

Note that if you subscribe on the Chinese interface, you’ll receive emails in Chinese, and if you subscribe on the English interface, you’ll receive emails in English. Don’t mix them up!

Perhaps the XR world isn’t evolving as fast as we imagined and can’t keep up with our eager expectations, but like the gears of fate, it keeps turning slowly and steadily, and so does XR World Weekly💪. We might move slowly, and sometimes we might even stop to catch our breath, but we will keep moving forward. If you ask why, we would say: friend, do you believe in light?

No matter which issue you started reading this Newsletter from, we are very grateful for your continued support🙏. Sometimes we think that the Newsletter is like a small boat on the vast sea, carrying us to explore and move forward bit by bit, and you are that gentle sea breeze, our motivation.

It’s also because of you that we’re motivated to keep bringing you surprises. So here’s a little one for you; see the image below😉:

That’s right: in 2025, we can meet again at the Let’s visionOS conference to have fun together, play together, learn together, and be happy together.

Finally, if you like our content, please don’t forget to like👍 or share📤 it with your friends. We hope XR World Weekly will keep bringing everyone together, so that we can witness the day the fire of XR🔥 burns brightly.

Table of Contents

Recap

- What sparks will be ignited when PICO 4 Ultra meets spatial video?

News

- Apple TV has released two new Immersive Videos: Submerged and NBA All Star Weekend

- Unity 6 is officially released, and PolySpatial exits preview with version 2.0.4

- Meta has updated its developer documentation

Tool

- ShaderVision

Article

- Drawing Graphics on Apple Vision with the Metal Rendering API

- Configuring iOS Development Environment in Cursor/VSCode

Video

- Hands-On: Meta Orion Augmented Reality Glasses!

- Submerged: Technical Breakdown

Code

- Meta Horizon UI Set

- Depth Pro: Apple’s Official Open-Source Depth Detection Model

Recap

What sparks will be ignited when PICO 4 Ultra meets spatial video?

In this article, we introduce PICO 4 Ultra’s new spatial video support and how to use the related features. We’ve written quite a bit about spatial video so far; if you haven’t read those pieces yet, you can catch up with our previous articles:

- Advanced! Spatial Video Shooting Tips

- How to Play with the New Spatial Video on iOS 17.2

- What is Spatial Video on iPhone 15 Pro / Apple Vision Pro?

News

Apple TV has released two new Immersive Videos: Submerged and NBA All Star Weekend

Apple TV has released two new immersive videos, Submerged and NBA All Star Weekend. With this, all the immersive videos “to be released this fall” have been fully delivered😄.

If you’ve forgotten which immersive videos were slated for the second half of this year, you can look back at XR World Weekly Issue 022.

NBA All Star Weekend is, like the other immersive videos, a documentary-style short film. If you haven’t watched it yet, be careful not to bump your head when you dodge backwards around the 1:20 mark~

Submerged is more special by comparison: at under 20 minutes, it is the first immersive video with a plot. The story is simple, following a group of submariners trying to escape after their submarine is sunk during World War II. Clearly, the plot isn’t the focus of this short film; the immersion is. The cramped submarine interior and the dim underwater light let you fully experience what “immersive” means.

It’s worth mentioning that the film was directed by Edward Berger, who directed the Oscar-winning new version of “All Quiet on the Western Front” and has long been praised for his refined camera work. This immersive video is a new test of those skills, so pay extra attention to the cinematography when you watch it.

In addition, Apple has released an official trailer and behind-the-scenes footage on its YouTube channel to promote Submerged. In the Video section below, we also recommend a video that analyzes the production in depth.

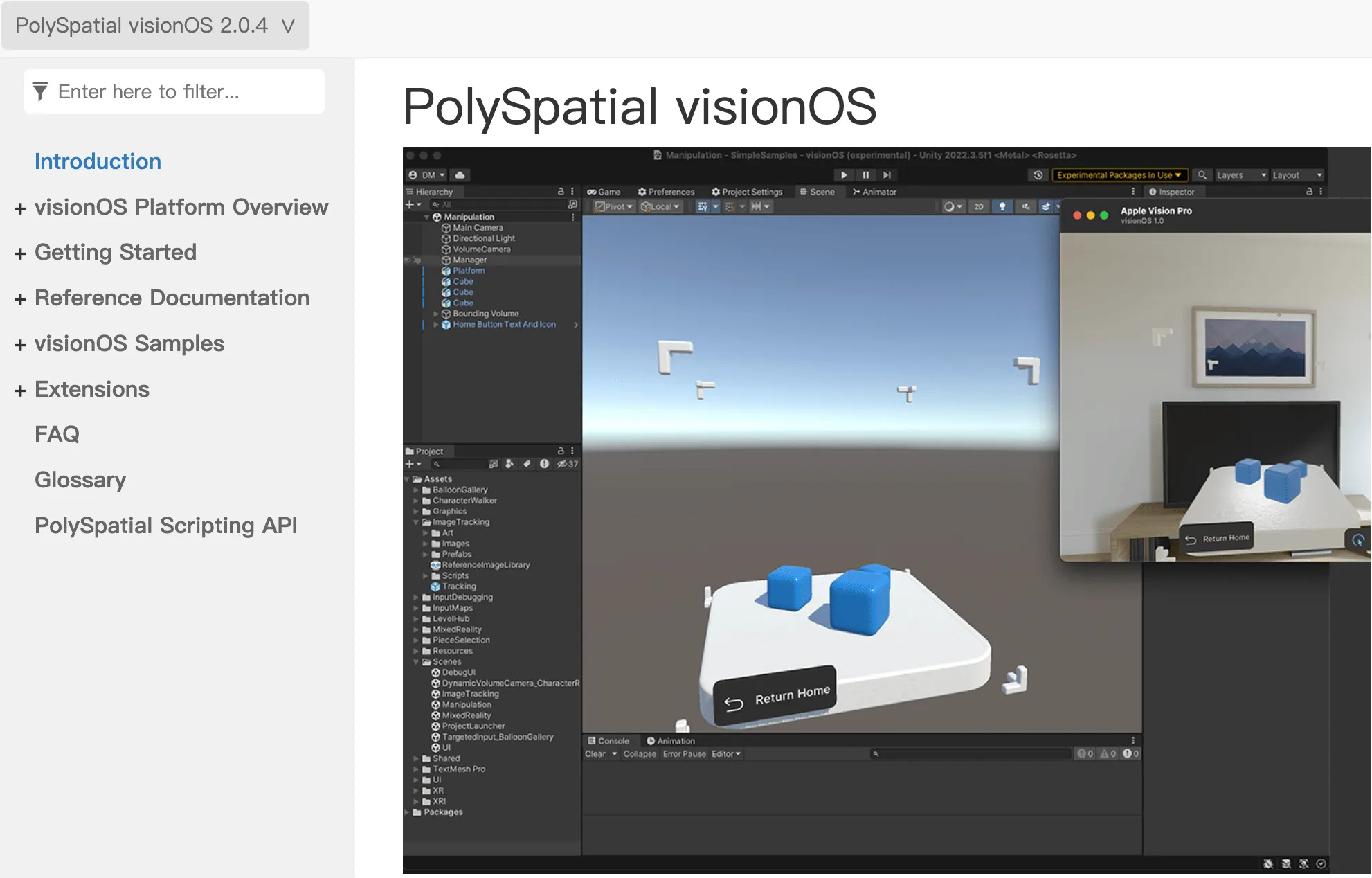

Unity 6 is officially released, and PolySpatial exits preview with version 2.0.4

After six months of testing, the first LTS version of Unity 6 (6000.0.23f1) was officially released on October 16, 2024. Unity also published a blog post introducing all the new features.

In short, the main new features of Unity 6 include:

- Performance improvement: the GPU Resident Drawer delivers a 2x performance improvement in some scenarios. Developers can try it out with the Fantasy Kingdom sample on the Asset Store.

- Simpler multiplayer game development: Unity provides a demo called Megacity Metro as an example.

- Better support for the Web: the WebAssembly-based Unity Web platform is more complete. Unity projects now officially run in mobile web browsers and can even use local storage like a PWA.

- Better multi-platform workflow support: this mainly means Build Profiles and the Platform Browser. The former lets you set different build configurations for each platform, and the latter lets you preview each platform’s configuration in the Unity editor.

- Improved visual tools: improvements to lighting, VFX, and other tools let developers achieve more polished visuals in Unity 6.

In addition, PolySpatial 2.0 has also exited preview, with the official release at version 2.0.4.

However, the PolySpatial 2 template project has not been updated yet and is still using the previous 6000.0.23f1 version, so please be careful⚠️ when initializing projects from the template.

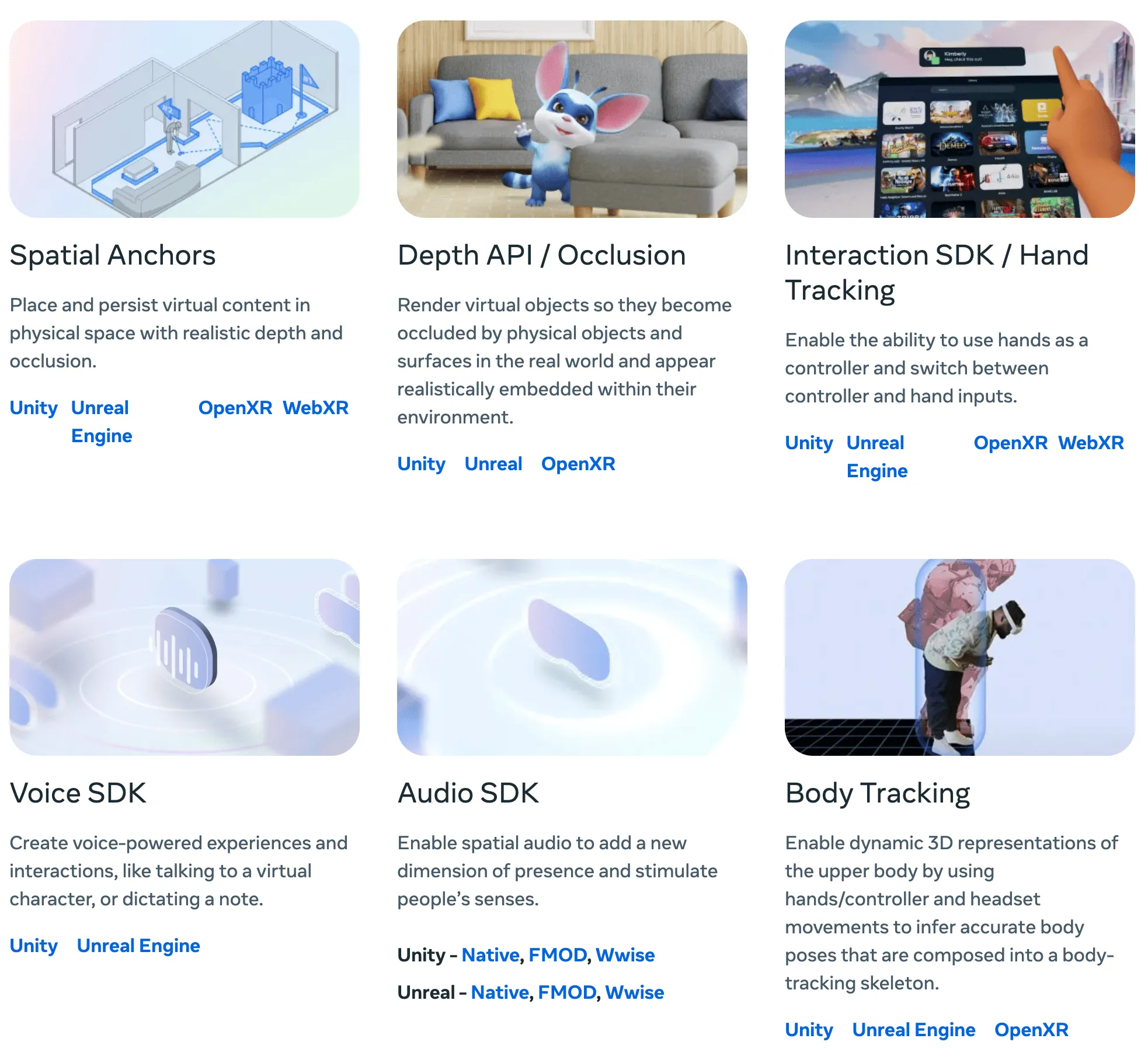

Meta has updated its developer documentation

Perhaps feeling the pressure of Apple’s strong developer relations, Meta has recently not only released the Spatial SDK but also redesigned its MR developer homepage. Much like the visionOS developer homepage, it groups existing technologies into categories, giving developers who are new to the field a clearer picture of the overall structure.

Tool

ShaderVision

Hey, old buddy, still struggling to write shaders on visionOS? Then you need ShaderVision. With it, you can write shader code directly on Apple Vision Pro and preview the effect in real time.

It’s worth mentioning that the author of ShaderVision also built Pulsargeist at VisionHack, which we’ve covered before, and it won first place in the Games and Entertainment category.

Article

Drawing Graphics on Apple Vision with the Metal Rendering API

In this article, the author shows how to use the Metal rendering API to draw graphics on Apple Vision Pro. If you want to learn Metal rendering on visionOS, this article is a good place to start.

Configuring iOS Development Environment in Cursor/VSCode

If you’re new to visionOS development and rely heavily on AI assistance, VSCode or Cursor is an ideal place to start.

However, because of some toolchain limitations, these editors need some configuration before they integrate smoothly with the iOS development environment.

In this article, the author explains how to set up an iOS development environment in Cursor/VSCode. The third step is particularly important: it lets your Swift code be properly recognized instead of being covered in red error messages.

Video

Hands-On: Meta Orion Augmented Reality Glasses!

In this video and the accompanying article, the author digs into some technical details of Meta Orion, including its battery life (about three hours) and resolution (12 PPD, or pixels per degree, roughly half that of Quest 3).
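PPD describes how many pixels cover one degree of your field of view, which is why it says more about perceived sharpness than raw resolution does. As a rough sketch (an approximation, not how the author or Meta measured it), an average PPD can be estimated by dividing the pixel count along one axis by the field of view along that axis. The function name and the numbers below are hypothetical, not Orion or Quest 3 specs.

```python
def pixels_per_degree(pixels: int, fov_degrees: float) -> float:
    """Rough average angular resolution along one axis.

    Optics rarely spread pixels evenly, so published PPD figures (often
    measured at the center of the view) can differ from this average.
    """
    if fov_degrees <= 0:
        raise ValueError("fov_degrees must be positive")
    return pixels / fov_degrees


# Hypothetical displays, not real device specs.
low = pixels_per_degree(1200, 100.0)
high = pixels_per_degree(2400, 100.0)
print(low, high, low / high)  # 12.0 24.0 0.5
```

Under this simple model, halving PPD over the same field of view means half as many pixels across it, which is the sense in which 12 PPD is "about half" of a roughly 24 PPD display.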

Submerged: Technical Breakdown

As the saying goes, amateurs watch for fun, experts watch for technique. If you’re curious about how Submerged was filmed but find the official behind-the-scenes footage a bit vague, check out this in-depth analysis.

The author works in VR filmmaking himself, so he can break down, from a professional perspective, details that are easy to miss in the fleeting shots of the official behind-the-scenes footage.

Code

Meta Horizon UI Set

Unlike Apple, with its extensive and detailed Human Interface Guidelines (HIG) and design requirements, Meta for a long time seemed to have no real “UI specification”.

Recently, however, Meta launched a UI component library called Meta Horizon OS UI Set. It contains common UI components such as buttons, input fields, and sliders, so developers can design their app UIs much faster.

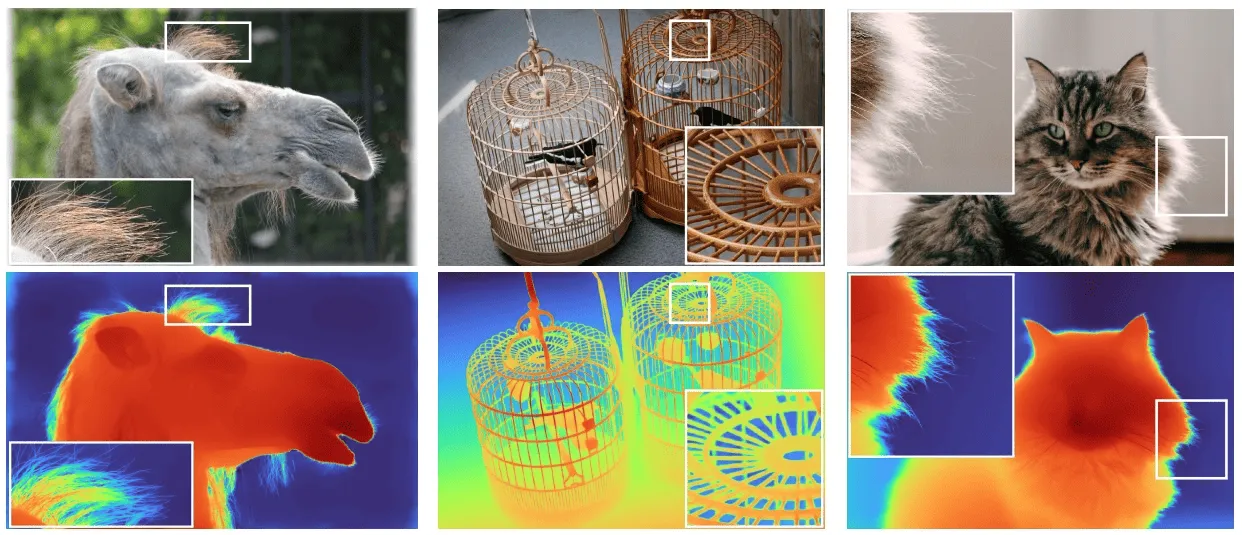

Depth Pro: Apple’s Official Open-Source Depth Detection Model

Depth Pro is Apple’s official open-source depth estimation model. It estimates depth from a single photo, which enables depth-based features such as background blur and portrait segmentation. Judging from the published examples, its accuracy is quite high. (I wonder if visionOS’s feature for converting ordinary photos into spatial photos uses this model too? 🤔)
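To make "background blur from depth" concrete, here is a deliberately simplified sketch. It does not call Depth Pro; it assumes you already have a per-pixel depth map in meters and a grayscale image, both as plain Python lists of equal size. The function names and the `max_depth_m` threshold are hypothetical. Pixels nearer than the threshold count as foreground and stay sharp; every other pixel is replaced with a box-blurred value.

```python
from typing import List

Grid = List[List[float]]


def foreground_mask(depth: Grid, max_depth_m: float) -> List[List[bool]]:
    """Mark pixels at or nearer than max_depth_m (hypothetical threshold) as foreground."""
    return [[d <= max_depth_m for d in row] for row in depth]


def box_blur(image: Grid, radius: int = 1) -> Grid:
    """Each output pixel is the mean of its neighbourhood, clipped at the image borders."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def depth_blur(image: Grid, depth: Grid, max_depth_m: float, radius: int = 1) -> Grid:
    """Keep foreground pixels sharp and swap background pixels for blurred ones."""
    mask = foreground_mask(depth, max_depth_m)
    blurred = box_blur(image, radius)
    return [
        [image[y][x] if mask[y][x] else blurred[y][x] for x in range(len(image[0]))]
        for y in range(len(image))
    ]


# Toy 3x3 example: the two left columns are 1 m away, the right column is 5 m away.
image = [[0.0, 0.0, 9.0]] * 3
depth = [[1.0, 1.0, 5.0]] * 3
result = depth_blur(image, depth, max_depth_m=2.0)
print(result)  # [[0.0, 0.0, 4.5], [0.0, 0.0, 4.5], [0.0, 0.0, 4.5]]
```

Real portrait effects usually vary blur strength with depth and refine edges around hair and other fine detail; a hard threshold like this one is only the simplest version of the idea.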

Contributors of This Issue

XReality.Zone
