XR World Weekly 015

This Issue’s Cover

For this issue’s cover, we share a concept design by Ben Artis titled Apple Maps for visionOS. It’s a reminder that the Maps app in the release version of visionOS is still just a windowed, compatibility-mode app, which makes Ben’s concept feel quite forward-looking.

In addition to the cover, Ben has also designed a series of product promotion pages, very much in the Apple style. Interested friends can click the link for more details.

Table of Contents

BigNews

- Spline released multiple major updates related to the Apple platform

- Meta Quest SDK welcomes a wave of updates

Idea

- MR smart home control app “Immersive Home(BETA)” arrives on App Lab and Side Quest

- An aquarium on the wall!

- Neko Neko at home, petting cats!

Video

- Unity 6 New Features Introduction Series

Article

- In-depth analysis of the spatial computing industry: related devices, key elements, industry chain, and relevant companies

Code

- Custom rendering with Metal on visionOS

- Spatial Metal: Custom rendering on visionOS using Metal and Compositor Services

- Third-party keyboards on visionOS: Vision Keyboard Kit

- Hand tracking debugging on the visionOS simulator

SmallNews

- Ray-Ban Meta smart glasses + multimodal AI, a true smart life assistant

BigNews

Spline releases multiple major updates related to the Apple platform

Keywords: Spline, visionOS, SwiftUI

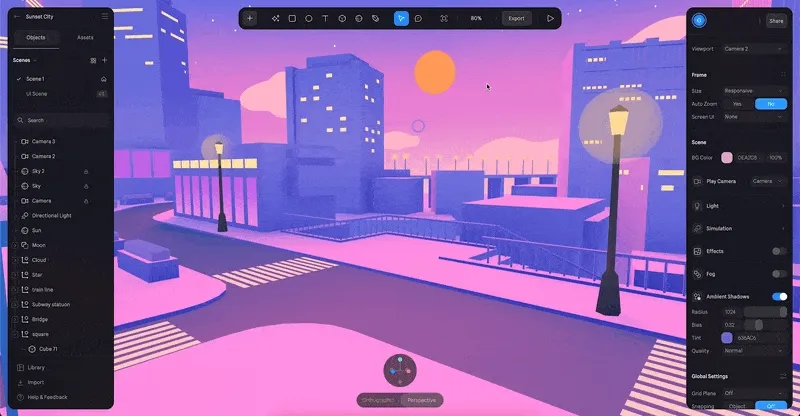

Hey, friends, remember Spline, which we first recommended back in Issue 001? The Spline team recently released an update video previewing some fun features they plan to launch at the Spline Online Event on December 19th:

- Particles

- 3D embed for Apple Platforms

- Generate App

- UI Scenes

Let’s take a closer look at these fun features.

First up, the particle system. Now, in Spline, we can finally use particle systems to create more “dynamic” scenes:

The particle system is just the beginning. The next three updates are quite revolutionary.

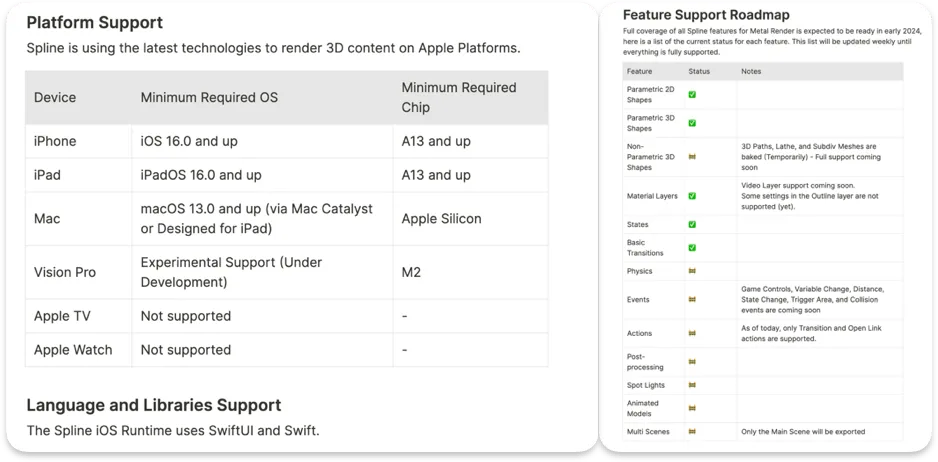

First, 3D embed for Apple Platforms. Simply put, the Spline team has used Metal, Apple’s flagship graphics framework, to make embedding 3D scenes on Apple platforms nearly seamless. With Spline’s SplineRuntime, developers can embed a scene exported from Spline as a .splineswift file using just a few lines of code, like this:

```swift
import SplineRuntime
import SwiftUI

struct ContentView: View {
    var body: some View {
        // Fetching from local: a .splineswift file bundled with the app
        // let url = Bundle.main.url(forResource: "scene", withExtension: "splineswift")!

        // Fetching from cloud: a scene hosted by Spline
        let url = URL(string: "https://build.spline.design/8DX7ysrAJ3oDSok9hNgs/scene.splineswift")!

        // Render the scene full-screen, ignoring safe areas
        try? SplineView(sceneFileURL: url).ignoresSafeArea(.all)
    }
}
```

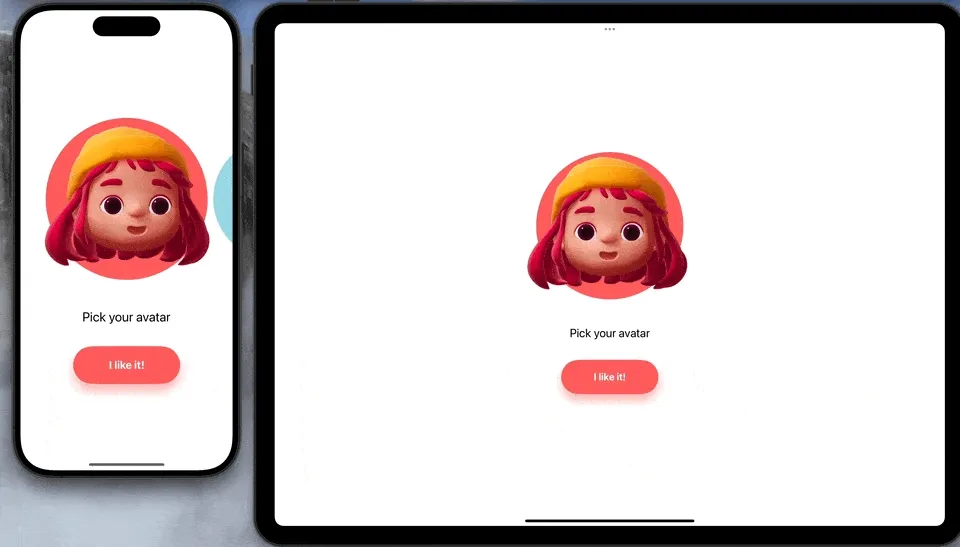

If your app needs a 3D scene like the one below, Spline’s new feature will undoubtedly be a big help:

Moreover, with the event system Spline has been steadily improving, you can even build a game that runs on iPhone/iPad directly in Spline:

This feature is still in Beta, and neither its Apple platform support nor its coverage of Spline’s own features is complete yet, but its future potential is definitely exciting!

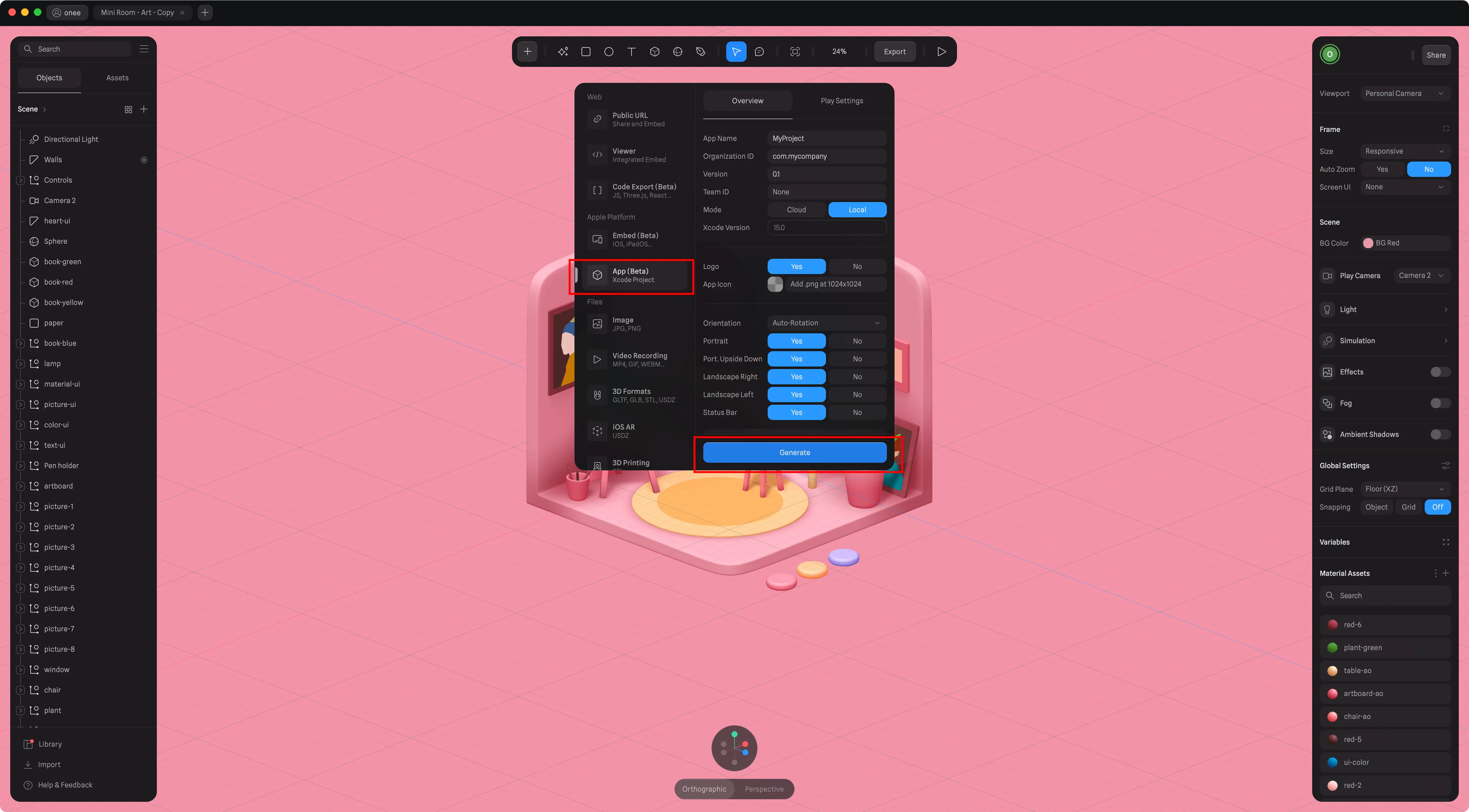

Besides, if you’re not familiar with Apple platform development but know Spline well, you can use Spline’s newly launched Generate App feature to quickly generate an Xcode project. From there, as long as you know the basic app submission process, you can publish an app on Apple’s App Store.

Tips

Similarly, this feature is currently in Beta, and you also need to subscribe to Spline Super to use it.

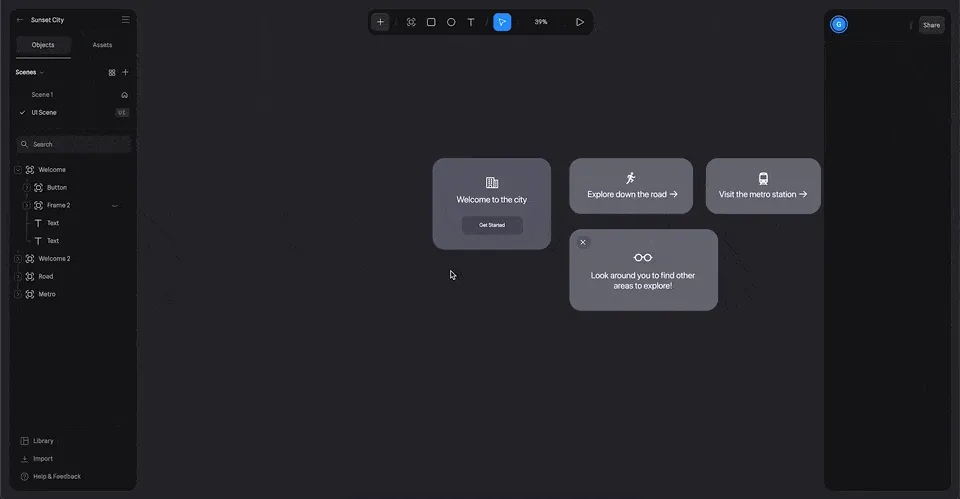

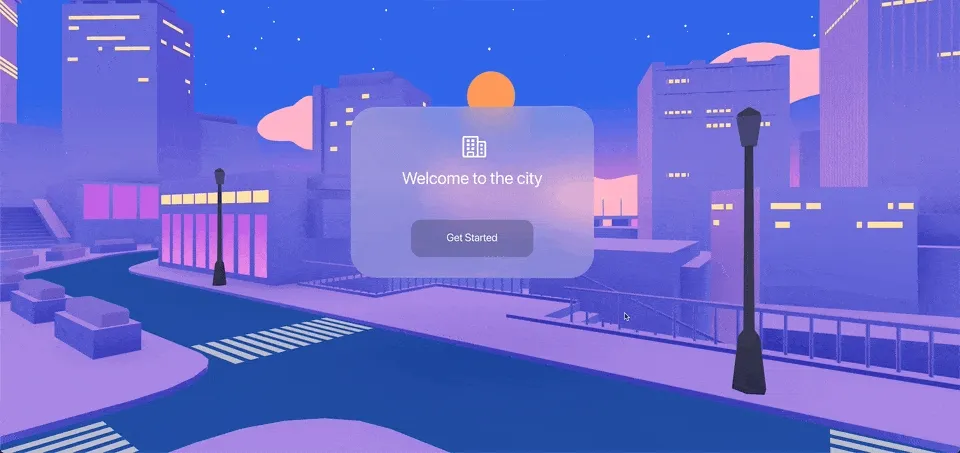

The first two features are aimed at helping modelers collaborate better with Apple platform developers. The next feature is intended to assist spatial computing scene interaction designers in prototyping more effectively. Now, in Spline, you can use UI Scenes to design 2D views within Spline:

And embed these flat UI Scenes into 3D scenes, much like windows in visionOS:

Thanks to the event system Spline has built, buttons in a UI Scene can interact seamlessly with the entire 3D scene. For example, pressing “Get Started” in the UI Scene can transform the lighting and shadows of the whole scene:

Tips

Like the previous two features, UI Scenes is still at an early stage (Early Preview). If you run into any difficulties using it, the Spline team welcomes all kinds of feedback.

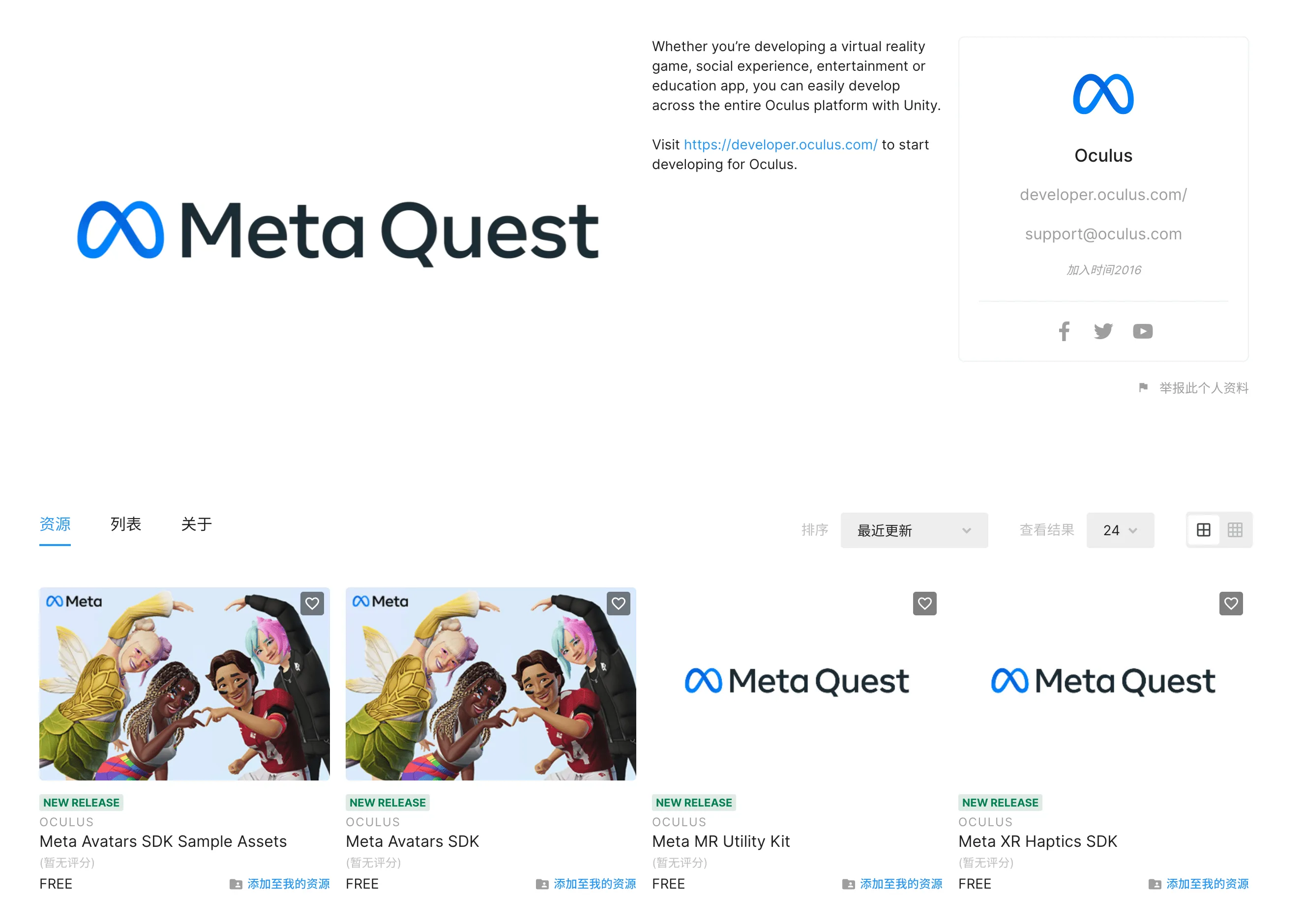

Meta Quest SDK welcomes a wave of updates

Keywords: Meta, Quest, Avatar SDK, MR Utility Kit, Haptics SDK, Passthrough Relighting (PTRL)

Recently, with the release of Quest v60.0, Meta updated the Meta XR Haptics SDK and the Meta Avatars SDK, and officially launched the MR Utility Kit, which Meta promised at Meta Connect 2023 and which we mentioned in Detailing Meta Quest 3’s MR Technology Upgrades.

Tips

Meta also published a blog post introducing each of these three SDK updates.

With Meta Avatars SDK v24, Meta mainly published the SDK on the Unity Asset Store, made some performance optimizations, and made the default Avatar appearance look a bit more realistic. In the comparison below, for example, you can see that the Avatars’ hair, skin, and clothing show more natural light reflections.

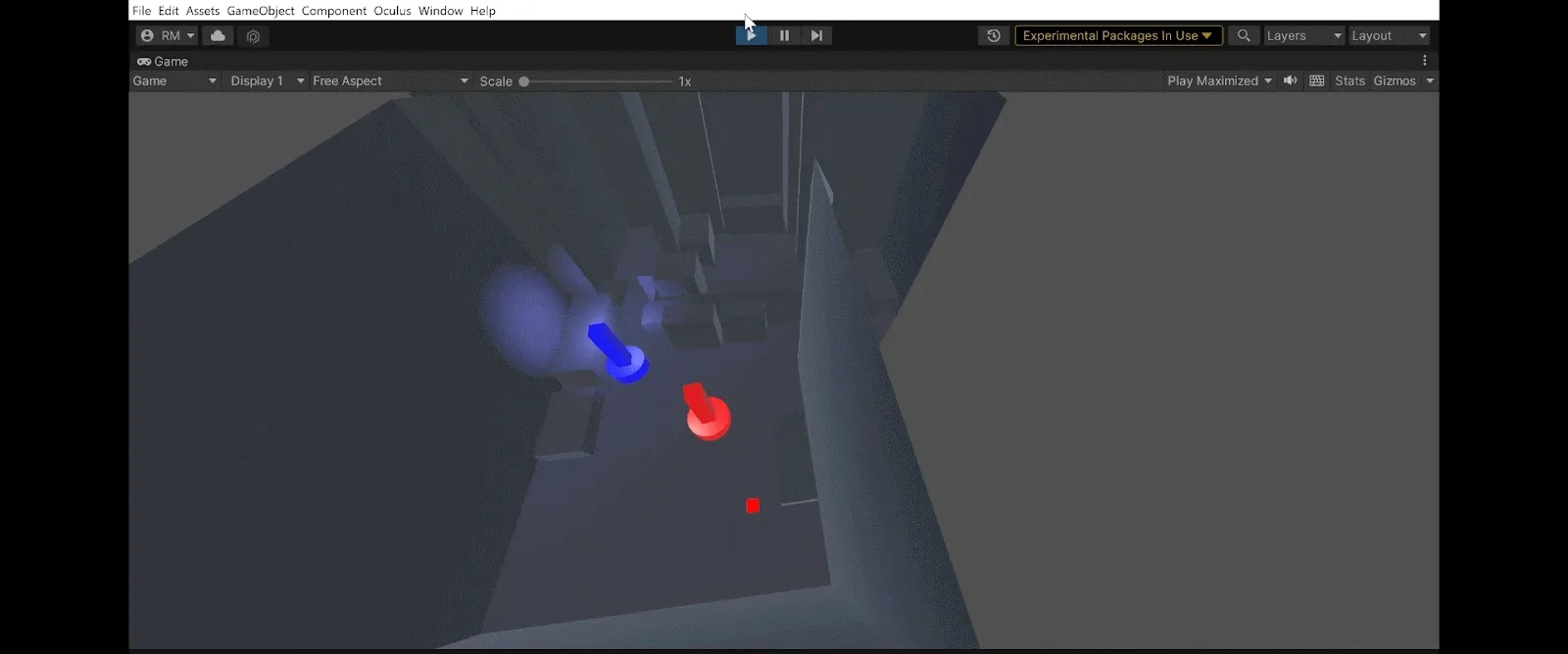

The release of the new MR Utility Kit can help developers build an MR App more quickly. As the name of this SDK (Utility Kit) suggests, it is not a core SDK providing complex functions, but a collection of resources, utility functions, and debugging tools. Many of these tools are built on the Scene API. Currently, the basic features included in the MR Utility Kit are:

- Scene queries

- Graphical Helpers

- Development Tools

- Passthrough Relighting (PTRL)

Let’s discuss these features one by one.

Scene queries make it easy to check positions in the scene and how they relate to the real world. For instance, we can quickly check whether a position is inside the room using the IsPositionInSceneVolume API.

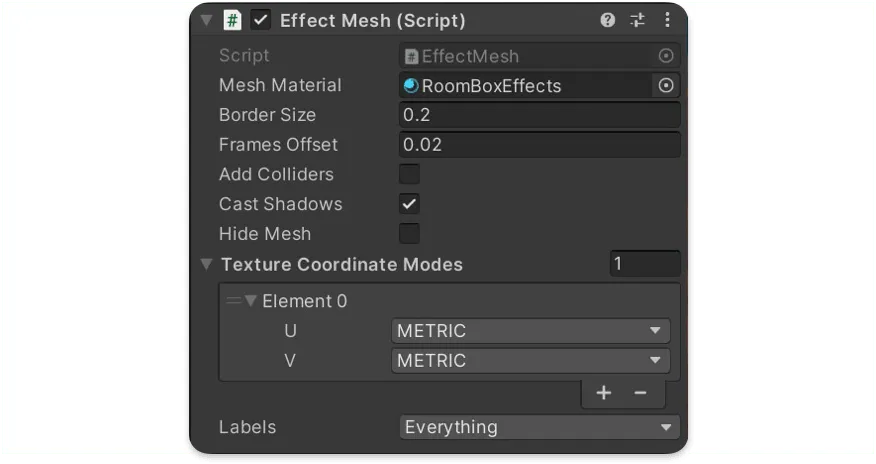
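MR Utility Kit itself is a Unity (C#) SDK, so the following is not its API. It is a minimal, hypothetical Swift sketch of the kind of geometric test a query like IsPositionInSceneVolume performs, assuming a scene volume is a box described by a center, a yaw rotation around the vertical axis, and half-extents. The point is moved into the box’s local frame and compared against the box’s extents.

```swift
import Foundation

// Hypothetical types for illustration only; not part of MR Utility Kit.
struct Vec3 {
    var x: Double, y: Double, z: Double
    static func - (a: Vec3, b: Vec3) -> Vec3 { Vec3(x: a.x - b.x, y: a.y - b.y, z: a.z - b.z) }
}

/// A scene volume modeled as a box rotated only around the vertical (y) axis.
struct SceneVolume {
    var center: Vec3
    var yaw: Double        // rotation around +y, in radians
    var halfExtents: Vec3  // half of width, height, and depth, in meters

    /// Returns true if `point` (world space) lies inside the box.
    /// `tolerance` grows the box slightly so points near the surface count as inside.
    func contains(_ point: Vec3, tolerance: Double = 0) -> Bool {
        // Translate into the box's frame, then undo its yaw rotation.
        let d = point - center
        let c = cos(-yaw), s = sin(-yaw)
        let localX = c * d.x + s * d.z
        let localZ = -s * d.x + c * d.z
        return abs(localX) <= halfExtents.x + tolerance
            && abs(d.y) <= halfExtents.y + tolerance
            && abs(localZ) <= halfExtents.z + tolerance
    }
}
```

For example, `SceneVolume(center: Vec3(x: 0, y: 0, z: 0), yaw: .pi / 4, halfExtents: Vec3(x: 1, y: 0.5, z: 0.1)).contains(Vec3(x: 0.5, y: 0, z: -0.5))` is true, because that point lies along the box’s rotated long axis.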

Graphical Helpers assist with rendering. For example, using the Effect Mesh component provided by the SDK, we can easily apply textures to real-world surfaces such as walls, ceilings, and floors.

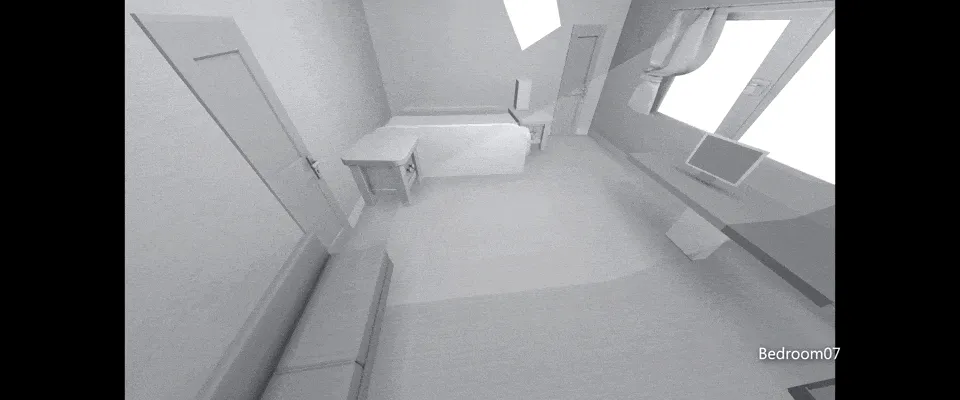

Development Tools are self-explanatory: tools that make debugging easier. The SDK mainly provides the Scene Debugger and Room Prefabs. The former lets developers visually inspect the scene queries mentioned above, while the latter offers ready-made room prefabs for rapid development against a room layout:

Now for a little teaser about Passthrough Relighting (PTRL). Remember the video player with realistic light reflections, shared by a Redditor, that we mentioned in Issue 011?

At the time, we noted that the developer had implemented the effect on their own and that there was no low-cost way to achieve it. Now, the PTRL feature in MR Utility Kit is designed to help developers quickly add shadows:

And lighting to objects:

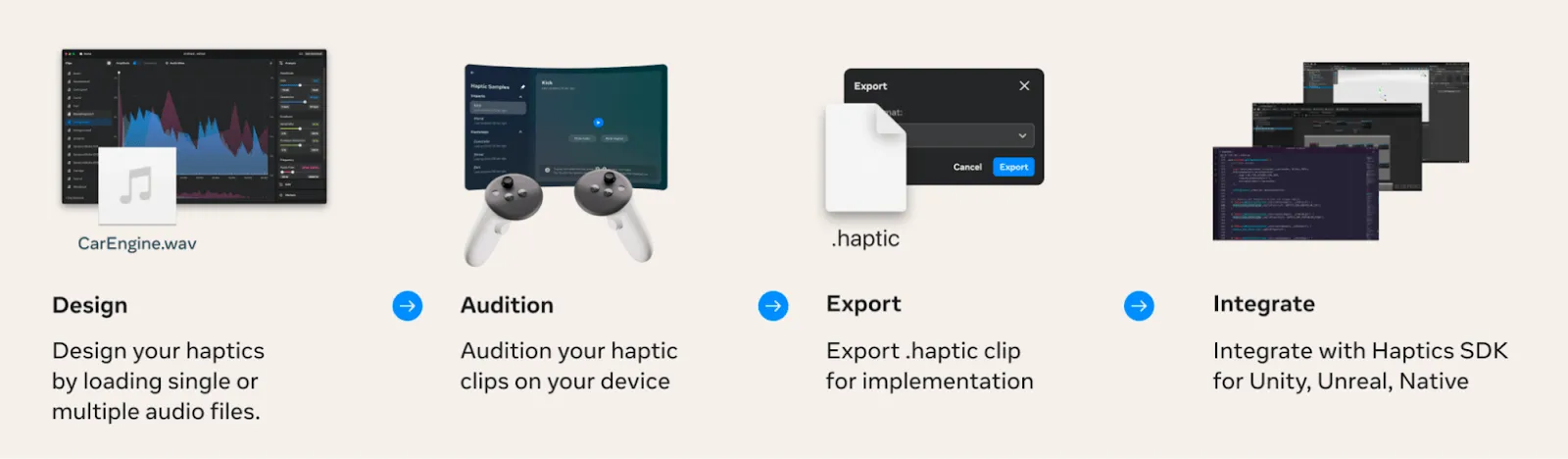

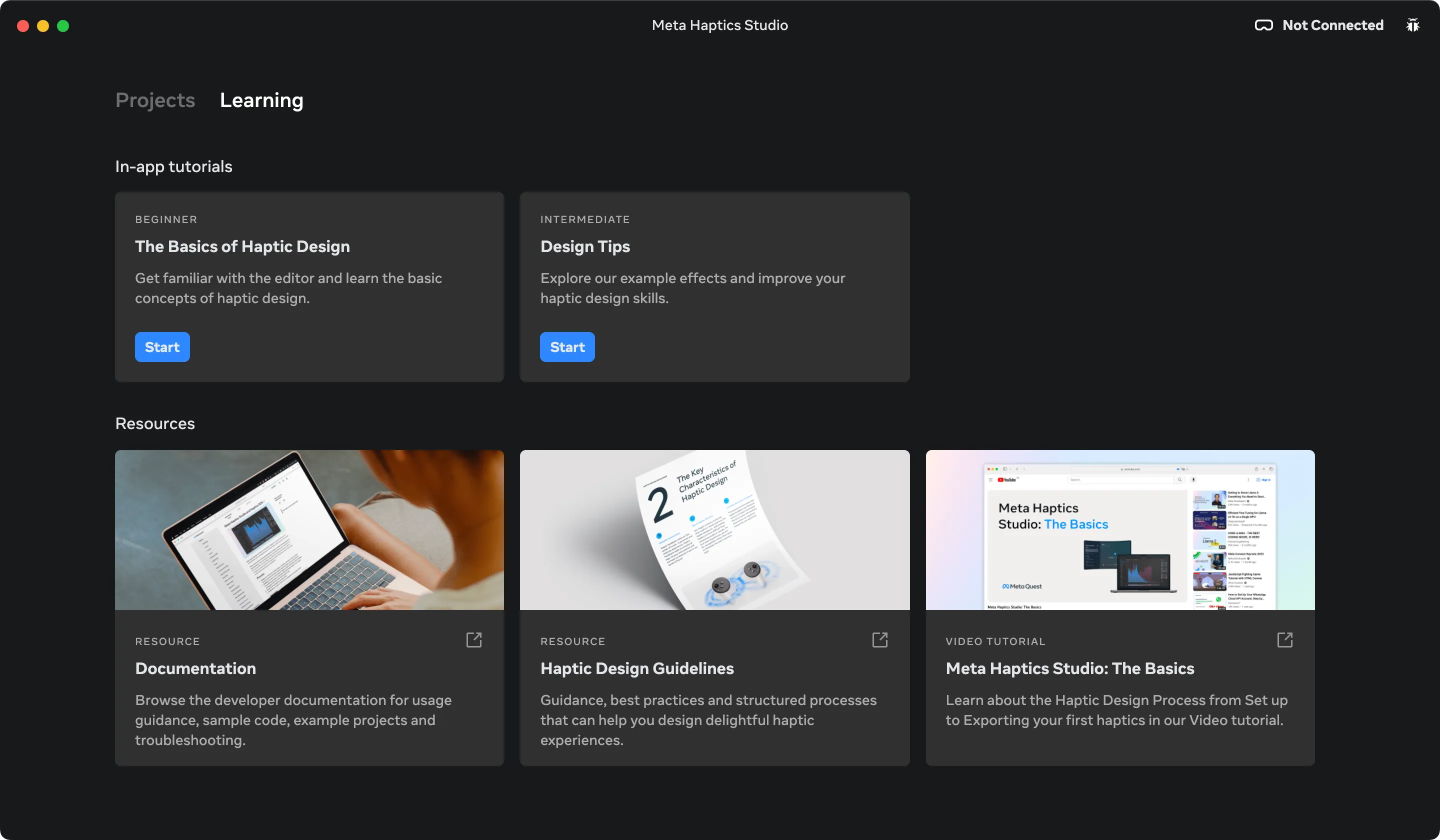

Finally, the Haptics SDK has left the Beta it entered in March and officially reached version 1.0. With the Meta Haptics Studio apps for Mac, Windows, and Quest, developers can use audio files to tweak the vibration waveforms of the controllers, preview them in the Quest app, and export them in the .haptic format recognized by the Unity/Unreal SDKs.

Additionally, the official version of the Studio now includes several tutorials for beginners, helping new developers get up to speed quickly.
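Meta doesn’t describe here how Haptics Studio turns audio into vibration, so the following is only a rough, hypothetical Swift sketch of one common starting point: reducing audio samples to a per-window RMS amplitude envelope, normalized to 0...1, which could then drive vibration strength over time. The window size and normalization are assumptions, and this is not the .haptic format.

```swift
/// One point of a hypothetical amplitude envelope: start time in seconds, amplitude in 0...1.
struct EnvelopePoint: Equatable {
    let time: Double
    let amplitude: Double
}

/// Splits mono PCM samples into fixed-size windows, computes each window's RMS level,
/// and normalizes so the loudest window maps to 1.0. (Illustrative sketch only.)
func amplitudeEnvelope(samples: [Float], sampleRate: Double, windowSize: Int) -> [EnvelopePoint] {
    precondition(windowSize > 0 && sampleRate > 0)
    var rmsValues: [Double] = []
    var start = 0
    while start < samples.count {
        let end = min(start + windowSize, samples.count)
        let window = samples[start..<end]
        let sumOfSquares = window.reduce(0.0) { $0 + Double($1) * Double($1) }
        rmsValues.append((sumOfSquares / Double(window.count)).squareRoot())
        start = end
    }
    let peak = rmsValues.max() ?? 0
    return rmsValues.enumerated().map { pair in
        EnvelopePoint(
            time: Double(pair.offset * windowSize) / sampleRate,  // window start time
            amplitude: peak > 0 ? pair.element / peak : 0
        )
    }
}
```

A real tool would also smooth the envelope and detect transients for sharp "clicks", but the envelope alone already captures the idea of audio-driven amplitude.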

Idea

MR Smart Home Control App “Immersive Home(BETA)” Arrives on App Lab and Side Quest

Keywords: MR, smart home

We recently came across several interesting apps on Side Quest and would like to share them with you.

Let’s start with a practical one. Major platforms have promoted the smart home concept for many years, and there have been some demos combining smart homes with XR. In our last issue, for instance, we introduced a smart home control interface a designer created for Vision Pro, and there are plenty of other concept videos. Now there is an actual, working MR application, “Immersive Home (BETA)”, on Side Quest and Quest App Lab.

This smart home app by Nils Twelker utilizes Quest 3’s MR capabilities for a fascinating application: users wearing Quest 3 can view power consumption by gazing at or pointing to smart home devices and toggle lights with a wave of the hand. Alternatively, a virtual control panel can be opened to operate dozens of smart home devices. The developer notes that while it runs better on Quest 3, Quest 2 is also compatible.

According to the plans on the official website, beyond the current gesture-based power viewing and light switching, the developer hopes to add automation control, voice interaction, whole-house power consumption, and weather display in the future.

Note that your smart home devices must be connected to Home Assistant before they can be paired with the app. More information is available on the app’s official website, and the project repository is also linked here.
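Home Assistant exposes a REST API, so a client can toggle a light by sending `POST /api/services/light/toggle` with a long-lived access token and the target `entity_id`. The Swift sketch below only builds such a request. The host, token, and entity ID are placeholders, and this is not necessarily how Immersive Home itself talks to Home Assistant.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLRequest lives here on Linux
#endif

/// Builds a Home Assistant service call such as `POST /api/services/light/toggle`.
/// `baseURL`, `token`, and `entityID` are placeholders supplied by the caller (hypothetical helper).
func makeServiceRequest(baseURL: URL, token: String, domain: String,
                        service: String, entityID: String) throws -> URLRequest {
    let url = baseURL
        .appendingPathComponent("api")
        .appendingPathComponent("services")
        .appendingPathComponent(domain)
        .appendingPathComponent(service)
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    request.setValue("Bearer \(token)", forHTTPHeaderField: "Authorization")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    // Target a single entity, e.g. "light.living_room".
    request.httpBody = try JSONSerialization.data(withJSONObject: ["entity_id": entityID])
    return request
}
```

An app would then send the request with `URLSession.shared.dataTask(with:)`, for example against `http://homeassistant.local:8123`, Home Assistant’s default port.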

An Aquarium on the Wall!

Keywords: MR, aquarium

The next app is Ocean Rift, one of the earliest modern VR experiences.

Their recent update adds support for the Quest series’ new mixed reality mode. For example, Quest 2 users can draw a square area on a wall with their fingers to display their favorite fish species, as if turning their home into an aquarium, and can add different lighting effects to the environment. On Quest 3, the app can recognize the room and walls the user previously set up, turning the selected wall into the aquarium’s glass. Placed on the ceiling, it can even simulate the lighting seen from underwater. Head over to Side Quest or the Quest Store to bring the aquarium home.

Neko Neko at Home, Petting Cats!

Keywords: MR, cats, Neko Atsume

The next app comes from developer Hit-Point, who previously developed a mobile game called Neko Atsume: Kitty Collector. This game allows you to collect different breeds of cartoon cats and interact with them. The iOS version is available here, and the Android version can be downloaded here.

Now this adorable cat-petting and collecting game is available on Meta Quest! With Quest 3’s passthrough, you can easily bring cats into your home, collect different breeds, and play with them using items like catnip. Better still, you can pick the cats up with your hands (with the newly released Haptics SDK, that should feel great), so the interaction should be far more engaging than on mobile. Interested friends can get it here on the Quest Store.

Video

Unity 6 New Features Introduction Series

The Unity 6 New Features series introduces new features in Unity 6. For the USD format, Unity no longer recommends the old USD packages; use the new OpenUSD packages instead, which support USD v23.02. They provide import and export of scenes and animations, meet the needs of collaborative creation of large scenes, and offer a powerful composition engine that can assemble new scenes losslessly from numerous assets.

The new toolkit includes USD Importer, USD Exporter, and USD core:

- USD Importer: A tool for importing USD files into Unity;

- USD Exporter: A tool for exporting from Unity to USD files;

- USD core: A C# wrapper based on the open-source USD engine.

Article

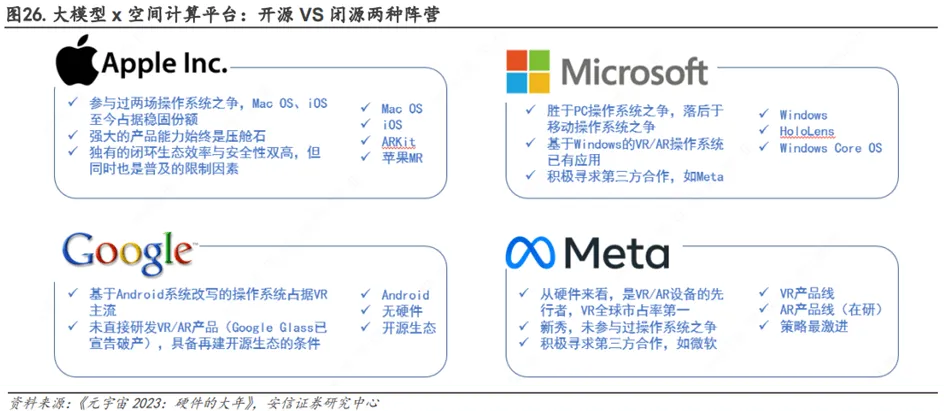

In-depth Analysis of the Spatial Computing Industry: Related Devices, Key Elements, Industry Chain, and Relevant Companies

Keywords: Spatial computing, Apple Vision Pro, Meta

The In-depth Report on the Spatial Computing Industry, compiled by Hui Bo from multiple industry reports, covers a wide range of content on the current landscape and future development of spatial computing and XR, and is highly valuable for understanding these fields.

The report opens with “What is Spatial Computing” and “What Role Does Spatial Computing Play”, arguing that raising computing into a new dimension is a disruptive trend of the era. It then analyzes the key technologies, the device industry chain, and relevant companies, and finally discusses today’s factions and main tracks before making predictions: large models × spatial computing platforms, with a string of hit products expected in 2024 and 2025.

The full report is lengthy, so here is a summary of the aspects it covers:

- Overview of Spatial Computing

- The Disruptive and Inevitable Nature of Spatial Computing

- Key Elements of Spatial Computing Platforms

- Analysis of the Spatial Computing Device Industry Chain

- Related Companies

- Analysis of Factions and Main Tracks

- Future Outlook

Code

Custom Rendering with Metal on visionOS

Keywords: Metal, visionOS, Compositor Services

Metal Spatial Rendering is a sample program that uses Metal, ARKit, and visionOS Compositor Services for custom rendering. In WWDC 2023 session 10089, Apple unveiled the Compositor Services framework and demonstrated its basic usage. It lets developers implement fully custom 3D rendering on visionOS with Metal (including metal-cpp), making it possible to integrate third-party rendering engines with visionOS.

Fully immersive scene: similar to VR’s fully virtual mode, a fully immersive scene can still present elements such as hands, tables, and floors, but has no video passthrough.

However, Apple only showed key code excerpts with explanations, not a complete sample. This project by metal-by-example is a complete sample built from Apple’s public information. It implements basic lighting and model loading, making it a valuable reference for developers who need to integrate custom rendering into visionOS.

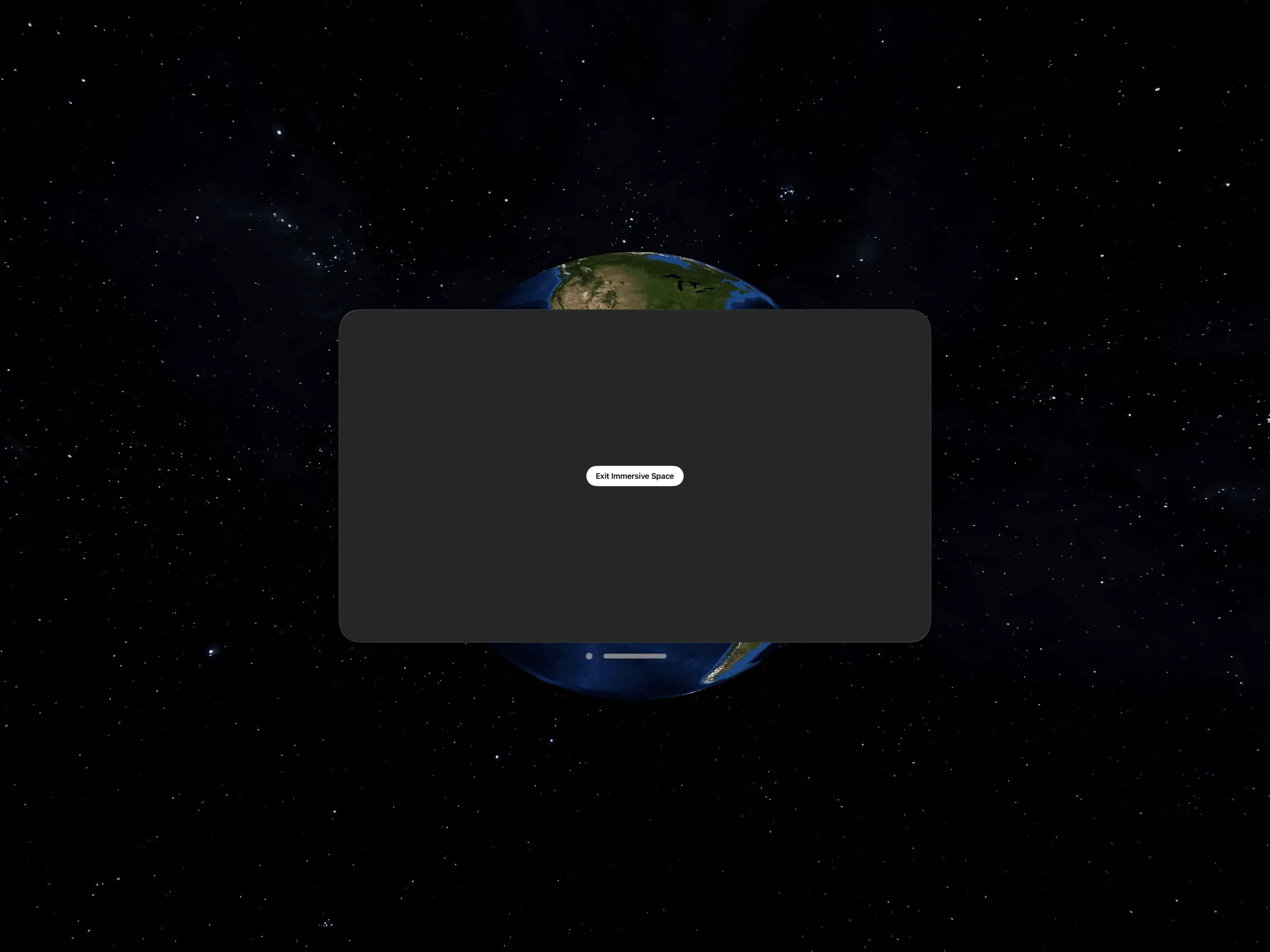

Spatial Metal: Custom Rendering on visionOS Using Metal and Compositor Services

Keywords: Metal, Compositor Services, visionOS

Similar to the Metal Spatial Rendering project, SpatialMetal and SpatialMetal2 are sample programs for custom rendering on visionOS using Metal and Compositor Services. They render an Earth model in the foreground and a starry sky in the background. The difference is that SpatialMetal2 implements multiple uniform buffers for stereo (two-eye) rendering.
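We haven’t traced SpatialMetal2’s source, so the following is only an assumption about what multiple uniform buffers for stereo rendering commonly look like in Metal apps: a small ring of per-frame uniform slots (so the CPU can fill one while the GPU still reads another), where each slot holds one set of uniforms per eye. It is plain Swift with hypothetical type names, using arrays in place of `MTLBuffer` so it runs anywhere.

```swift
/// Hypothetical per-eye data; a real renderer would store simd_float4x4 matrices.
struct EyeUniforms: Equatable {
    var viewProjection: [Float] = Array(repeating: 0, count: 16)  // column-major 4x4
}

/// One frame's uniforms: one entry per eye (left = 0, right = 1).
struct FrameUniforms {
    var eyes: [EyeUniforms] = [EyeUniforms(), EyeUniforms()]
}

/// A fixed ring of uniform slots, following the triple-buffering pattern of Apple's
/// Metal templates. In a real app each slot would be a region of an MTLBuffer.
struct UniformRing {
    let maxFramesInFlight: Int
    private(set) var slots: [FrameUniforms]
    private(set) var currentIndex = 0

    init(maxFramesInFlight: Int = 3) {
        precondition(maxFramesInFlight > 0)
        self.maxFramesInFlight = maxFramesInFlight
        self.slots = Array(repeating: FrameUniforms(), count: maxFramesInFlight)
    }

    /// Advances to the next slot and writes both eyes' uniforms into it.
    /// The caller must ensure the GPU is done with that slot (e.g. via a semaphore).
    @discardableResult
    mutating func beginFrame(left: EyeUniforms, right: EyeUniforms) -> Int {
        currentIndex = (currentIndex + 1) % maxFramesInFlight
        slots[currentIndex].eyes = [left, right]
        return currentIndex
    }
}
```

The returned index tells the encoder which slot to bind for this frame; a vertex shader would then pick the left or right entry based on the view being rendered.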

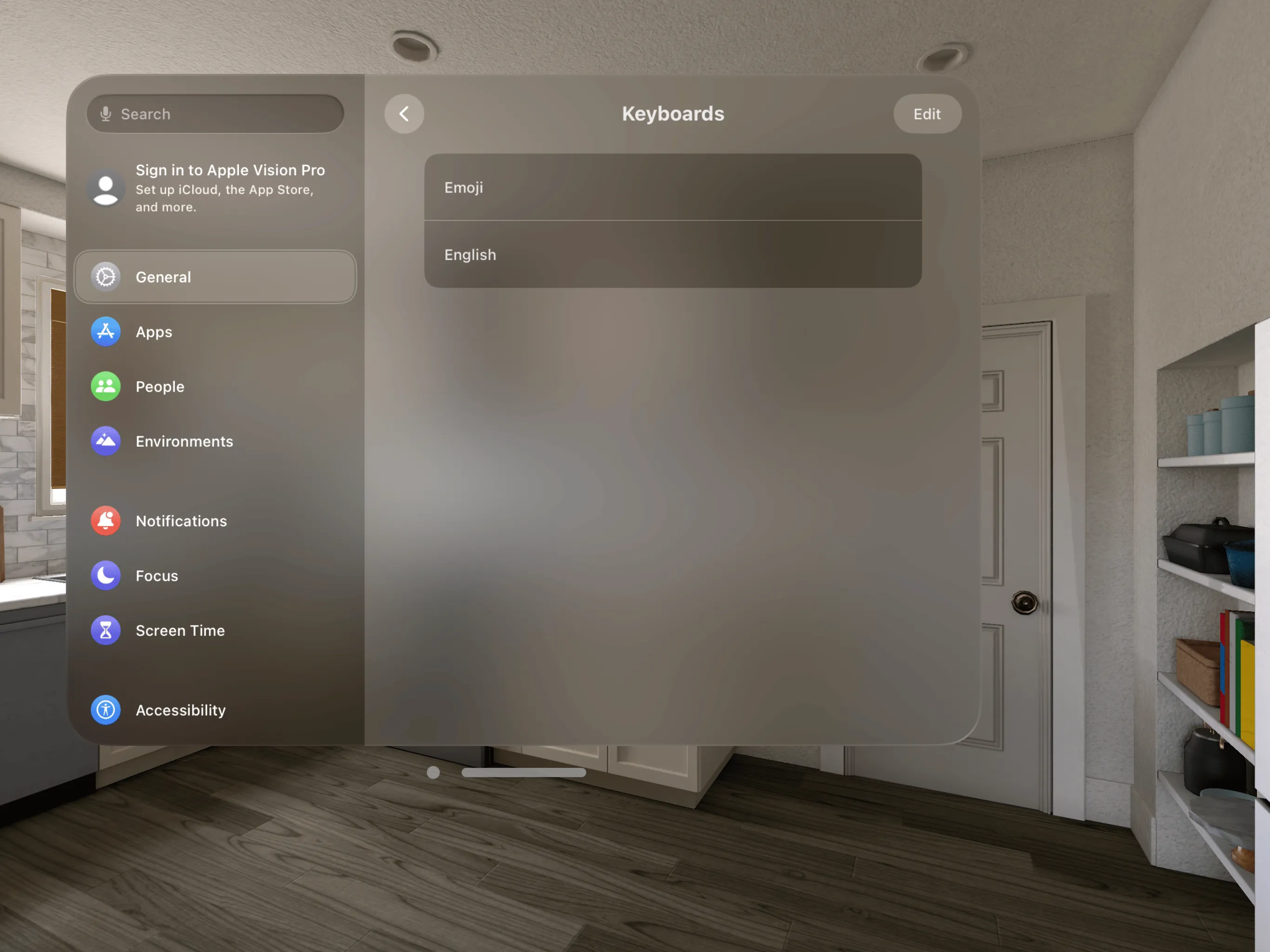

Third-Party Keyboards on visionOS: Vision Keyboard Kit

Keywords: visionOS, Third Party Keyboard

VisionKeyboardKit is a keyboard component for visionOS that supports both a full-size 104-key layout and a compact 60% layout without a numeric keypad.

However, since the visionOS simulator currently does not support installing third-party keyboards, it cannot provide system-level keyboard support and can only be embedded in your own app as a regular window, much like the secure in-app keyboards found in banking apps.
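Because such a keyboard is just part of the app, the app itself owns the text being edited rather than a system input method. A minimal, hypothetical Swift model of that idea (not VisionKeyboardKit’s actual API) might map key presses onto a text buffer like this:

```swift
/// Hypothetical key events an in-app keyboard view could emit.
enum KeyPress {
    case character(Character)
    case space
    case backspace
    case returnKey
}

/// Hypothetical layouts matching the two modes described above.
enum KeyboardLayout {
    case fullSize      // 104 keys, including the numeric keypad
    case sixtyPercent  // compact layout without the numeric keypad

    var showsNumericKeypad: Bool { self == .fullSize }
}

/// Owns the text being edited; a SwiftUI keyboard view would call `handle(_:)`
/// from each key button and display `text` in its own field.
struct InAppKeyboardModel {
    var layout: KeyboardLayout = .sixtyPercent
    private(set) var text = ""
    /// Set when the user presses Return, so the app can submit the text.
    private(set) var didSubmit = false

    mutating func handle(_ key: KeyPress) {
        switch key {
        case .character(let c): text.append(c)
        case .space: text.append(" ")
        case .backspace: if !text.isEmpty { text.removeLast() }
        case .returnKey: didSubmit = true
        }
    }
}
```

Keeping the model separate from the view also makes it easy to swap between the full-size and 60% layouts without touching the editing logic.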

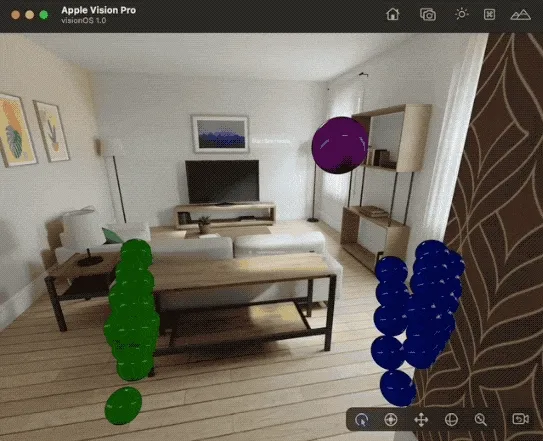

Hand Tracking Debugging on the visionOS Simulator

Keywords: visionOS, Hand Tracking, Simulator

VisionOS-SimHands is a project for debugging hand tracking on the visionOS simulator, using a webcam, Bonjour, and Google MediaPipe. The project is divided into two parts:

- A macOS app that uses the Google MediaPipe framework and the Mac’s camera to perform 3D hand tracking, and starts a Bonjour service to transmit the hand-tracking data.

- A visionOS project running in the simulator, which starts a Bonjour service to receive gesture information and display it in visionOS.

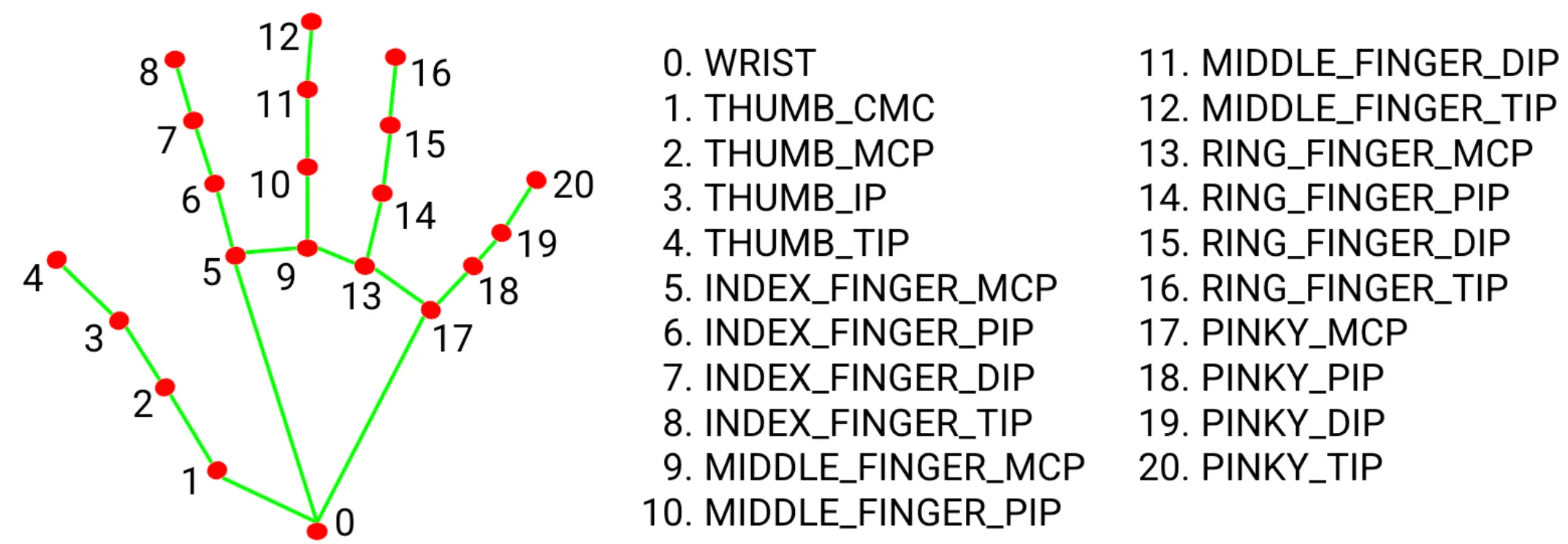

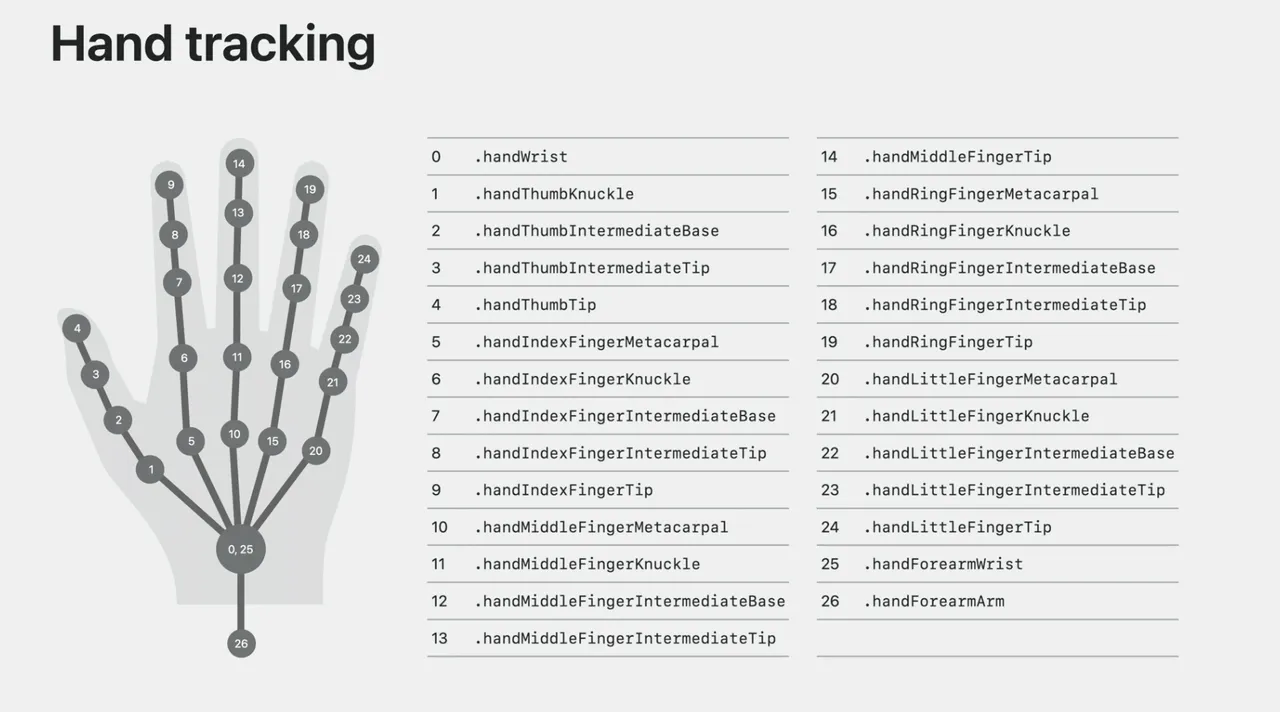
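The project’s actual wire format isn’t described here, so as an assumption, one simple way to ship tracked hands over a Bonjour-discovered connection is to encode each frame as newline-terminated JSON. The hypothetical `HandFrame` type below shows such a message and its round trip; the field names are illustrative, not VisionOS-SimHands’ own.

```swift
import Foundation

/// Hypothetical message sent from the macOS tracker to the visionOS simulator app.
struct HandFrame: Codable, Equatable {
    enum Chirality: String, Codable { case left, right }

    struct Joint: Codable, Equatable {
        var name: String       // e.g. "indexFingerTip"
        var position: [Float]  // x, y, z in meters
    }

    var timestamp: Double      // seconds since the tracker started
    var chirality: Chirality
    var joints: [Joint]
}

/// Encodes a frame as JSON plus a trailing newline, so the receiver can split a TCP stream into messages.
/// (Compact JSON never contains raw newlines, so the delimiter is unambiguous.)
func encodeFrame(_ frame: HandFrame) throws -> Data {
    var data = try JSONEncoder().encode(frame)
    data.append(UInt8(ascii: "\n"))
    return data
}

/// Decodes one message, ignoring the trailing newline.
func decodeFrame(_ data: Data) throws -> HandFrame {
    var trimmed = data
    if trimmed.last == UInt8(ascii: "\n") { trimmed.removeLast() }
    return try JSONDecoder().decode(HandFrame.self, from: trimmed)
}
```

JSON keeps the protocol easy to inspect while debugging; a binary encoding would only matter at much higher frame rates.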

It’s important to note that Google MediaPipe recognizes a different set of hand joints than visionOS (21 landmarks versus 27 joints). Not only do the names differ, but MediaPipe also has fewer palm joints and lacks both the forearm joint and the wrist-root joint.
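Feeding MediaPipe’s landmarks into code written against visionOS hand tracking therefore requires a hand-written mapping. The sketch below uses MediaPipe’s published landmark order and mirrors the joint names of visionOS’s `HandSkeleton.JointName` as plain strings, so it runs without ARKit. It is an illustration, not necessarily how VisionOS-SimHands does it, and the one-to-one thumb mapping is an assumption. The joints left without a counterpart are exactly the four metacarpals and the two forearm joints.

```swift
/// MediaPipe Hands landmark order (indices 0...20).
let mediaPipeLandmarks = [
    "WRIST", "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP",
    "INDEX_FINGER_MCP", "INDEX_FINGER_PIP", "INDEX_FINGER_DIP", "INDEX_FINGER_TIP",
    "MIDDLE_FINGER_MCP", "MIDDLE_FINGER_PIP", "MIDDLE_FINGER_DIP", "MIDDLE_FINGER_TIP",
    "RING_FINGER_MCP", "RING_FINGER_PIP", "RING_FINGER_DIP", "RING_FINGER_TIP",
    "PINKY_MCP", "PINKY_PIP", "PINKY_DIP", "PINKY_TIP",
]

/// visionOS joint names (as in HandSkeleton.JointName), listed as strings for portability.
let visionOSJoints: [String] = {
    var names = ["wrist", "thumbKnuckle", "thumbIntermediateBase", "thumbIntermediateTip", "thumbTip"]
    for finger in ["indexFinger", "middleFinger", "ringFinger", "littleFinger"] {
        for part in ["Metacarpal", "Knuckle", "IntermediateBase", "IntermediateTip", "Tip"] {
            names.append(finger + part)
        }
    }
    return names + ["forearmWrist", "forearmArm"]
}()

/// Maps a MediaPipe landmark index to a visionOS joint name (hypothetical helper).
/// Finger MCP/PIP/DIP/TIP line up with Knuckle/IntermediateBase/IntermediateTip/Tip.
func visionOSJointName(forLandmark index: Int) -> String? {
    guard mediaPipeLandmarks.indices.contains(index) else { return nil }
    if index == 0 { return "wrist" }
    if index <= 4 {
        // Assumed one-to-one thumb mapping: CMC, MCP, IP, TIP.
        return ["thumbKnuckle", "thumbIntermediateBase", "thumbIntermediateTip", "thumbTip"][index - 1]
    }
    let fingers = ["indexFinger", "middleFinger", "ringFinger", "littleFinger"]
    let parts = ["Knuckle", "IntermediateBase", "IntermediateTip", "Tip"]
    let offset = index - 5
    return fingers[offset / 4] + parts[offset % 4]
}
```

On the visionOS side, the missing joints can be skipped or estimated, for example by placing each metacarpal between the wrist and the corresponding knuckle.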

This innovative project makes it much easier to debug hand-tracking code in the visionOS simulator, which cannot provide real hand-tracking data on its own, effectively reducing development costs.

SmallNews

Ray-Ban Meta Smart Glasses + Multimodal AI, a True Smart Life Assistant

Keywords: Meta, Ray-Ban, Smart Glasses, Multimodal AI

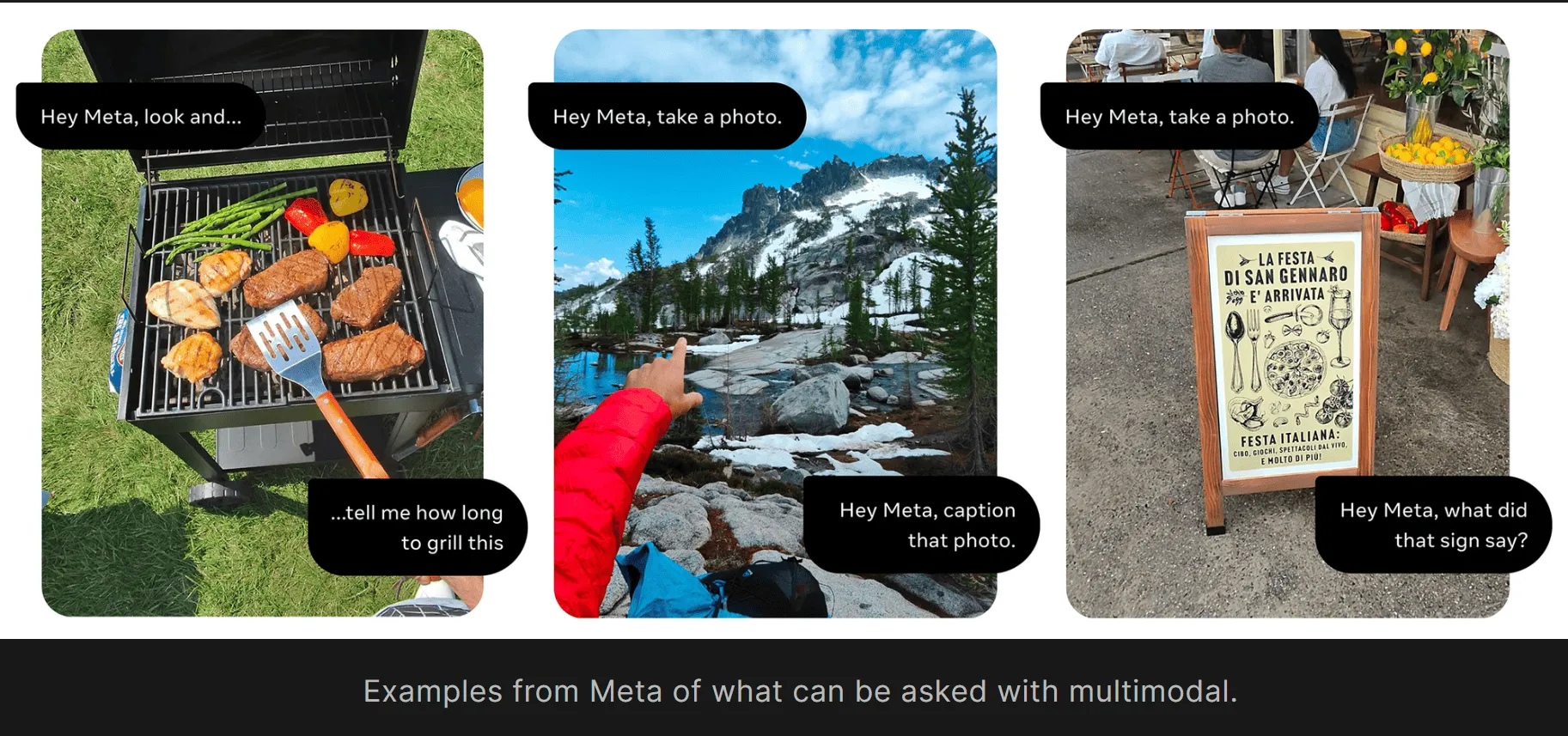

At the Connect conference in September this year, Meta and Ray-Ban launched their smart glasses. According to reports, Meta is now testing more advanced AI features on these glasses, rolling them out to a random subset of users in the United States for early testing of Meta AI’s image recognition on the glasses.

Meta’s CTO Andrew Bosworth revealed that the new AI feature is expected to reach all users of the smart glasses next year. It lets the glasses capture first-person photos and then uses Meta’s multimodal AI to answer questions about them, offering users fun experiences or practical help. For instance, you could ask the glasses how much longer to grill a steak for medium-rare, or have them translate a restaurant’s sign or menu in real time to help you order while traveling abroad.

In the following scene, for example, you could use the Ray-Ban glasses to try translating the poster:

Contributors to This Issue

| Link | Image |
|---|---|
| Onee |  |
| XanderXu |  |
| Puffinwalker.eth |  |

XReality.Zone