XR World Weekly 014

This Issue’s Cover

This issue’s cover features Smart Home Vision Pro OS UI, created by Yehor Haiduk 🇺🇦. Compare it with the compact list layouts of the smart home apps we are used to on our phones. Doesn’t it feel like the “unlimited canvas” of spatial computing gives designers, not just developers, more freedom?

Table of Contents

Big News

- AOUSD announced its roadmap for the next two years along with new partnership members.

- Official release of iOS 17.2: Start recording your own three-dimensional memories from now on.

- MLX — Another big move by Apple AI.

- Meta introduces Relightable Gaussian Codec Avatars to present real-time relit head models in VR.

Ideas

- AI + XR = A Door to Anywhere?

- Unmissable apps on Quest.

- Using Passthrough AR to meet from afar.

Tools

- Evolution of the Gaussian Splatting Web app: Special effects & WebXR.

- Generating 3D models from 2D sketches.

- Cognitive3D — A user analytics tool in XR.

Video & Code

- SwiftUI + Metal - Create special effects by building your own shaders.

Small News

- Wow! These gesture operations have been realized!

- Xbox Cloud Gaming (beta) lands on Quest.

Big News

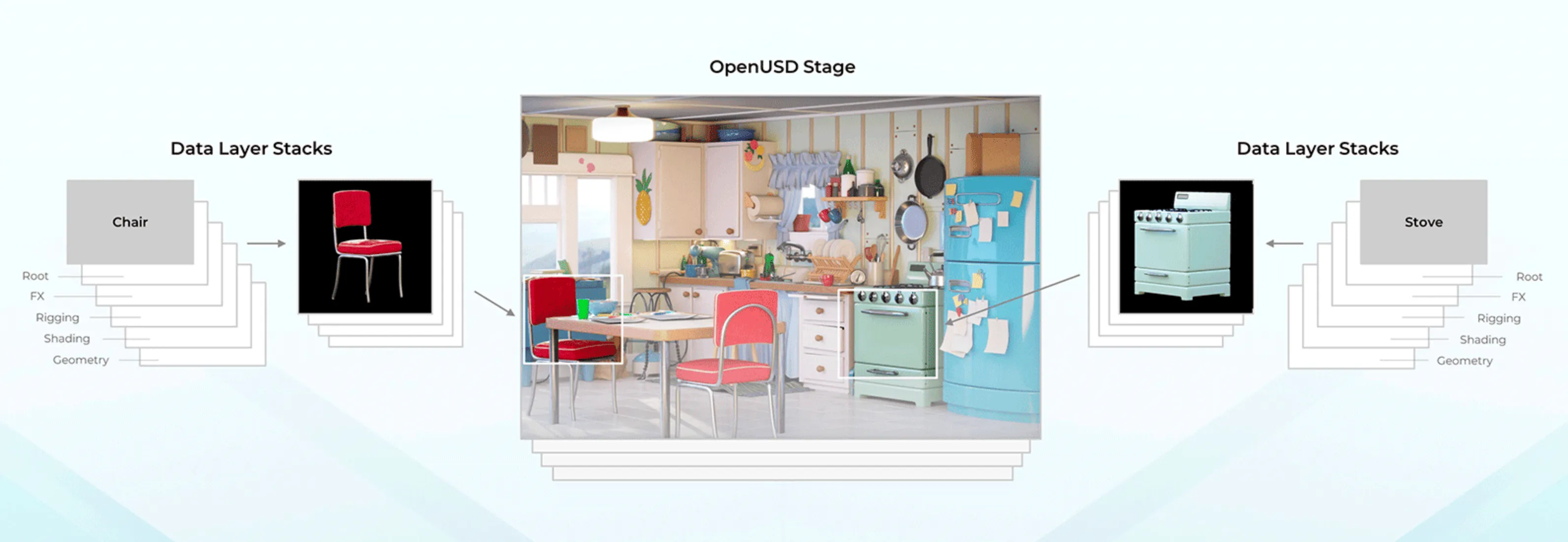

AOUSD Announces Its Two-Year Roadmap and New Members

Keywords: AOUSD, Unity, Meta, Khronos Group

In Issue 006, we introduced the establishment of AOUSD and its potential impact.

Four months have flown by, and AOUSD has released two major pieces of news: a two-year roadmap and new members including Cesium, Chaos, Epic Games, Foundry, Hexagon, IKEA, Lowe’s, Meta, OTOY, SideFX, Spatial, and Unity.

These new members lead their respective fields, such as film, gaming, retail, and research, and all of them need 3D models.

AOUSD also announced a collaboration with the Khronos Group, the organization behind glTF, to avoid unnecessary compatibility issues between glTF and USD.

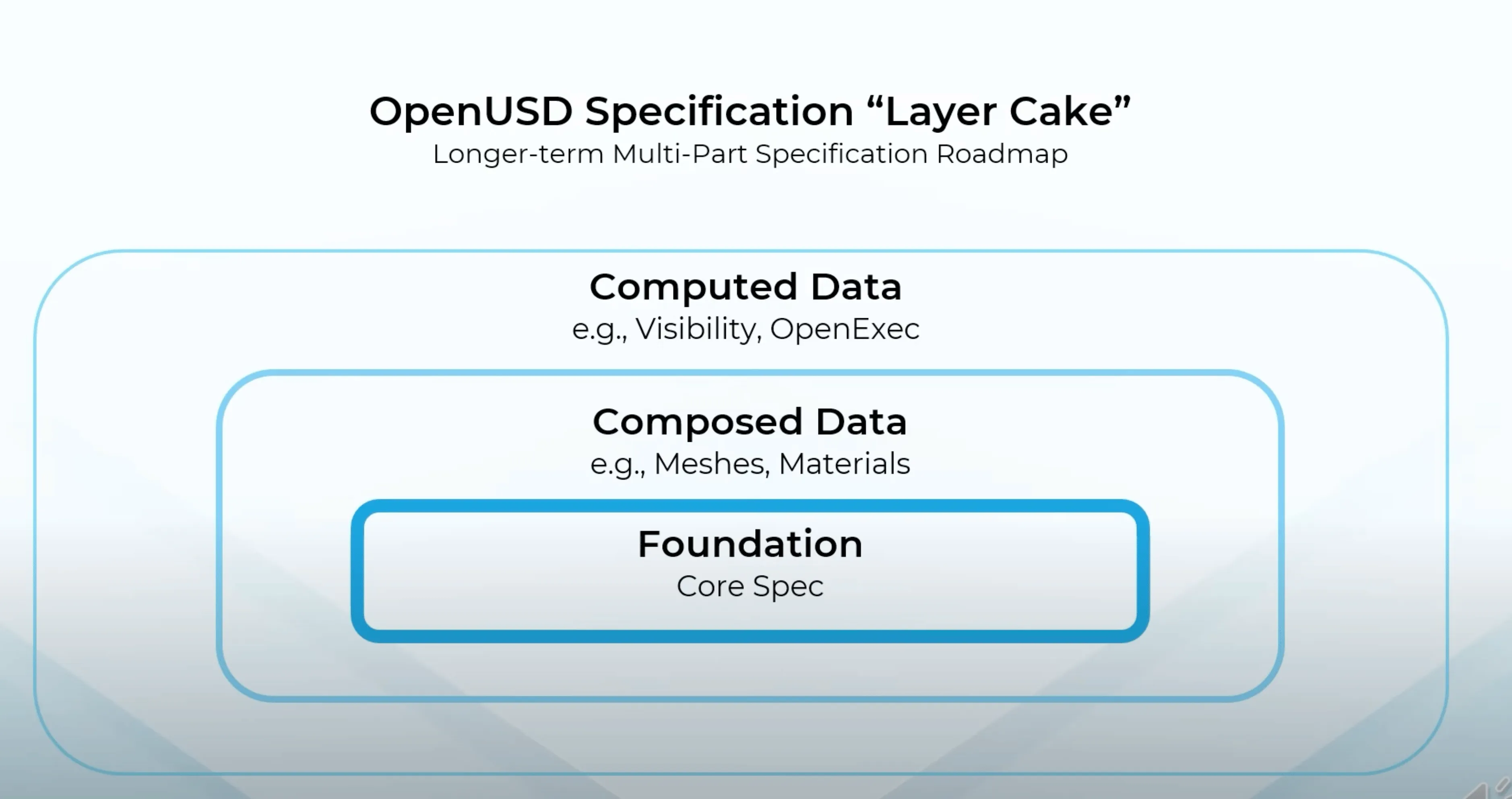

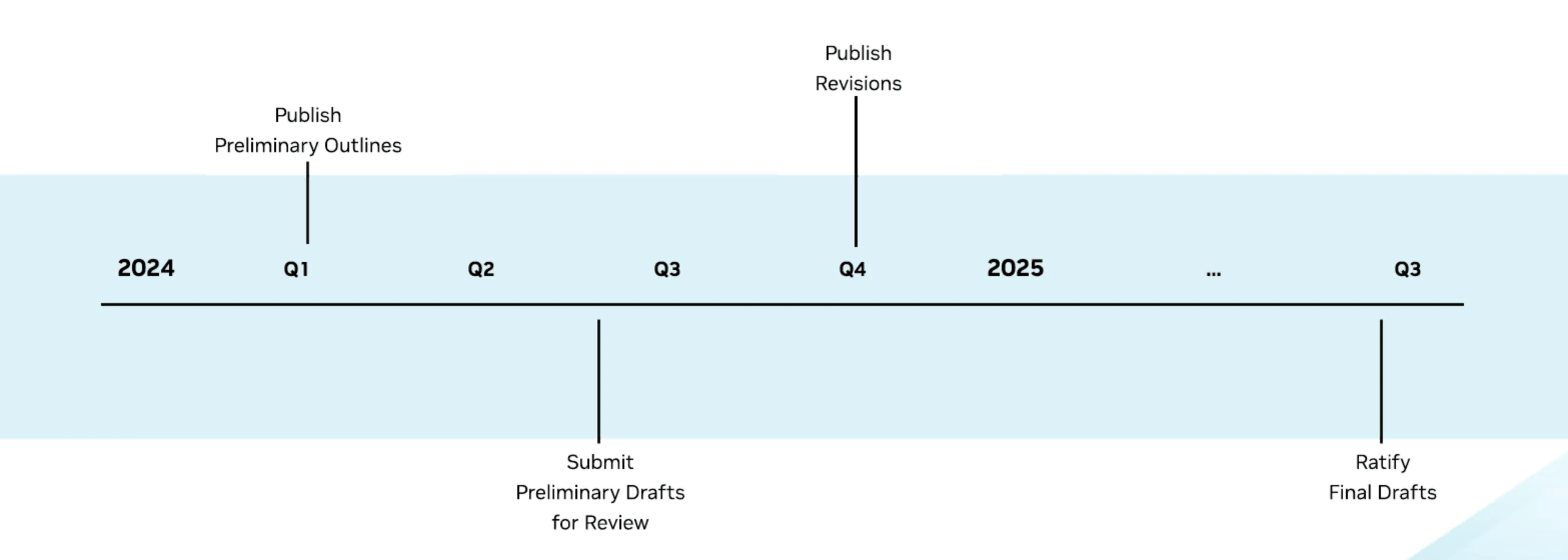

The roadmap divides AOUSD’s standards into three layers: Computed Data, Composed Data, and Foundation, with each outer layer building on the stable capabilities of the layers inside it.

Over the next two years, AOUSD will focus on the Foundation layer, planning to release its first draft in Q1 of 2024, revise it in Q4 of 2024, and finally publish the final draft in Q3 of 2025.

If all goes well, our newsletter should be past 60 issues by Q4 2025, and we’ll look back at what AOUSD has accomplished then. (Hey! Is it really wise to dig ourselves such a big hole this early? 🥴)

Official Release of iOS 17.2: Start Recording Your Own Three-Dimensional Memories

Keywords: iOS 17.2, Spatial Video, Apple Vision Pro

With the official release of iOS 17.2, owners of the iPhone 15 Pro series can finally try spatial video recording, a feature exclusive to these models. To help everyone get started, our article How to Play Spatial Video on iOS 17.2 covers the current ways to watch spatial video.

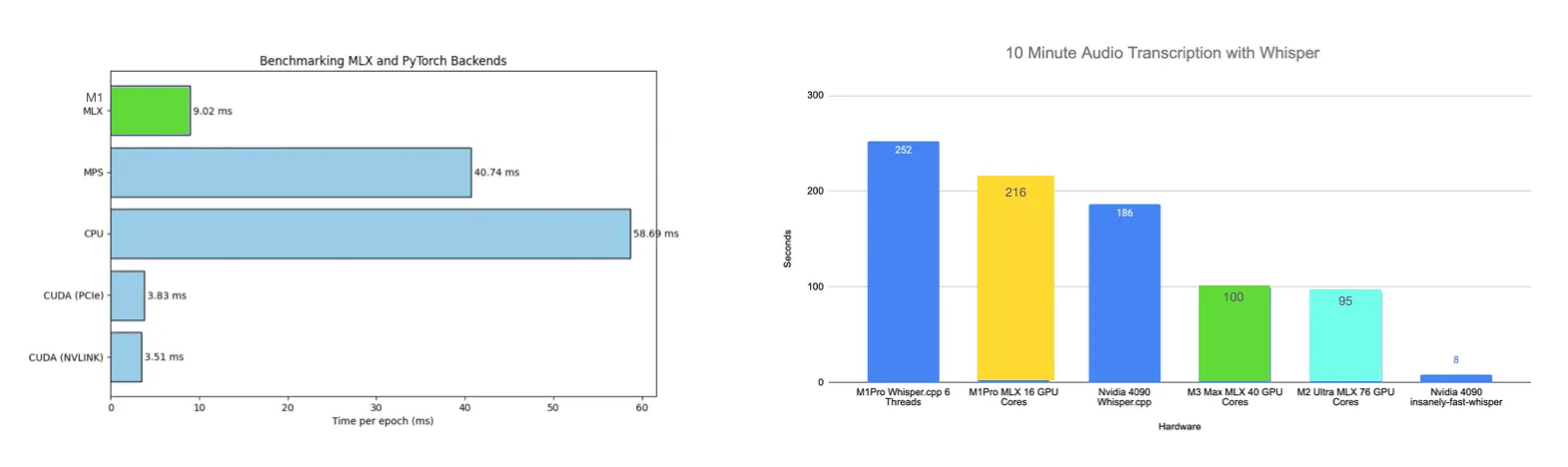

MLX — Another Big Move by Apple AI

Keywords: Deep Learning Framework, Apple Silicon, GPU

Deep learning enthusiasts are surely familiar with PyTorch, but Apple has recently launched its own deep learning framework: MLX! It’s not just one more framework for developers to choose from; it could be a game-changer for putting the Mac’s growing GPU performance to use!

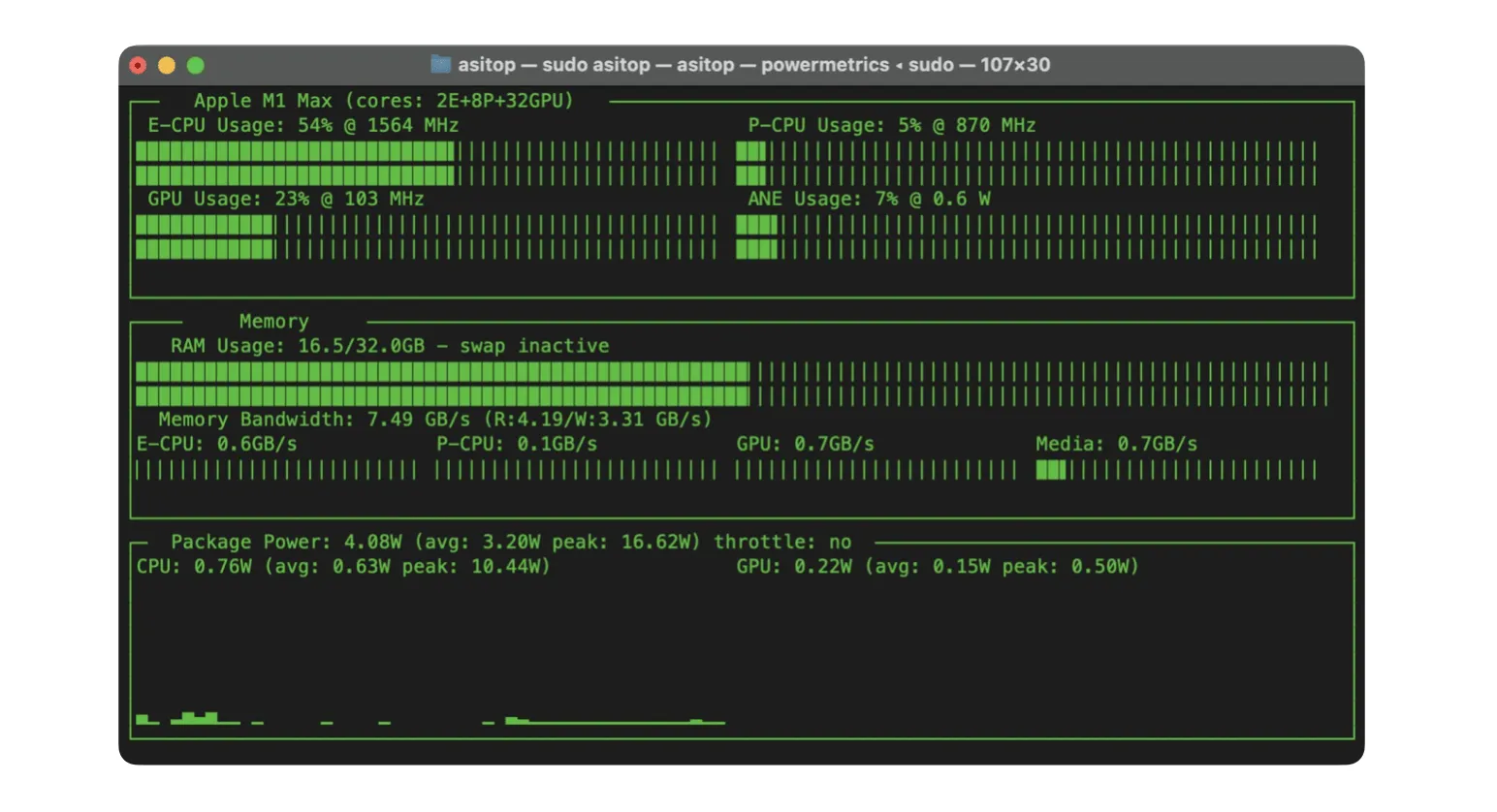

Above, left: training graph convolutional networks; right: real-time performance comparison with Whisper.

Still, you might wonder why Apple built MLX instead of building on PyTorch.

Apple engineers gave three reasons: MLX takes full advantage of Mac features such as unified memory (so developers no longer need to think about whether an array is created on the CPU or the GPU); it keeps the best parts of other frameworks; and the framework as a whole is simple, flexible, and hackable.

We agree, especially in domains where GPU memory is the bottleneck for many algorithms: thanks to unified memory, a Mac can easily offer more than the 80 GB of an NVIDIA H100. (Mac memory may be priced like gold, but it is still more reasonable than NVIDIA’s.) Apple has also provided official examples for several models:

- LLMs: Meta’s LLaMA (7B to 70B parameters), Mistral (7B), and Microsoft’s Phi-2 (2.7B).

- Speech recognition: OpenAI’s Whisper.

- Fine-tuning: LoRA.
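To see why memory is the bottleneck for models like these, here is a rough back-of-the-envelope sketch in Python. It counts only the weights (parameters × bytes per parameter) and ignores activations, the KV cache, and runtime overhead, so real usage will be higher. The 16-bit and 4-bit precisions are our own assumptions for illustration; this is not MLX code.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumption: weights dominate; activations, KV cache and runtime overhead are ignored.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS = {"Phi-2": 2.7e9, "Mistral 7B": 7e9, "LLaMA 70B": 70e9}

H100_GB = 80.0  # memory of one NVIDIA H100, as mentioned in the text


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(params, precision)
            verdict = "fits in" if gb <= H100_GB else "exceeds"
            print(f"{name:<10} {precision}: {gb:6.1f} GB ({verdict} one 80 GB H100)")
```

By this estimate, LLaMA 70B at 16-bit precision needs about 140 GB for its weights alone, more than a single H100 offers, while a Mac Studio with M2 Ultra can be configured with up to 192 GB of unified memory.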

If you wish to run models on mobile devices (like iPad and Apple Vision Pro equipped with Apple Silicon), you can refer to:

We also recommend asitop for monitoring CPU, GPU, and memory usage on Apple Silicon in real time. Note that it currently has a small bug when monitoring M2 and M3 series chips, so keep an eye on the project for fixes.

Meta Introduces Relightable Gaussian Codec Avatars for Real-Time VR Head Models

Keywords: Head Avatar, Relighting, Gaussian Splatting, Codec Avatar

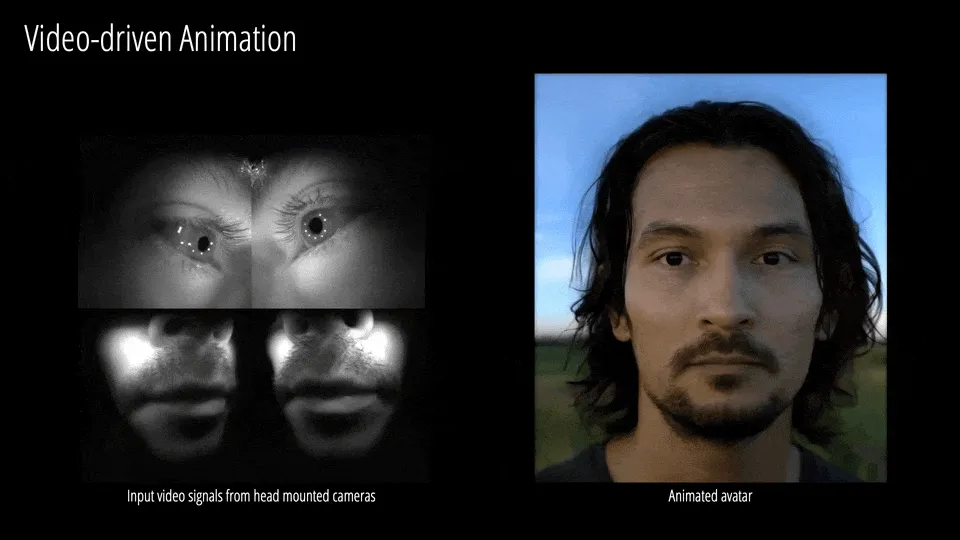

To make MR headsets more immersive, both Apple Vision Pro’s Persona and Meta’s Codec Avatar use the device’s cameras to capture the user’s facial expressions in real time and drive a realistic head model. Persona already runs and renders in real time on the headset, while Codec Avatar reconstructs finer detail but needs substantial computing power.

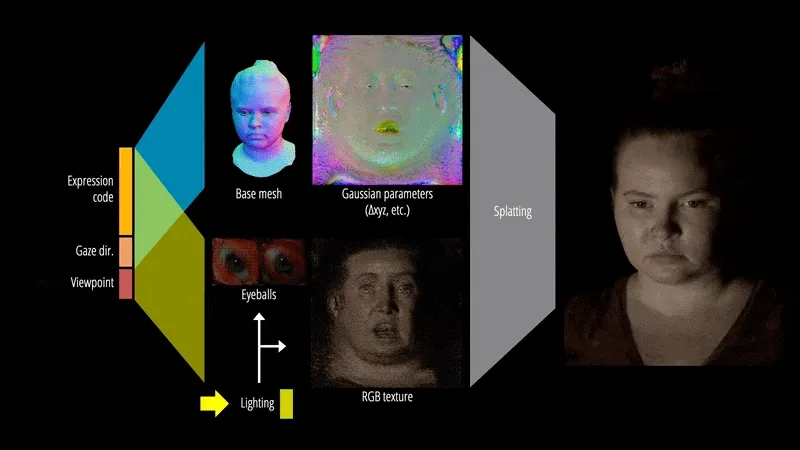

A few days ago, Meta announced new work that uses Gaussian Splatting (GSplats) to accelerate rendering, the most time-consuming step in Codec Avatar, ultimately achieving real-time display of high-fidelity head models in VR.

Unlike general GSplats scenes, human heads can be standardized, since facial features sit in similar positions. Meta’s work therefore parameterizes the Gaussians and their radiance transfer functions on a standardized head UV map (the flattened head UV parameterization shown above) and combines Gaussian Splatting with a precomputation-based rendering process, achieving realistic head rendering that can be relit.

Even with this speed-up, many challenges remain. For instance, capturing head reconstruction data requires hundreds of synchronized cameras and a controllable LED lighting environment, and real-time display in VR usually still requires streaming from a PC-class GPU.
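The relighting idea described above can be illustrated with classic precomputed radiance transfer (PRT): if each Gaussian stores a transfer vector in a spherical-harmonics (SH) basis and the environment light is projected onto the same basis, diffuse outgoing radiance reduces to a dot product, so relighting only means swapping in new light coefficients. The Python sketch below assumes a single color channel, 4 SH coefficients (bands 0 and 1), and made-up transfer and lighting values; it is a generic PRT illustration that ignores view-dependent (specular) effects, not Meta’s implementation.

```python
from typing import List, Sequence


def radiance(transfer: Sequence[float], light: Sequence[float]) -> float:
    """Diffuse outgoing radiance: dot product of SH transfer and SH lighting."""
    if len(transfer) != len(light):
        raise ValueError("transfer and light must use the same number of SH coefficients")
    return sum(t * l for t, l in zip(transfer, light))


def relight(transfers: List[Sequence[float]], light: Sequence[float]) -> List[float]:
    """Shade every Gaussian under a new environment; clamp negatives caused by SH ringing."""
    return [max(0.0, radiance(t, light)) for t in transfers]


# Hypothetical per-Gaussian transfer vectors (normally precomputed or learned).
transfers = [
    [1.0, 0.0, 0.0, 0.0],  # depends only on the constant (band-0) term of the light
    [0.5, 0.5, 0.0, 0.0],  # also depends on the first band-1 light coefficient
]

# Two hypothetical environments projected onto the same 4 SH coefficients.
studio = [2.0, 0.0, 0.0, 0.0]
sunset = [1.0, 1.0, 0.0, 0.0]

if __name__ == "__main__":
    print("studio:", relight(transfers, studio))
    print("sunset:", relight(transfers, sunset))
```

The transfer vectors are computed once, and each new lighting environment costs only one short dot product per Gaussian, which is what makes relighting cheap enough for real time.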

Ideas

AI + XR = A Door to Anywhere?

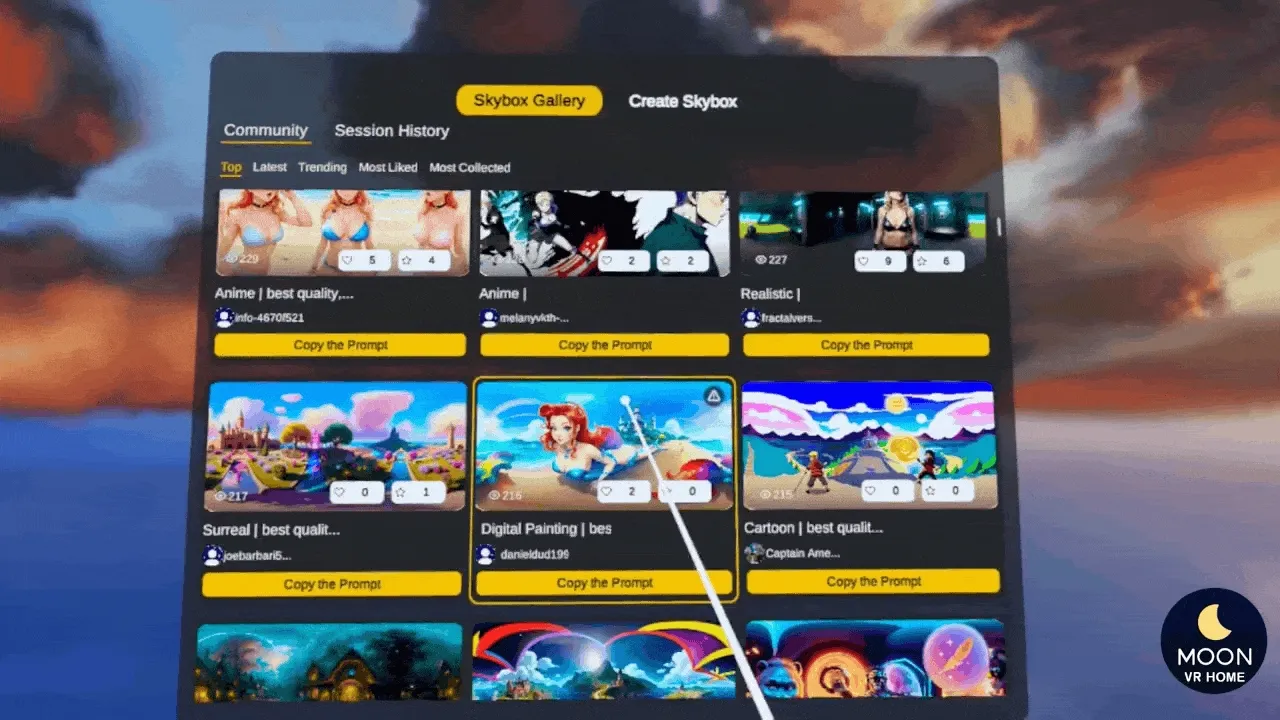

Keywords: Quest, MR, MoonVR, Skybox

Anyone who has read Doraemon will remember the manga’s “Anywhere Door”, a door that opens onto other worlds: step through it, and you can arrive almost anywhere.

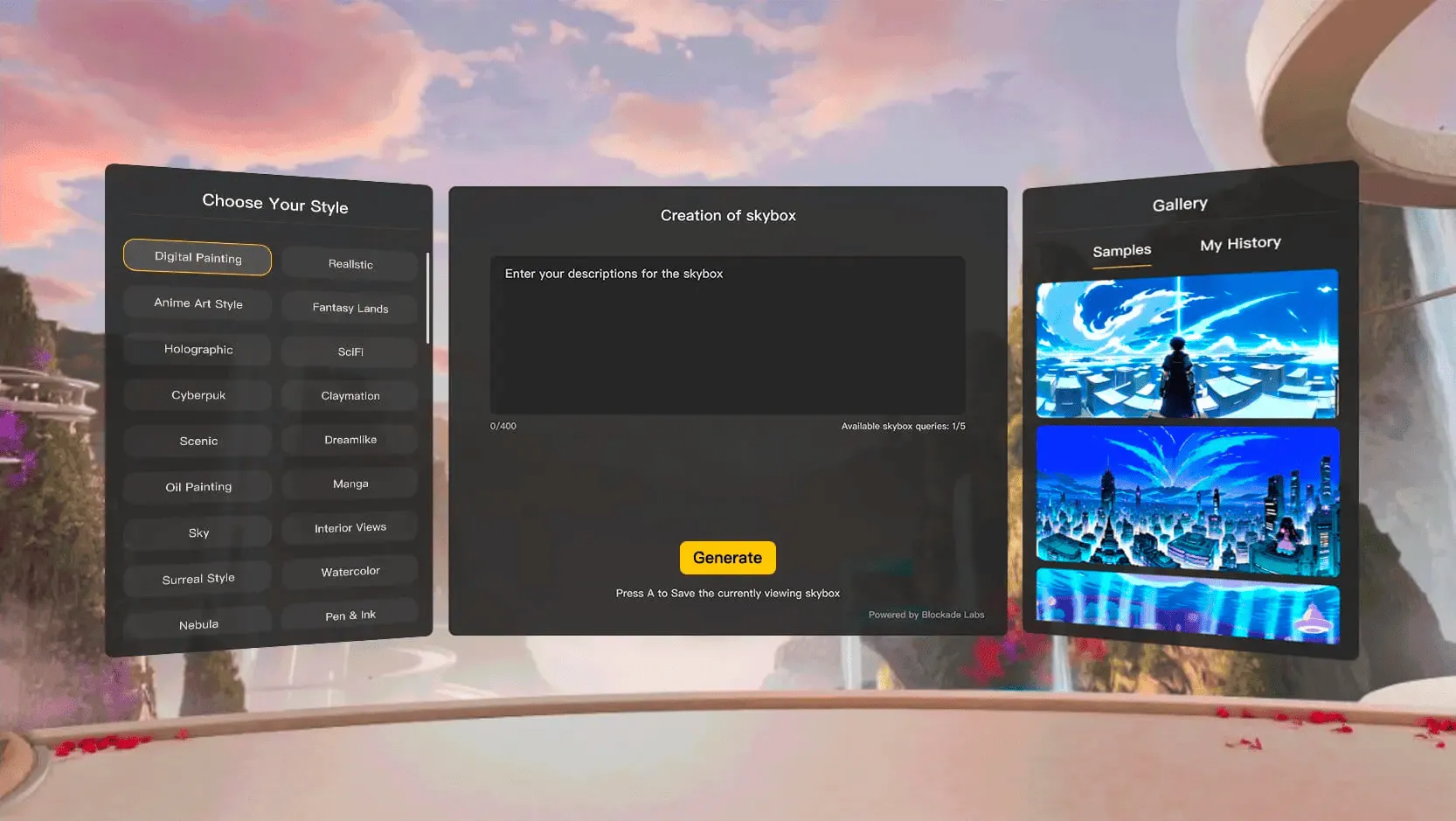

When we covered SkyboxLab in Issue 003, we wondered whether this technology could be brought into the XR world.

Now MoonVR has brought us a practical product along these lines: AI Skybox Generator.

It’s a Quest app that generates skyboxes in real time from AI prompts. Besides writing your own prompts, you can pick your favorites from the large collection created by other users.

Thanks to the Custom Skybox feature added in the Quest v54 update, you can also download these skyboxes to your Quest and use them as the background of your home environment.

It’s worth mentioning that the MoonVR team is actively working on porting this app to Apple Vision Pro. You can see their current progress on Reddit.

Unmissable Apps on Quest

Keywords: Quest, Official Demos, Free Apps

With Black Friday sales and a growing user base, more and more people are buying Quest devices. However, you may find that some interesting apps and games are either expensive (and you have to buy them again even if you already own them on another platform) or hard to find. To keep your Quest from gathering dust too soon, here are a few official apps we recommend. Their flows are fairly simple, but each is well made and showcases a new feature, so they are well worth trying. Don’t miss out!

summary: An official experience set in a small house full of toy boxes, where a robot guide becomes your new friend and plays with you. It doesn’t support hand gestures, but the object interactions are creatively designed.

Compatible Devices: All Quest devices, no internet connection required during use.

summary: Mainly a reference for developers, showcasing samples built with the Interaction SDK, which lets developers build high-quality VR and MR interactions more quickly and easily. The app gives a quick overview of the interactions the SDK supports, such as poking, hand grab, touch grab, ray, distance grab, and pose recognition.

Compatible Devices: All Quest devices, supports hand gestures, no internet connection required during use.

summary: The first official hand gesture interaction demo released after the Quest Interaction SDK. It has four tasks, ranging from building a robotic glove and playing a shooting game to object interactions, teleportation, and puzzle solving. This demo truly shows the charm of hand gesture interaction.

Compatible Devices: Quest 2 and later devices, supports hand gestures, no internet connection required during use.

summary: Interact with Oppy using voice and gestures. Make Oppy jump, come closer, or just say hello. Use a magic flashlight to find energy orbs in your room and feed them to Oppy so she can go home. Although it lasts only 5 minutes, it’s a magical MR experience!

Compatible Devices: All Quest devices, supports hand gestures, supports Passthrough, requires an internet connection.

summary: Don’t miss out if you love rhythm games! Conduct an orchestra to play epic symphonies in an opera house. (Holding a pen or a chopstick can unlock the advanced conductor experience, haha.)

Compatible Devices: All Quest devices, supports hand gestures, no internet connection needed.

summary: Finally, an MR game that has been talked about a lot. You help a group of cute creatures that crash-landed in your room get home by shooting to help them into a lifeboat before time runs out. Missed shots even break holes in the walls of your room, a genuinely fun blend of virtual and real gameplay. At Meta’s physical stores in Japan, it is one of the MR games the staff recommend.

Compatible Devices: Quest 3, supports Passthrough, no internet connection needed.

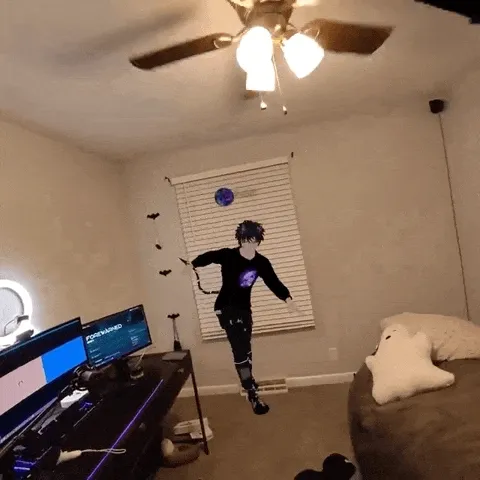

Using Passthrough AR to Meet from Afar

Keywords: Passthrough AR, VRChat

Twitter user ShodahVR used Quest 3’s Passthrough AR feature to create a fun demo that brings a faraway friend into their own space. To do this, ShodahVR first enabled Virtual Desktop’s passthrough feature and then entered a VRChat world named greenscreen, overlaying the virtual world onto the real one.
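We don’t know exactly how Virtual Desktop composites the greenscreen world, but the usual technique behind this kind of effect is chroma keying: wherever the rendered VR frame is close to the key color, the passthrough camera image shows through instead. Below is a minimal Python sketch of per-pixel chroma keying, assuming a pure green key and hypothetical threshold and softness values.

```python
import math
from typing import Tuple

RGB = Tuple[int, int, int]


def key_alpha(pixel: RGB, key: RGB = (0, 255, 0),
              threshold: float = 60.0, softness: float = 40.0) -> float:
    """Opacity of the virtual pixel: 0 near the key color, 1 far from it, linear ramp between."""
    distance = math.dist(pixel, key)  # Euclidean distance in RGB space
    if distance <= threshold:
        return 0.0
    if distance >= threshold + softness:
        return 1.0
    return (distance - threshold) / softness


def composite(virtual: RGB, passthrough: RGB, **kwargs) -> RGB:
    """Blend the rendered VR pixel over the passthrough camera pixel (hypothetical pipeline)."""
    a = key_alpha(virtual, **kwargs)
    return tuple(round(a * v + (1.0 - a) * p) for v, p in zip(virtual, passthrough))


if __name__ == "__main__":
    print(composite((0, 255, 0), (10, 20, 30)))     # green backdrop: real world shows through
    print(composite((200, 50, 50), (10, 20, 30)))   # avatar pixel: stays virtual
```

The soft ramp between `threshold` and `threshold + softness` avoids hard, flickering edges around the keyed-out area.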

Tools

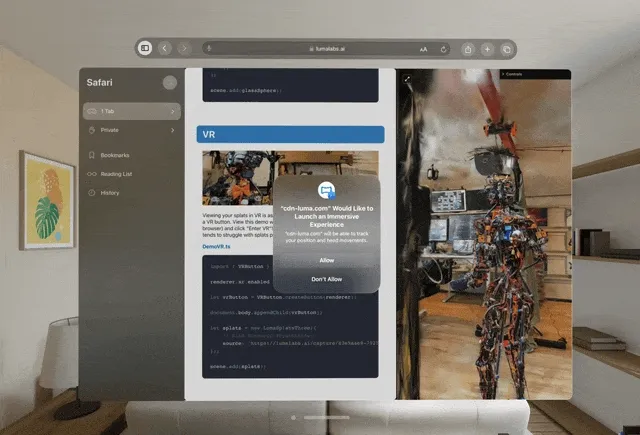

Evolution of the Gaussian Splatting Web App: Special Effects & WebXR

Keywords: Gaussian Splatting, WebGL, WebXR

Luma AI recently released the Luma WebGL Library, which integrates Gaussian Splatting rendering into the three.js rendering pipeline, making it easy to share Luma splats reconstructions on the web and add fun effects to them.

For example, you can add a fog effect to a reconstructed Hollywood scene.

You can also render a Luma splats scene into a cubemap and use it as the HDRI environment lighting of a three.js scene (a highly reflective spaceship in the scene then reflects the outline of the snow-capped mountains).

We can even hook into the vertex and fragment shaders of Luma splats with custom shader code to create unique animated effects, such as making an entire mountain range ripple like a sine wave.
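A sine-wave ripple like this comes down to a per-splat displacement evaluated every frame in the vertex stage. The Python sketch below shows only that math, assuming the effect offsets each splat center vertically by A·sin(kx − ωt). It does not reproduce the Luma WebGL Library’s actual shader-hook API, and the parameter names and defaults are our own.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def ripple(center: Vec3, t: float, amplitude: float = 0.5,
           wavelength: float = 10.0, omega: float = 2.0) -> Vec3:
    """Offset a splat center along y with a wave traveling along x.

    amplitude: peak displacement A (scene units)
    wavelength: distance between wave crests (scene units)
    omega: angular frequency in radians per second
    """
    x, y, z = center
    k = 2.0 * math.pi / wavelength  # wave number
    dy = amplitude * math.sin(k * x - omega * t)
    return (x, y + dy, z)


def animate(centers: List[Vec3], t: float, **wave) -> List[Vec3]:
    """Apply the same ripple to every splat center at time t (seconds)."""
    return [ripple(c, t, **wave) for c in centers]


if __name__ == "__main__":
    mountain = [(float(x), 0.0, 0.0) for x in range(0, 11, 2)]
    for t in (0.0, 0.5):
        print(t, [round(c[1], 3) for c in animate(mountain, t)])
```

On the GPU the same expression would run once per splat in parallel, with `t` passed in as a uniform that advances each frame.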

It’s worth noting that Luma splats also support rendering on WebXR, meaning we can experience three-dimensional Gaussian Splatting on Quest, PICO, Vision Pro, and even the Leia holographic display.

If you don’t have any of these devices, you can still preview Luma splats immersively in Safari in the Vision Pro simulator.

Note: First go to Settings > Apps > Safari, scroll to Advanced at the bottom, and enable the WebXR-related options under Feature Flags.

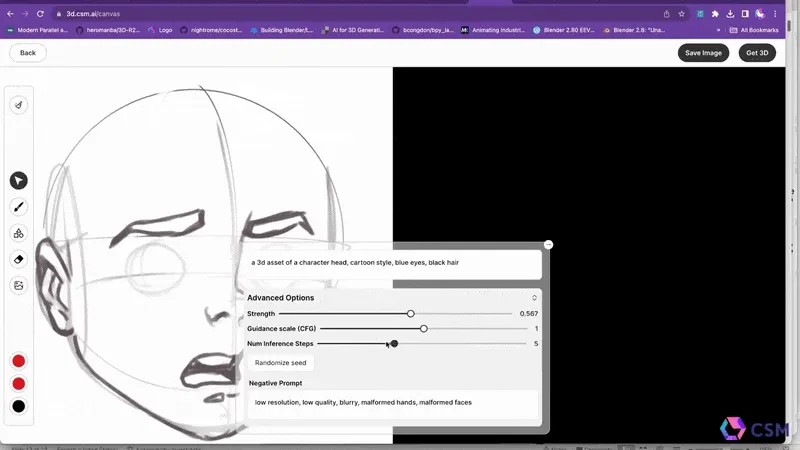

Generating 3D Models from 2D Sketches

Keywords: Sketch-to-3D, AI, 3D Assets Generation

Remember Issue 12, where we introduced CSM.ai’s single-image 3D model generation and its real-time AI painting tool? CSM.ai has now combined the two: on the web, users can turn a 2D sketch plus a text description directly into an object with a clean background, and then generate a 3D model from it.

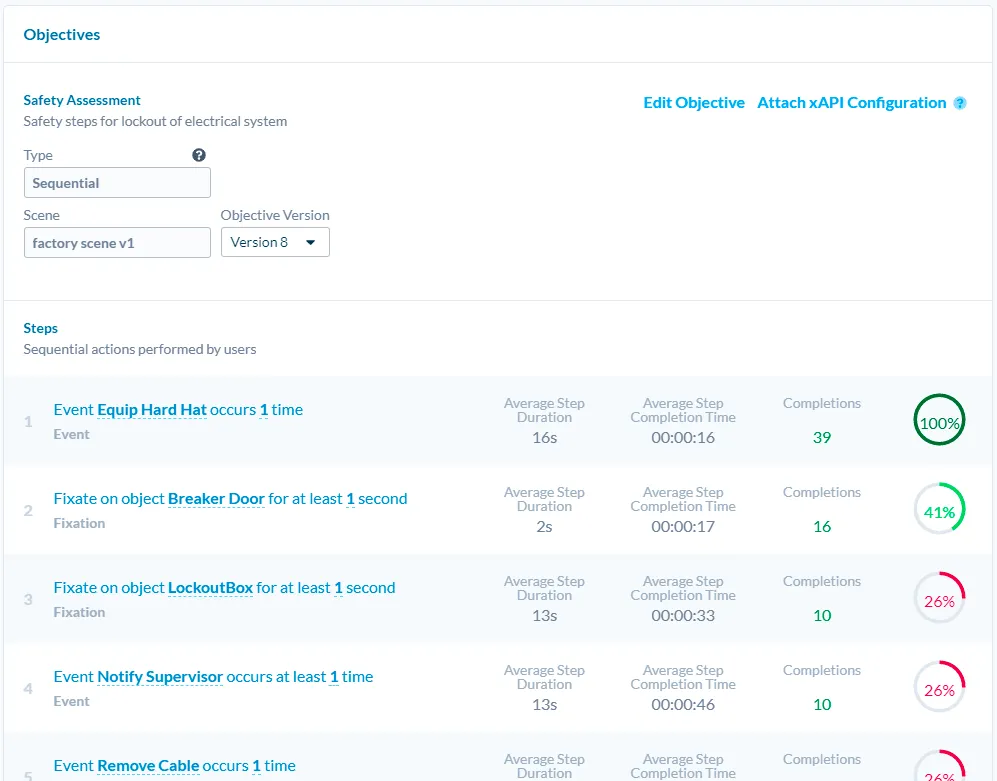

Cognitive3D—A User Analytics Tool in XR

Keywords: 3D Analytics, Unity, Cognitive3D

In mobile apps, many products use tools like Google Mobile App Analytics to analyze user behavior and guide how they iterate on their apps.

So, how should the same be done in VR/AR?

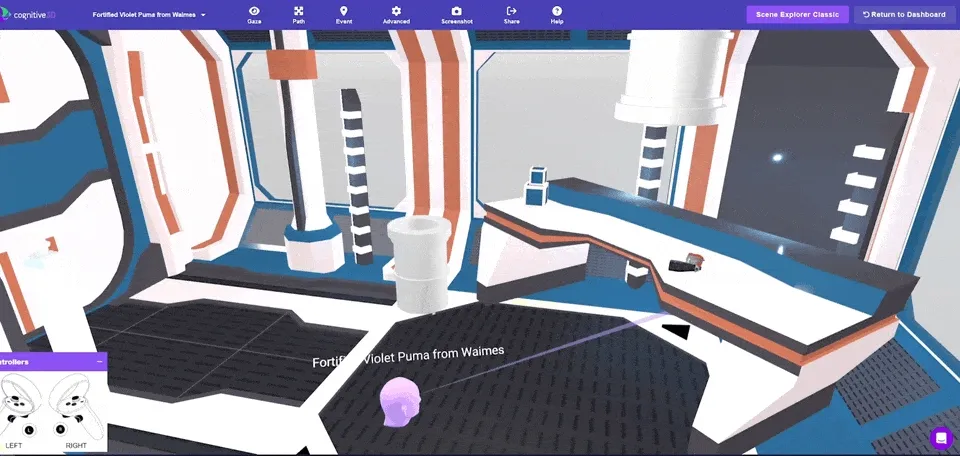

Meet the star of this segment, Cognitive3D: a 3D analytics platform that measures user behavior in VR/AR. It can be integrated into Unity, Unreal, and C++ projects.

Integrating Cognitive3D is very straightforward. Taking a Unity project as an example: import the required Unity package, enter your Developer Key, drag the Camera and the Left/Right Controllers from the Hierarchy window into Cognitive3D’s Setup window, and upload the project’s geometry. That completes the basic setup.

After that, whenever users play on a VR device, their sessions are recorded and uploaded to Cognitive3D’s backend, where every user action can be replayed (once you see the complete scene in a replay, it’s clear why we uploaded the geometry earlier 😉).

Beyond visualization, Cognitive3D offers event reporting and the other features common to analytics platforms. You can even define custom combinations of user behaviors (called Objectives in Cognitive3D) and check on the web whether users have achieved them.
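Conceptually, an objective made of several behaviors can be checked by testing whether a session’s events contain the required steps in order. The Python sketch below illustrates that idea with a hypothetical `Event` type and made-up event names. It is not Cognitive3D’s SDK or API, and it does not reflect how Cognitive3D actually evaluates Objectives.

```python
from dataclasses import dataclass
from typing import Iterable, List, Sequence


@dataclass(frozen=True)
class Event:
    """Hypothetical analytics event: a name plus a session timestamp in seconds."""
    name: str
    timestamp: float


def achieved(session: Iterable[Event], steps: Sequence[str]) -> bool:
    """True if every step appears in the session, in order (other events may occur in between)."""
    remaining = iter(sorted(session, key=lambda e: e.timestamp))
    # Each any() call consumes the shared iterator up to the matching event,
    # so later steps can only match events that come after earlier ones.
    return all(any(e.name == step for e in remaining) for step in steps)


def completion_rate(sessions: List[List[Event]], steps: Sequence[str]) -> float:
    """Fraction of sessions that achieved the objective (0.0 if there are no sessions)."""
    if not sessions:
        return 0.0
    return sum(achieved(s, steps) for s in sessions) / len(sessions)


objective = ["grab_key", "open_door"]  # made-up event names
sessions = [
    [Event("grab_key", 1.0), Event("look_around", 2.0), Event("open_door", 3.5)],
    [Event("open_door", 1.0), Event("grab_key", 2.0)],
]

if __name__ == "__main__":
    print(completion_rate(sessions, objective))
```

The second session fails because the door was opened before the key was grabbed, which is exactly the kind of ordering a behavior-combination goal needs to capture.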

For a quick, intuitive overview of Cognitive3D, check out this video by Valem Tutorials. For more detailed usage, see Cognitive3D’s official documentation.

Video & Code

SwiftUI + Metal - Creating Special Effects with Your Own Shaders

Keywords: SwiftUI, Metal, Shader

This topic was contributed by the community. Thanks to XR Chestnut for submitting it!

Shaders, the backbone behind all kinds of magical effects in the gaming world, might seem unfamiliar to many client-side developers.

That may start to change now that SwiftUI can use Metal shaders directly. In this video, Paul walks us step by step through creating basic shaders in Metal and using them in SwiftUI. At the end, he also recommends The Book of Shaders and Shadertoy: the former explains shaders systematically and in depth, while the latter offers beautiful shader effects along with their source code.

Paul has also kindly released the Inferno project, a collection of shaders designed for SwiftUI, which we recommend exploring alongside the video.
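Conceptually, a color-effect shader like those covered in the video is a small function that runs once for every pixel. In SwiftUI it is written in Metal as a `[[ stitchable ]]` function that receives the pixel’s position and current color and returns a new color, and it is applied with the `colorEffect` modifier. To show that model without a Metal toolchain, the Python sketch below emulates it on a tiny image. The checkerboard effect and its parameters are our own example and are not taken from the video or Inferno.

```python
import math
from typing import Callable, List, Tuple

Color = Tuple[float, float, float, float]        # RGBA, each channel in [0, 1]
Shader = Callable[[float, float, Color], Color]  # (x, y, color) -> new color


def checkerboard(x: float, y: float, color: Color,
                 size: float = 10.0, fill: Color = (0.0, 0.0, 0.0, 1.0)) -> Color:
    """Keep the original color on even cells and replace it with `fill` on odd cells."""
    cell = math.floor(x / size) + math.floor(y / size)
    return color if cell % 2 == 0 else fill


def run_shader(image: List[List[Color]], shader: Shader) -> List[List[Color]]:
    """Call the shader once per pixel; a GPU runs these calls in parallel, here they are sequential."""
    return [[shader(float(x), float(y), px) for x, px in enumerate(row)]
            for y, row in enumerate(image)]


white: Color = (1.0, 1.0, 1.0, 1.0)
image = [[white] * 4 for _ in range(4)]
result = run_shader(image, lambda x, y, c: checkerboard(x, y, c, size=2.0))

if __name__ == "__main__":
    for row in result:
        print("".join("#" if px != white else "." for px in row))
```

Because each output pixel depends only on its own inputs, the same function can be evaluated for millions of pixels at once, which is what makes shaders fast on the GPU.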

Small News

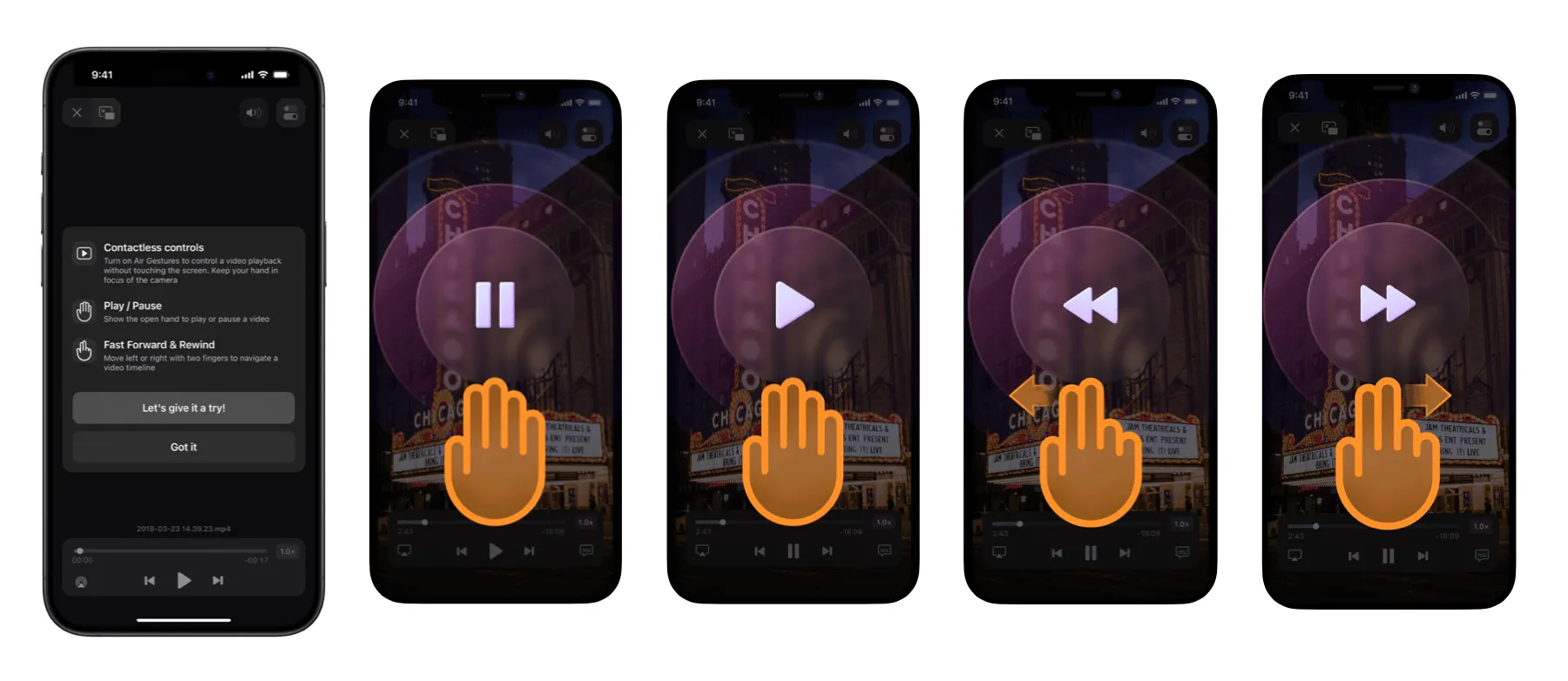

Wow! These Gesture Operations Have Been Realized!

Keywords: Gesture Operations, Gesture Screenshot, Gesture Pause/Play

As early as Issue 8, we imagined a screenshot gesture for Vision Pro. According to a post by user Luna, this gesture has now been implemented in Horizon!

Meanwhile, air gestures, previously seen on Huawei devices, are now supported by Readdle! While a video is playing in the app, you can pause or resume it by moving your hand toward the device, or fast-forward it by moving your hand left or right. All gesture recognition happens on the device. In our testing, the pause/resume gesture responded reliably, while the fast-forward gesture was noticeably less responsive.
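Readdle hasn’t described its implementation, but the basic idea behind air gestures like these can be sketched as classifying a short hand trajectory by its dominant motion: moving toward the device means play/pause, and moving sideways means seek. The Python sketch below assumes a hand tracker already provides (horizontal position, distance from the camera) samples in meters. The thresholds, the `seek_backward` gesture, and all other names are hypothetical.

```python
from typing import List, Optional, Tuple

# (horizontal position x, distance from camera z), both in meters (hypothetical tracker output)
Sample = Tuple[float, float]


def classify(track: List[Sample], min_push: float = 0.15,
             min_swipe: float = 0.20) -> Optional[str]:
    """Classify a hand trajectory by its dominant motion; return None if no gesture."""
    if len(track) < 2:
        return None
    dx = track[-1][0] - track[0][0]    # positive: the hand moved right
    push = track[0][1] - track[-1][1]  # positive: the hand moved closer
    if push >= min_push and push >= abs(dx):
        return "toggle_play"
    if abs(dx) >= min_swipe and abs(dx) > push:
        return "seek_forward" if dx > 0 else "seek_backward"
    return None


if __name__ == "__main__":
    print(classify([(0.0, 0.6), (0.01, 0.5), (0.02, 0.4)]))  # hand pushes toward the camera
    print(classify([(0.0, 0.5), (0.15, 0.5), (0.3, 0.5)]))   # hand swipes to the right
```

Requiring both a minimum travel distance and a dominant axis is one simple way to keep small, incidental hand movements from triggering gestures; tuning these thresholds is exactly where the sensitivity differences we noticed would come from.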

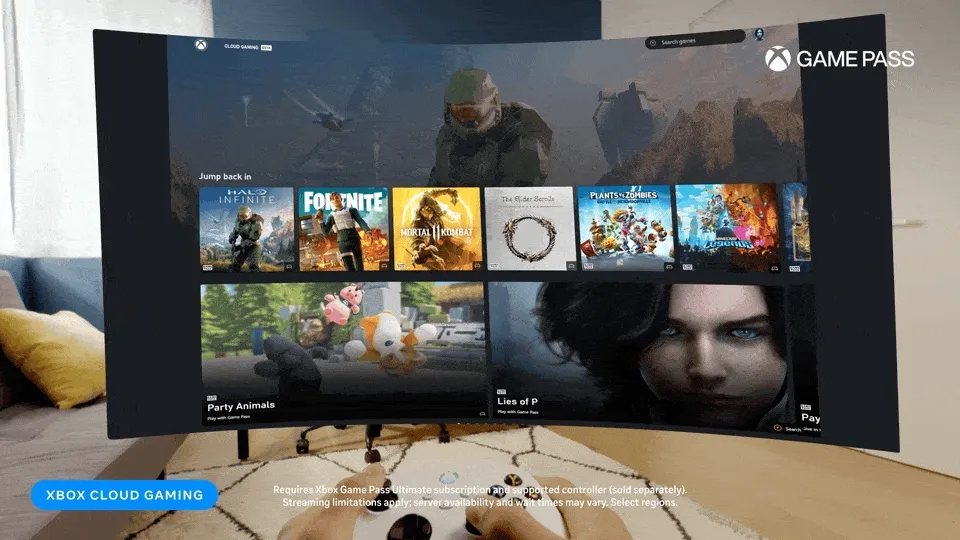

Xbox Cloud Gaming (beta) Lands on Quest

Keywords: Quest, Xbox, Microsoft, Cloud Gaming

Finally, you can play all kinds of AAA titles on Quest through Xbox Cloud Gaming. Since the service requires an Xbox Game Pass Ultimate subscription, you may want to start by trying Game Pass Ultimate.

According to Meta’s official statement, this feature is currently supported on Quest 2, Quest Pro, and Quest 3. As for controllers, it currently supports Xbox controllers, PS4 controllers, and Nintendo Switch Pro controllers, with plans to support PS5 controllers in the future.

If you’re a die-hard Xbox fan, then hurry to the Meta App Store to download Xbox Cloud Gaming (Beta)!

This Issue’s Contributors

| Contributor |
|---|
| Onee |
| Zion |
| ybbbbt |


XReality.Zone
