XR World Weekly 006

Highlights

- Pixar, Adobe, Apple, Autodesk and NVIDIA jointly formed the USD Alliance

- Developers’ thoughts on visionOS (Vision Pro Developer Hands-on / Vision Pro from a Developer’s Perspective)

- MirrorNeRF: Enabling NeRF to recognize mirrors

BigNews

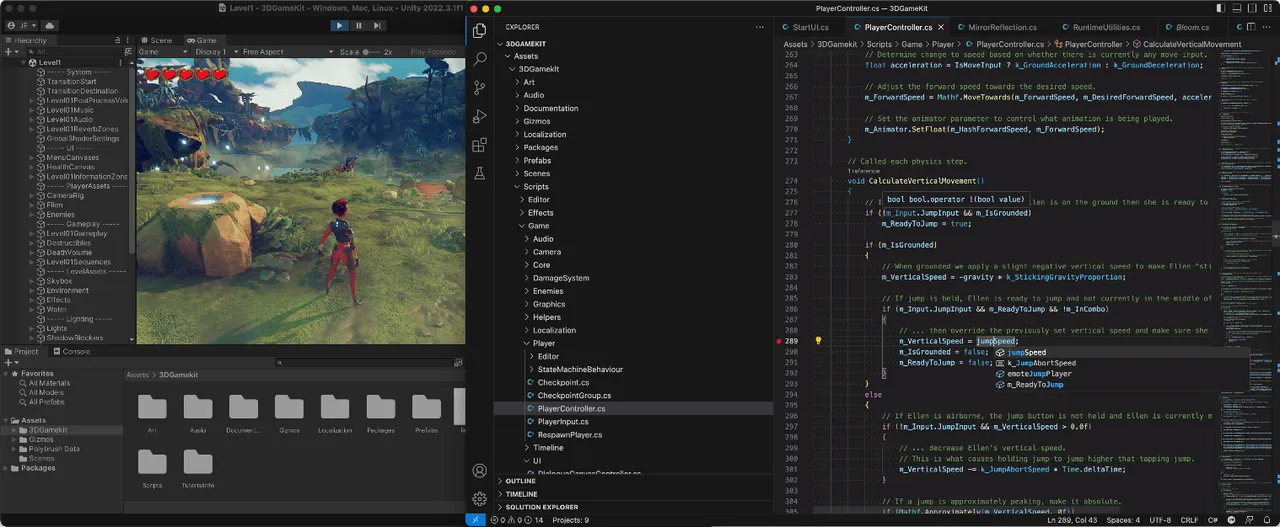

Microsoft released the official Unity Visual Studio Code plugin

Keywords: Unity, Visual Studio Code, plugin

If you are a fan of Visual Studio Code, you have probably been frustrated to find that debugging C# in it is an unpleasant process: you need to install and configure a lot of things, and you cannot get the out-of-the-box experience that Visual Studio offers. On top of that, breakpoint debugging, a critical feature, has become less and less usable as Unity’s official plugin aged.

Now the situation has finally improved. Microsoft has officially released a Visual Studio Code plugin named Unity (it feels as if the big brother could no longer stand by and came to help the little brother). With this plugin, you get C# environment setup, breakpoint debugging and other capabilities in one place. It is also great news for developers who rely on Copilot in Visual Studio Code (anyone who has used Code + Copilot knows what we mean).

Currently, to use this plugin, you need to:

- Use Unity 2021 or later

- Install and enable the Unity plugin in Visual Studio Code

- Use version 2.0.20 or later of the Visual Studio Editor package in Unity (make sure the version is correct; otherwise code completion and other features will not work when Visual Studio Code opens the project)

After you install the Unity plugin in Visual Studio Code, it automatically installs the required .NET components for you.

Tips

If installation fails because the .NET packages did not finish downloading within the 2-minute limit due to network problems, we recommend increasing the timeout as vscode-dotnet-runtime suggests and checking your network connection.

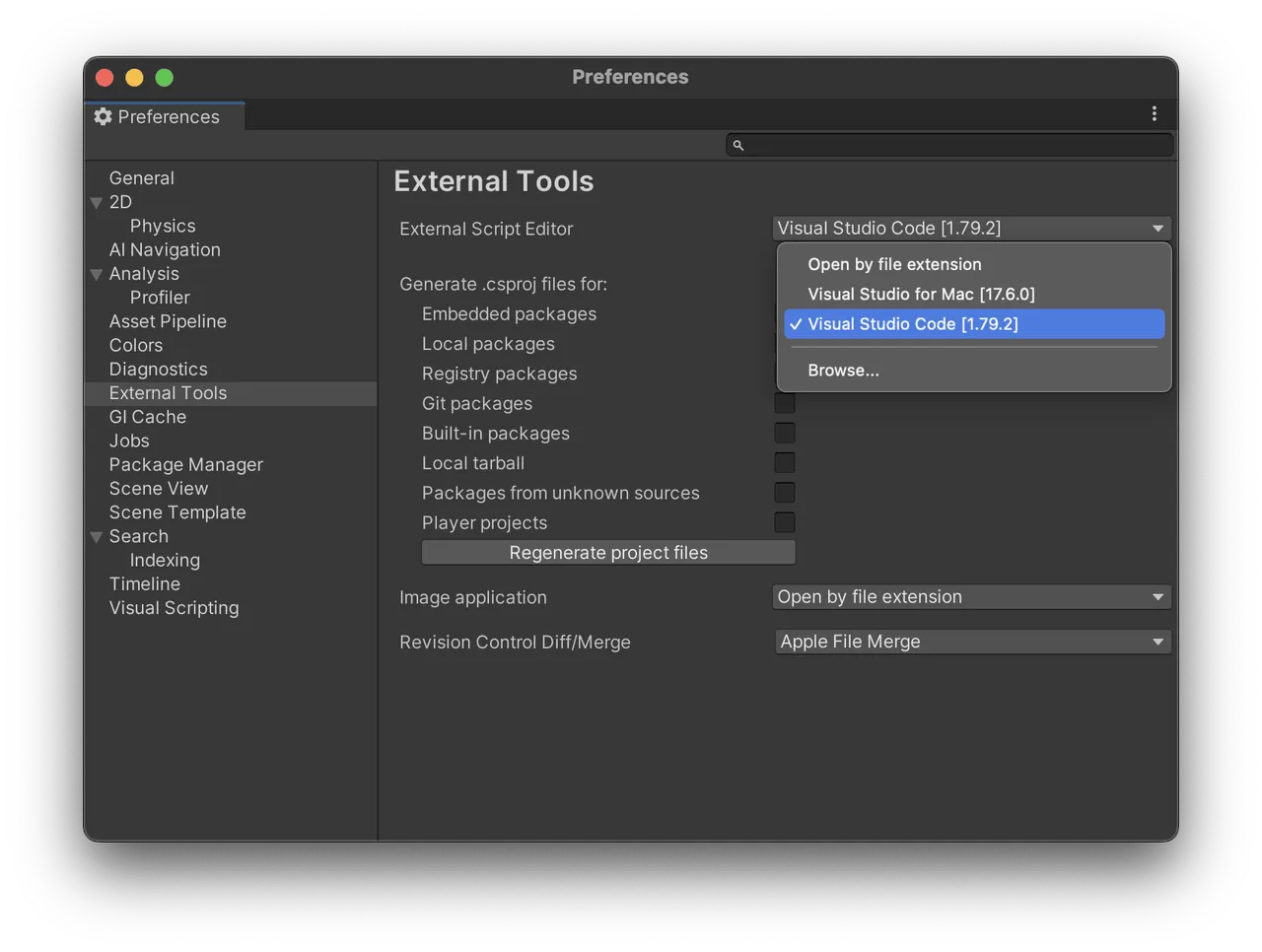

After .NET is installed, make sure to set Script Editor under Unity’s Preferences -> External Tools to Visual Studio Code. Double-clicking a script file will then open the project’s C# scripts in Visual Studio Code.

Breakpoint debugging is also simple: in the Visual Studio Code command palette, select Attach Unity Debugger, pick the port of the Unity Editor instance running in the foreground, then press Play in the Editor, and execution will pause at your breakpoints.

If you run into problems with this plugin or have feature requests, Microsoft welcomes you to file issues on GitHub.

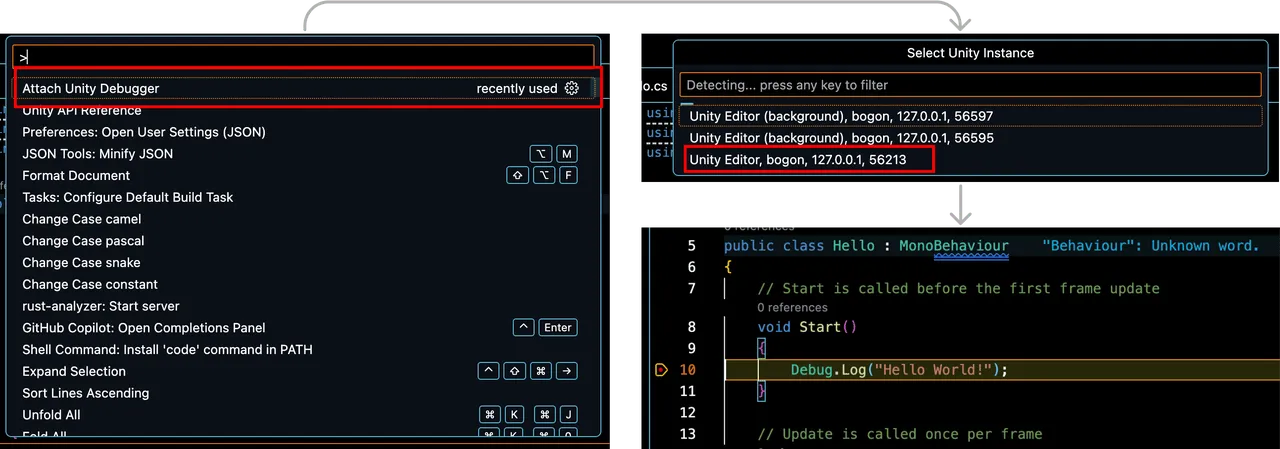

Apple Vision Pro developer lab in Shanghai opens for hands-on developer testing from Aug 15

Keywords: Apple Vision Pro, lab

Before Apple Vision Pro officially launches, besides developing with the simulator, developers can apply for a developer kit or apply to visit one of the developer labs in Cupertino, London, Munich, Shanghai, Singapore and Tokyo to test on a real device.

The booking schedules for these labs are listed on the Apple Developer website. The Shanghai lab began hosting developers for on-device testing on Aug 15.

To qualify for a lab session, you first need to enroll in the Apple Developer Program (as an individual or an organization), then sign in to the Apple Developer website with your Apple ID and fill out the application. The application mainly asks for basic information about the visionOS app you are building and screenshots of it running in the simulator.

In addition, at most two members of the same team can visit the lab, so Apple recommends that one designer and one engineer attend together. Both members need to fill out the application on the developer website and give their teammate’s name.

Pixar, Adobe, Apple, Autodesk, NVIDIA jointly formed the USD Alliance

Keywords: OpenUSD, 3D model format

AOUSD, short for Alliance for OpenUSD, is an open-source, non-profit organization with Pixar, Adobe, Apple, Autodesk and NVIDIA as founding members, and Unity and Unreal as general members. Its goal is to promote interoperability of 3D content through OpenUSD, and the organization operates under the open-source framework of the Joint Development Foundation (JDF), part of the Linux Foundation.

On Hacker News, this story at one point drew even more attention than the news about room-temperature superconductivity.

As the saying goes, outsiders watch the spectacle while insiders look for the substance. So what does the founding of AOUSD really mean, and why did it get so much attention on Hacker News? To answer that, we first need to briefly review the state of 3D content before AOUSD.

Note:

The 3D content discussed here refers to 3D model files created with DCC (Digital Content Creation) tools such as Maya and 3ds Max.

Unlike images, 3D content has no format like PNG or JPG that is widely used across fields. The reason is that animation, film and television, games, industrial modeling, browsers, mobile apps and other fields each developed a need for 3D models at different times, and for efficiency and business reasons, the one or two leading companies in each field created their own 3D formats. Some examples:

- OBJ: A 3D format originally designed for Wavefront’s Advanced Visualizer, born in the 1990s. Most DCC software supports OBJ, but because its feature set is so limited (e.g. no support for animation, skinning or scene hierarchy), it is only suitable for simple scenarios.

- FBX: Autodesk’s format, widely used in modeling and game development. Unity and Unreal, the two major game engines, support it natively.

- STL: A format developed by 3D Systems, mainly used for 3D printing; it stores only triangle geometry, which is why it does not support materials.

- glTF: An open standard from the Khronos Group, currently used mainly on the web and in Google’s Android ecosystem.

- USD: A 3D format created by Pixar, open sourced in 2016 (and thus often referred to as OpenUSD), currently widely used in animation and AR application development in Apple’s ecosystem.
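To make the STL “no materials” point concrete, here is a minimal sketch of the binary STL layout (STL also has an ASCII variant). Each triangle record holds only a normal, three vertices and a 2-byte attribute field, so there is simply nowhere to put a material. The helpers write_stl and read_stl are our own illustrative names, not a library API.

```python
import struct

# Binary STL: 80-byte header, little-endian uint32 triangle count, then one
# 50-byte record per triangle: normal (3 x float32), three vertices
# (9 x float32) and a uint16 "attribute byte count". No material fields exist.
TRIANGLE = struct.Struct("<12fH")  # "<" means no padding: 12 * 4 + 2 = 50 bytes


def write_stl(triangles, header=b"sketch"):
    """Serialize [(normal, v0, v1, v2), ...] into binary STL bytes."""
    out = bytearray(header.ljust(80, b"\0")[:80])
    out += struct.pack("<I", len(triangles))
    for normal, v0, v1, v2 in triangles:
        out += TRIANGLE.pack(*normal, *v0, *v1, *v2, 0)
    return bytes(out)


def read_stl(data):
    """Parse binary STL bytes back into [(normal, v0, v1, v2), ...]."""
    (count,) = struct.unpack_from("<I", data, 80)
    triangles = []
    for i in range(count):
        values = TRIANGLE.unpack_from(data, 84 + i * TRIANGLE.size)
        floats = values[:12]  # drop the attribute byte count
        triangles.append(tuple(tuple(floats[j:j + 3]) for j in range(0, 12, 3)))
    return triangles


tri = ((0.0, 0.0, 1.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
blob = write_stl([tri])
print(len(blob))                # 134 (80 + 4 + 50)
print(read_stl(blob) == [tri])  # True
```

Compare this with USD or glTF, where a mesh can reference materials, textures and a scene hierarchy.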

The problem with so many formats is that each one is usable only in specific fields. For example, although FBX works well in game engines like Unity and Unreal, developers in the Apple ecosystem cannot use it directly, because Apple has neither the intention nor the incentive to support it.

If you are an iOS developer and find a great-looking 3D model you want to use in your app, only to discover that it is available only in FBX format, at this point you can basically only admire it and sigh. (By contrast, most images come in PNG or JPG, which any app or game developer can easily use.)

The establishment of AOUSD this time seems to bring a ray of hope for the unification and standardization of 3D content.

Previously, although USD had been open-sourced and heavily promoted and used by companies like Apple and NVIDIA, it was still an open-source project released by Pixar. In principle, its direction was determined mainly by Pixar or the open-source community, which is an unstable factor for commercial companies like Apple and NVIDIA that depend on it.

Under AOUSD’s current membership agreement, founding members pay an annual fee of $100,000 and must also be members of the Linux Foundation (whose membership for companies and organizations has three tiers, with annual fees of $500,000, $25,000 and $5,000 from highest to lowest), while general members pay an annual fee of $10,000.
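To put the fee structure above into numbers, here is a small back-of-the-envelope calculation. It assumes, since the article does not say, that any Linux Foundation tier satisfies the founding-member requirement; the tier figures are the ones quoted above.

```python
# Annual fees in USD, as quoted above.
AOUSD_FOUNDING_FEE = 100_000
AOUSD_GENERAL_FEE = 10_000
# Linux Foundation membership tiers, highest to lowest (as quoted above).
LF_TIERS = [500_000, 25_000, 5_000]


def founding_member_cost_range():
    """Return (min, max) yearly outlay for a founding member.

    Assumption: any Linux Foundation tier satisfies the requirement.
    """
    return (AOUSD_FOUNDING_FEE + min(LF_TIERS), AOUSD_FOUNDING_FEE + max(LF_TIERS))


low, high = founding_member_cost_range()
print(low, high)                # 105000 600000
print(low / AOUSD_GENERAL_FEE)  # 10.5
```

Under that assumption, even the cheapest founding seat costs more than ten times a general membership each year.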

After paying the fee, founding and general members can influence the direction of OpenUSD to different degrees under AOUSD’s working guidelines. In a sense, this resembles companies forming a joint venture: a shared interest group lets commercial companies advance a technology or cause together more effectively.

In fact, alliances of commercial companies like this are more likely to produce a de facto industry standard. Historically, the HTML5 specification developed by WHATWG, a group formed by browser vendors, prevailed over the W3C’s effort and became the de facto HTML standard.

In addition, because the organization operates under the JDF framework, JDF can also help turn the OpenUSD specifications into international standards.

If you are also a practitioner frustrated by fragmented 3D formats and want to keep up with AOUSD, you can follow the discussions on AOUSD’s forum.

Meta Quest updated to v56

Keywords: SDK update, Quest

Meta Quest currently ships roughly one release per month. The recent v56 release includes several features we find interesting and want to share:

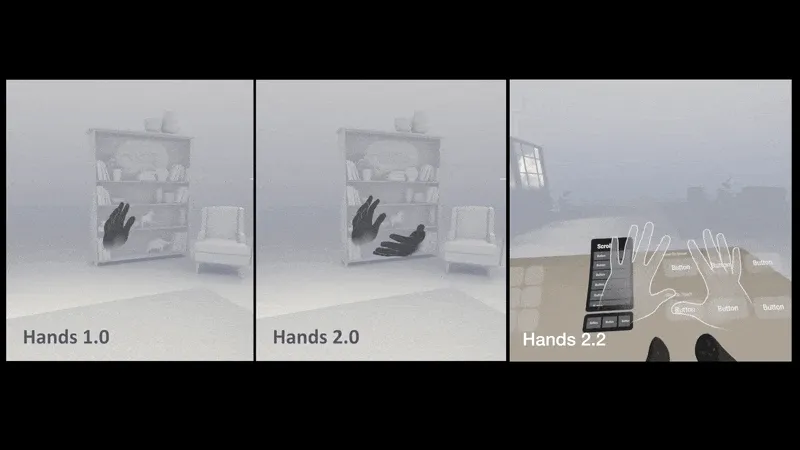

- Hand tracking upgraded to version 2.2: this version reduces hand-tracking latency by up to 40% in typical use and by up to 75% during fast hand motion, and makes tracking loss less likely when switching between hand tracking and controllers. You can try fast hand motion yourself in Litesport, VRWorkout, or Meta’s Move Fast demo app on App Lab (developers please note: at the time of writing, the Oculus Integration SDK is still at v55). The comparison below shows hand tracking across versions:

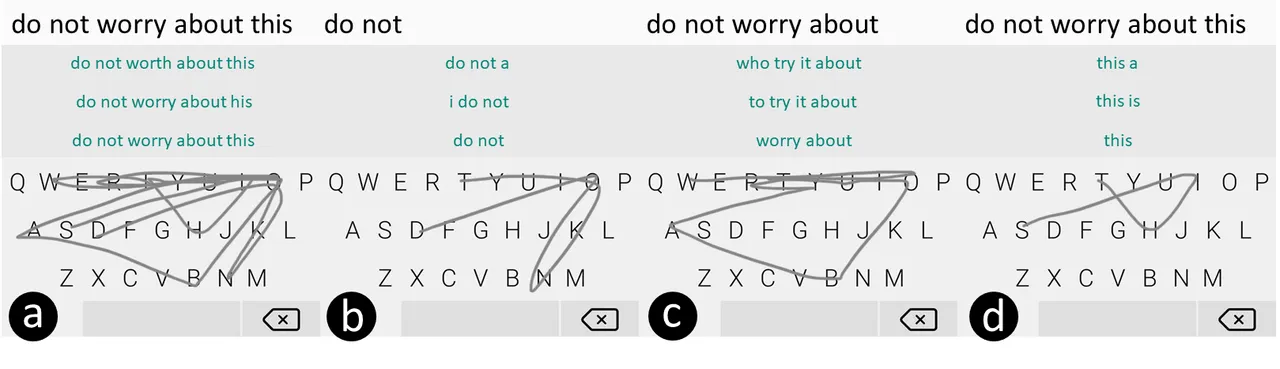

- Supports swipe keyboard input: Gesture Typing (also known as Swipe Typing) is a smartphone input method that first became popular on Android and has been available on iOS since iOS 13. Input methods in spatial computing are far less mature than QWERTY or 9-key keyboards on phones, because they inherently lack physical feedback and must cope with accuracy and robustness problems. Gesture Typing may be a very good spatial input method. However, Gesture Typing recognition on Quest is currently not very accurate, and it does not support entering multiple words in one gesture. (For multi-word input based on Gesture Typing, see the paper Phrase-Gesture Typing on Smartphones, as well as SwiftKey, which enables multi-word input by including the space key in the gesture.)
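How might a gesture keyboard map a continuous trace to a word? Meta has not documented its recognizer, so the following is only a simplified sketch of the template-matching idea used in gesture-typing research: build an ideal path through the key centers of each candidate word, resample both paths to the same number of evenly spaced points, and rank words by average point-to-point distance. The key layout, function names and sample trace are hypothetical; real recognizers also normalize scale and position and weigh candidates with a language model.

```python
import math

# Hypothetical key centers (x, y) for a staggered QWERTY layout, 1 unit per key.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
KEYS = {ch: (col + 0.5 * r, float(r)) for r, row in enumerate(ROWS) for col, ch in enumerate(row)}


def resample(path, n=32):
    """Resample a polyline to n points spaced evenly along its length."""
    seg = [math.dist(a, b) for a, b in zip(path, path[1:])]
    total = sum(seg)
    if total == 0:
        return [path[0]] * n
    out = []
    for i in range(n):
        target = total * i / (n - 1)
        j = 0
        # Walk along the segments until the target arc length falls inside one.
        while j < len(seg) - 1 and target > seg[j]:
            target -= seg[j]
            j += 1
        t = 0.0 if seg[j] == 0 else min(target / seg[j], 1.0)
        (x0, y0), (x1, y1) = path[j], path[j + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def word_path(word):
    """Ideal trace: straight lines through the key centers of each letter."""
    return [KEYS[ch] for ch in word]


def shape_distance(trace, word, n=32):
    """Mean distance between the resampled trace and the word template."""
    a, b = resample(trace, n), resample(word_path(word), n)
    return sum(math.dist(p, q) for p, q in zip(a, b)) / n


def recognize(trace, lexicon):
    """Return lexicon words ordered from best to worst shape match."""
    return sorted(lexicon, key=lambda w: shape_distance(trace, w))


# A slightly noisy swipe over h -> e -> l -> o.
trace = [(5.6, 1.1), (2.1, 0.1), (8.4, 1.0), (8.1, 0.05)]
print(recognize(trace, ["hello", "help", "hero", "jello"])[0])  # hello
```

Because each trace is compared with whole-word templates, entering several words in one stroke would need templates or segmentation for word sequences, which hints at why multi-word gesture input is harder.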

Below is a schematic of Gesture Typing for several consecutive English words:

Video

Developers’ thoughts on visionOS (Vision Pro Developer Hands-on / Vision Pro from a Developer’s Perspective)

Keywords: visionOS, developer ideas

At this year’s WWDC, besides inviting many media guests, Apple also invited many well-known developers to try the device on site. Perhaps because the media coverage was so overwhelming (after all, those outlets have tens of millions of followers), many videos from developers’ perspectives got little exposure. Fortunately, the Internet is open and equal, and with a little searching we can still find them!

For example, of the two videos recommended this time, Vision Pro Developer Hands-on was recorded by Malin and Jordi, well-known indie developers overseas (Jordi’s app Navi was also nominated for an Apple Design Award at WWDC22), while Vision Pro from a Developer’s Perspective was made by Paul, the instructor behind Hacking with Swift, a highly regarded tutorial site in the Apple ecosystem.

Compared with most review videos, these two focus more on thinking through the whole Apple Vision Pro experience from a developer’s perspective, and you will frequently hear technical terms and implementation ideas involving SwiftUI, RealityKit, SharePlay and more. Even better, these developers generously shared their ideas for visionOS development, including specific thoughts on how to bring their own apps to the new platform and many interaction details.

So these two videos are very inspiring for developers, and if you have time, we highly recommend watching them. If you don’t, we have summarized the points that inspired us most below!

- Malin and Jordi questioned the two 3D video samples Apple provided (one of a group of children singing happy birthday, the other of people chatting around a campfire). There is nothing wrong with the content itself; what puzzled them is whether people would really record 3D video on such occasions, which take place in public with many strangers around. And in real life, will people be that tolerant of someone wearing Apple Vision Pro? At least for now, anyone who wears one on the street for long will most likely be seen as an oddity. 3D video of cats and dogs at home may make more sense: at home you don’t have to worry about your image, and pets won’t mind if you wear a headset. What I find inspiring about this discussion is that, beyond the current concerns about shipments and use cases, people may still have psychological reservations about this unfamiliar device, which could become another obstacle for Apple Vision Pro.

- Designing the app experience brings many new challenges. Jordi raised a very interesting point. On traditional 2D devices, an app occupies the entire screen, and switching apps essentially means taking turns on a limited screen, which is easy to understand. On Apple Vision Pro, however, the canvas changes from a small electronic screen to the boundless real world, which works quite differently from screens on iOS and macOS. Will existing app switching and transition patterns still be good design? Immersive apps also puzzled Jordi: if a user is interrupted in an immersive app (for example, by jumping to another app) and then returns, how do we keep the experience immersive, or in other words, how do we restore the user’s flow to where it was before the interruption? I think this is not only a problem for developers; Apple may also need to provide more system-level capabilities in the future to improve the experience.

- The relatively thin set of development APIs in visionOS is deliberate on Apple’s part. Jordi believes the current, fairly limited capabilities of visionOS are intentional, and he used Widgets to illustrate. Widgets were not interactive at first, but under that constraint developers focused on how widgets present information and created many excellent works. Once the feature won over more and more users, Apple expanded what Widgets could do. He thinks this approach makes sense: Apple’s restraint with visionOS guides developers to understand spatial computing step by step, instead of opening every capability and tool at once for developers to play with, which could steer the whole ecosystem in the wrong direction.

- SharePlay will play a very special role in visionOS. Paul, Jordi and Malin all mentioned SharePlay’s potential on visionOS, so it deserves special attention. I am also very optimistic about this use case: for most people it will be a completely new experience, and all three described it as unprecedented. So building SharePlay features into your app in the future would be a good idea.

- visionOS development is very friendly to existing Apple developers. The three developers also noted that if you already use SwiftUI and follow the HIG (Human Interface Guidelines), your app will migrate very smoothly from other platforms to visionOS. That does not mean you can skip rethinking your app for this new device, though. For example, we usually center content, but in visionOS, where 3D assets may be nested in a 2D plane, you cannot assume that centering along the Z axis is correct, because it will cause the 3D asset to be occluded by the 2D plane. Beyond such examples, simply turning all content into 3D does not make an app adapted to Apple Vision Pro. Many apps can keep the design and interaction of the 2D era, which is actually not bad at all. Rather than blindly going 3D, think more about how users can use your app comfortably.

In addition, Malin & Jordi also shared app ideas they wanted to make:

Malin:

- Immersive: a multiplayer game in which you and your friends are dropped somewhere and must figure out where you are (inspired by geo-guessing games)

- Non-immersive: 3D jigsaw puzzle game centered around gravity

Jordi:

- Allergy tracking: highlight allergenic ingredients on product labels (though this may not be feasible, because developers cannot get camera access)

- Real-time subtitle translation to help hearing-impaired people: after putting on the headset, they can see what others are saying

- Quick control of home devices using the iPhone’s radar sensor and HomeKit devices: for example, look at a light, then pinch your fingers to turn it on or off

Well, these are some of the inspirations and impressions we had while watching the videos. If you are interested, we recommend watching both videos carefully; you may gain some new insights too!

Tool

Filtsy — High quality filters using LiDAR

Keywords: LiDAR, filters, iOS

You have probably seen plenty of filter apps, but most filters only work at the 2D level (after all, the earliest “filters” were glass or plastic lenses placed in front of the camera lens to selectively absorb different wavelengths of light and change the look of the picture).

So how can filter technology be “upgraded” to the 3D world? The Filtsy app may offer some very good examples.

If you look closely at the two pictures above, you will notice details such as the edge of the wall in the flood scene and the reflections of the police lights, which take the real-world wall edges into account and make the rendering more realistic. LiDAR and related technologies are indispensable for achieving such realistic effects.
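We don’t know how Filtsy builds its effects. As a hypothetical illustration of why per-pixel depth (which LiDAR-equipped iPhones can supply) makes filters “three-dimensional”, the sketch below applies classic exponential distance fog: each pixel is blended toward a fog color by a factor that depends on how far away that surface is, which a purely 2D filter cannot know. The function name, fog color and density are our own choices.

```python
import math


def depth_fog(image, depth, fog_color=(0.7, 0.75, 0.8), density=0.3):
    """Blend each RGB pixel toward fog_color using exponential fog.

    image: rows of (r, g, b) floats in [0, 1]
    depth: rows of per-pixel distances in meters (same shape as image)
    """
    out = []
    for img_row, depth_row in zip(image, depth):
        row = []
        for rgb, z in zip(img_row, depth_row):
            visibility = math.exp(-density * z)  # 1.0 at the camera, -> 0 far away
            row.append(tuple(visibility * c + (1 - visibility) * f
                             for c, f in zip(rgb, fog_color)))
        out.append(row)
    return out


# Two identical pixels at different distances end up with different colors.
image = [[(0.9, 0.2, 0.1), (0.9, 0.2, 0.1)]]
depth = [[0.5, 6.0]]
near, far = depth_fog(image, depth)[0]
print(near, far)
```

The same structure extends to other depth-aware effects: swap the fog formula for any function of depth (or of world position reconstructed from depth) to decide how each pixel changes.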

Tip:

We also covered LiDAR in Apple AR technologies you need to know before developing for visionOS; interested readers can take a look back.

The app was built by its two founders, Anton and Jack, and the story of how it was created is also interesting. In their founders’ letter, Anton mentions that a few years ago LiDAR hardware cost over a hundred dollars, making it niche hardware out of reach for ordinary consumers.

Because Apple brought LiDAR to the iPhone (on Pro models), LiDAR now has a large user base among iPhone owners (it is even said that Apple’s widespread adoption has brought the price of LiDAR down to a few dollars), which gives apps like Filtsy room to play. From this point of view, Apple has quietly opened up a broader market for app developers in places we may not notice (thank you, Apple).

What would it be like if Musk wore Apple Vision Pro?

Keywords: Stable Diffusion, AI, Apple Vision Pro

He would burst out laughing. Picture first:

Of course, this picture is fake: it was generated with Stable Diffusion XL (SDXL for short) using a model fine-tuned for Apple Vision Pro called sdxl-vision-pro. As you can see, even for such a new product, sdxl-vision-pro can already produce very realistic renders, such as a cat and Gandalf wearing Apple Vision Pro, an Apple Vision Pro placed on an indoor table, and even figures in paintings wearing one.

If you are a tech writer who follows Apple Vision Pro closely (hey, isn’t that us?), then your toolkit has just gained another very useful AI tool 😉.

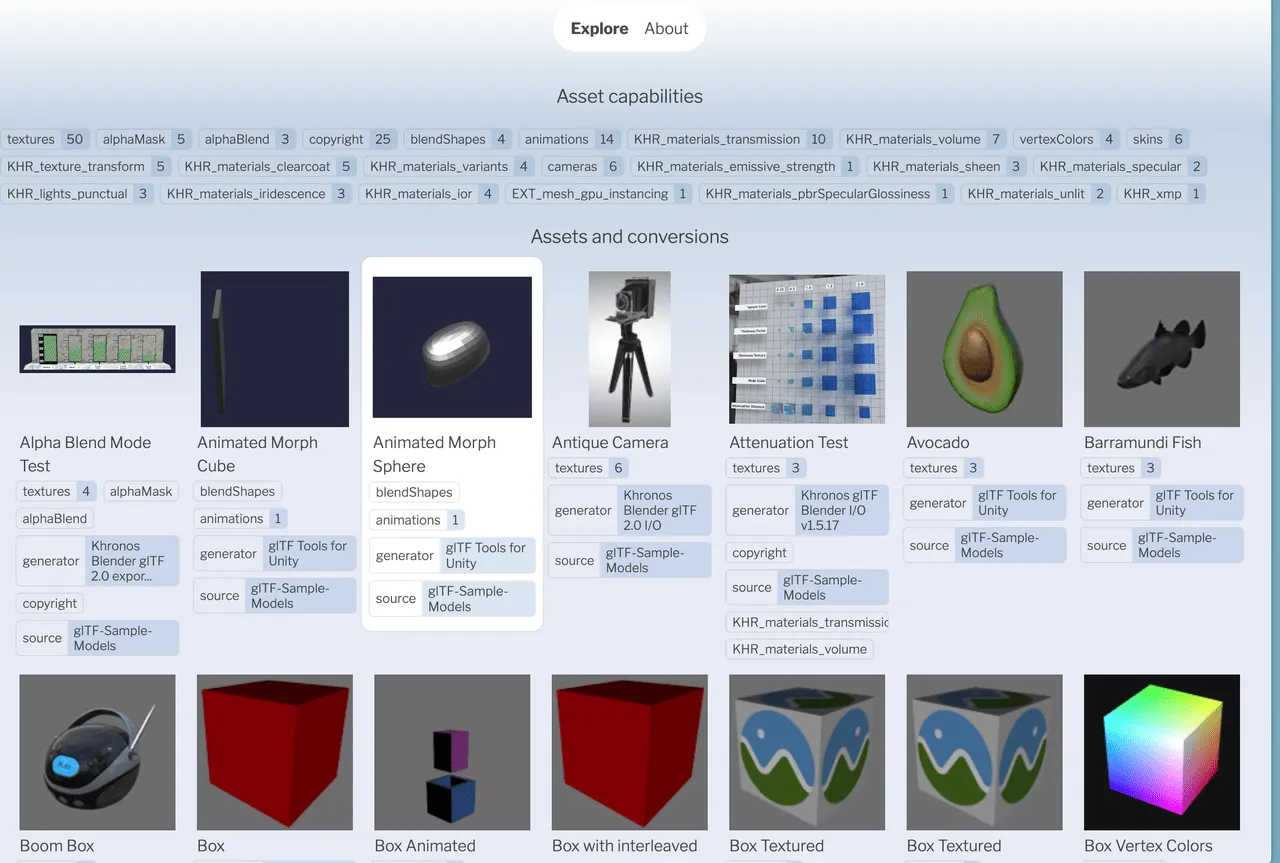

glTF -> USD test set maintained by AssetsExplore-Needle

Keywords: glTF, USD, Needle

AOUSD may build a bridge that unifies 3D model formats across Apple and the film, animation and architecture industries.

However, AOUSD still cannot bridge the unified USD ecosystem and the glTF format that Google’s platforms rely on; after all, Google has not joined.

So for now, developers who want to bring assets in glTF, the more common format on Web and Android, to USD, the more common format on Apple platforms, still need to convert between the two. And converting reliably requires a sufficiently comprehensive test set to confirm that the converter works well.

Fortunately, some people in the industry are working on this. The Needle team, which we recommended in issue 004, has built such a test set to show the capability boundaries of existing tools when converting glTF to USD.

On this page, the Needle team displays the models from glTF-Sample-Models on the web alongside the corresponding USD files converted with Three.js and Blender. Through open-source collaboration they look for potential issues in each feature, and the test set shows what the conversion tools currently support.
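To give a feel for what the USD side of such a conversion looks like, here is a minimal, hypothetical sketch (not Needle’s, Three.js’s or Blender’s exporter) that writes an indexed triangle mesh, such as the positions and indices read from a glTF primitive, into a .usda text layer. It assumes glTF’s conventions (Y-up, meters, counter-clockwise front faces) and states them in the layer metadata, since USD’s own fallback for metersPerUnit is centimeters; counter-clockwise winding matches USD’s default rightHanded orientation. Real converters also have to handle normals, UVs, materials, skinning and animation, which is exactly where a test set earns its keep.

```python
def mesh_to_usda(name, points, indices):
    """Write an indexed triangle list as a minimal USD ASCII (.usda) layer.

    points:  [(x, y, z), ...] vertex positions (assumed Y-up, in meters)
    indices: flat list of vertex indices, three per triangle (CCW winding)
    """
    if len(indices) % 3:
        raise ValueError("expected a triangle list (len(indices) % 3 == 0)")
    pts = ", ".join(f"({x:g}, {y:g}, {z:g})" for x, y, z in points)
    idx = ", ".join(str(i) for i in indices)
    counts = ", ".join(["3"] * (len(indices) // 3))  # every face is a triangle
    return (
        "#usda 1.0\n"
        f'(\n    defaultPrim = "{name}"\n    metersPerUnit = 1\n    upAxis = "Y"\n)\n\n'
        f'def Mesh "{name}"\n'
        "{\n"
        f"    int[] faceVertexCounts = [{counts}]\n"
        f"    int[] faceVertexIndices = [{idx}]\n"
        f"    point3f[] points = [{pts}]\n"
        "}\n"
    )


print(mesh_to_usda("Triangle", [(0, 0, 0), (1, 0, 0), (0, 1, 0)], [0, 1, 2]))
```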

Paper

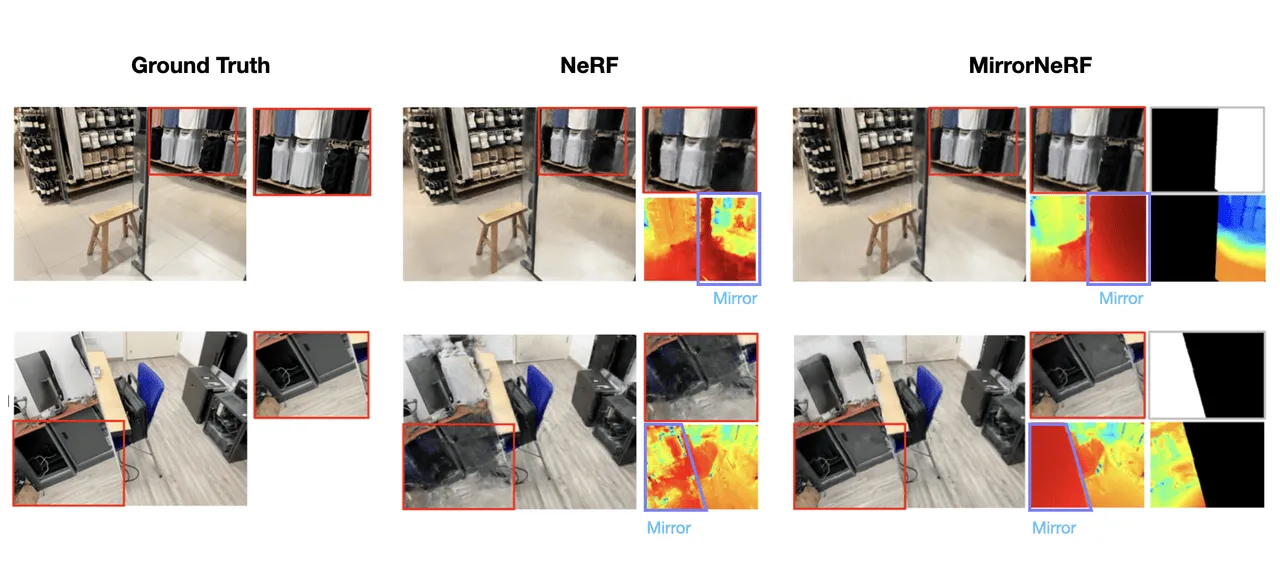

MirrorNeRF: Enabling NeRF to recognize mirrors

Keywords: complex scenes, photogrammetry, neural radiance fields, volumetric rendering

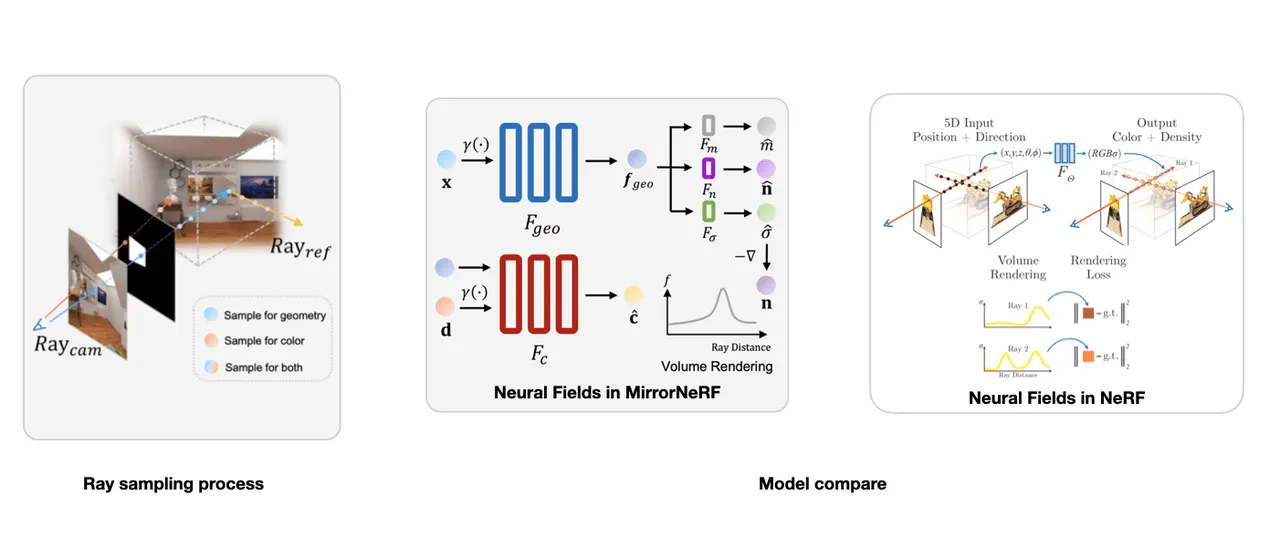

Today we introduce a recent NeRF paper (for what NeRF is, see Issue 3 of our newsletter).

Users of LumaAI may have noticed that when reconstructing complex scenes (such as scenes with glass or mirrors), the generated models are often wrong. This is because typical NeRF-based rendering pipelines do not model physical reflection: NeRF mistakes the reflection in a mirror for a separate virtual scene behind it, resulting in inaccurate mirror reconstruction and reflections that are inconsistent across views.

The input to Mirror-NeRF is the spatial position x and the viewing direction d (as in traditional NeRF), and the output includes not only the volume density σ̂ and radiance ĉ, as in traditional NeRF, but also the surface normal n̂ and a reflection probability. The normal and reflection probability are then used in the Whitted ray tracing light transport model to build a unified neural radiance field, so that the model accounts for physical reflection during rendering.
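The two ingredients described above can be sketched in a few lines. This is a simplified illustration under our own assumptions, not the paper’s implementation: standard NeRF volume rendering along a ray, the mirror direction r = d - 2(d·n)n, and a Whitted-style mix in which a reflection probability m blends the ray’s own color with the color obtained by tracing the reflected ray. In Mirror-NeRF the reflected color itself comes from rendering the field along the reflected ray; see the paper for the exact formulation. All function names here are hypothetical.

```python
import math


def reflect(d, n):
    """Mirror direction d about unit normal n: r = d - 2 (d . n) n."""
    dot = sum(a * b for a, b in zip(d, n))
    return tuple(a - 2 * dot * b for a, b in zip(d, n))


def volume_render(sigmas, colors, deltas):
    """Standard NeRF quadrature: C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i,
    where T_i is the transmittance accumulated before sample i."""
    transmittance, out = 1.0, [0.0, 0.0, 0.0]
    for sigma, c, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this sample
        weight = transmittance * alpha
        out = [o + weight * ci for o, ci in zip(out, c)]
        transmittance *= 1.0 - alpha
    return tuple(out)


def whitted_blend(c_surface, c_reflected, m):
    """Mix the surface color with the reflected-ray color by reflection probability m."""
    return tuple((1 - m) * a + m * b for a, b in zip(c_surface, c_reflected))


# A ray hitting a floor mirror (normal +y) bounces upward.
print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))

# Render the primary ray and a (stand-in) reflected ray, then blend.
c_primary = volume_render([0.5, 20.0], [(0.3, 0.3, 0.3), (0.8, 0.8, 0.8)], [0.1, 0.1])
c_mirror = volume_render([20.0], [(0.1, 0.4, 0.9)], [0.1])
print(whitted_blend(c_primary, c_mirror, m=0.9))
```

Because the mirror’s color is obtained by tracing the reflected ray into the same field rather than by learning a “virtual scene behind the glass”, editing the scene (adding objects or mirrors) changes the reflections consistently, which is what enables the applications listed below.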

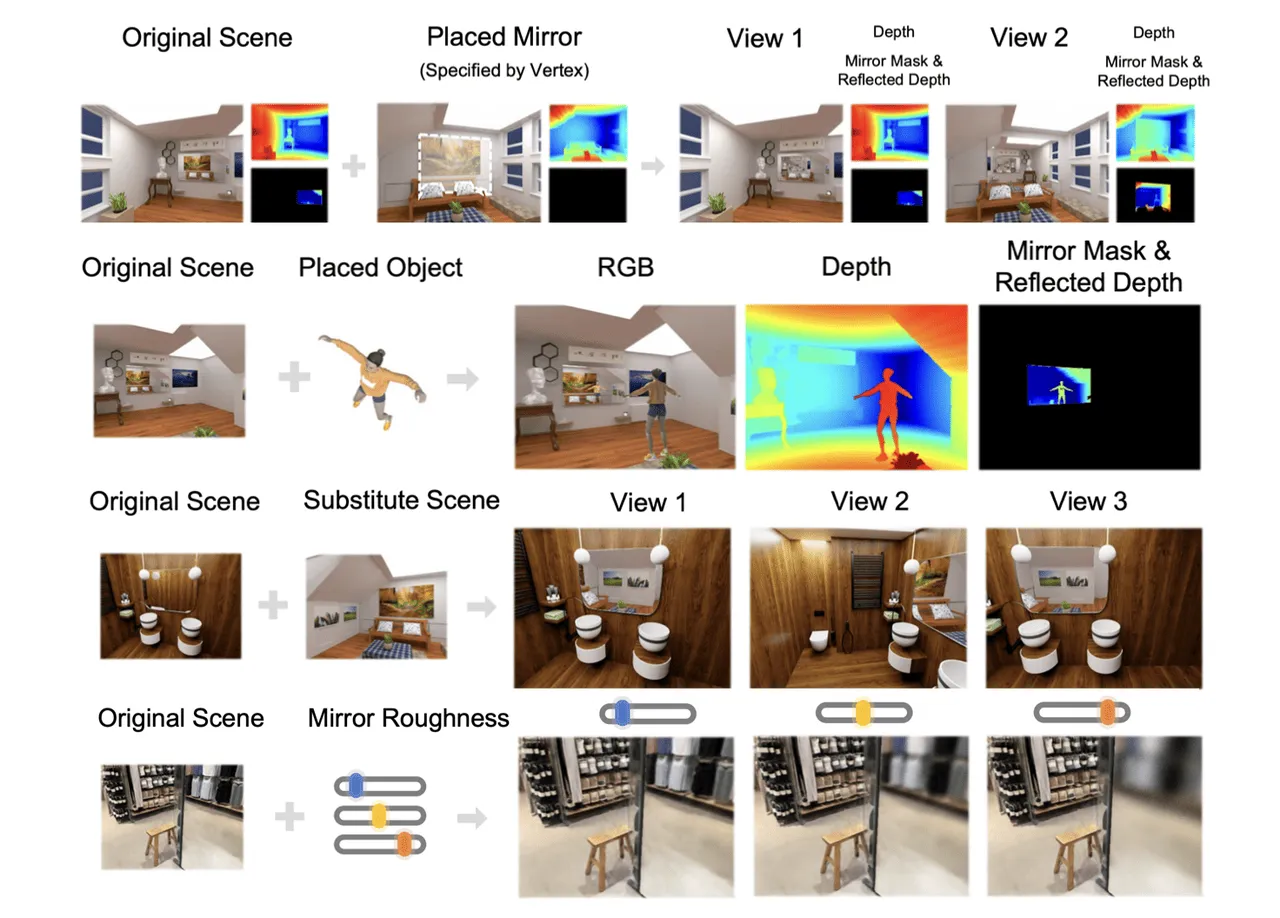

Applying this technique lets us do the following (interested readers can keep an eye out; the code will be open-sourced soon):

- Place new mirrors to produce infinite reflections between mirrors;

- Place new objects in the scene and have the mirrors in the original scene correctly reflect them;

- Customize the reflection results in mirrors to create “portal” effects;

- Control the reflection coefficient of mirrors, easily turning a specular mirror into a frosted-glass effect.

XReality.Zone
