XR World Weekly 011

Editor’s Note for This Issue

Two busy weeks have passed. They were truly hectic, with many changes in the XR world that take time to digest, and as a result issue 011 is two days late 😭.

However, all this hard work has paid off. Over the past two weeks there were a few “little surprises” that made it all feel worthwhile. One of them came when we searched for certain keywords and found our own content at the top of the search results.

Our content has since slipped down the rankings as more precise content appeared, but the glow of that little surprise has lasted. Without further ado, please enjoy XR World Weekly 011~

Table of Contents

BigNews

- Unity 2022.3.11 LTS now supports more MR development capabilities

- PICO officially supports Web App and Web developers

- Meta Quest v59 released

- Better real-world connections! Use the Location API to bring more interesting local experiences to your Meta Quest

- Luma AI launches Text to 3D generator

Idea

- What changes can the integration of virtual and reality bring to our lives?

Tool

- Feather: 3D Sketchbook

- 3D Model Viewer

Video

- The real disruptive aspect of Vision Pro: in-depth interpretation by interaction designers + developers

Article

- Designing for spatial computing: from iOS and iPadOS to visionOS

- Industry research report on 3D content creation and generation

Code

- How to change the Window background color in visionOS?

- RealityActions

SmallNews

- Nimo: A pair of “space” glasses similar to Rokid

- iOS 17 beta now includes an AirPlay Receiver

- Bazel has been renamed to Bezi

- Unity’s new Runtime Fee may have been introduced to target AppLovin

- Hackathon Flash: ShapesXR in collaboration with XReality Pro to host multiplayer board game Hackathon, SnapAR x Lenslist AR Asset Hackathon results announced

BigNews

Unity 2022.3.11 LTS Supports More MR Development Capabilities

Keywords: Unity, MR

With the release of Quest 3, developers now have better hardware for creating MR applications. Support in software and development tools is just as essential.

The official Unity blog post Explore cross-platform mixed reality development on Meta Quest 3 outlines how Unity is supporting developers on its platform:

First, Unity has released the new OpenXR: Meta package, which makes it easier to build MR applications for Quest 3 on top of OpenXR and AR Foundation. AR Foundation works alongside XR Hands and the XR Interaction Toolkit, which VR developers will already know (see issue 002 for a refresher).

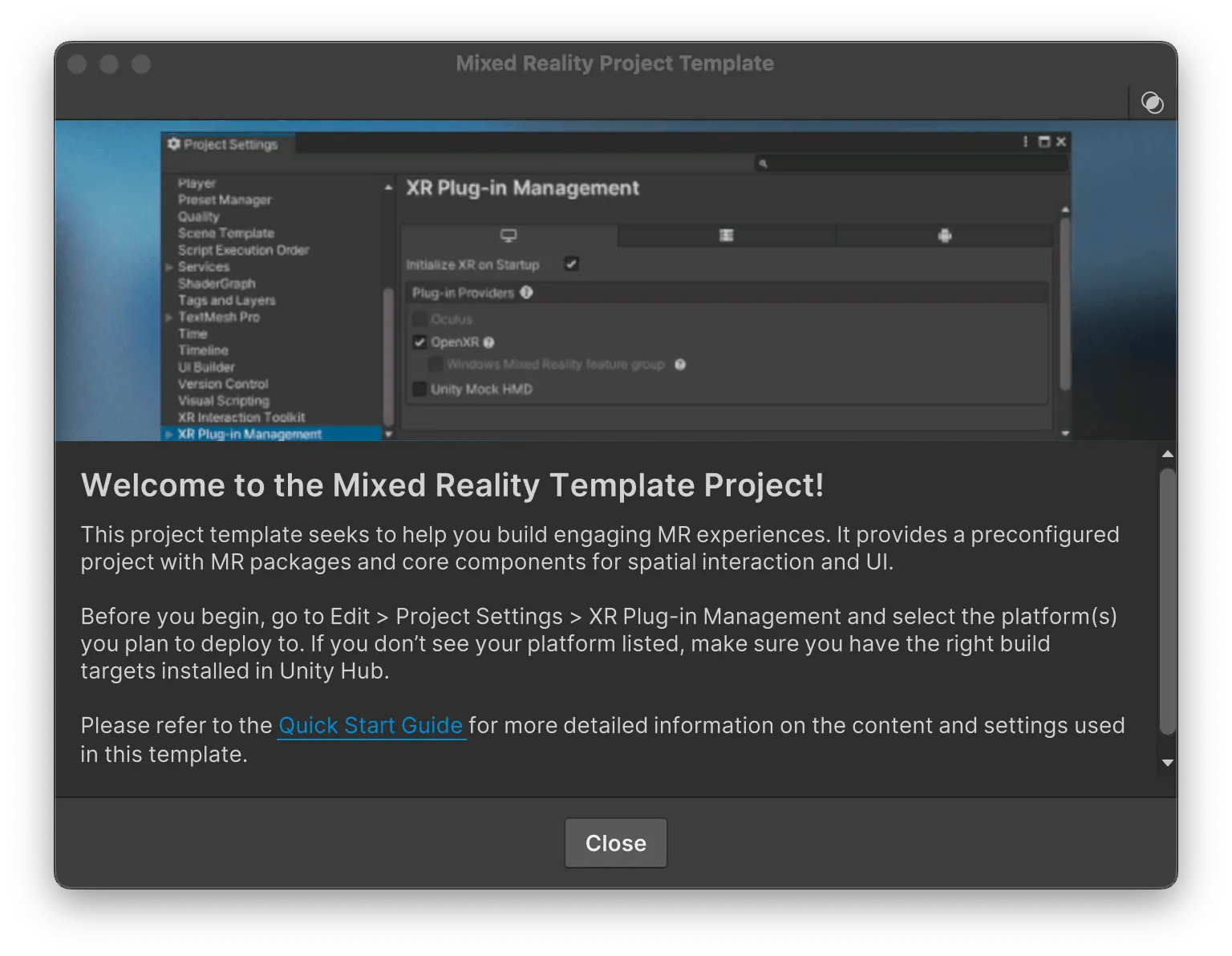

Second, Unity provides a new Mixed Reality Template to help developers start an MR project quickly.

Note that you need to upgrade Unity to version 2022.3.11 LTS or higher to see this new template.

PICO Officially Supports Web App and Web Developers

Keywords: WebXR, PICO, WebApp, PWA, MR

If you are a web developer and also want to create something interesting in the XR ecosystem, then WebXR and related technology stacks are definitely your best choice.

Fortunately, PICO has started to offer web developers more support in its ecosystem. In the article PICO officially supports Web App and Web developers, PICO explains its documentation for web developers, its support for WebXR standards, and how Web Apps get listed. Highlights include:

- Web Apps on PICO can now run in MR mode.

- Web Apps on PICO that support PWA can run directly in fully immersive mode, such as this Bike Configuration demo made with Needle (if you are unfamiliar with Needle, look back at issue 004).

If you are an experienced web developer and want to know which handy tools exist in the WebXR ecosystem, PICO has also thoughtfully prepared an Awesome WebXR list for you (developers will certainly recognize the many Awesome XXX lists on GitHub 😉), making it easy to find the right tools to turn your ideas into reality.

Meta Quest v59 Released

Keywords: Quest, Meta

The latest Meta Quest v59 release mainly brings system-level improvements. Some of the more interesting updates include:

- Suggested Boundary (Quest 3 only): If you start an app without having set up a safety boundary, Quest automatically suggests a usable one based on your surroundings.

- Assisted Space Setup (Quest 3 only): Lets users manually scan their surroundings in 3D so that later MR apps can interact better with the real world, for example by letting virtual and real objects occlude each other.

Better Real-World Connections! Use the Location API to Bring More Interesting Local Experiences to Your Meta Quest

Keywords: Quest, Android Location API

As more and more people move around the real world wearing Quest 3 in mixed reality mode (which lets them see their surroundings), Meta announced that, starting with v57, Meta Quest 3, Quest Pro, and Quest 2 can access the Android Location Manager API, letting developers build personalized, location-based experiences. Location services open up a wide range of mixed reality and immersive experiences, suitable for scenarios such as fitness or multiplayer games. Just as Snap hosts many popular AR experiences tied to geographic location, developers can now create MR experiences tied to particular settings or even specific places: for example, MR experiences that appear only in certain hotels or resorts, or treasures and gifts that appear only at specific locations.
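To make “content that appears only at a specific location” concrete, here is a minimal geofence sketch. It assumes the app has already obtained a latitude/longitude fix through the Location API (that part is not shown), and it uses the standard haversine great-circle formula; `Coordinate`, `Geofence`, and `haversineDistance` are hypothetical names for illustration, not part of any Meta or Android API.

```swift
import Foundation

/// A latitude/longitude pair in degrees (hypothetical type for this sketch).
struct Coordinate {
    let latitude: Double
    let longitude: Double
}

/// Great-circle distance in meters between two coordinates (haversine formula).
func haversineDistance(_ a: Coordinate, _ b: Coordinate) -> Double {
    let earthRadius = 6_371_000.0 // mean Earth radius in meters
    let toRadians = Double.pi / 180
    let dLat = (b.latitude - a.latitude) * toRadians
    let dLon = (b.longitude - a.longitude) * toRadians
    let h = sin(dLat / 2) * sin(dLat / 2)
        + cos(a.latitude * toRadians) * cos(b.latitude * toRadians)
        * sin(dLon / 2) * sin(dLon / 2)
    // Clamp to guard against rounding pushing sqrt(h) slightly above 1.
    return 2 * earthRadius * asin(min(1, sqrt(h)))
}

/// Hypothetical geofence: location-bound MR content is shown only inside `radius` meters.
struct Geofence {
    let center: Coordinate
    let radius: Double

    func contains(_ point: Coordinate) -> Bool {
        haversineDistance(center, point) <= radius
    }
}
```

A hotel-only experience, for instance, could create a `Geofence` around the hotel with a radius of about 100 m and spawn its content only while `contains` returns true for the user’s latest fix.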

To respect user privacy, users can control location sharing at the system or app level, and developers can access location data only when users choose to share it. Linking experiences to geographic location should let MR play to its strengths, so that “extended reality” is no longer just about the “extended” part while staying cut off from “reality”.

You can read the related development documentation here.

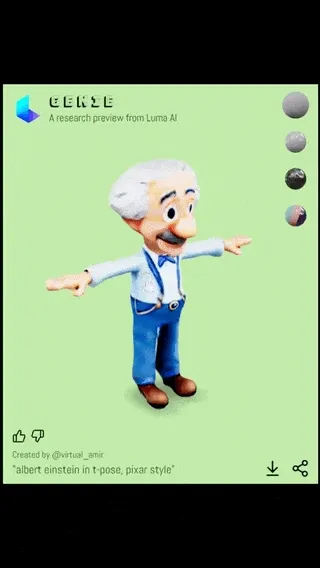

Luma AI Launches Text to 3D Generator

Keywords: AI, Text to 3D, Embodied Dialogue

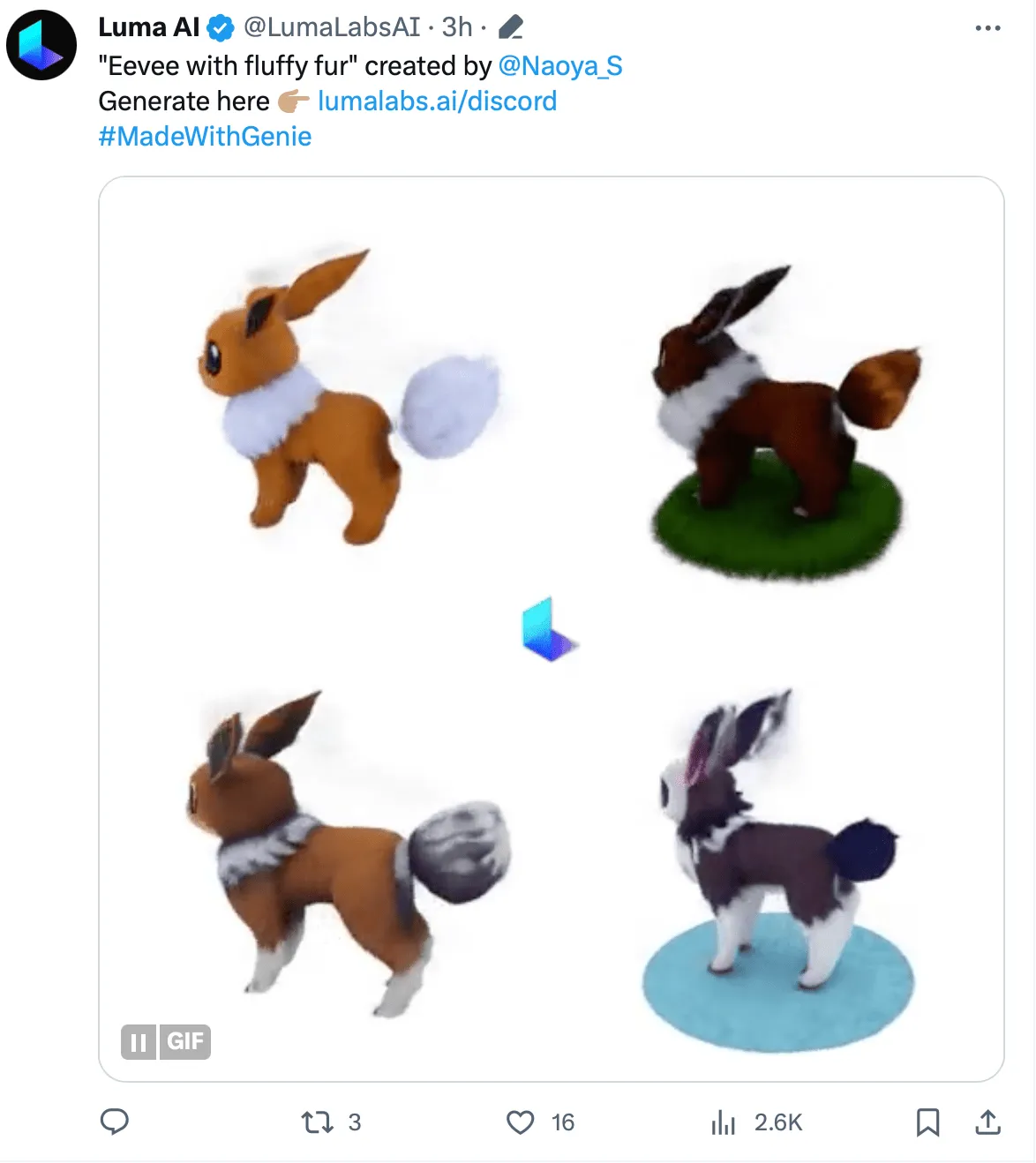

Recently, Luma AI, the developer of a 3D scanning app, launched Genie, a text-to-3D object generation service, on its official website and Discord server. After joining the server, users describe the object they want in the appropriate channel, and Luma AI’s Genie bot generates the corresponding 3D models. After a short wait, a channel message notifies the user that generation is complete and shows a GIF preview.

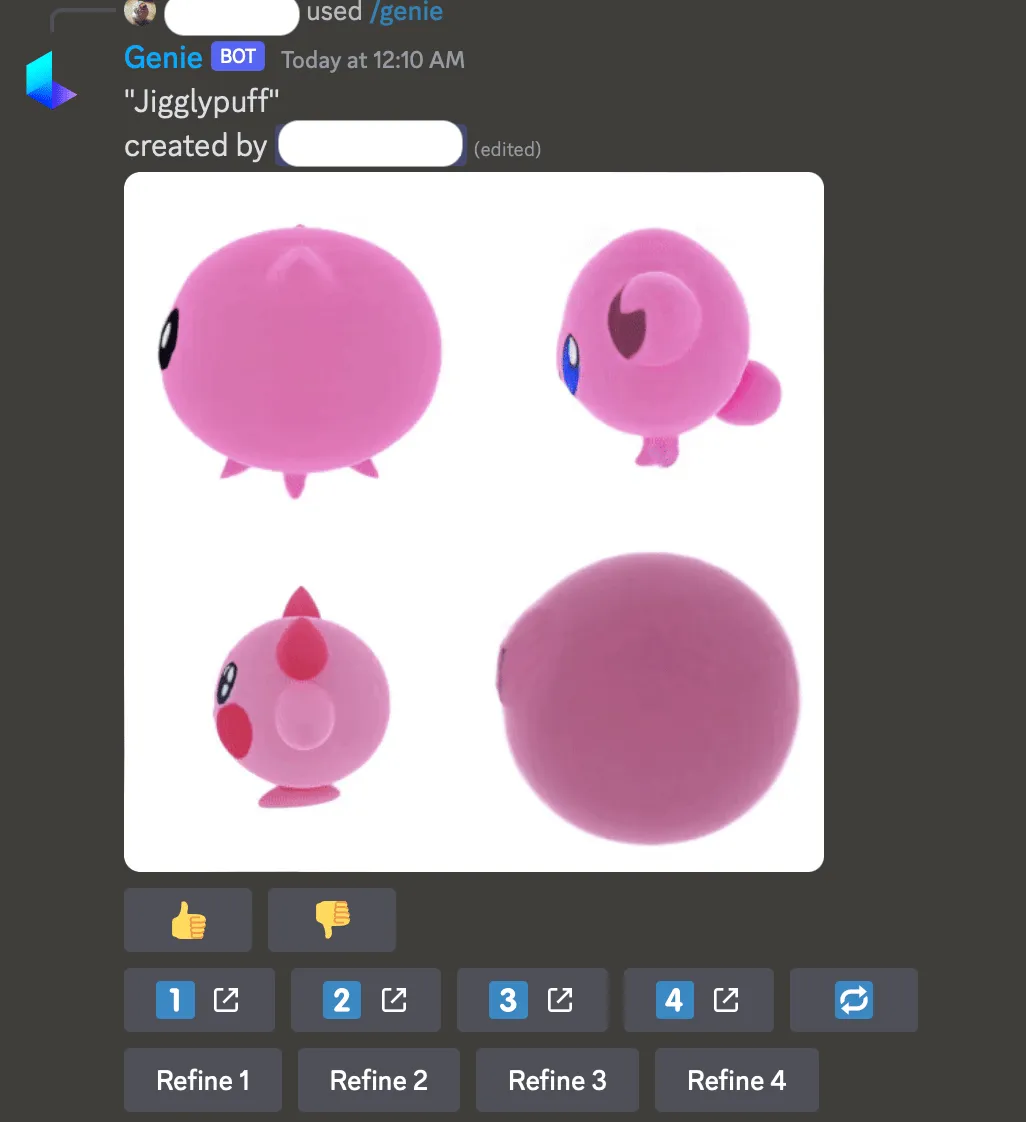

After seeing netizens’ trial reports on various social media, we quickly tried the service ourselves. After generating a few common objects (some small animals), we also tried prompts based on popular cultural IPs and other content that needs more prior knowledge and definition. We found that support varies by IP (for example, the Eevee generated by the official Twitter account is much better than the Jigglypuff we generated ourselves; ending Jigglypuff discrimination will take all of us!)

Overall, the experience is very similar to MidJourney, the text-to-image model that also runs on Discord. The bot returns four models for each text description, and users can rate the quality of each one to help the developers improve the service. You can click the link for each model to open and download it; if you are not satisfied, you can regenerate with the same prompt, or fine-tune a specific model.

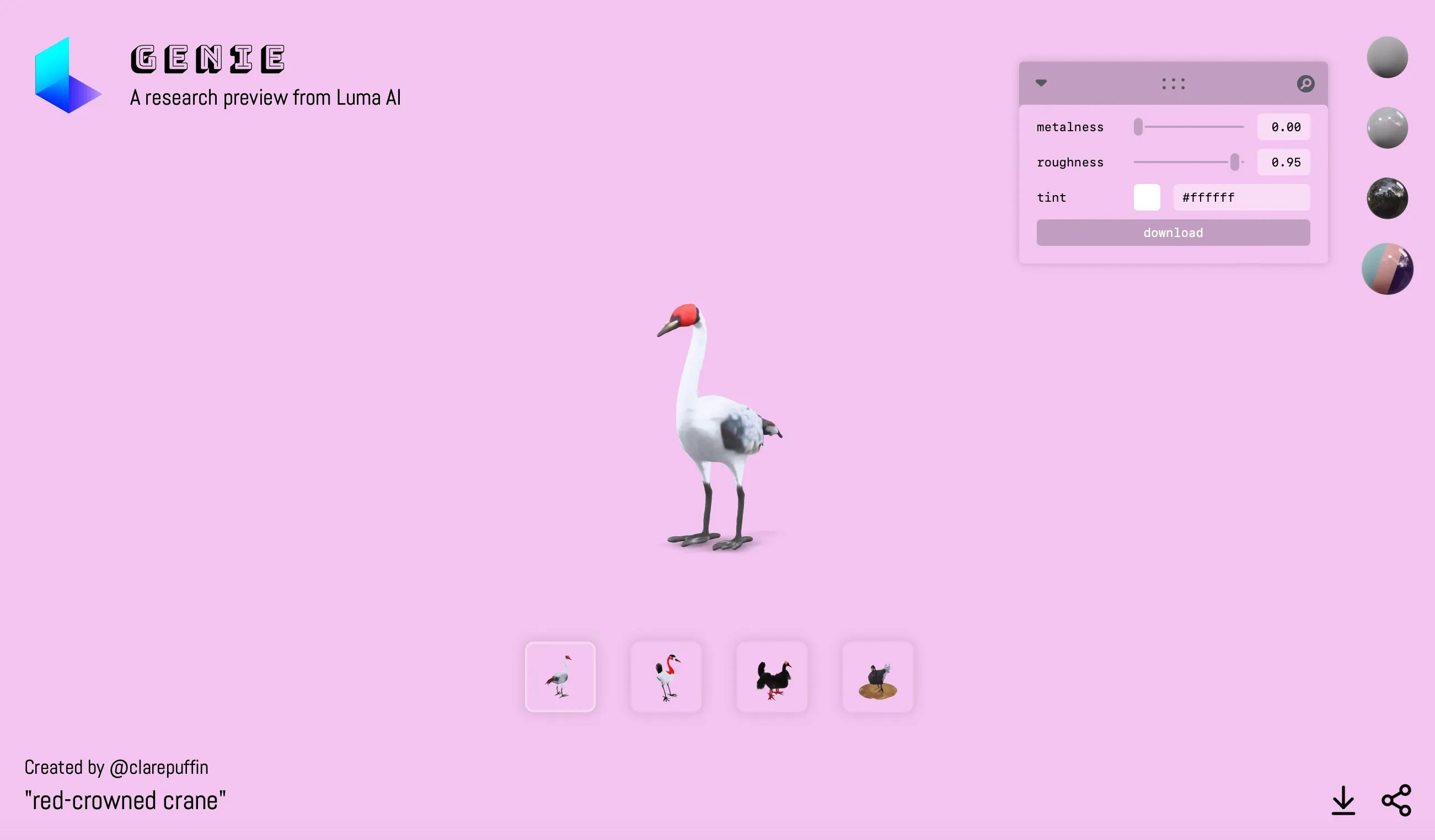

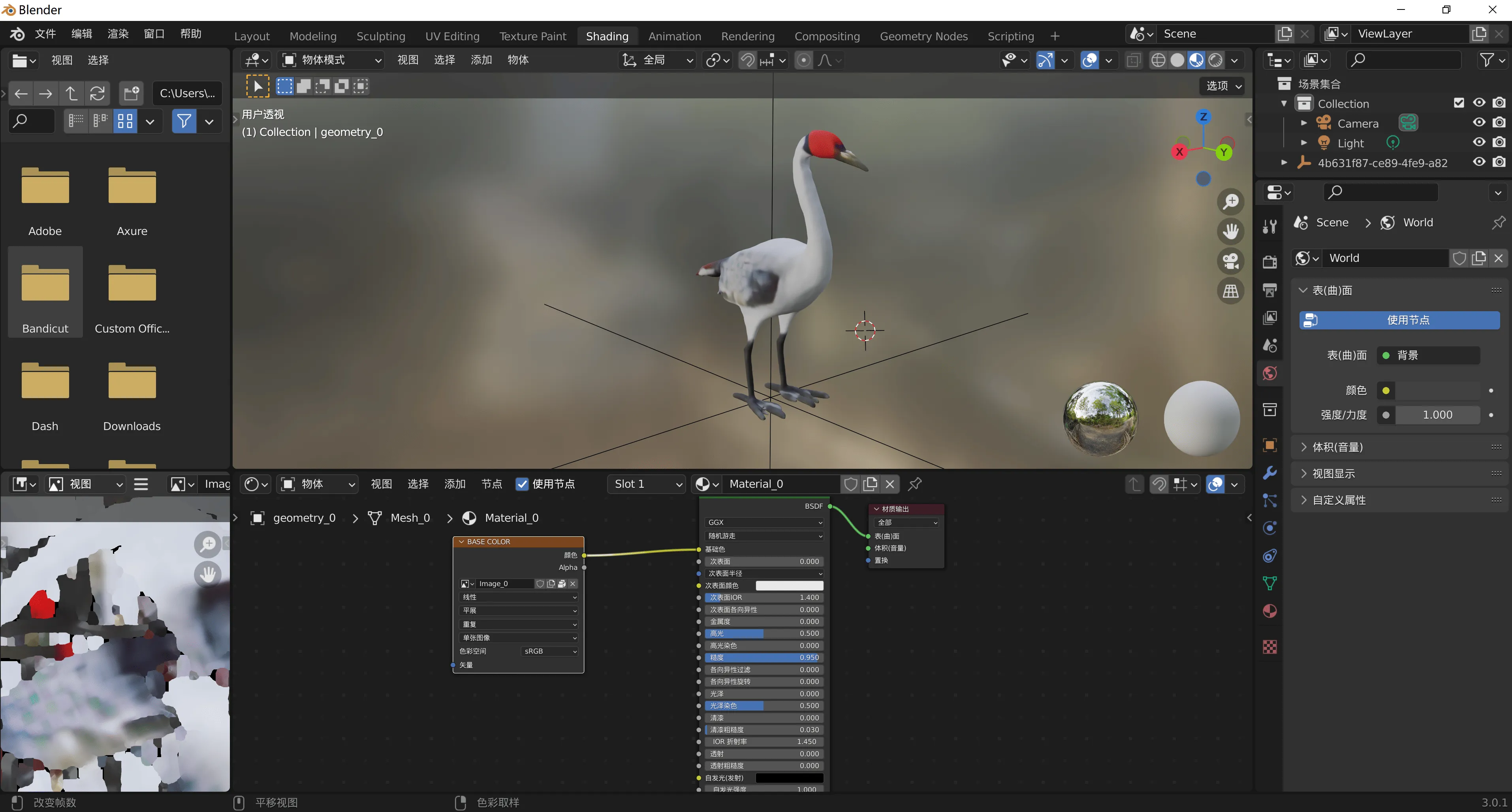

Clicking the link for a model you like takes you to the official website to download it (where you can in fact see all four models for that prompt). Each model comes with materials, with “default”, “plastic”, “metal”, and “custom” (gloss, roughness, color) options. The download is in glb format and can be opened in 3D software such as Blender; there we can see that the material also comes with a texture, which makes further adjustment convenient.
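A .glb file is the binary container of glTF 2.0. As a small illustration of that format (not of anything Luma AI ships), the sketch below checks the 12-byte GLB header: the ASCII magic `glTF`, a version number (2 for glTF 2.0), and the total file length, each stored as a little-endian 32-bit integer. `GLBHeader`, `readUInt32LE`, and `parseGLBHeader` are our own hypothetical names.

```swift
/// Fields from the 12-byte header that starts every .glb (binary glTF) file.
struct GLBHeader {
    let version: UInt32
    let totalLength: UInt32
}

/// Reads a little-endian UInt32 starting at `offset`.
func readUInt32LE(_ bytes: [UInt8], at offset: Int) -> UInt32 {
    UInt32(bytes[offset])
        | UInt32(bytes[offset + 1]) << 8
        | UInt32(bytes[offset + 2]) << 16
        | UInt32(bytes[offset + 3]) << 24
}

/// Returns the header if `bytes` starts with the ASCII magic "glTF", else nil.
func parseGLBHeader(_ bytes: [UInt8]) -> GLBHeader? {
    guard bytes.count >= 12 else { return nil }
    let magic: UInt32 = 0x4654_6C67 // "glTF" read as a little-endian UInt32
    guard readUInt32LE(bytes, at: 0) == magic else { return nil }
    return GLBHeader(version: readUInt32LE(bytes, at: 4),
                     totalLength: readUInt32LE(bytes, at: 8))
}
```

After the header, a GLB file continues with a JSON chunk describing the scene, usually followed by a binary chunk holding buffers such as mesh data and embedded textures.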

As for use cases, beyond saving artists time and improving efficiency, X user Amir proposed an idea and built a demo: he first used Genie to generate a 3D model of Einstein, then used Adobe Mixamo to animate it and 8th Wall to build the AR experience, and finally viewed the model through Meta Quest 3’s passthrough. He further suggested that if the OpenAI API could be connected in the future, it would become possible to quickly build more embodied, concrete conversations with characters created on sites like Character.AI.

The interactive filter that X user Maks Van Leeuwen built with Snap Lens could probably be implemented with a similar approach.

Because the model is still at the research stage, the service is currently free, and interested readers are welcome to try it.

Idea

What Changes Can the Integration of Virtual and Reality Bring to Our Lives?

Keywords: MR

With the release of Quest 3, more and more creators are gradually focusing on MR scenarios. Recently, there have been quite a few such demonstration scenes on social media.

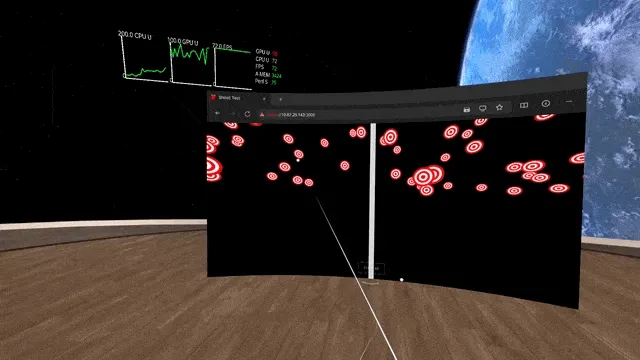

First, a Reddit user shared a video player with real light reflection effects:

This offers an insight: lighting and shadows are essential for blending virtual objects into the real world. This may be the main reason why visionOS gives its system windows shadows by default.

For now, this effect is achieved only with the author’s custom shader, but in the comments, developers from MoonVR said that MoonVR already has similar reflection effects and that they are actively working to bring them to MR mode.
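The author’s shader is not public. One common and much simpler approximation, which is our assumption and not the author’s method, is to average the colors of the current video frame and use the result to tint a light or glow around the screen, smoothing it over time so it does not flicker. `RGB`, `averageColor`, and `blend` are hypothetical names; a real implementation would do this on the GPU rather than looping over pixels in Swift.

```swift
/// An RGB color with components in 0...1 (hypothetical type for this sketch).
struct RGB: Equatable {
    var r: Double, g: Double, b: Double
}

/// Averages all pixels of one video frame into a single color that a
/// virtual light behind the screen could be tinted with.
func averageColor(of pixels: [RGB]) -> RGB? {
    guard !pixels.isEmpty else { return nil }
    var sum = RGB(r: 0, g: 0, b: 0)
    for p in pixels {
        sum.r += p.r
        sum.g += p.g
        sum.b += p.b
    }
    let n = Double(pixels.count)
    return RGB(r: sum.r / n, g: sum.g / n, b: sum.b / n)
}

/// Moves the previous light color a fraction `factor` toward the new target,
/// so the reflected light changes smoothly from frame to frame.
func blend(_ previous: RGB, toward target: RGB, factor: Double) -> RGB {
    RGB(r: previous.r + (target.r - previous.r) * factor,
        g: previous.g + (target.g - previous.g) * factor,
        b: previous.b + (target.b - previous.b) * factor)
}
```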

When HoloLens was first released, the scene in its promotional video of playing Minecraft on HoloLens left a deep impression on many people. Now, thanks to Quest 3, it’s finally possible to play Minecraft outdoors in MR mode.

In this video, AwakenToast shows what it’s like to play Minecraft outdoors with Quest 3 (it’s actually BlockVerse, made by independent game developer Running Pixel): you can dig holes in the real world and even throw a bomb into them.

If you are lucky enough to have a Meta Quest 3 at hand, give this game a try; it’s a good way to experience MR~

Tool

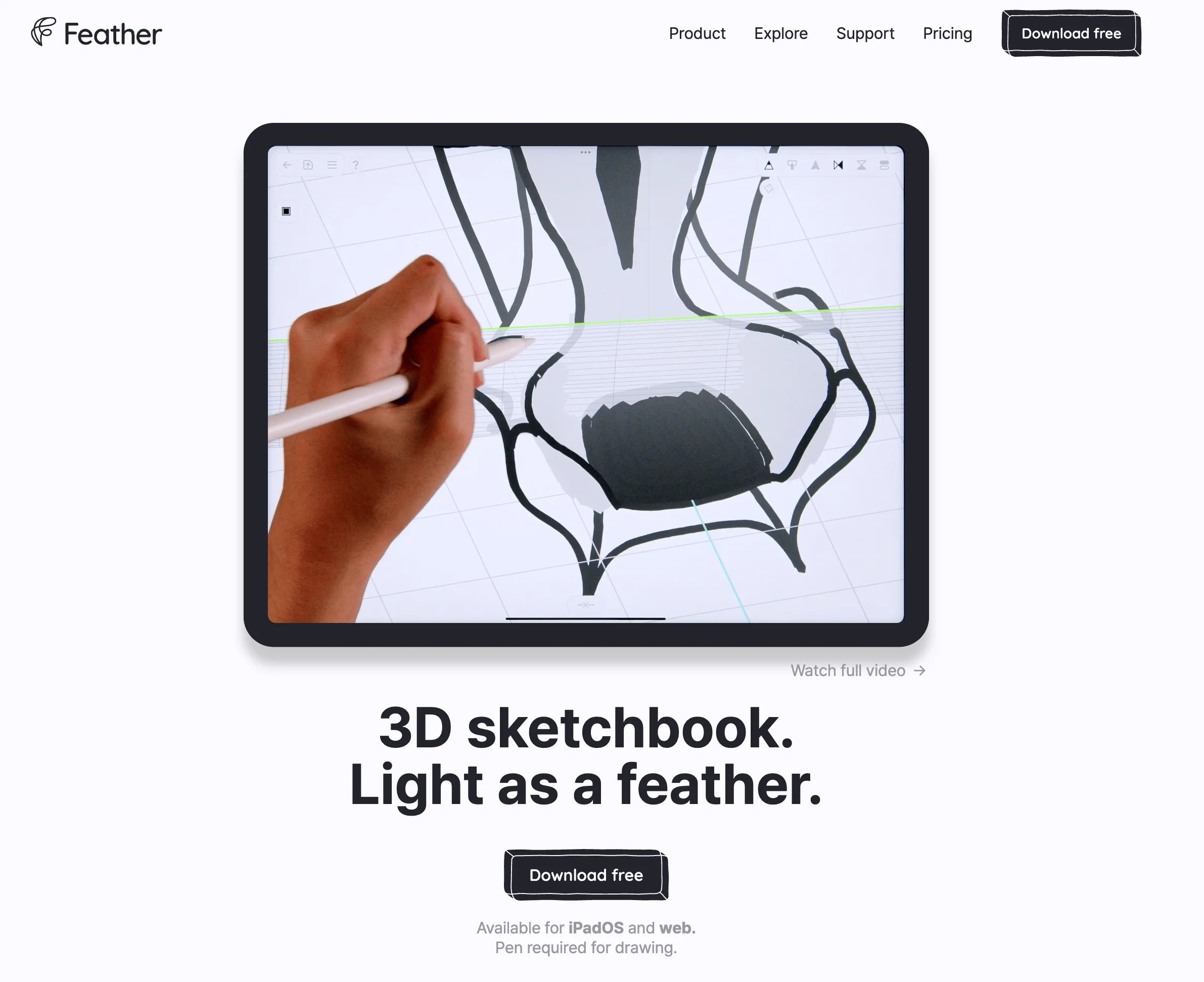

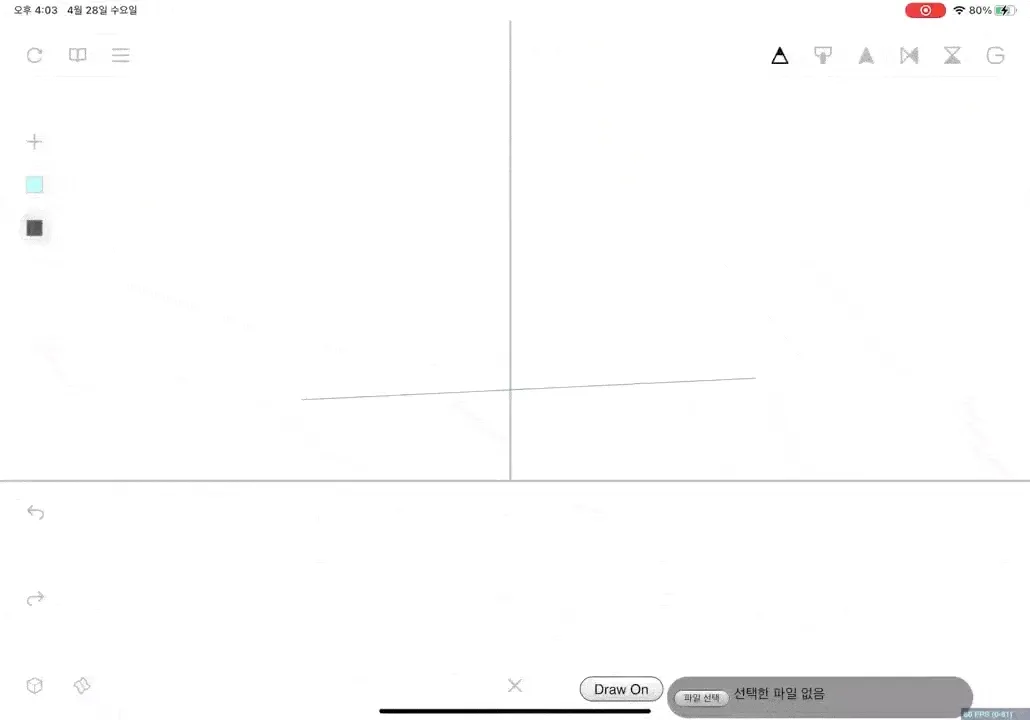

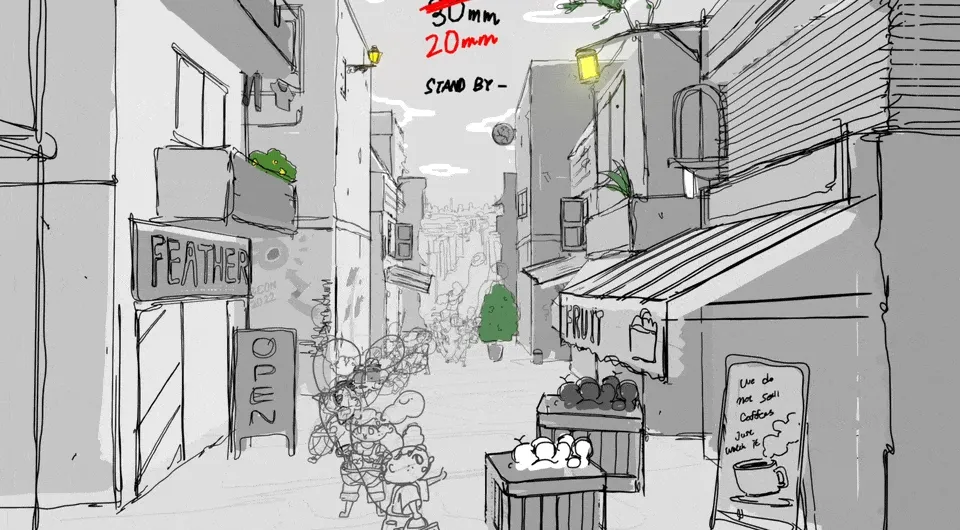

Feather: 3D Sketchbook

Keywords: 3D, iPad, Sketch

“Hand-drawing” in 3D space makes many people think of VR painting first. Indeed, VR painting is a very natural way to draw by hand in a 3D world, and there are many excellent artists working in it, such as KudoAlbus.

But if you want to create a 3D scene by “drawing on a flat surface”, how do you express three dimensions on a flat screen?

The app Feather, which focuses on 3D hand-drawing, offers one possible answer. In Feather, you first draw a “reference surface” with a brush, and then you can draw on that surface as you would with a regular brush. An official example shows this quite intuitively:

Of course, the best way to try this out is to experience it yourself. If you have an iPad with an Apple Pencil, it’s highly recommended that you download the app to give it a try.

You can also browse the Explore page first to see what can be made with Feather. There are many interesting works there, and this Alia in the Feather town is a particularly good one.
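Feather’s internals are not public, but the reference-surface idea maps onto a standard technique: cast a ray from the camera through the point the pencil touches and find where it hits the surface, which turns a 2D stroke into 3D points. The sketch below handles only a flat reference surface (a curved one would need a different intersection test), and `Vec3`, `ReferencePlane`, and `projectStroke` are hypothetical names.

```swift
/// Minimal 3D vector (hypothetical helper; on Apple platforms simd would do).
struct Vec3: Equatable {
    var x, y, z: Double
    static func + (a: Vec3, b: Vec3) -> Vec3 { Vec3(x: a.x + b.x, y: a.y + b.y, z: a.z + b.z) }
    static func - (a: Vec3, b: Vec3) -> Vec3 { Vec3(x: a.x - b.x, y: a.y - b.y, z: a.z - b.z) }
    static func * (a: Vec3, s: Double) -> Vec3 { Vec3(x: a.x * s, y: a.y * s, z: a.z * s) }
    func dot(_ o: Vec3) -> Double { x * o.x + y * o.y + z * o.z }
}

/// A flat reference surface: any point on the plane plus the plane's normal.
struct ReferencePlane {
    let point: Vec3
    let normal: Vec3
}

/// Intersects the ray from the camera (`origin`) through the touched screen
/// point (`direction`) with the reference plane. Returns nil when the ray is
/// parallel to the plane or the plane lies behind the camera.
func projectStroke(origin: Vec3, direction: Vec3, onto plane: ReferencePlane) -> Vec3? {
    let denom = plane.normal.dot(direction)
    guard abs(denom) > 1e-9 else { return nil } // ray parallel to the plane
    let t = plane.normal.dot(plane.point - origin) / denom
    guard t >= 0 else { return nil } // intersection is behind the camera
    return origin + direction * t
}
```

Running every sample of a pencil stroke through `projectStroke` yields a 3D polyline lying on the reference surface, which is what lets a flat screen place strokes at a definite depth.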

3D Model Viewer

Keywords: 3D, USDZ, obj, stl

Although in issue 006 we imagined a bright future in which OpenUSD makes USD as universal as JPG, for now we still have to deal with a wide variety of 3D model formats.

If you mostly just need to view these 3D models, 3D Model Viewer may be a good helper. It lets you view many 3D model formats online, and if you download its Mac app, you also get Quick Look support.

Video

The Real Disruptive Aspect of Vision Pro: In-Depth Interpretation by Interaction Designers + Developers

Keywords: Vision Pro, Meta Quest Pro, SwiftUI

In the video The real disruptive aspect of Vision Pro: in-depth interpretation by interaction designers + developers, interaction designer and developer LiFE-Original gives a professional, in-depth look at Apple’s Vision Pro, covering how it differs from and improves on other XR devices, as well as its innovations in app development and interaction design. The author, a student at the Art Center College of Design in Pasadena and a WWDC23 Student Challenge winner, argues from both a designer’s and a developer’s perspective that Vision Pro’s disruptive aspects go beyond hardware: it lowers the high barrier to app development found on other XR devices by offering a cross-platform UI framework and a programming language that are easy to learn, so developers can get started quickly.

Furthermore, compared with devices like the Meta Quest Pro, Vision Pro’s interaction design centers on eye tracking and hand gestures, which are both precise and natural. The visionOS interaction design guidelines also make major contributions in ergonomics, learning cost, and design expressiveness.

Article

Designing for Spatial Computing: From iOS and iPadOS to visionOS

Keywords: visionOS, iOS, iPadOS, HIG

If you are a designer who has read Apple’s HIG thoroughly, you are surely familiar with iOS/iPadOS interface elements such as tab bars and navigation bars. Fortunately, when you move to visionOS, you will find that this brand-new spatial computing system extends Apple’s existing design rather than overturning it, which makes it much easier to bring good designs from iOS/iPadOS over to visionOS.

In the article Designing for spatial computing: from iOS and iPadOS to visionOS, the author walks through the following basic visionOS interface elements to help you move quickly from iOS/iPadOS to visionOS:

- Windows

- Volumes

- Tab bar

- Sidebar

- Ornaments

- Menus and Popovers

- Sheets

Industry Research Report on 3D Content Creation and Generation

Keywords: 3D movies, spatial video, naked-eye 3D, 3D scanning and modeling

This industry research report on 3D content creation covers the forms and categories of 3D content and the tools and methods for producing it, with a particular focus on Apple’s moves in spatial video and 3D scanning and modeling. It is a useful way for XR practitioners to build background knowledge and follow industry developments.

It discusses the most common form, 2.5D content, which has some 3D characteristics but lacks depth information and cannot represent three-dimensional objects, so it is essentially still two-dimensional. 2.5D content comes in two main forms: one, represented by 3D movies, creates a stereoscopic 3D visual effect; the other, represented by panoramas, stitches images together to widen the field of view and record as much of the surrounding 3D space as possible in two-dimensional form.
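A panorama stores every viewing direction around the camera in one flat image. The most common layout, equirectangular projection, puts longitude on the horizontal axis and latitude on the vertical axis, which is exactly why a panorama records 3D surroundings without any depth. The sketch below maps a view direction to panorama texture coordinates; the axis conventions (-z ahead, +y up) and the function name are assumptions chosen for illustration.

```swift
import Foundation

/// Maps a unit view direction to (u, v) texture coordinates in an
/// equirectangular panorama, both in 0...1. Assumed convention: -z is
/// straight ahead, +y is up, u grows to the right, v grows downward.
func equirectangularUV(x: Double, y: Double, z: Double) -> (u: Double, v: Double) {
    let longitude = atan2(x, -z)            // -π...π, 0 means straight ahead
    let latitude = asin(max(-1, min(1, y))) // -π/2...π/2, clamped for safety
    let u = 0.5 + longitude / (2 * Double.pi)
    let v = 0.5 - latitude / Double.pi
    return (u, v)
}
```

Note that only the direction enters the formula: two objects at different distances along the same ray land on the same pixel, which is the missing depth that keeps panoramas in the 2.5D category.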

It also covers true 3D modeling methods, mainly:

- 3D scanning: four main types, namely laser triangulation scanning, structured light scanning, time-of-flight laser scanning, and global photogrammetry.

- 3D software modeling: mainly CAD modeling, polygon modeling, and digital sculpting.

- AIGC modeling: best known through NeRF; a 3D model can be generated from a few images or a text prompt.
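Of the scanning methods listed, time-of-flight is the easiest to show numerically: the scanner emits a light pulse, times its round trip to the surface and back, and halves the travelled path. A minimal sketch (the function name is hypothetical):

```swift
/// Speed of light in a vacuum, in meters per second.
let speedOfLight = 299_792_458.0

/// One-way distance to a surface from a time-of-flight measurement:
/// the pulse travels out and back, so the distance is half the round-trip path.
func timeOfFlightDistance(roundTripSeconds: Double) -> Double {
    speedOfLight * roundTripSeconds / 2
}
```

For example, a round trip of 20 nanoseconds corresponds to a surface about 3 m away, which also shows why ToF sensors need timing precision on the order of picoseconds to resolve millimeters.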

Apple’s 3D content comes in two forms: spatial video and 3D models. Spatial video is shot with the iPhone’s main and ultra-wide cameras and produces stereo vision based on binocular disparity. Apple’s 3D models rely mainly on LiDAR and photogrammetry, which Apple provides to developers as APIs for building 3D modeling apps.
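Binocular disparity is what turns two offset views into depth: the farther away a point is, the less it shifts between the left and right images. In the idealized model of two parallel pinhole cameras (after rectification), depth equals focal length times baseline divided by disparity. The sketch below applies that textbook formula; the parameter values in the tests are made up and are not the iPhone’s actual camera parameters.

```swift
/// Depth of a point seen by two parallel, rectified pinhole cameras:
/// depth = focal length (pixels) * baseline (meters) / disparity (pixels).
/// Returns nil for zero or negative disparity (point at infinity or a bad match).
func depthFromDisparity(focalLengthPixels: Double,
                        baselineMeters: Double,
                        disparityPixels: Double) -> Double? {
    guard disparityPixels > 0 else { return nil }
    return focalLengthPixels * baselineMeters / disparityPixels
}
```

Because depth is inversely proportional to disparity, doubling the disparity halves the depth, and a short baseline, like the small gap between two phone cameras, makes depth estimates for distant objects increasingly coarse.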

At WWDC23, Apple brought scan-based modeling to phones and added the feature to the iOS version of Reality Composer. It requires LiDAR, so it is available only on iPhones or iPads that have a LiDAR scanner.

Code

How to Change the Window Background Color in visionOS?

Keywords: visionOS, Window, Color

In visionOS, windows use a special frosted-glass effect by default, whose color changes with whatever is behind the window. However, Tina Debove Nigro found that the following simple code changes a window’s background color, giving the window a more distinctive style:

```swift
// Tint the window's background with a translucent blue gradient
.background(.blue.gradient.opacity(0.2))
```

RealityActions

Keywords: visionOS, Cocos2D

RealityActions is a Cocos2D-style animation framework for entities in Apple’s RealityKit that makes animation convenient and easy to use. If you know Cocos2D but not RealityKit’s animation API, this framework may bring back that familiar feel. It supports SwiftPM and can be imported and used directly.

For example, the following code makes a little airplane pause for 5 seconds and then spin one full turn, repeating forever:

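```swift
import RealityActions

// `airplane` is a RealityKit Entity. Forever: wait 5 s, then rotate a full
// turn around the z-axis over 1 s with an ease-back-out curve.
airplane.start(RepeatForever(
    DelayTime(duration: 5),
    EaseBackOut(
        RotateBy(duration: 1, deltaAngles: SIMD3<Float>(0, 0, 360)))))
```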
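The spin uses `EaseBackOut`, which overshoots the target slightly before settling. RealityActions’ own implementation is not shown in this issue; assuming it follows the classic Cocos2D back-out curve (cocos2d-x uses an overshoot constant of 1.70158), the eased progress can be sketched as follows. `spinAngle` is a hypothetical helper, not part of RealityActions.

```swift
/// Cocos2D-style "back out" easing: maps normalized time t (0...1) to
/// normalized progress, overshooting past 1 before settling at exactly 1.
func easeBackOut(_ t: Double, overshoot s: Double = 1.70158) -> Double {
    let u = t - 1
    return u * u * ((s + 1) * u + s) + 1
}

/// Hypothetical helper: the airplane's angle in degrees `t` seconds into
/// the 1-second spin, assuming the rotation is scaled by the eased progress.
func spinAngle(at t: Double, totalDegrees: Double = 360) -> Double {
    totalDegrees * easeBackOut(min(max(t, 0), 1))
}
```

Halfway through the spin the eased progress is already about 1.09, so the airplane briefly rotates past a full turn and swings back, which gives the motion its springy feel.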

SmallNews

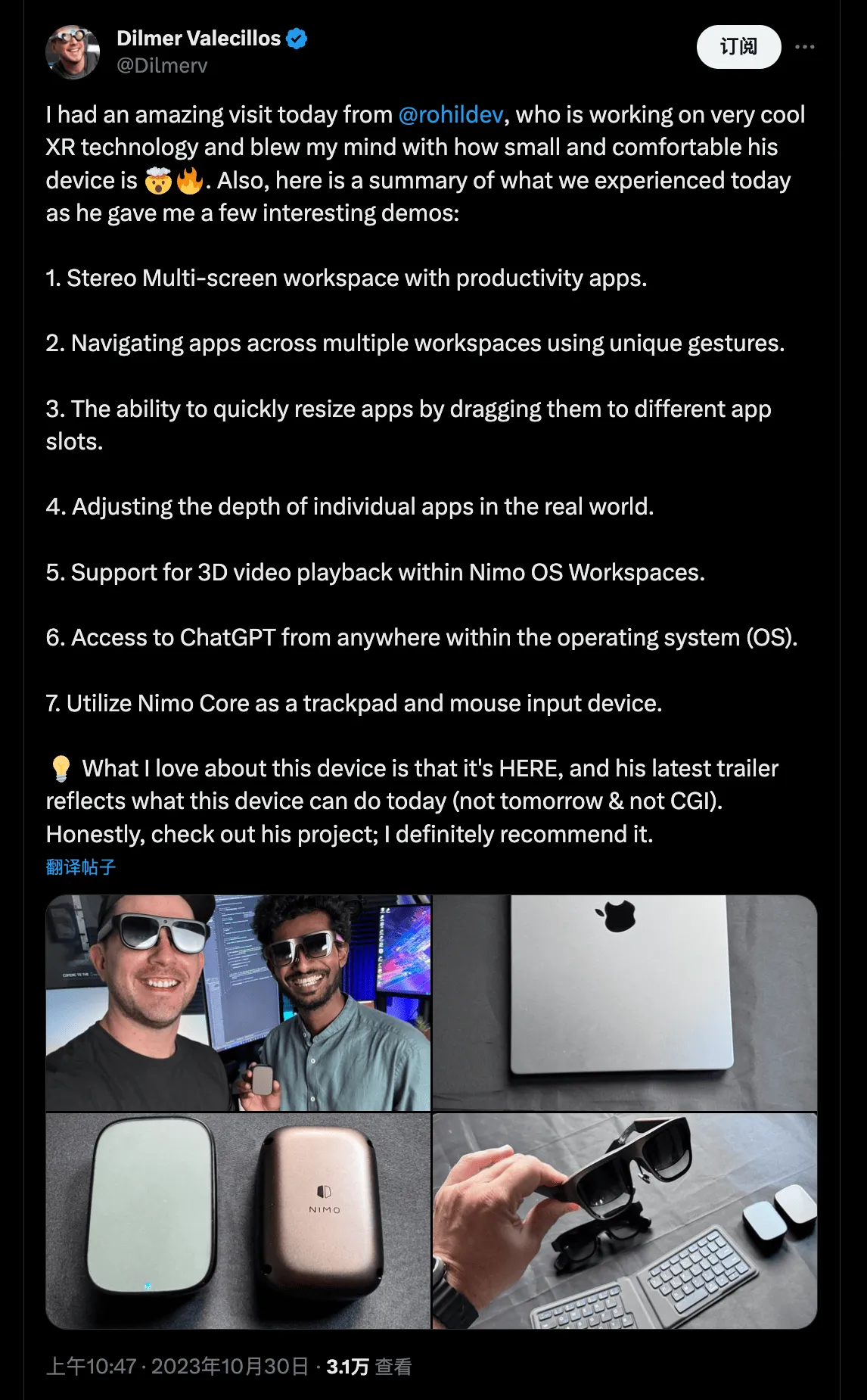

Nimo: A Pair of “Space” Glasses Similar to Rokid

Keywords: Rokid, Nimo, AR Glasses, Productivity

Nimo is a company focused on productivity in the era of spatial computing. Its CEO, Rohildev, previously released a wireless gesture ring called Neyya (officially known as Fin) in 2015. Recently the company launched the Nimo 1 Core and Nimo 1 OS.

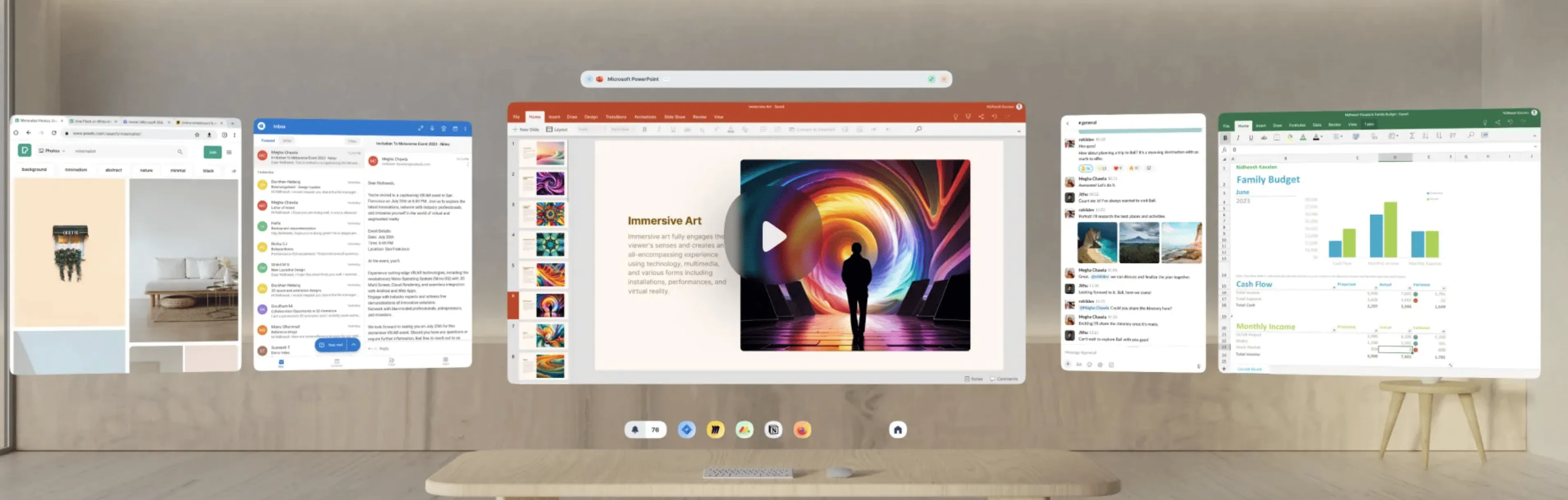

The promotional video on the official Twitter account suggests that Nimo 1 OS has been built with a fair amount of “polish”. Dilmer Valecillos, whom we have recommended many times before, interacted with them in this tweet and gave a very positive review.

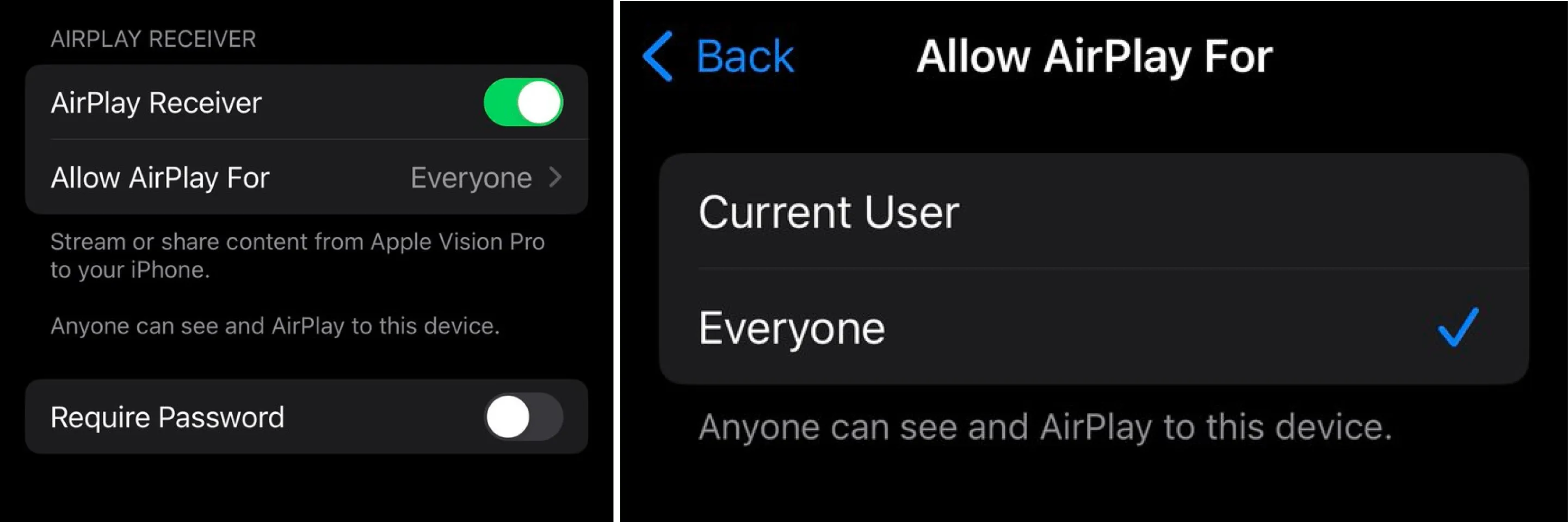

iOS 17 Beta Now Includes an AirPlay Receiver

Keywords: iOS 17, AirPlay, visionOS

According to a tweet from our old friend M1, Apple appears to have added AirPlay Receiver functionality to the new iOS, which would let visionOS users stream what they see to an iPhone via AirPlay.

Bazel Renamed to Bezi

Keywords: Bazel, Bezi

Remember Bazel we introduced in issue 003? It’s been renamed to Bezi, and a new logo has been launched to match.

Unity’s New Runtime Fee May Be Aimed at AppLovin

Keywords: Unity, Runtime Fee

In this article, an editor at MobileGamer suggests that Unity’s recent controversial Runtime Fee had several internal causes, including Unity’s own profit pressure, and that it may also have been a strategic strike by Unity and ironSource (an app monetization company acquired by Unity) against AppLovin (another app company, which had once offered to merge with Unity).

Hackathon Flash: ShapesXR and XReality Pro Host Multiplayer Board Game Hackathon; SnapAR x Lenslist AR Asset Hackathon Results Announced

Keywords: ShapesXR, XReality Pro, Snap, Lenslist, Hackathon

On 11/05, from 10:00 AM to 4:00 PM PST, XR collaborative design software developer ShapesXR and XR development community XReality Pro held a hackathon for XR designers and developers. Participants formed teams of up to four to conceive and demo a VR multiplayer board game within 6 hours, and the winning team could take home up to $4,000 in prize money (up to $1,000 per member).

In addition, SnapAR and the AR filter developer community Lenslist held a hackathon of their own and named winners in categories such as visual effects, technology, and materials. There are too many awards and filters to list here one by one, but you are welcome to visit the winners’ announcement page to see them.

Finally

Whether it’s quality information you’ve seen or excellent content you’ve written yourself, you can contribute via GitHub Issue or Lark form 😉.

Contributors to This Issue

| Contributor |
|---|
| Puffinwalker.eth |
| Onee |
| XanderXu |


XReality.Zone
