Breaking down the details of Meta Quest 3's upgrades to MR technology

XRealityZone is a community of creators focused on the XR space, and our goal is to make XR development easier!

This article was first published on xreality.zone; a Chinese version of this article is also available.

At Meta Connect 2023, which took place September 27-28, Zuckerberg announced Meta’s next-generation headset, the Meta Quest 3.

The Meta Quest 2 was certainly a product that set the industry standard for VR (Virtual Reality), but its experience with MR (Mixed Reality) was a bit underwhelming. I’m not sure if it was influenced by Apple’s Vision Pro release, but the Meta Quest 3 features a number of upgrades to its MR functionality, which were explained at length at the launch event. This article aims to analyze Meta Quest 3’s technical upgrades to MR in detail.

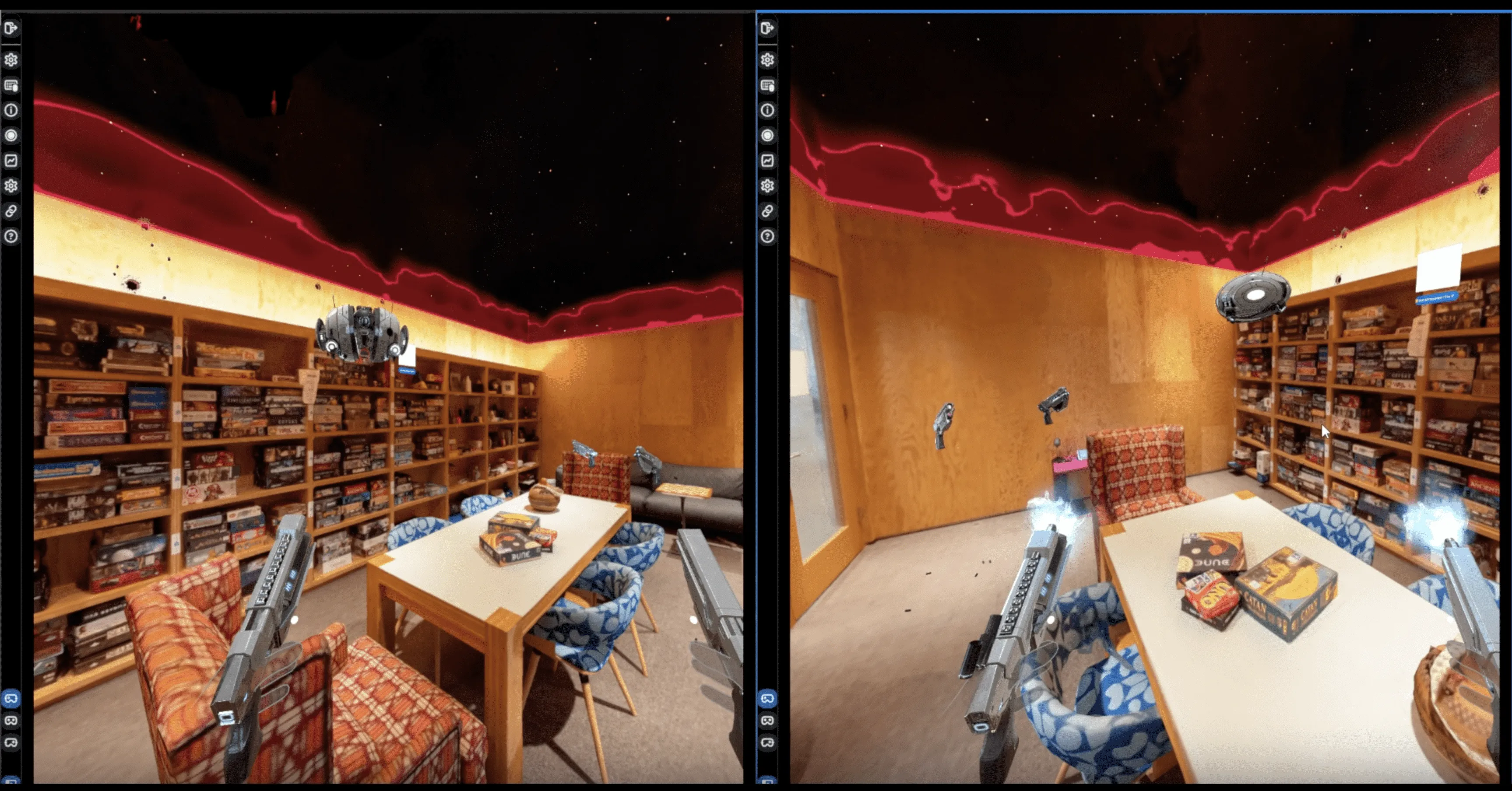

First, consider the clarity of the passthrough video. In Passthrough mode, the Quest 2 showed the surroundings in grayscale, whereas the Quest 3 shows them in full color with 10 times as many pixels. The Quest 3 also replaces the cumbersome manual Room Setup of Quest 2’s MR mode with an automatic Space Setup feature: when the user puts on the headset, its two color cameras and depth camera scan the surroundings and reconstruct the real scene around the user in 3D. With the latest Mesh API and Depth API, developers can implement physical collisions against the real environment, spatial anchors that persist across sessions, and real-time depth occlusion in MR (the Depth API will be updated in the v57 SDK).

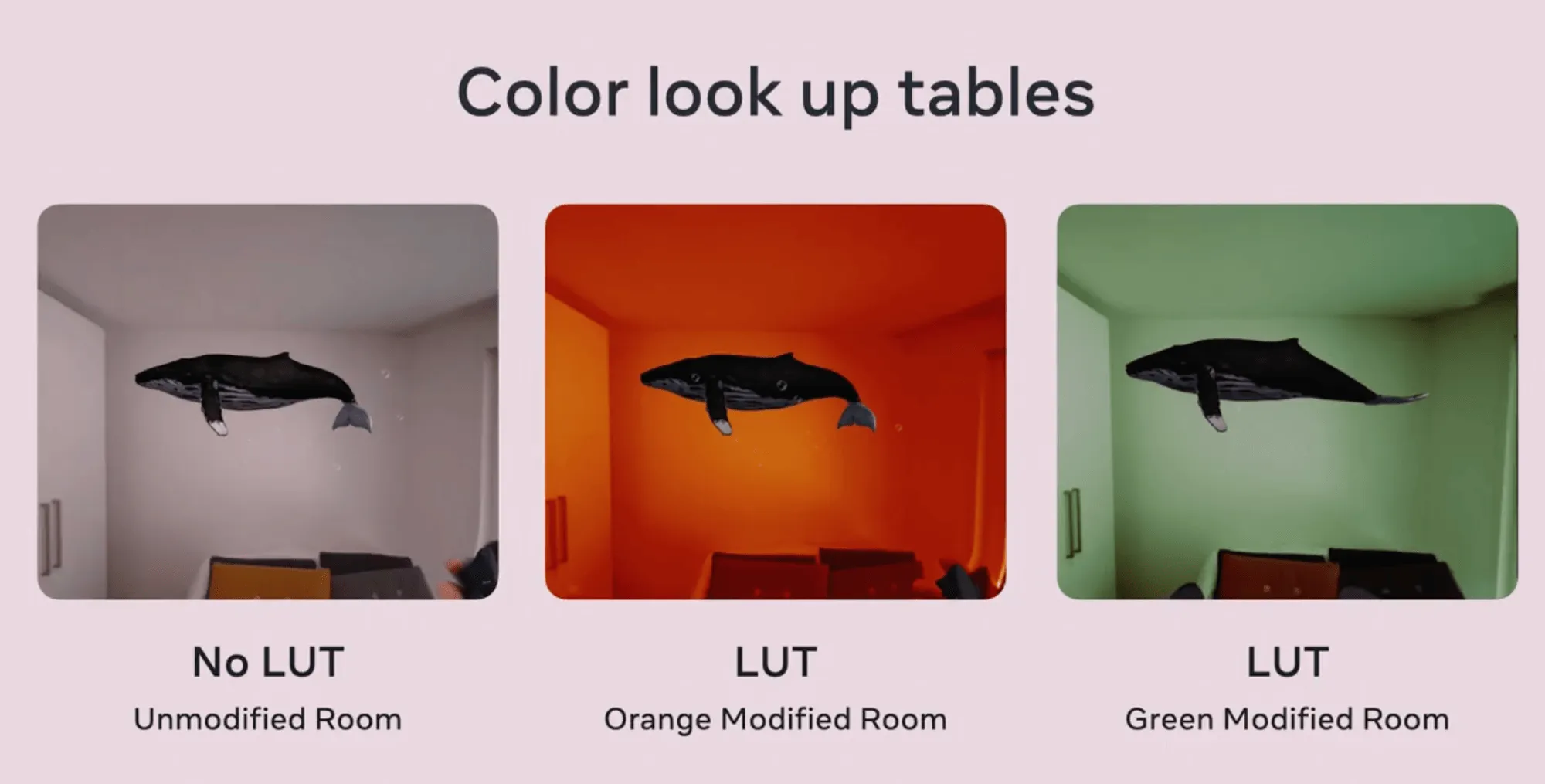

Another interesting API is LUT (Color Look-Up Tables), which maps one specified color to another in MR mode. As shown below, the second and third images map the white color in the scene to orange and green, respectively. With the LUT API, developers can recolor the real scene in MR mode to give it a distinctive atmosphere.
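To make the idea of a color look-up table more concrete, here is a minimal Python sketch. It is not Meta’s LUT API: the grid size, the function names, and the "near-white" threshold are all assumptions made for illustration. It only shows the general technique of quantizing a pixel into a grid cell and returning whatever color the table stores for that cell.

```python
from typing import Dict, Tuple

Color = Tuple[int, int, int]          # 8-bit RGB
Cell = Tuple[int, int, int]           # grid index per channel

LUT_SIZE = 16  # hypothetical grid resolution per channel


def quantize(channel: int, size: int = LUT_SIZE) -> int:
    """Map an 8-bit channel value (0-255) to a grid index (0..size-1)."""
    return min(channel * size // 256, size - 1)


def build_identity_lut(size: int = LUT_SIZE) -> Dict[Cell, Color]:
    """Build a table where each cell returns the centre color of that cell."""
    step = 256 // size
    half = step // 2
    return {
        (r, g, b): (r * step + half, g * step + half, b * step + half)
        for r in range(size)
        for g in range(size)
        for b in range(size)
    }


def remap_near_white(lut: Dict[Cell, Color], target: Color,
                     threshold: int = 200, size: int = LUT_SIZE) -> Dict[Cell, Color]:
    """Return a copy of the table in which every bright, near-white cell
    (all three indices at or above the cell containing `threshold`)
    outputs `target` instead."""
    first_bright = quantize(threshold, size)
    remapped = dict(lut)
    for cell in lut:
        if min(cell) >= first_bright:
            remapped[cell] = target
    return remapped


def apply_lut(pixel: Color, lut: Dict[Cell, Color], size: int = LUT_SIZE) -> Color:
    """Look up the output color for a single input pixel."""
    r, g, b = pixel
    return lut[(quantize(r, size), quantize(g, size), quantize(b, size))]


orange_lut = remap_near_white(build_identity_lut(), target=(255, 128, 0))
```

With this table, a white wall pixel such as `(250, 250, 250)` comes out orange, while darker colors pass through almost unchanged. A real implementation would typically interpolate between neighboring cells instead of picking the nearest one.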

With the new MR technology, Meta introduces a new feature, Augments. Users can place virtual objects in their homes that are anchored to a fixed location in space, and these objects remain in the same position whenever the user puts on the headset. With Augments, users can place a trophy they won in the metaverse in their living room, or embed a video into a virtual picture frame and hang it on the wall (much like the magical moving portraits in Harry Potter 😝 ).

In order to maintain a consistently high refresh rate when rendering complex scenes, Quest 3 also implements a feature called Dynamic Resolution, which allows the headset to automatically and dynamically adjust the rendering resolution in real-time based on the workload of the GPU.
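The control loop behind a feature like this can be sketched in a few lines of Python. This is only an illustration of the general idea, not Meta’s implementation: the 90 Hz frame budget, the scale limits, the step size, and the headroom factor are all assumed values.

```python
def next_render_scale(current_scale: float,
                      gpu_frame_ms: float,
                      target_frame_ms: float = 1000.0 / 90.0,  # assumed 90 Hz budget
                      min_scale: float = 0.7,                  # assumed lower bound
                      max_scale: float = 1.3,                  # assumed upper bound
                      step: float = 0.05,
                      headroom: float = 0.85) -> float:
    """Pick the render scale for the next frame from the last GPU frame time.

    - Over budget: lower the resolution by one step.
    - Comfortably under budget (below headroom * budget): raise it by one step.
    - Otherwise: keep the current scale, which avoids oscillating every frame.
    """
    if gpu_frame_ms > target_frame_ms:
        scale = current_scale - step
    elif gpu_frame_ms < target_frame_ms * headroom:
        scale = current_scale + step
    else:
        scale = current_scale
    # Clamp to the allowed range and round away floating-point drift.
    return round(max(min_scale, min(max_scale, scale)), 2)
```

For example, a 14 ms GPU frame at scale 1.0 drops the scale to 0.95, while an 8 ms frame raises it to 1.05. The dead band between the two thresholds is a design choice that keeps the resolution from flickering up and down.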

MR mode seems very powerful, but does it come at a cost? The answer is yes: there’s no such thing as a free lunch (that’s what they said at the launch event 🥲). The sensors and software required to run MR mode consume more energy and put more pressure on the device’s heat dissipation. The Quest 3 will only last about an hour and a half in MR mode, compared to over two hours in VR mode. MR mode also takes up more CPU and GPU resources, so MR apps cannot get the same compute budget as pure VR apps.

In terms of controllers, you can clearly see that the Quest 3’s controllers don’t have a tracking ring. To understand the logic behind this change, we need to understand the tracking principles of Quest controllers, which are covered in VR Controllers: The Way Of Interacting With The Virtual Worlds.

Using the LEDs in the tracking ring to locate the controller is very efficient, but a large tracking ring is not very ergonomic (the user can’t bring their hands close together while holding the controllers) and not as elegant as it could be. This time, the Quest 3 puts multiple LEDs directly into the controller’s black faceplate and embeds an additional LED in the controller’s grip (near where the grip meets the user’s ring and pinky fingers). These embedded LEDs, combined with IMU (Inertial Measurement Unit) data and AI modeling, allow Quest 3 to calculate the controller’s position with relative accuracy even without a tracking ring.

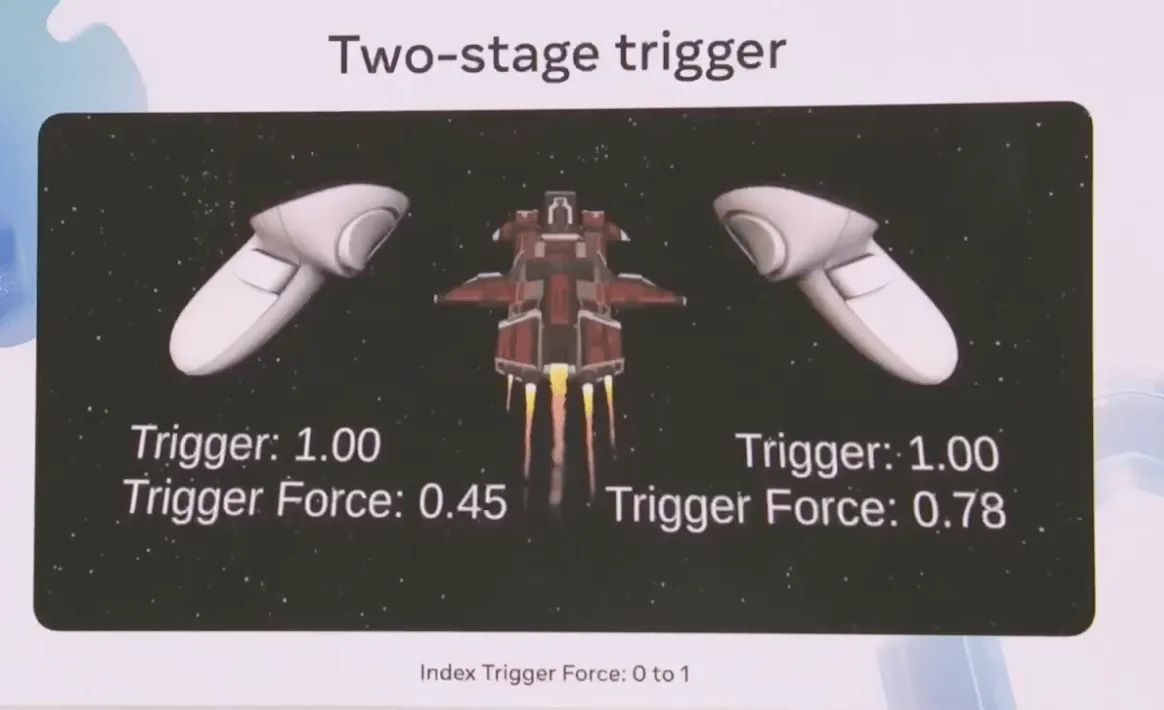

Another update to the controller is that the index trigger changes from a one-stage to a two-stage trigger. On Quest 2 controllers, the index trigger reported a floating-point number from 0 to 1 (0 being not pressed at all, 1 being pressed all the way). On the Quest 3 controller, once the user has pressed the index trigger all the way, pressing harder triggers a second input. Like the first, this second input is a floating-point value from 0 to 1, and it is stored in the variable Index Trigger Force. Developers can use this two-stage feedback to implement interactions that require the player to control force, such as pinching an egg: the user needs to control how hard the index finger presses, and if the force is too high, the egg is crushed. This is a brand new interaction pattern, and its potential is yet to be explored by developers.
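The egg example can be sketched as a small piece of game logic. The Python below is an illustration under stated assumptions, not SDK code: the `TriggerSample` type, the field names, and both thresholds are hypothetical, and a real app would read the two values from the controller input API each frame.

```python
from dataclasses import dataclass


@dataclass
class TriggerSample:
    """One reading of the two-stage index trigger (hypothetical container)."""
    index_trigger: float        # first stage: travel, 0.0 (released) to 1.0 (fully pressed)
    index_trigger_force: float  # second stage: extra force after full travel, 0.0 to 1.0


def egg_state(sample: TriggerSample,
              grip_threshold: float = 0.9,    # assumed: travel needed to hold the egg
              crush_threshold: float = 0.6    # assumed: force that breaks the egg
              ) -> str:
    """Classify the egg as 'released', 'held', or 'crushed' for one sample."""
    if sample.index_trigger < grip_threshold:
        return "released"   # the finger is not closed far enough to hold anything
    if sample.index_trigger_force > crush_threshold:
        return "crushed"    # the player squeezed too hard in the second stage
    return "held"           # fully pressed, but with gentle force
```

The first stage decides whether the egg is being held at all, and only the second stage decides whether it survives, which is exactly the kind of interaction a single 0 to 1 value could not express.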

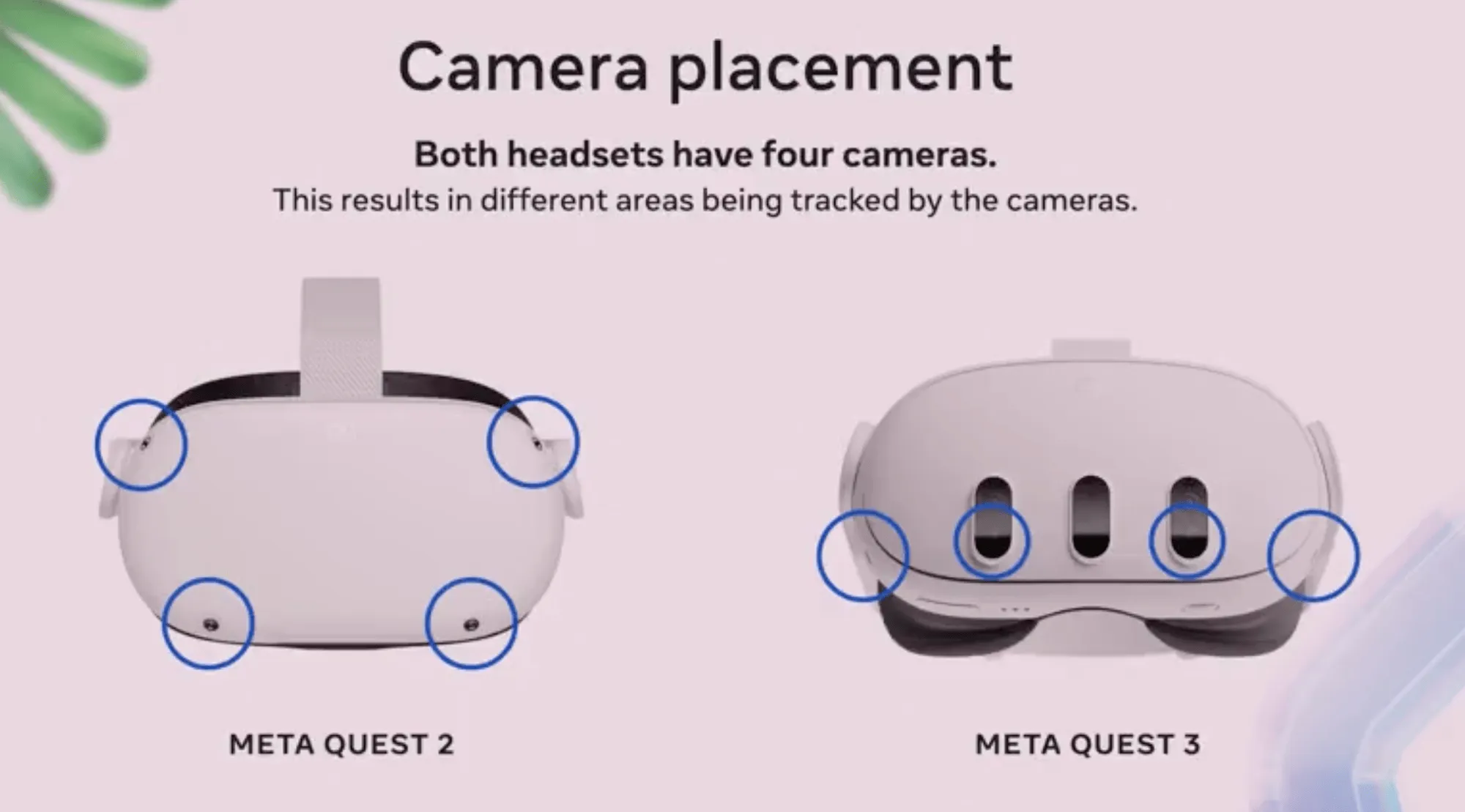

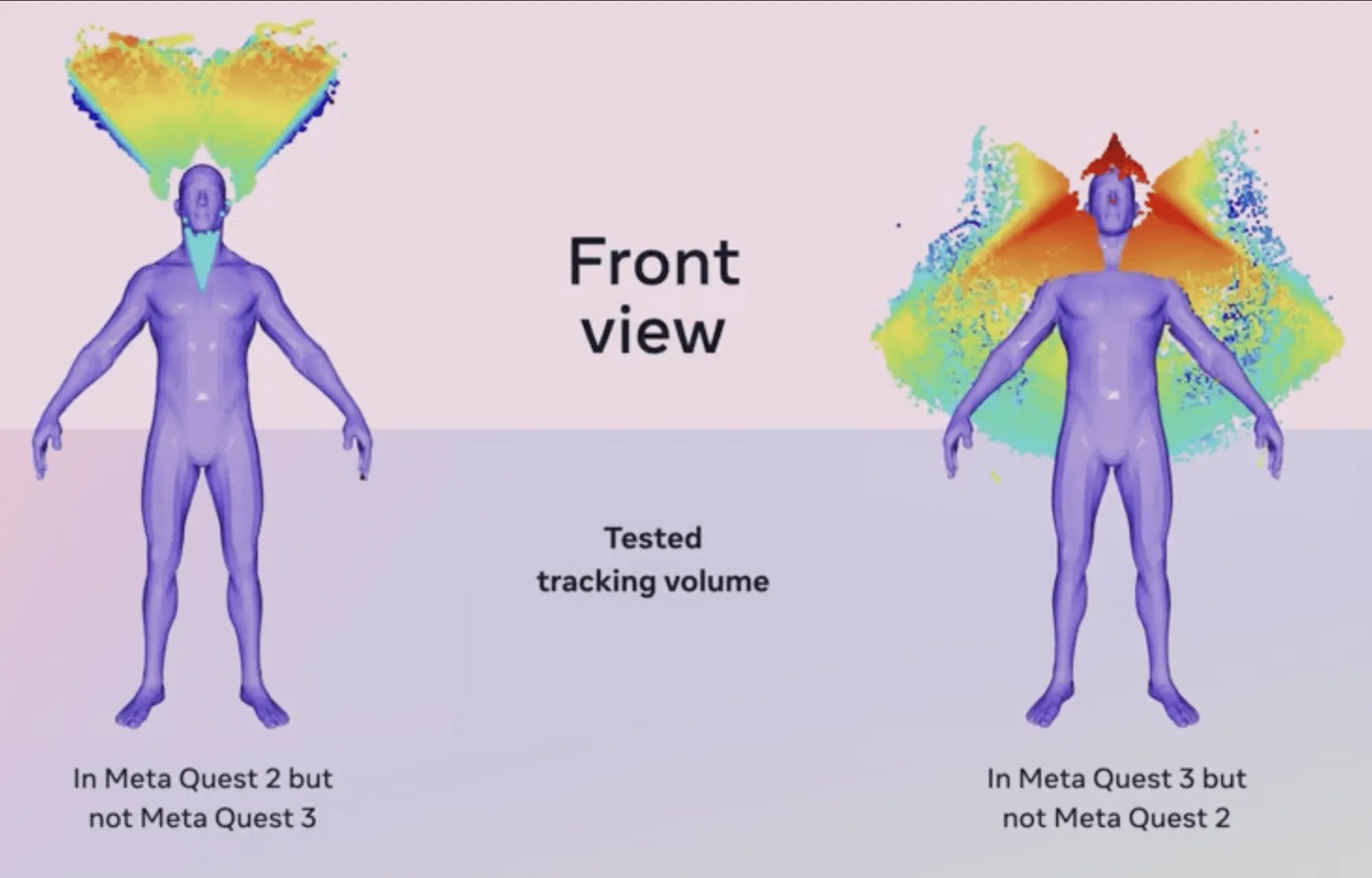

The tracking range of the controllers in Quest 3 has also been adjusted compared to Quest 2. Both Quest 2 and Quest 3 have 4 tracking cameras. The difference is that Quest 2 placed them in the corners of the headset, while Quest 3 places all 4 cameras on the lower part of the headset: two in the outer corners and two near the user’s eyes.

The most obvious consequence of this change is that the Quest 3 can’t track a controller held directly above the user’s head, and is less able to track a small area directly under the user’s chin. The upside is that the Quest 3’s tracking range toward the user’s sides and back has been greatly improved, allowing it to keep tracking the controllers when the user extends their hands out of view behind their body. The natural question is: what if the user holds a controller above their head? Using only IMU data and AI modeling, Quest 3 can predict the position of the controller above the user’s head very accurately for a short period of time. According to Meta’s research, it is very rare for users to hold their hands above their head for long, as this is very tiring for the arms. The trade-off, less tracking in these extreme situations in exchange for a larger tracking range that is likely to be used more often, must have been well thought out by Meta.

In terms of gesture recognition, Quest 3 has a 75% improvement in latency for high-speed gestures compared to Quest 2, which means that interactions such as VR boxing, which require accurate gesture recognition at high speeds, will get a big boost.

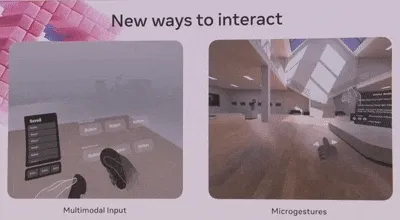

Another highlight is that Quest 3 now supports Multimodal Input, which allows users to use a controller and hand gestures for input at the same time. With Multimodal Input, users can hold a controller in one hand and use hand tracking with the other to interact directly with virtual objects; users can also tap virtual buttons in space with a finger of the hand that is holding the controller. In addition, Quest 3 offers Microgestures interactions, which allow users to enter commands with only small finger movements.

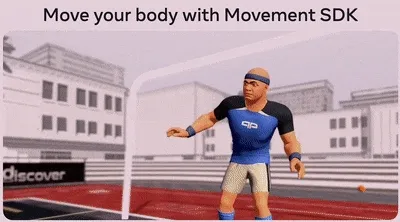

Using the Movement SDK, Quest 3 can track the movements of the user’s upper body through the downward-facing cameras on the headset. Beyond the upper body, Meta’s machine learning model, trained on a large amount of data captured from real people, allows the system to infer the posture and movement of the user’s lower body. These updates make it possible to develop applications that require accurate recognition of a user’s body posture, so maybe you’ll be able to play sandbagging in VR in the future.

With that said, let’s take a look at what new tools Meta has to offer to help developers create more and better MR apps. After all, MR is an emerging technology that is immature in terms of hardware and content. A thriving developer ecosystem will play a key role in MR’s growth in the coming years.

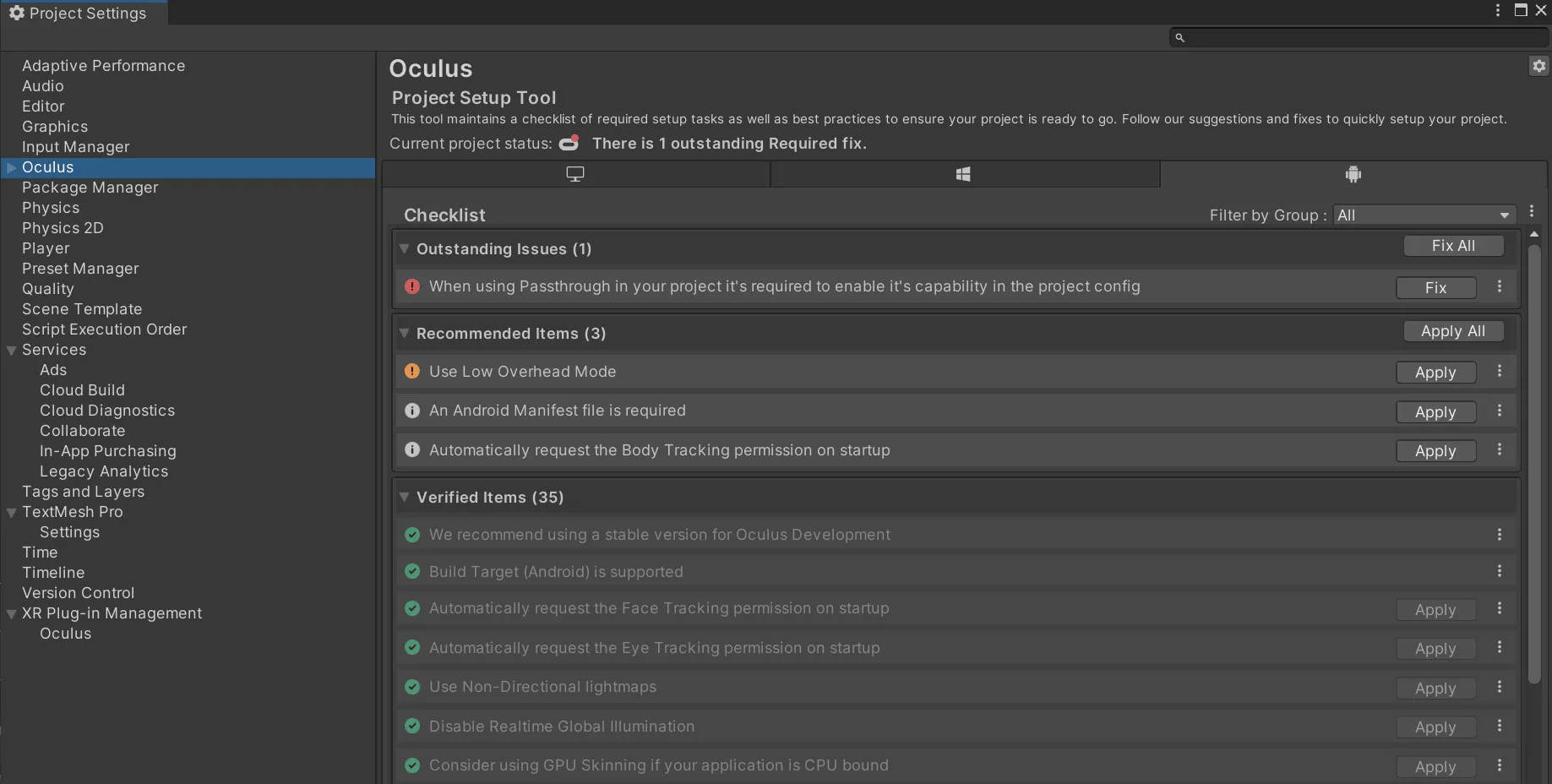

First up are two tools that make Unity XR development easier: Unity Project Setup Tool and Building Blocks. Project setup for Unity XR projects can be very tedious, sometimes taking hours to complete (anyone who has watched a project repeatedly fail to build because of project setup issues knows what it’s like). With the Project Setup Tool, developers can automate project setup with just a few clicks.

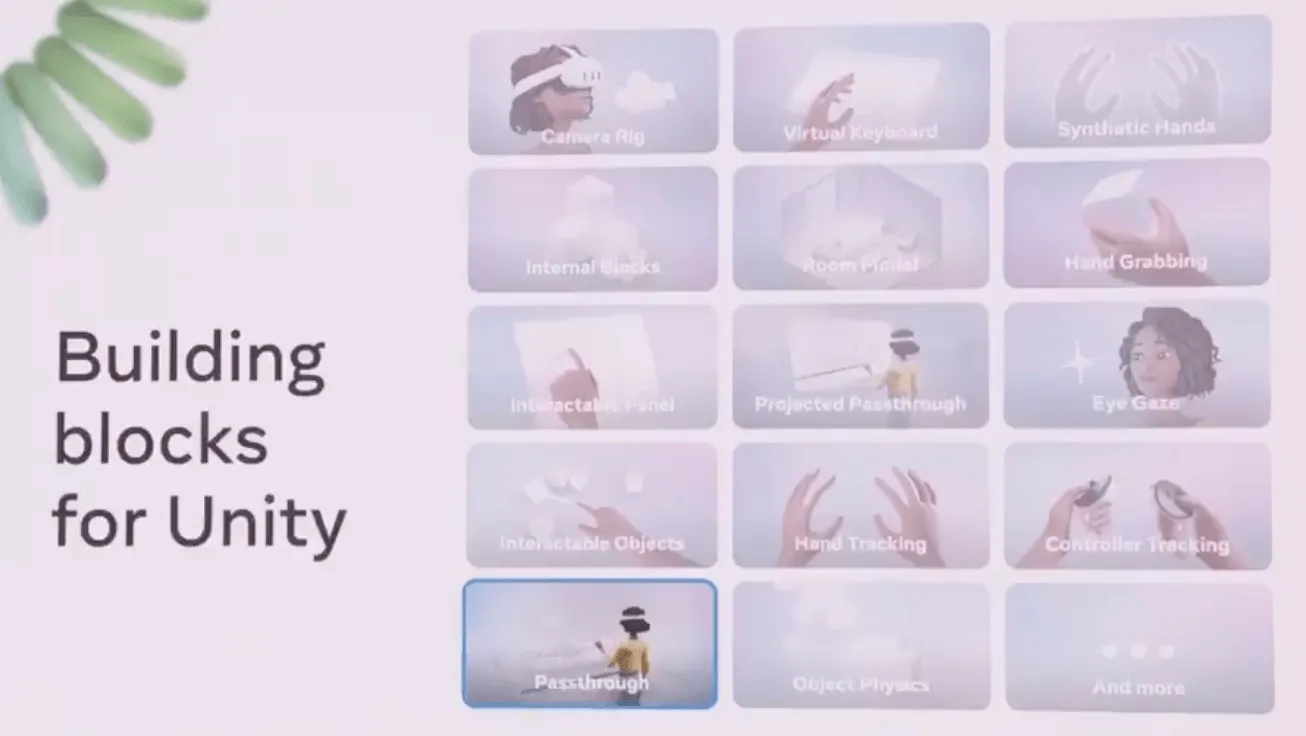

Building Blocks provides ready-made functional components in the Unity Editor, such as Camera Rig and Passthrough. Unity developers can simply drag and drop these components into a project to get the corresponding functionality.

Next up are two tools to speed up XR project development: the Mixed Reality Utility Kit and the Meta XR Simulator. The challenge with MR app development is that developers can’t predict the user’s environment, and there is no quick and easy answer to the question of where to place virtual objects. With the Mixed Reality Utility Kit, developers can directly invoke Spatial Queries to ensure that virtual objects are placed in the desired location in any environment. The tool also provides several pre-scanned environment maps so developers can test the program’s behavior in environments other than their own office. The Mixed Reality Utility Kit will be available at the end of this year with support for both the Unity and Unreal engines.
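To illustrate what a spatial query has to decide, here is a minimal Python sketch. It does not use the Mixed Reality Utility Kit: the `Surface` type, the labels, and the `find_placement` function are hypothetical stand-ins for scanned scene data, and the best-fit rule is just one reasonable choice.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Surface:
    """A flat surface found in the scanned room (hypothetical representation)."""
    label: str        # e.g. "TABLE" or "FLOOR" (illustrative labels)
    width_m: float
    depth_m: float
    height_m: float   # height of the surface above the floor


def find_placement(surfaces: List[Surface],
                   object_width_m: float,
                   object_depth_m: float,
                   preferred_labels: Sequence[str] = ("TABLE",)) -> Optional[Surface]:
    """Return the smallest surface the object's footprint fits on.

    Surfaces with a preferred label win over all others; None means that
    nothing in this room is large enough.
    """
    def fits(s: Surface) -> bool:
        # Allow the object to be rotated by 90 degrees on the surface.
        return ((s.width_m >= object_width_m and s.depth_m >= object_depth_m) or
                (s.width_m >= object_depth_m and s.depth_m >= object_width_m))

    candidates = [s for s in surfaces if fits(s)]
    if not candidates:
        return None
    preferred = [s for s in candidates if s.label in preferred_labels]
    pool = preferred or candidates
    return min(pool, key=lambda s: s.width_m * s.depth_m)


room = [
    Surface("FLOOR", 4.0, 3.0, 0.0),
    Surface("TABLE", 1.2, 0.6, 0.75),
    Surface("TABLE", 0.4, 0.4, 0.5),
]
```

In this sample room, a 0.5 m by 0.5 m board game lands on the larger table, a 2 m by 2 m play area falls back to the floor, and a 5 m by 5 m object gets `None`, so the app can ask the user to move or scale the content down. Running the same query against several different rooms is the kind of test the pre-scanned environment maps make possible.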

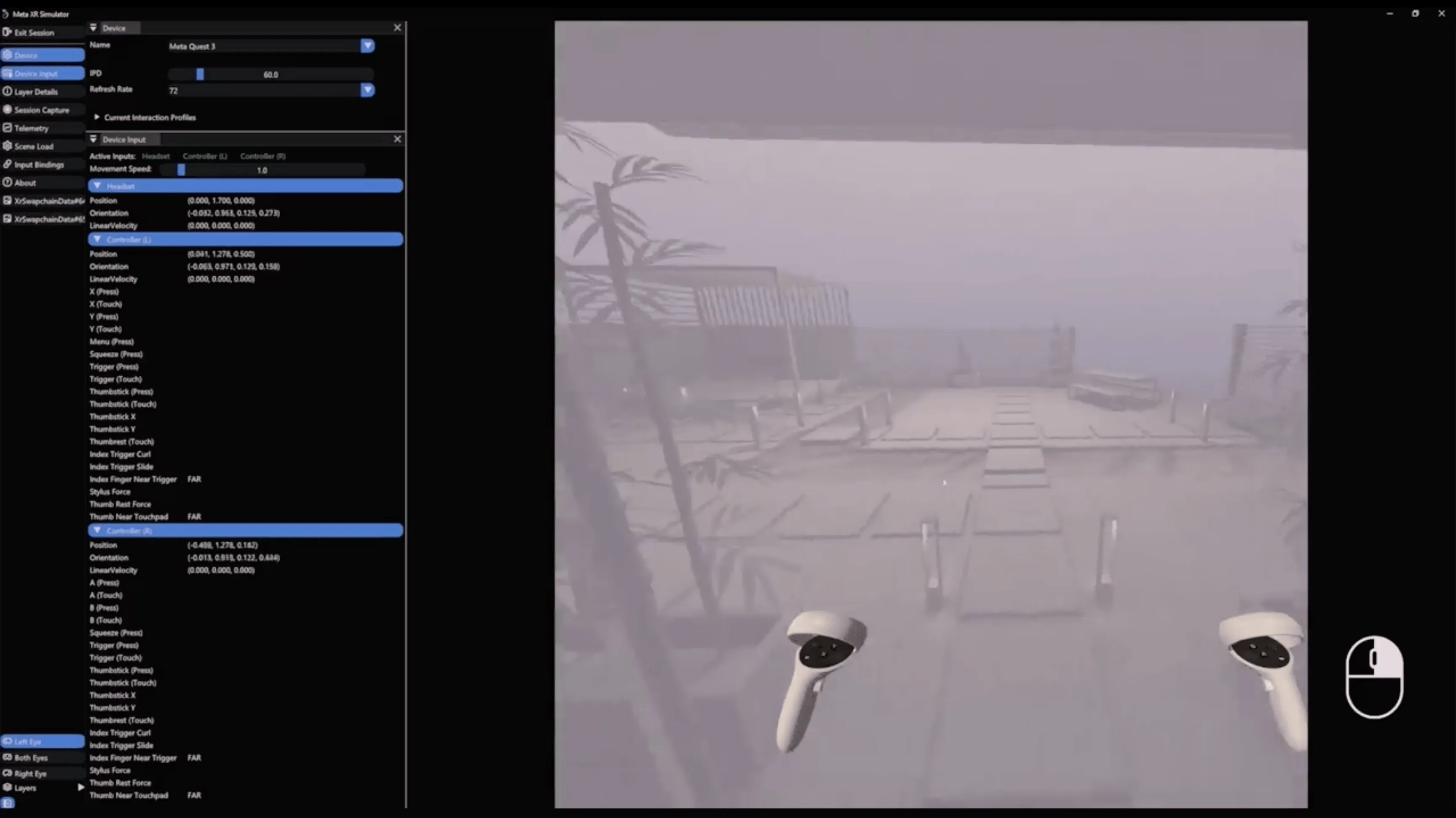

Meta XR Simulator is an XR project testing tool for PC and Mac that lets developers test XR apps without a headset, which makes development iterations more efficient (the kind of development where you change two lines of code, build to the headset, realize you forgot to change a variable, and then change it and build again is not very efficient). Developers can simulate input from the Quest headset on their desktop with a keyboard and mouse or even an Xbox controller. The tool provides some synthetic environment maps for testing MR applications in different environments.

One of the highlights of the Meta XR Simulator is Session Capture, which allows a series of user inputs to be recorded as a “video” that can be replayed over and over again in subsequent tests to speed up testing.
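The record-and-replay idea behind Session Capture can be sketched in plain Python. This is not the simulator’s file format or API: the event tuple, the `SessionRecorder` class, and the JSON serialization are assumptions chosen to keep the example self-contained.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# (timestamp in seconds, input name, value) -- a hypothetical event layout
InputEvent = Tuple[float, str, float]


@dataclass
class SessionRecorder:
    """Collects timestamped input events during a manual test run."""
    events: List[InputEvent] = field(default_factory=list)

    def record(self, timestamp_s: float, name: str, value: float) -> None:
        self.events.append((timestamp_s, name, value))

    def dumps(self) -> str:
        """Serialize the recording so it can be stored next to the tests."""
        return json.dumps(self.events)


def load_events(text: str) -> List[InputEvent]:
    """Load a recording produced by SessionRecorder.dumps()."""
    return [(float(t), str(name), float(value)) for t, name, value in json.loads(text)]


def replay(events: List[InputEvent],
           handler: Callable[[float, str, float], None]) -> int:
    """Feed the events to the app's input handler in timestamp order.

    Returns the number of events replayed.
    """
    count = 0
    for timestamp_s, name, value in sorted(events, key=lambda e: e[0]):
        handler(timestamp_s, name, value)
        count += 1
    return count
```

A test would record a session once, save the serialized string, and then call `replay` with the app’s input handler on every later run, so the same sequence of inputs exercises each new build.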

Any developer with experience in multiplayer games will know that testing them is not an easy task, and the problem is even harder in multiplayer MR projects. In addition to testing network connectivity, synchronizing spatial coordinates across multiple devices takes time to set up. Meta XR Simulator thoughtfully supports multiplayer testing by running several instances of the app on your computer at the same time to simulate network connectivity across multiple devices. Even better, these instances can share a virtual synthetic MR space, which solves the problem of synchronizing spatial coordinates, so Meta has really put a lot of thought into testing multiplayer MR apps. Meta XR Simulator supports both the Unity and Unreal engines. For hardcore developers, Meta also provides a native C++ interface.

More good news is that it is now possible to develop MR apps on Quest using ARFoundation, the official cross-platform AR development framework provided by Unity, which allows projects to be deployed to multiple platforms at the same time. Meta’s efforts in cross-platform support are really something to behold. As they said at the launch event: your ecosystem, your engine, your choice.

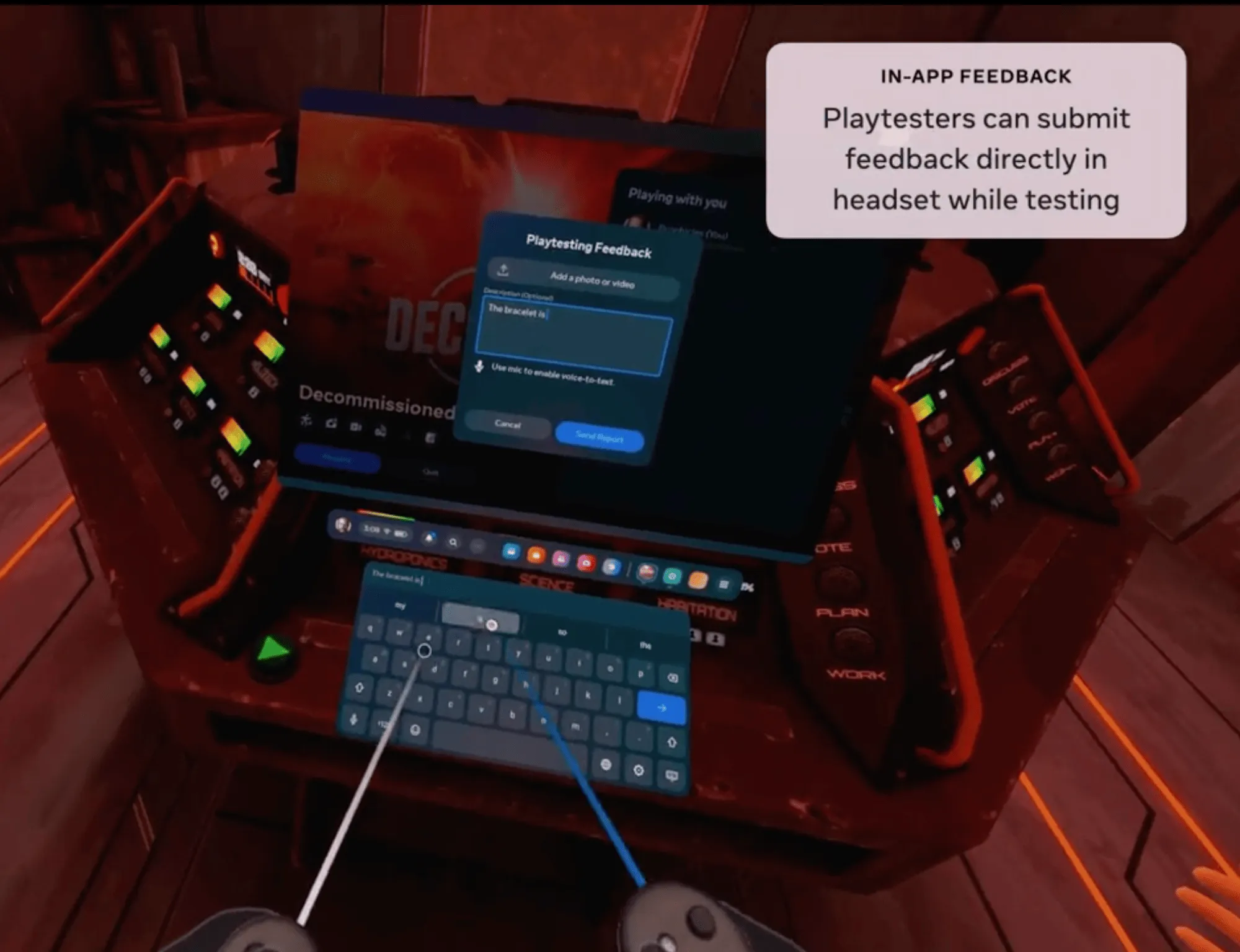

Meta also provides a number of other tools to help with testing. Release channel URLs provide a feature similar to Apple’s TestFlight: developers can share early builds of a project via a URL link to get more people to participate in testing. In-app feedback allows testers to submit feedback directly in-game while wearing a headset, and automatically attaches the tester’s device model and build number to the feedback, allowing developers to filter feedback by specific devices and specific build numbers. And instead of scrambling to fix a bug and upload a new version, developers can roll back to any previous version of the app and then take their time to troubleshoot the cause of the bug.

Despite all this substantial news, Meta’s AI replaced XR as the main character in the Keynote of this conference. A few bloggers took offense, such as TonyVT (SkarredGhost), who said, “There were no surprises, and the AI completely stole the show.”

Indeed, in the hour-and-a-half-long Keynote, there was less than 20 minutes of Quest and VR/MR related content. The rest of the time was dedicated to Meta AI-related content, which undoubtedly made many Quest fans feel “sad”.

However, looking at the sessions, content related to the Quest platform still accounted for the majority (an 11:1:5 ratio of Quest, Spark AR and AI sessions), so there is no need to be too discouraged.

Like Apple’s WWDC, Meta Connect is a developer conference: in addition to the Keynote, it also offers a number of sessions that expand on the details.

The software features above are just a few of the things we found interesting in the sea of updates, so if you’re looking for more details, we’d recommend checking out the official sessions.

Over the course of the two-day conference, Meta offered a number of these sessions related to Quest and Spark AR (thank goodness there were only 12, not as many as the 46 spatial computing sessions at Apple’s WWDC):

| Day | Session | Main Content |
|---|---|---|
| Day 1 | State of Compute: Maximizing Performance on Meta Quest | How to optimize performance on Quest 2, Quest Pro, and Quest 3. |
| | Growing Your VR Business on Meta Quest | Case studies from third-party developers on how to build a business model around VR and gain exposure. |
| | Beyond 2D: How Kurzgesagt Scaled to VR | Kurzgesagt is a well-known popular science channel on YouTube (with 20 million subscribers). In this session, Kurzgesagt describes how they created Out Of Scale, a very interesting VR science game. |
| | Action, Reaction: Turning Inputs Into Interactions | How to use Meta’s latest technologies, such as Hands, for more natural inputs. |
| | Discover the World of Meta for Work | Explores three areas: why businesses should use MR, how to innovate on Meta’s platform, and what to look forward to later in the year. |
| Day 2 | Meta Quest 3: Realizing Your Creative Potential | Various hardware updates and some new APIs for Quest 3. |
| | Unlocking the Magic of Mixed Reality | Presence Platform is an umbrella term for a series of Mixed Reality SDKs that Meta launched in 2021. This session introduces the highlights of the Presence Platform’s capability updates. |
| | Everything You Need to Know to Build on Meta Quest | Introduces the Meta Quest Developer Hub, playback testing, performance testing, and Meta XR Simulator. |
| | What’s New in Meta Spark on Mobile | Introduces the latest developments and updates to Spark, Meta’s AR platform for mobile phones. |
| | Get Moving: The Latest from Movement SDK | Describes how to use the Movement SDK’s Body Tracking, Face Tracking and Eye Tracking to enhance the user’s social experience, and how to use motion data to provide a more realistic interaction experience. |
| | Building Mixed Reality Experiences with WebXR | Introduces Meta’s latest tools for WebXR, such as the Immersive Web Emulator and Reality Accelerator Toolkit. |
| | Breaching the VR Gaming Market: How Breachers Broke Through | Using Breachers, a multiplayer shooter, as an example, learn how Triangle Factory built the game and released a new version based on a community-centered development process. |

Authors of this article: Yuchen and Onee

If you found this article helpful, you are welcome to get in touch with us on X or at xreality.zone.

Recommended Reading

- What Is Spatial Video On iPhone 15 Pro And Vision Pro

- Open Source Framework RealityShaderExtension: Transfer Shaders from Unity and Unreal to visionOS - Writing Shaders on visionOS Made Easy

- Before developing visionOS, you need to understand the full view of Apple AR technology

- Advanced Spatial Video Shooting Tips

- Beginner's guide to AR: Exploring Augmented Reality With Apple AR Tools

- Unlocking the Power of visionOS Particles: A Detailed Tutorial

- A 3D Stroke Effect: Getting Started with Shader Graph Effects on visionOS - Master Shader Graph Basics and Practical Skills

XReality.Zone