What Is Spatial Video On iPhone 15 Pro And Vision Pro

XRealityZone is a community of creators focused on the XR space, and our goal is to make XR development easier!

This article was first published on xreality.zone.

For most consumers, the “spatial video” mentioned at Apple’s fall event may be just one of many features that did not stand out. That is probably because, apart from this short segment of the keynote, there was little to show us what spatial video will actually look like:

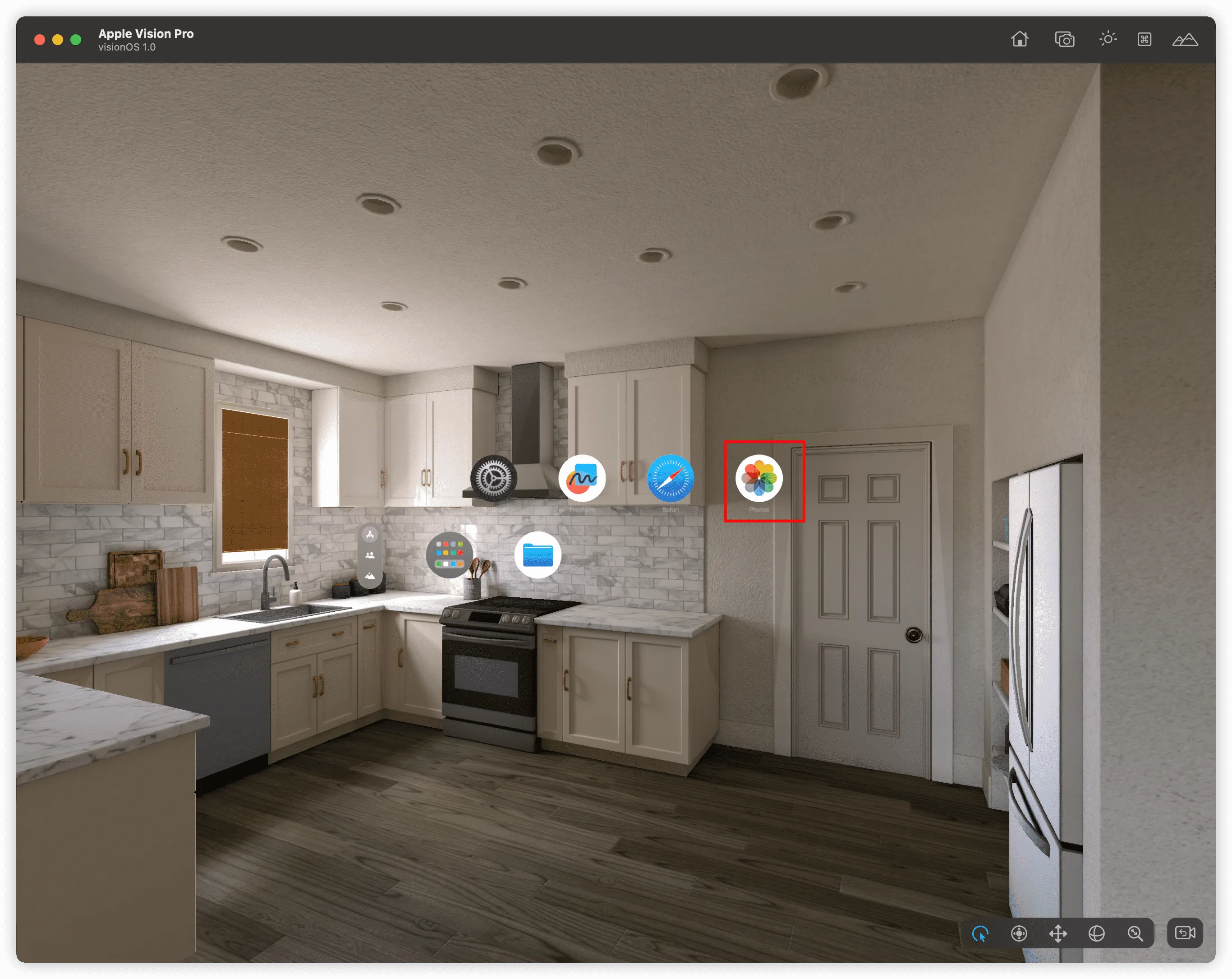

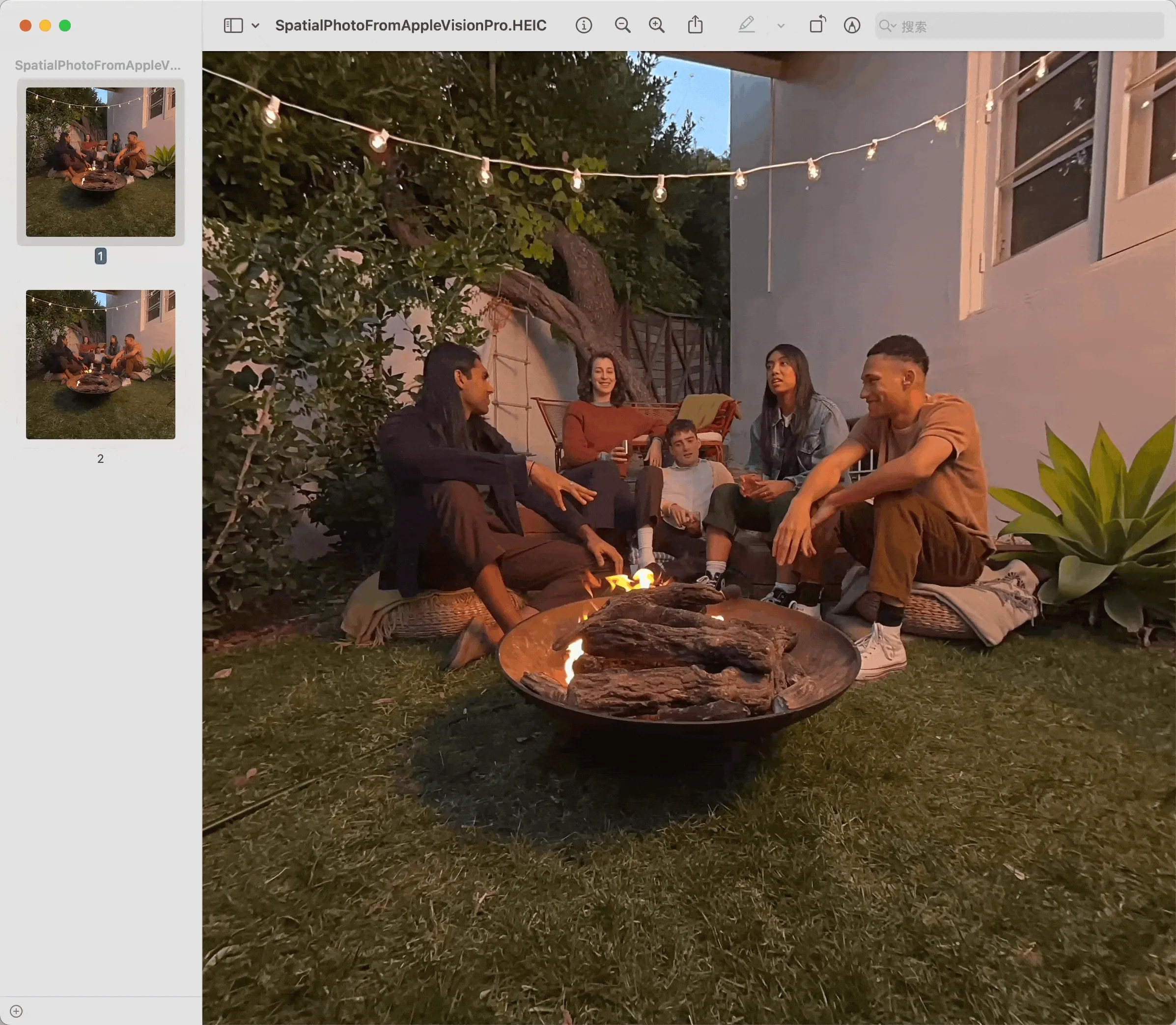

For now, we still can’t watch a “spatial video” ourselves. However, starting with Xcode 15 beta 8, developers can get a rough idea of what viewing a “spatial photo” is like through the startup screen of the built-in Photos app in the visionOS simulator:

In this article, we will cover three things related to “spatial” media:

- How to experience spatial photos in the simulator

- What is spatial video/photo

- What did Apple do

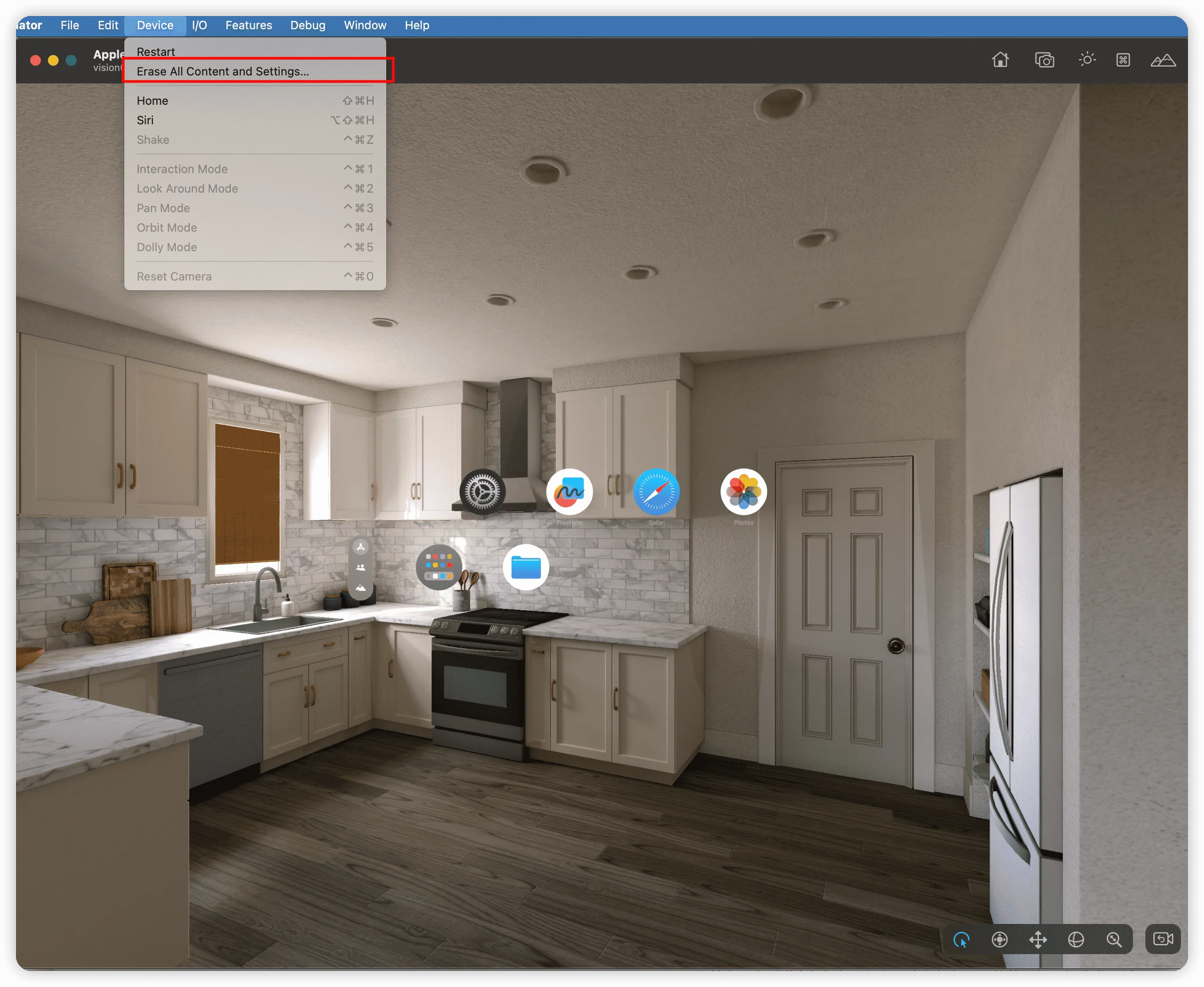

How to experience spatial photos in the simulator

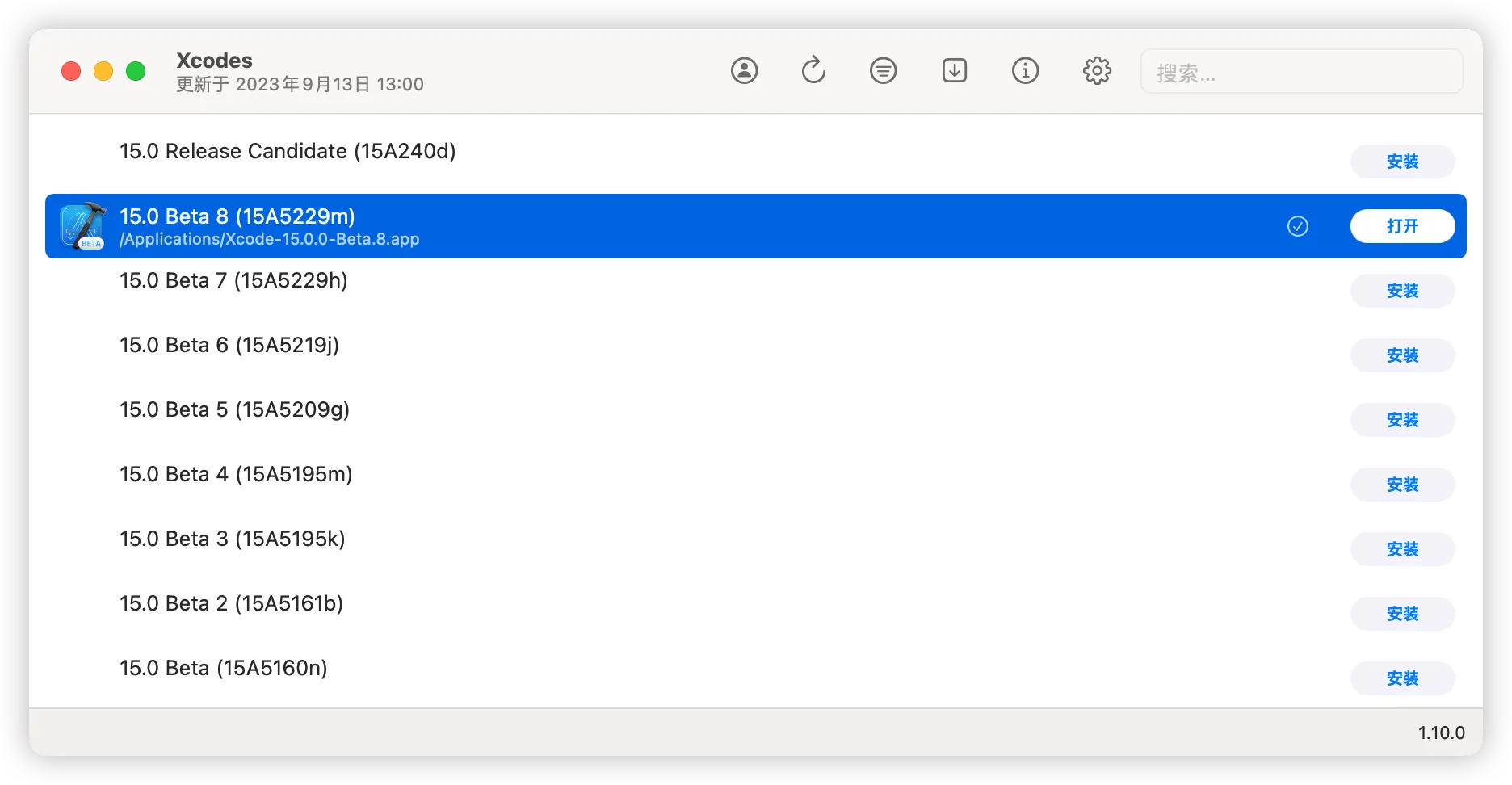

First, download an app called Xcodes. Sign in with your Apple ID in the app, then select a beta version of Xcode from the list to download. Here we chose Xcode 15 beta 8 (at the time of writing, only beta versions of Xcode can install and run the visionOS simulator).

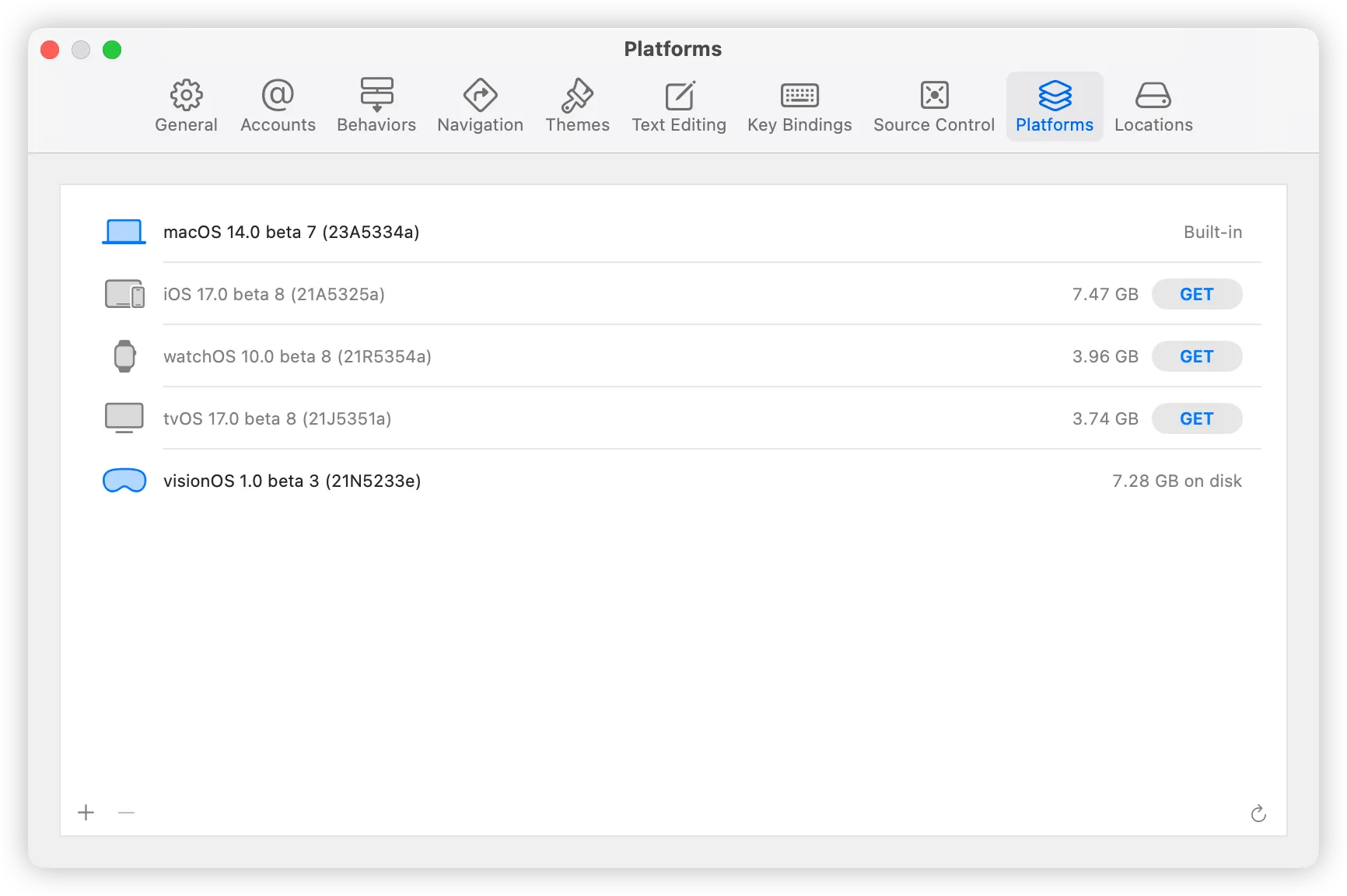

Once Xcode 15 beta 8 is installed, you can install the matching visionOS SDK and simulator under Settings -> Platforms:

Open the simulator and launch the Photos app. When it starts, you will see the spatial photo example shown at the beginning of this article:

Tip

If you accidentally dismiss this startup screen, reset the simulator from the simulator’s menu bar to see it again.

However, since the simulator can only display flat 2D content, we cannot perceive much of a 3D effect in it. To explain why, we need to move on to the next topic.

What is spatial video/photo

If you have watched a 3D movie in a cinema, you have, in a sense, already experienced spatial video. Avatar, released in 2009, introduced the general public to this new 3D viewing experience.

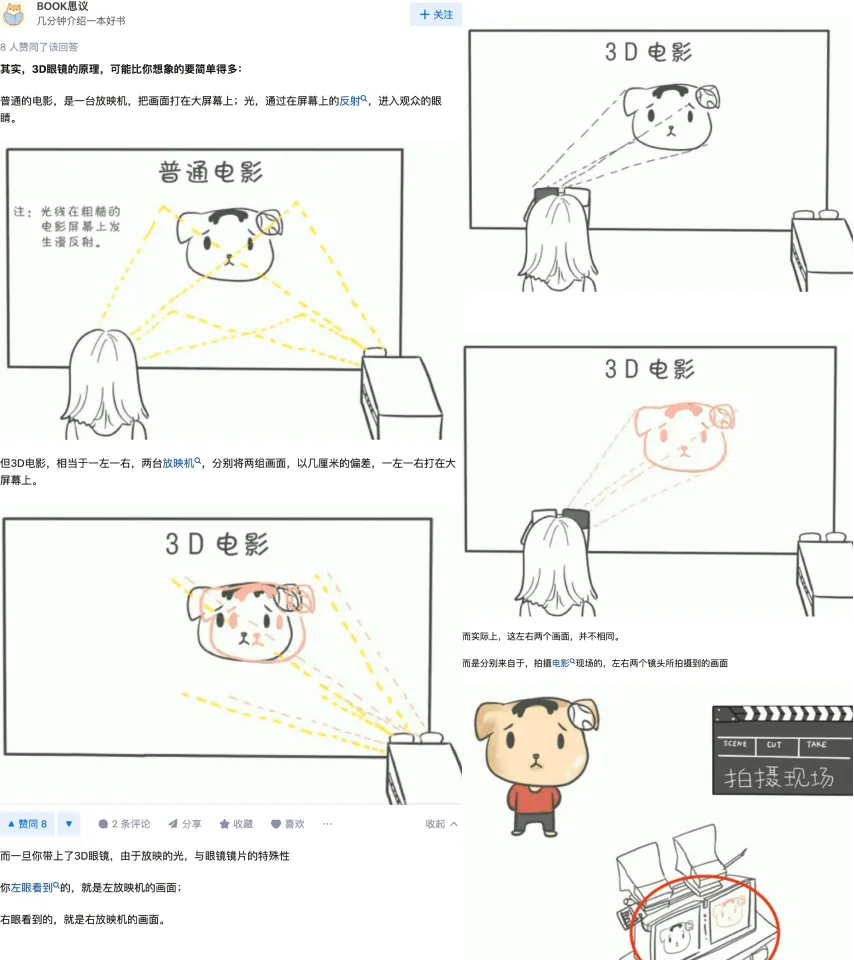

Over the past decade or so, most people have come to understand the basic principle behind 3D movies: they exploit the parallax between our two eyes. The movie is shot with two cameras and screened with two projectors. An answer by Zhihu user BOOK explains the principle simply and clearly:

Beyond the theory, 3D movies also need a specific format for storage. In practice, we generally see two kinds of 3D video formats:

- Anaglyph 3D

- Split Screen 3D

Let’s start with anaglyph 3D. It uses filters of different colors (usually red and cyan) to color-code the image for each eye and blends the two into a single picture, which produces a 3D effect when viewed through matching colored glasses. The name comes from “anaglyph”, a carving in low relief, because to the naked eye the result looks somewhat like one. If you were given 3D glasses with red and blue lenses at the cinema, this is the kind of picture you would see after taking them off:

This form of 3D video is generally seen in cinemas.
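To make the color mixing concrete, here is a minimal sketch of one simple red-cyan recipe, assuming 8-bit RGB images stored as nested lists of (r, g, b) tuples: the red channel is taken from the left-eye image and the green and blue channels from the right-eye image. Real anaglyph tools often use more elaborate color matrices to reduce ghosting, and `make_anaglyph` is a hypothetical name, not part of any library.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each 0-255
Image = List[List[Pixel]]     # rows of pixels


def make_anaglyph(left: Image, right: Image) -> Image:
    """Merge a stereo pair into one red-cyan anaglyph (hypothetical helper).

    Red comes from the left-eye image (seen through the red lens);
    green and blue come from the right-eye image (seen through the cyan lens).
    """
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have the same dimensions")
    return [
        [(l_px[0], r_px[1], r_px[2]) for l_px, r_px in zip(l_row, r_row)]
        for l_row, r_row in zip(left, right)
    ]


left_eye = [[(200, 10, 10), (50, 50, 50)]]
right_eye = [[(0, 120, 240), (60, 70, 80)]]
print(make_anaglyph(left_eye, right_eye))  # [[(200, 120, 240), (50, 70, 80)]]
```

Each eye's filter then blocks most of the other eye's channels, which is how a single flat picture can deliver two different images.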

The other form is split screen 3D, which packs the left-eye and right-eye images into the same video frame. In practice, there are two layouts: Side by Side and Top and Bottom:

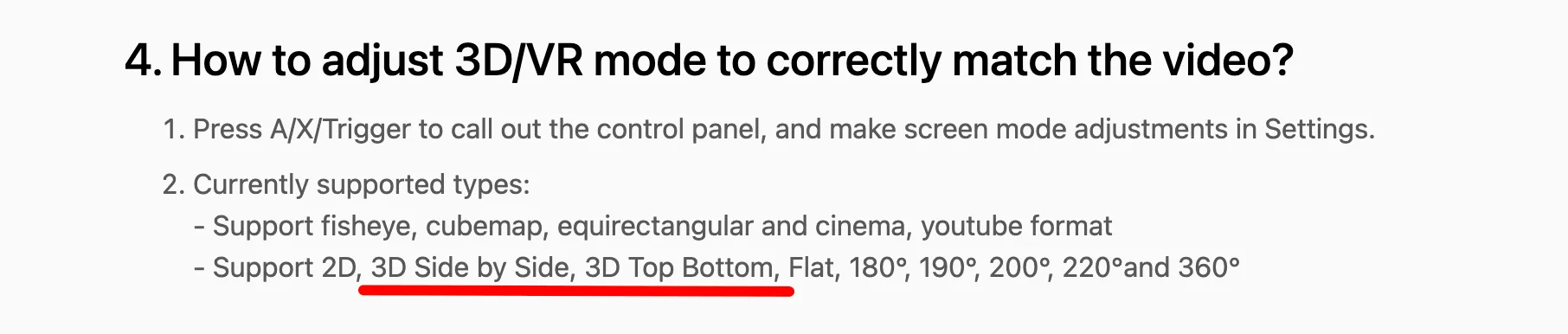
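Unpacking these layouts is just slicing. The sketch below assumes frames stored as nested lists of pixel values and the common convention that the left eye occupies the left (or top) half. Some files swap the eyes, and in “half” side-by-side files each view is also squeezed to half width, so the player stretches it back. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Frame = List[List[int]]  # rows of pixel values


def split_side_by_side(frame: Sequence[Sequence[int]]) -> Tuple[Frame, Frame]:
    """Split a Side by Side frame: left half -> left eye, right half -> right eye."""
    width = len(frame[0])
    if width % 2:
        raise ValueError("side-by-side frame width must be even")
    half = width // 2
    left = [list(row[:half]) for row in frame]
    right = [list(row[half:]) for row in frame]
    return left, right


def split_top_and_bottom(frame: Sequence[Sequence[int]]) -> Tuple[Frame, Frame]:
    """Split a Top and Bottom frame: top half -> left eye, bottom half -> right eye."""
    height = len(frame)
    if height % 2:
        raise ValueError("top-and-bottom frame height must be even")
    half = height // 2
    top = [list(row) for row in frame[:half]]
    bottom = [list(row) for row in frame[half:]]
    return top, bottom


sbs = [[1, 2, 3, 4],
       [5, 6, 7, 8]]
print(split_side_by_side(sbs))    # ([[1, 2], [5, 6]], [[3, 4], [7, 8]])

tab = [[1, 2], [3, 4], [5, 6], [7, 8]]
print(split_top_and_bottom(tab))  # ([[1, 2], [3, 4]], [[5, 6], [7, 8]])
```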

This form of 3D video is common in video players on PCs, mobile devices, and VR headsets. For example, the 3D mode of the popular iOS player nPlayer and the well-known VR headset player MoonVR both support split screen 3D:

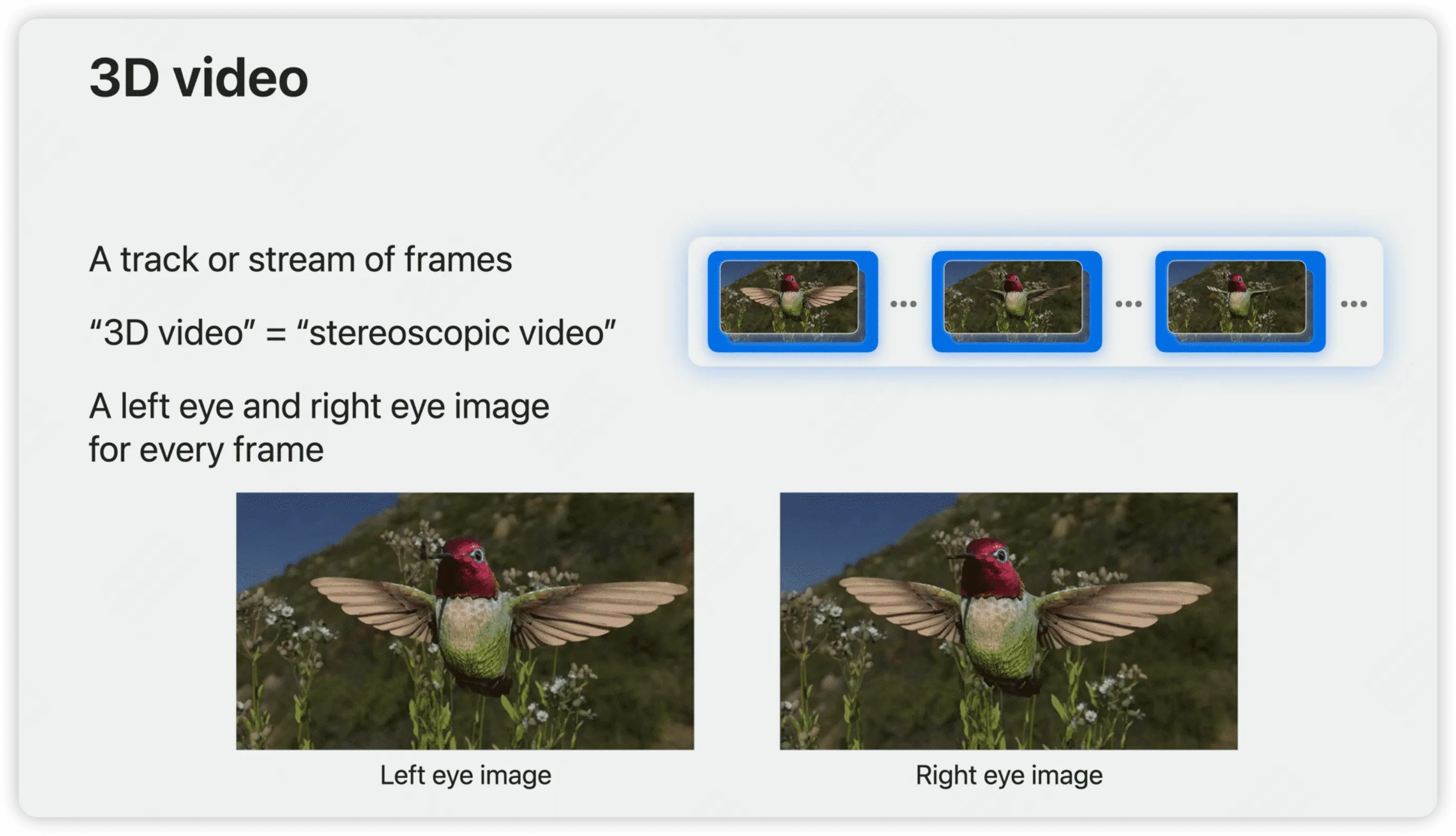

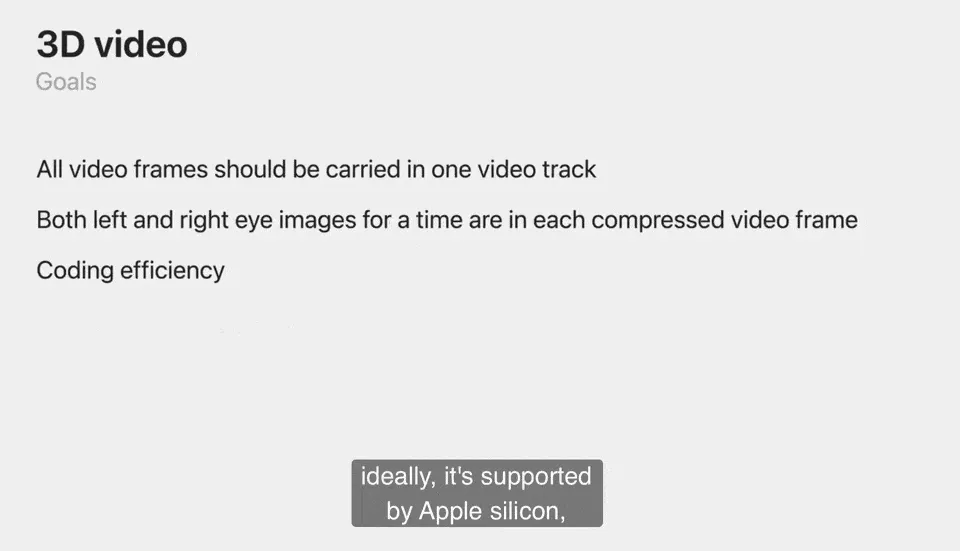

The “spatial video” announced at this Apple event is not a particularly novel technology. Like the 3D movies we have seen before, it relies on the parallax between the left and right eyes to create a sense of depth. WWDC Session 10071 explains 3D video as follows:

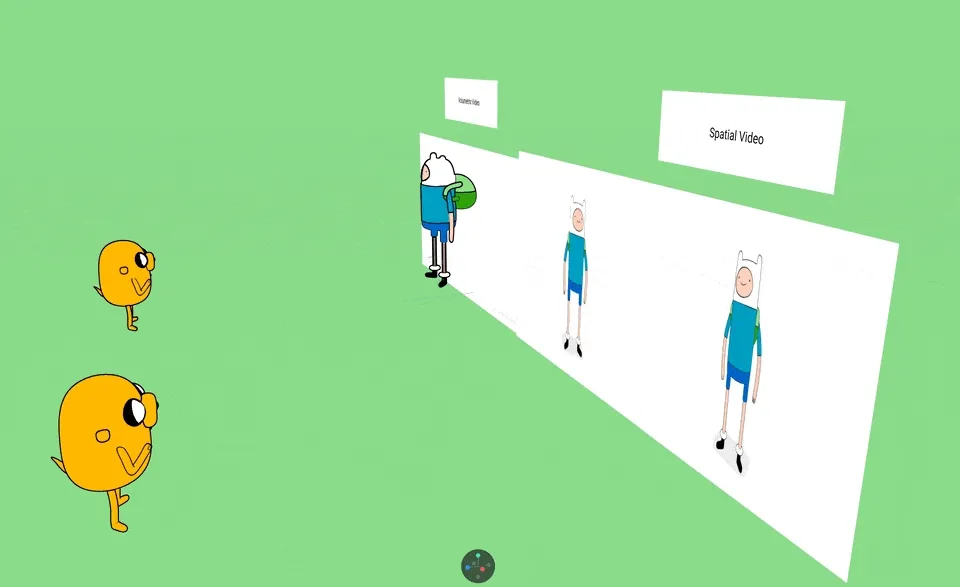

Spatial photos work the same way: two photos taken from slightly different positions exploit the parallax of human vision to produce a 3D effect. Some 3D cameras can also capture “3D photos” analogous to 3D videos (the following photos are from the QooCam EGO sample gallery):

With a bit of inspection, we can find that the spatial photo on the simulator’s startup screen is simply a HEIC image that stores two photos in one file. The two photos are nearly identical overall, but because they were taken from slightly different positions, there is a small parallax between them.
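That parallax appears as a small horizontal shift, called disparity, between matching pixels. The sketch below estimates it for one pixel by block matching: it slides a small window along the same row of the other image and keeps the shift with the lowest sum of absolute differences (SAD). It assumes a rectified pair (matching points lie on the same row, shifted left in the right-eye image) and plain lists of grayscale values; `estimate_disparity` is a hypothetical helper, not how Apple processes spatial photos.

```python
from typing import Sequence


def estimate_disparity(left_row: Sequence[int], right_row: Sequence[int], x: int,
                       window: int = 2, max_disparity: int = 8) -> int:
    """Estimate the disparity at column x of left_row by SAD block matching.

    Assumes a rectified pair: a point at column x in the left image appears
    at column x - d in the right image, on the same row.
    """
    if x - window < 0 or x + window >= len(left_row) or len(left_row) != len(right_row):
        raise ValueError("window must fit inside rows of equal length")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if x - d - window < 0:
            break  # candidate window would fall off the left edge
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-window, window + 1))
        if cost < best_cost:  # strict "<" keeps the smallest shift among ties
            best_d, best_cost = d, cost
    return best_d


left = [0, 0, 0, 0, 0, 0, 9, 5, 7, 0, 0, 0]
right = [0, 0, 0, 9, 5, 7, 0, 0, 0, 0, 0, 0]  # same pattern, 3 pixels to the left
print(estimate_disparity(left, right, x=7))  # 3
```

Nearby objects produce larger disparities than distant ones, which is exactly the cue our visual system turns into depth.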

Now that you understand what “spatial video” and “spatial photo” are, you may be wondering: Apple did not invent any new technology here, and the whole thing looks rather “ordinary”. Have we been “tricked” again?

Tip

We call it “ordinary” because another type of “3D video”, volumetric video, is arguably more technically advanced than spatial video. For example, Wist, the app we introduced in XR World Guide 002, is a representative volumetric video app.

Because volumetric video records the depth of the captured real-world scene, you can perceive a strong sense of depth even on a flat 2D screen, simply by moving the viewpoint up, down, left, and right.

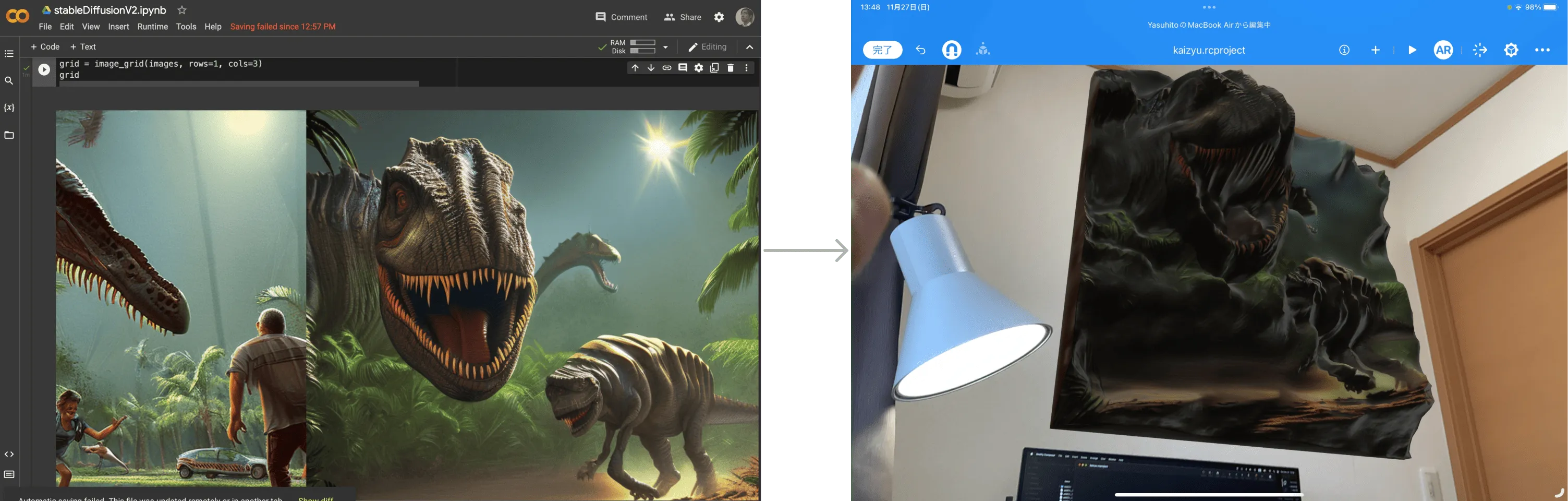

Admittedly, depth information can be derived from two photos taken from different viewpoints, and some AI models can even estimate depth from a single photo. For example, developer Yasuhito Nagatomo shared an experiment along these lines in this tweet. That is beyond the scope of this article, so we only mention it briefly.
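For the curious, the two-photo case comes down to one formula. In a rectified stereo pair, a point’s depth Z follows from similar triangles: Z = f · B / d, where f is the focal length in pixels, B the baseline (distance between the two cameras), and d the disparity in pixels. A minimal sketch, with made-up numbers rather than real iPhone or Vision Pro camera parameters:

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in meters of a point in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_length_px * baseline_m / disparity_px


# Made-up camera: f = 1000 px, B = 0.02 m (20 mm between the two lenses).
for d in (40.0, 10.0, 2.0):
    print(f"disparity {d:>4} px -> depth {depth_from_disparity(1000.0, 0.02, d):.2f} m")
```

Because depth is inversely proportional to disparity, a short baseline (two lenses close together) yields only small disparities for distant objects, so their depth is harder to resolve.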

What did Apple do

But let’s think about it calmly. Often, the complexity of a technology has little to do with its value; how widely it is adopted may matter more to the value it delivers.

Before camera-equipped smartphones appeared, photography and video recording were already very “ordinary” technologies, but they were largely limited to professionals. Once smartphones with cameras arrived, “taking photos” and “recording videos” became something anyone could easily do, which led to a corresponding explosion in the consumer market.

What Apple has done with spatial video and spatial photos is very similar to what smartphones did: bring a technology to the mass market. Apple has put a lot of effort into this on three fronts: production hardware, consumer hardware, and software.

On the production hardware side, before iPhone 15 Pro, shooting spatial videos or photos required either two cameras recording at the same time or a professional 3D camera such as QooCam EGO. Now, all you need is an iPhone 15 Pro / Pro Max. (Although overall smartphone sales have declined, iPhone 14 Pro/Pro Max still sold more than 40 million units worldwide in H1 2023. Imagine the impact a device at this scale could have on the adoption of spatial videos and spatial photos.)

On the consumer hardware side, Apple Vision Pro, arguably the most powerful standalone headset in the world, should provide the best hardware foundation for viewing spatial videos and spatial photos. Since you are probably already familiar with its hardware specifications, we won’t go into detail here.

On the software side, by building on HEVC (a video encoding format) and HEIF (an image format standard), Apple has greatly improved the compatibility of spatial videos and spatial photos.

Tip

Confused? Didn’t we just see a HEIC spatial photo? So what is HEIF? Don’t worry, we’ll go through these one at a time.

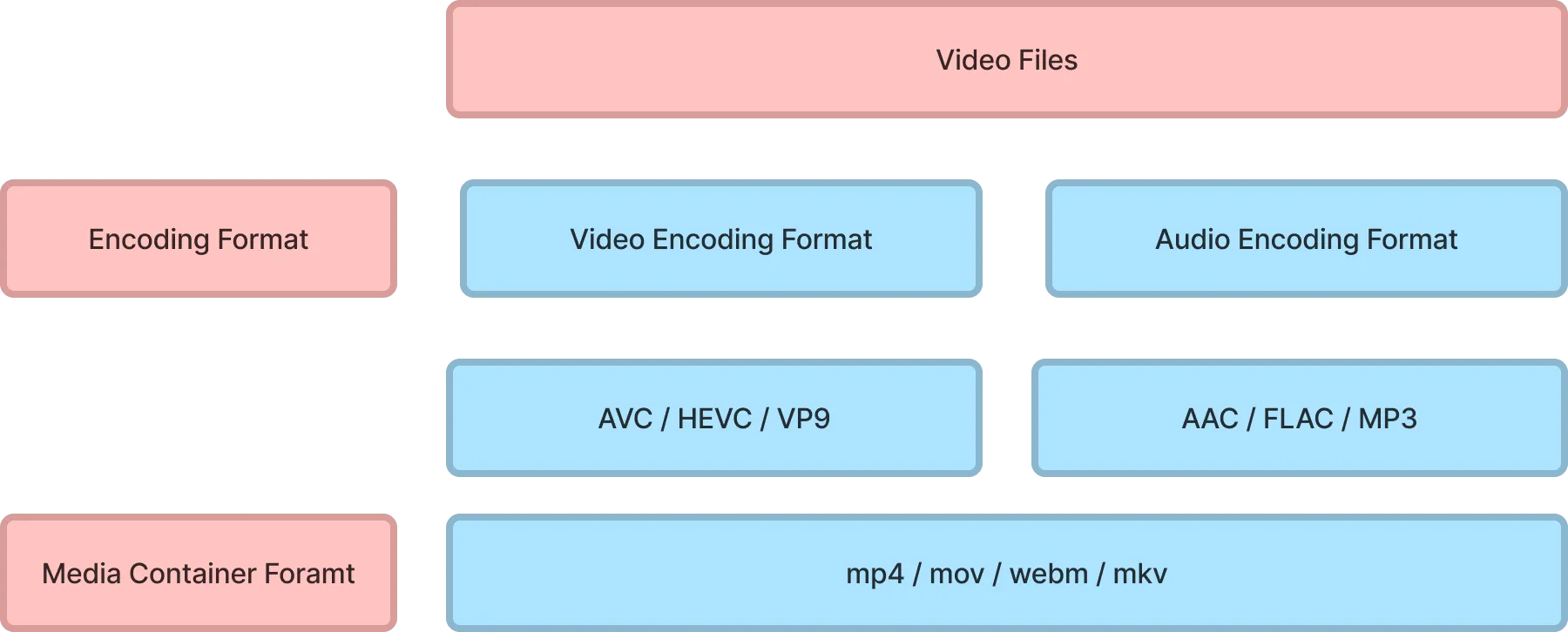

Let’s start with HEVC, which is a video encoding format. We stress “encoding format” because the file extensions we usually see (mov, mp4, webm, etc.) actually indicate the container format. The container format determines how multiple streams of media (video, audio, subtitles, and so on) are packaged together in one file, while the encoding format determines how the media itself is compressed efficiently for storage and transmission.

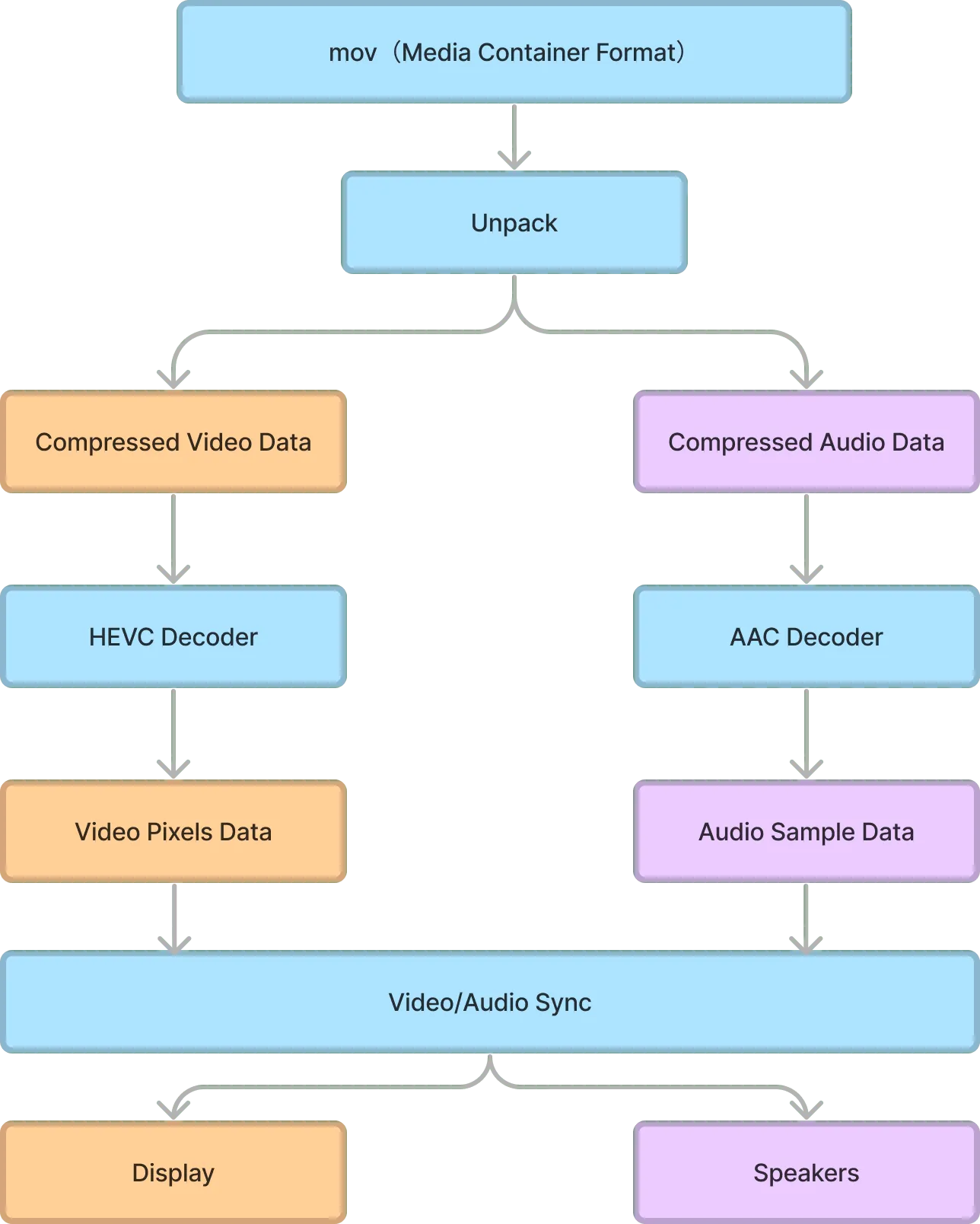

A video file (a .mov file here) goes through the following process before it can be displayed on our device:

Tip

Of course, container formats and encoding formats cannot be combined arbitrarily; each container format generally supports only certain encodings. If you are interested, you can learn about some common container formats here.
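As a rough illustration, here is a small, deliberately non-exhaustive table of well-known container/codec pairings, with a helper that checks a pairing against it. Treat a `False` result as “not in this table”, not as “impossible”: real containers support more codecs than listed here, and `is_listed_pairing` is a hypothetical name.

```python
# Deliberately non-exhaustive: a few well-known container/codec pairings.
LISTED_VIDEO_CODECS = {
    "mov": {"h264", "hevc", "prores"},
    "mp4": {"h264", "hevc", "av1"},
    "webm": {"vp8", "vp9", "av1"},
}


def is_listed_pairing(container: str, codec: str) -> bool:
    """True if this codec is listed for the container in the table above.

    False only means "not in this table", not "unsupported by the format".
    """
    key = container.lower().lstrip(".")
    if key not in LISTED_VIDEO_CODECS:
        raise KeyError(f"container not in table: {container}")
    return codec.lower() in LISTED_VIDEO_CODECS[key]


print(is_listed_pairing(".MOV", "HEVC"))  # True
print(is_listed_pairing("webm", "hevc"))  # False
```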

The format used for spatial video is MV-HEVC (Multiview HEVC), an extension of HEVC. Its main purpose is to compress efficiently two views of the same scene that differ only in viewpoint. Existing 3D video does no such optimization in its encoding. In the QooCam EGO sample below, for example, each frame simply stores the left-eye and right-eye pictures side by side. As you can imagine, there is a lot of redundant information between the two eyes’ pictures (in theory, this redundancy roughly doubles the file size compared with an equivalent single-view video).
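MV-HEVC reduces that redundancy with inter-view prediction: the second view can be predicted from the first, so mostly the small difference needs to be encoded. The toy sketch below only illustrates the idea on a single row of integer pixels, using a plain shifted copy as the prediction and counting non-zero residual values as a crude stand-in for coding cost. It is not the actual MV-HEVC algorithm, which works on blocks with disparity-compensated prediction, transforms, and entropy coding; `residual` and `nonzero_count` are hypothetical helpers.

```python
from typing import List, Sequence


def residual(base: Sequence[int], other: Sequence[int], shift: int = 0) -> List[int]:
    """Predict each pixel of `other` from base[i + shift]; return the errors.

    Pixels with no available prediction are predicted as 0, so their full
    value ends up in the residual.
    """
    out = []
    for i, value in enumerate(other):
        j = i + shift
        predicted = base[j] if 0 <= j < len(base) else 0
        out.append(value - predicted)
    return out


def nonzero_count(values: Sequence[int]) -> int:
    """Crude stand-in for coding cost: how many values are not zero."""
    return sum(1 for v in values if v != 0)


left = [10, 12, 50, 52, 51, 12, 10, 10]
right = [12, 50, 52, 51, 12, 10, 10, 11]  # same scene seen 1 pixel to the side

print(nonzero_count(right))                     # 8: code the right view on its own
print(nonzero_count(residual(left, right, 0)))  # 7: naive prediction, no shift
print(nonzero_count(residual(left, right, 1)))  # 1: prediction shifted by the disparity
```

Only the pixel that is genuinely new in the right view survives in the residual, which is the intuition behind why a stereo pair can cost far less than two independent videos.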

In addition, because MV-HEVC is backward compatible with HEVC decoders, it can be played as a normal HEVC video on devices that do not support 3D viewing.
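The compatibility comes from how the layers are labeled. In HEVC, every NAL unit begins with a two-byte header that carries a `nuh_layer_id`; in MV-HEVC the base view is layer 0, and a single-layer HEVC decoder ignores NAL units whose layer ID is greater than 0. The sketch below parses that header and keeps only the base layer. It assumes the NAL units have already been extracted from the file (no MOV/MP4 demuxing or start-code scanning is shown), uses synthetic header bytes, and the helper names are hypothetical.

```python
from typing import Iterable, List, NamedTuple


class NalHeader(NamedTuple):
    nal_unit_type: int
    nuh_layer_id: int
    temporal_id: int


def parse_nal_header(nal: bytes) -> NalHeader:
    """Decode the 2-byte HEVC NAL unit header.

    Bit layout: forbidden_zero_bit (1), nal_unit_type (6),
    nuh_layer_id (6), nuh_temporal_id_plus1 (3).
    """
    if len(nal) < 2:
        raise ValueError("NAL unit is shorter than its header")
    b0, b1 = nal[0], nal[1]
    if b0 & 0x80:
        raise ValueError("forbidden_zero_bit is set")
    tid_plus1 = b1 & 0x07
    if tid_plus1 == 0:
        raise ValueError("nuh_temporal_id_plus1 must not be 0")
    return NalHeader(
        nal_unit_type=(b0 >> 1) & 0x3F,
        nuh_layer_id=((b0 & 0x01) << 5) | (b1 >> 3),
        temporal_id=tid_plus1 - 1,
    )


def base_layer_only(nal_units: Iterable[bytes]) -> List[bytes]:
    """Keep what a single-layer HEVC decoder uses: NAL units with layer ID 0."""
    return [nal for nal in nal_units if parse_nal_header(nal).nuh_layer_id == 0]


# Synthetic headers: VPS (layer 0), IDR slice (layer 0), IDR slice (layer 1).
units = [b"\x40\x01", b"\x26\x01\xaf", b"\x26\x09\xaf"]
print([parse_nal_header(u) for u in units])
print(base_layer_only(units))  # [b'@\x01', b'&\x01\xaf']
```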

In short, thanks to MV-HEVC, spatial video is on par with existing HEVC video files in both size and compatibility, and it can be played on all Apple devices, although only Apple Vision Pro shows it in 3D (doesn’t this feel like Live Photos back in the day?). This will undoubtedly give a big boost to the spread of spatial video.

Tip

Since iOS 11, both HEIC and HEVC have been very well supported on Apple devices. Think about it: iOS 11 came out 6 years ago!

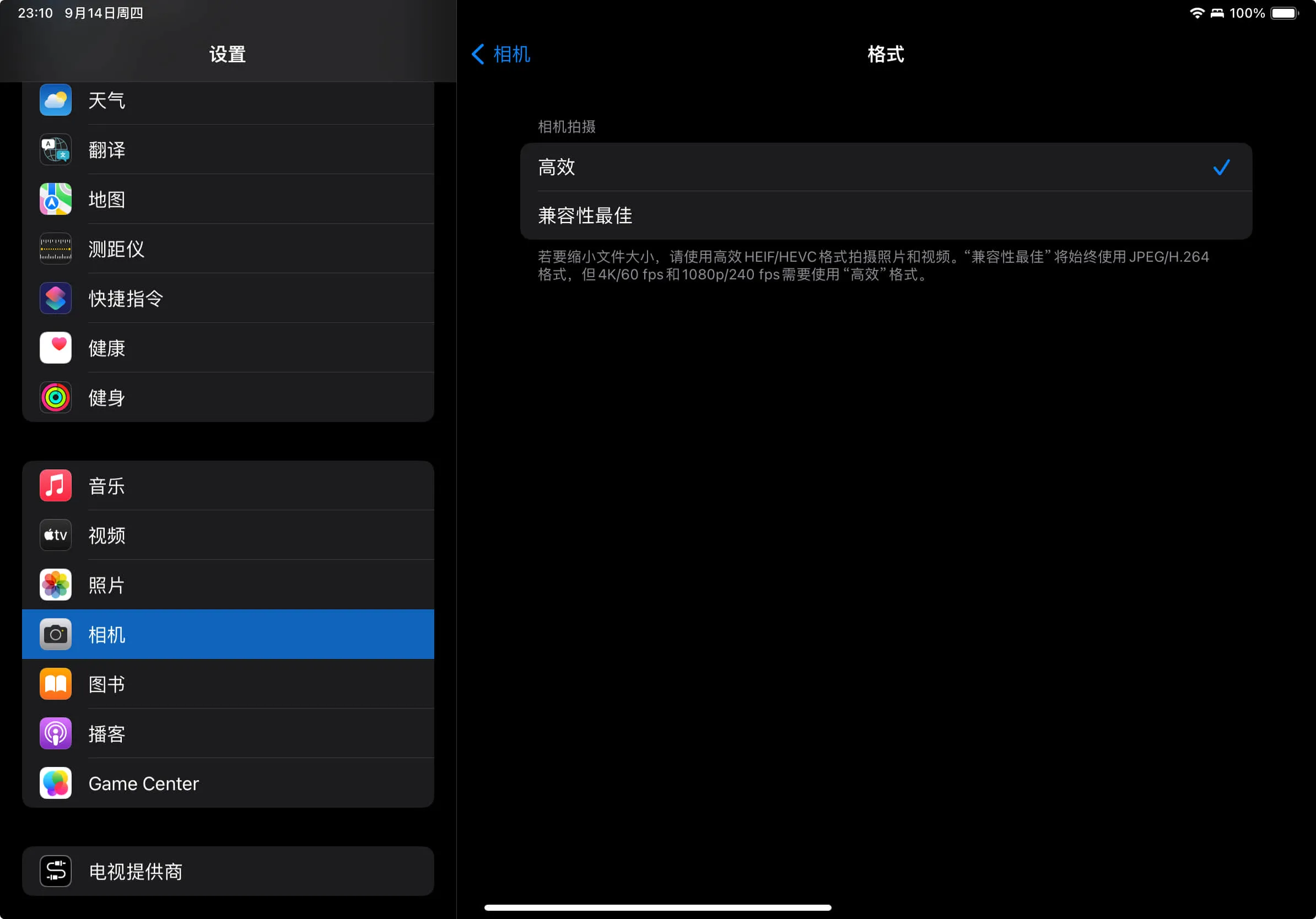

HEIF, used for spatial photos, follows much the same idea. It is an image format standard that can hold a single image or multiple images (such as an image sequence). In practice, files in this standard come with many different extensions, such as .heif, .heic, .heics, .avci, .avcs, and .avif. On Apple platforms, such files generally use the .heic extension, so in the Apple context, HEIF and HEIC effectively refer to the same thing. (When you set Settings -> Camera -> Formats to High Efficiency on iPhone/iPad, all photos taken by the Camera app are saved in HEIC format.)
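HEIF is built on the ISO Base Media File Format (the same box structure used by MP4), and a HEIF file normally begins with an `ftyp` box that declares a major brand plus a list of compatible brands, such as `heic` or `mif1`. Reading that box is one quick way to see what a file claims to be, independent of its extension. This is a minimal sketch: `read_ftyp` is a hypothetical helper, the sample bytes are synthetic, and 64-bit box sizes are not handled.

```python
import struct
from typing import List, Tuple


def read_ftyp(data: bytes) -> Tuple[str, List[str]]:
    """Return (major_brand, compatible_brands) from a leading ftyp box.

    ftyp layout: size (4), type "ftyp" (4), major_brand (4),
    minor_version (4), then compatible brands, 4 bytes each.
    """
    if len(data) < 16:
        raise ValueError("too short to contain an ftyp box")
    size, box_type = struct.unpack(">I4s", data[:8])
    if box_type != b"ftyp":
        raise ValueError("data does not start with an ftyp box")
    if size < 16 or size > len(data) or (size - 16) % 4:
        raise ValueError("unsupported or invalid ftyp size")  # e.g. 64-bit sizes
    major = data[8:12].decode("ascii")
    compatible = [data[i:i + 4].decode("ascii") for i in range(16, size, 4)]
    return major, compatible


# Synthetic header: major brand "heic", compatible brands "mif1" and "heic".
sample = struct.pack(">I4s4sI", 24, b"ftyp", b"heic", 0) + b"mif1heic"
print(read_ftyp(sample))  # ('heic', ['mif1', 'heic'])
```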

Just like spatial video, a HEIC spatial photo can be viewed as a normal photo on devices that do not support 3D viewing, which is not the case for some earlier 3D photo formats.
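One mechanism behind this fallback is that HEIF lets a file hold several image items while its `meta` box names one of them as the primary item in a `pitm` box; a viewer that knows nothing about stereo pairs can simply display that item. The sketch below finds the primary item ID by walking the top-level boxes and then the children of `meta`. It is an illustration under stated assumptions (32-bit box sizes, well-formed input), the helper names are hypothetical, and it does not decode images or show how Apple marks the two eyes.

```python
import struct
from typing import Iterator, Optional, Tuple


def iter_boxes(data: bytes, start: int = 0,
               end: Optional[int] = None) -> Iterator[Tuple[bytes, int, int]]:
    """Yield (box_type, payload_start, box_end) for boxes in data[start:end].

    Simplified: 32-bit sizes and size 0 ("runs to the end") are handled;
    64-bit "largesize" boxes (size 1) are rejected.
    """
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, box_type = struct.unpack(">I4s", data[pos:pos + 8])
        if size == 0:
            size = end - pos
        if size < 8 or pos + size > end:
            raise ValueError(f"unsupported or invalid box size at offset {pos}")
        yield box_type, pos + 8, pos + size
        pos += size


def primary_item_id(data: bytes) -> Optional[int]:
    """Return the item ID stored in meta/pitm, or None if there is none."""
    for box_type, payload, box_end in iter_boxes(data):
        if box_type != b"meta":
            continue
        # In HEIF, meta is a FullBox: skip version (1 byte) + flags (3 bytes).
        for child, c_payload, _c_end in iter_boxes(data, payload + 4, box_end):
            if child == b"pitm":
                version = data[c_payload]  # pitm is also a FullBox
                body = c_payload + 4
                if version == 0:
                    return struct.unpack(">H", data[body:body + 2])[0]
                return struct.unpack(">I", data[body:body + 4])[0]
    return None


def box(box_type: bytes, payload: bytes) -> bytes:
    """Build a box with a 32-bit size (used here only for the demo file)."""
    return struct.pack(">I", 8 + len(payload)) + box_type + payload


# Synthetic file: ftyp, meta (hdlr + pitm naming item 1), empty mdat.
demo = (box(b"ftyp", b"heic" + bytes(4) + b"mif1")
        + box(b"meta", bytes(4) + box(b"hdlr", bytes(8))
              + box(b"pitm", bytes(4) + struct.pack(">H", 1)))
        + box(b"mdat", b""))
print(primary_item_id(demo))  # 1
```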

In short, our look at these formats shows that, to bring spatial video and spatial photos into ordinary households, Apple has quietly done a lot of work behind the scenes so that they connect seamlessly with existing file formats (which reminds us of a saying: the best technology is the kind you don’t even notice).

So, although many people say the iPhone 15 series is only an incremental upgrade (“squeezing toothpaste”), and it is still uncertain when spatial video will launch on the iPhone 15 Pro series, in our opinion the effort Apple has put in behind the scenes to promote spatial video and spatial photos is what we creators should pay more attention to.

After all, even setting spatial video aside, the more common panoramic videos can already deliver a stunning experience on Apple Vision Pro:

We look forward to seeing what interesting new apps appear in the Apple ecosystem once spatial videos and spatial photos become mainstream media formats.

XReality.Zone
