How to Quickly Create an MR Application on PICO 4 Ultra with AI?

Everyone is talking about how much AI amplifies individual creativity. MR, in turn, gives us abilities in the virtual world that we don’t have in the real one.

So, wouldn’t it be perfect to try using AI to create an MR application for ourselves? 😎

In this year’s Deadpool & Wolverine, the long-absent Human Torch returns to the screen. I have to say, the scene years ago in Fantastic Four where the Human Torch shoots flames from his hands left a deep impression on me.

Coincidentally, alongside the release of PICO 4 Ultra, PICO’s SDK shipped a wave of MR-related updates.

So using AI together with PICO’s SDK to give ourselves a similar ability felt like a natural thing to try.

No sooner said than done: after an afternoon of experimenting, the little application was finished. Let’s call it HotFire 😆.

Along the way, the thoughtful support built into PICO’s developer SDK also left a deep impression on me ❤️.

Before this, I had only followed a few simple Unity Hello World tutorials and had little hands-on Unity XR development experience.

Yet with PICO’s documentation and tools, plus some help from AI, building the whole application went surprisingly smoothly 😆.

How smooth? It starts with the most important step: building an overall picture from the official documentation.

PICO’s official documentation offers three entry points, ordered from introductory to advanced, with index pages for developers who are completely new to XR, developers who know some XR but are new to PICO, and experienced developers who just need to get started quickly:

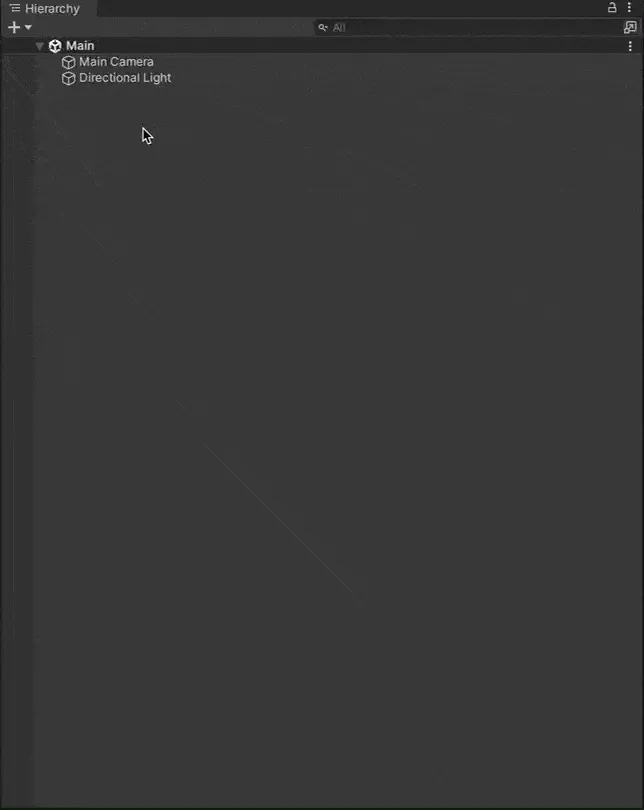

Once you have the basic concepts, choose a development platform you’re familiar with. Here we develop with Unity.

As a developer who firmly believes in “Talk is Cheap, Show Me the Code”, I know that what helps developers most is usually a rich set of code examples.

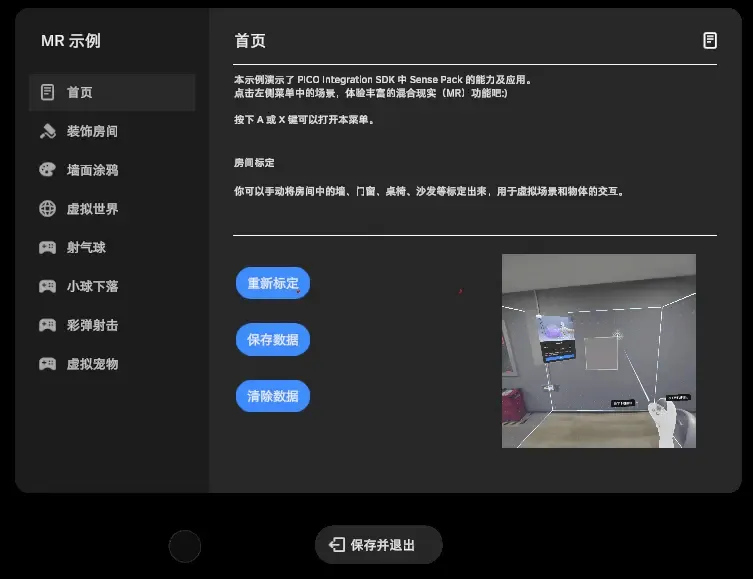

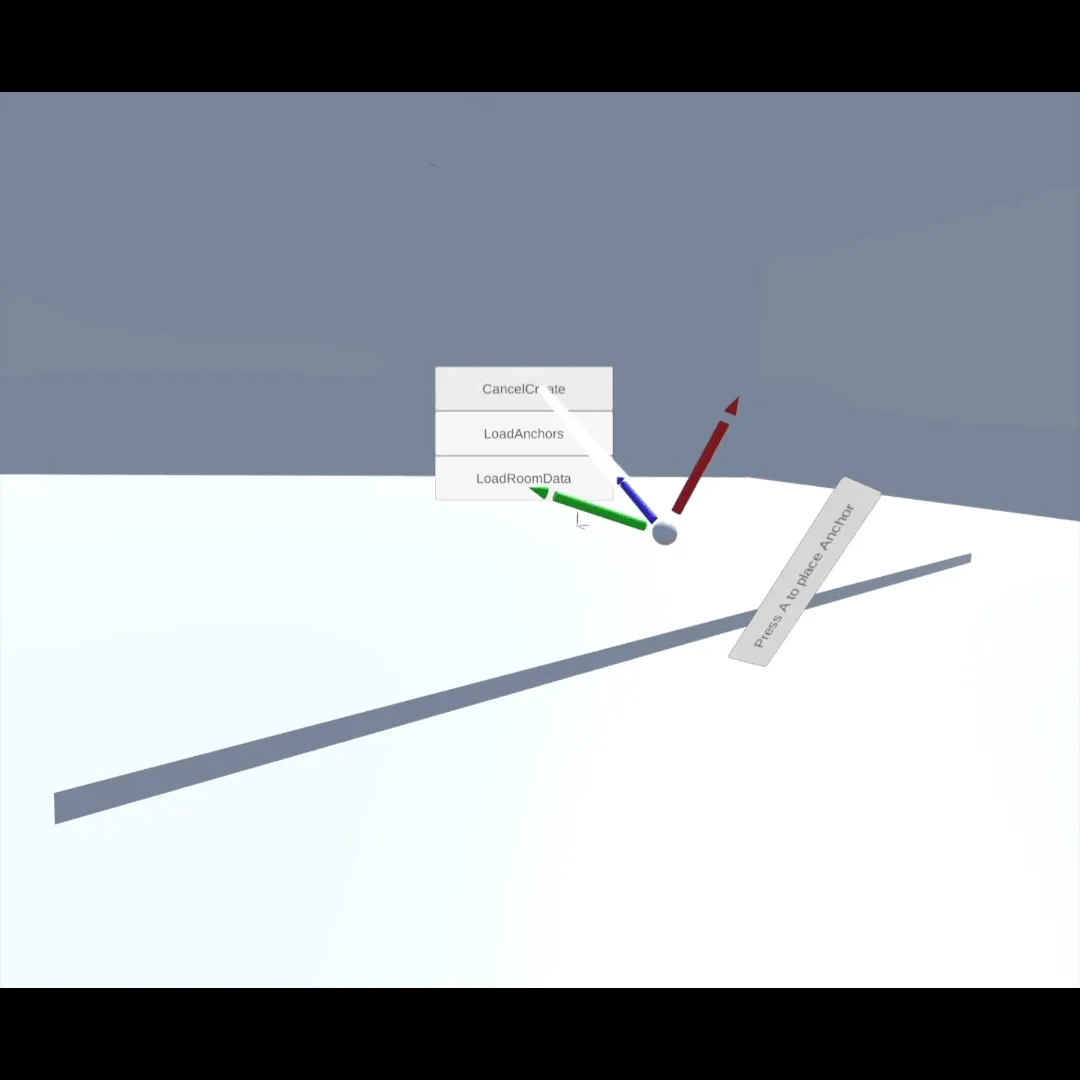

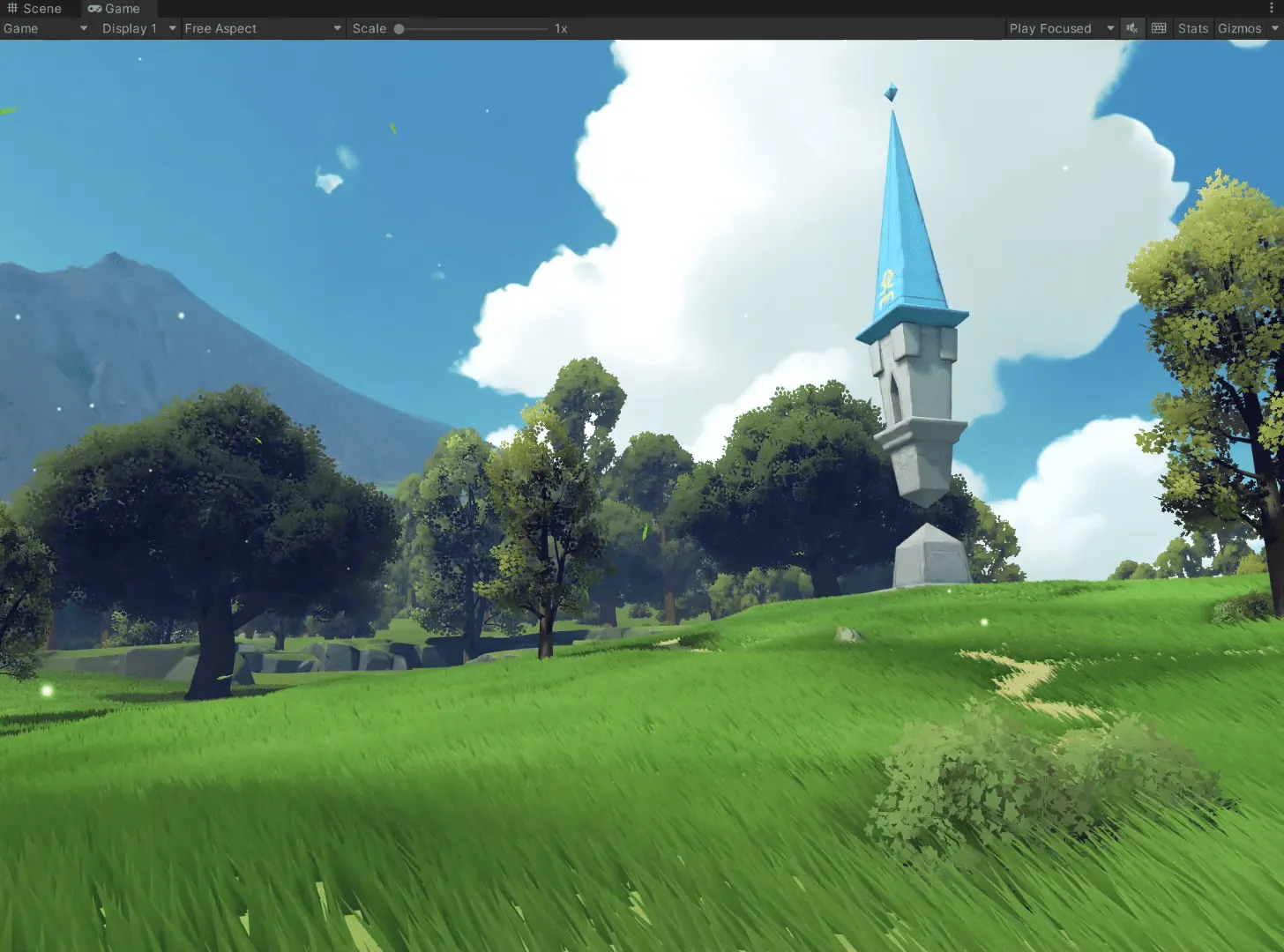

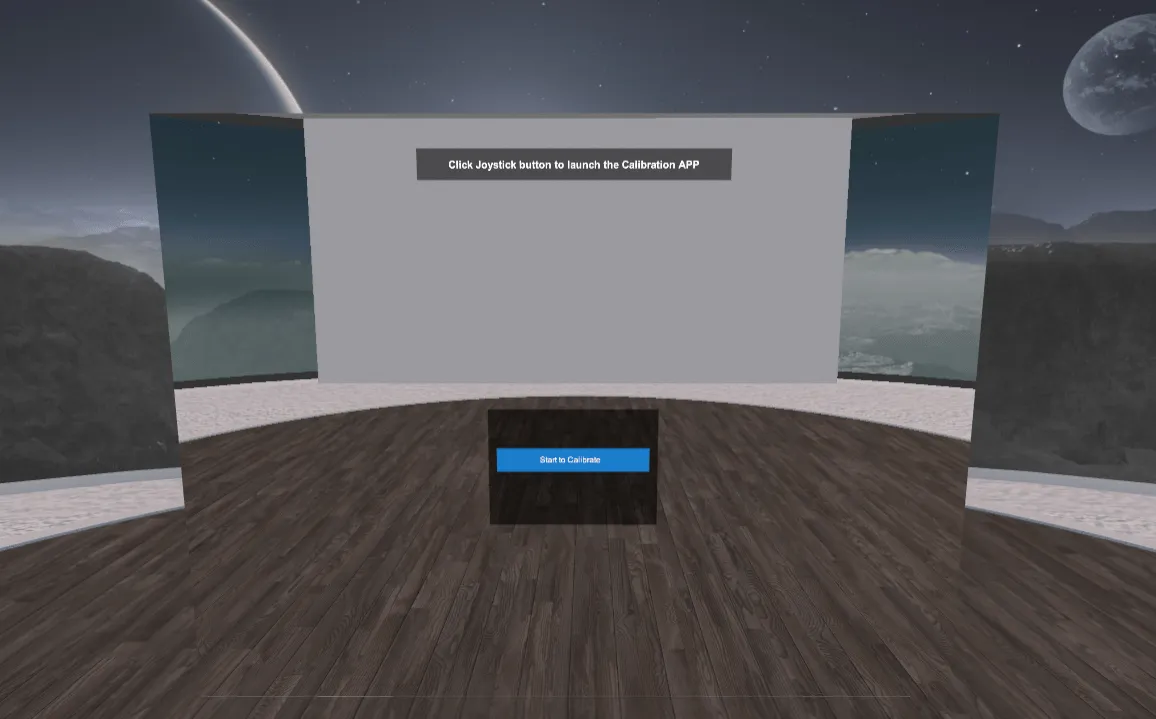

After a little exploring, PICO’s rich collection of official samples turns up:

| Example | Screenshot | Portal |

|---|---|---|

| Interaction Sample |  | Click here |

| Mixed Reality Sample |  | Click here |

| Spatial Anchor Sample |  | Click here |

| Getting Started Demo |  | Click here |

| Toon World (Performance Optimization Demo) |  | Click here |

| Space Arena (Multiplayer Social Demo) |  | Click here |

| Micro War (Multiplayer Game Demo) |  | Click here |

| Body Tracking Demo |  | Click here |

| Adaptive Resolution Demo |  | Click here |

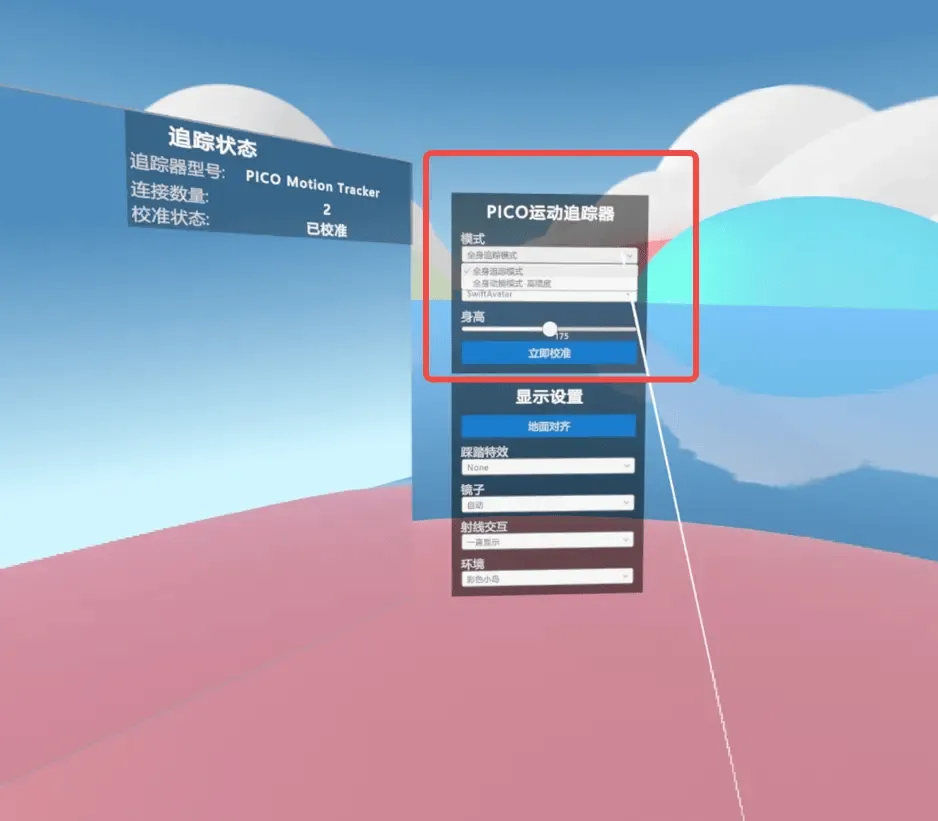

| Motion Tracking Sample |  | Click here |

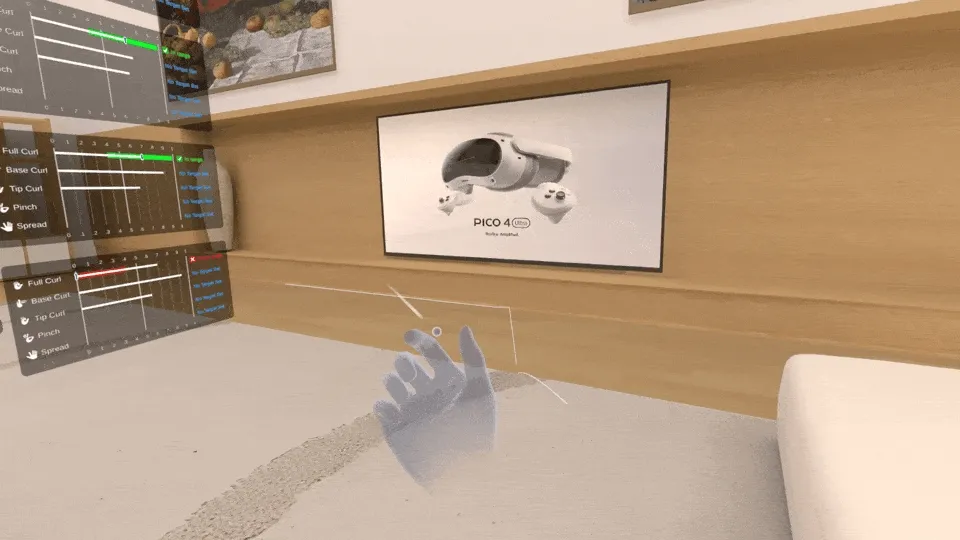

Conveniently, the Interaction Sample already implements gesture recognition close to what our 🔥 effect needs: making a fist opens and closes a window.

Starting from this sample, we can quickly build an application with similar behavior: our HotFire.
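The sample’s own gesture code isn’t reproduced here, but the idea behind “make a fist to toggle a window” can be sketched in a few lines. The Python below is a hypothetical heuristic, not PICO’s hand-tracking API: it treats the hand as a fist when every fingertip is within 5 cm of the palm, uses a larger 8 cm release distance so the state doesn’t flicker at the boundary, and toggles only on the open-to-fist transition. The `FistToggle` class, the palm/fingertip inputs, and both thresholds are assumptions for illustration.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class FistToggle:
    """Hypothetical fist detector with hysteresis (illustrative, not PICO's API)."""
    close_threshold: float = 0.05  # metres: all fingertips this close to the palm => fist
    open_threshold: float = 0.08   # metres: any fingertip farther than this => hand open
    is_fist: bool = False
    window_open: bool = False

    def update(self, palm: Vec3, fingertips: list[Vec3]) -> bool:
        """Feed one frame of joint positions; return True if the window toggled."""
        farthest = max(math.dist(palm, tip) for tip in fingertips)
        if not self.is_fist and farthest < self.close_threshold:
            self.is_fist = True
            self.window_open = not self.window_open  # toggle only on the open -> fist edge
            return True
        if self.is_fist and farthest > self.open_threshold:
            self.is_fist = False  # hand released; the next fist can toggle again
        return False


# Usage with made-up joint positions (palm at the origin).
palm = (0.0, 0.0, 0.0)
fist = [(0.0, 0.03, 0.0)] * 5       # every fingertip 3 cm from the palm
open_hand = [(0.0, 0.10, 0.0)] * 5  # every fingertip 10 cm from the palm
toggle = FistToggle()
toggle.update(palm, fist)       # True: window opens
toggle.update(palm, fist)       # False: still holding the same fist
toggle.update(palm, open_hand)  # False: hand released
toggle.update(palm, fist)       # True: window closes
```

Toggling on the edge rather than on the state is the key design choice: holding a fist for several frames should flip the window once, not once per frame.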

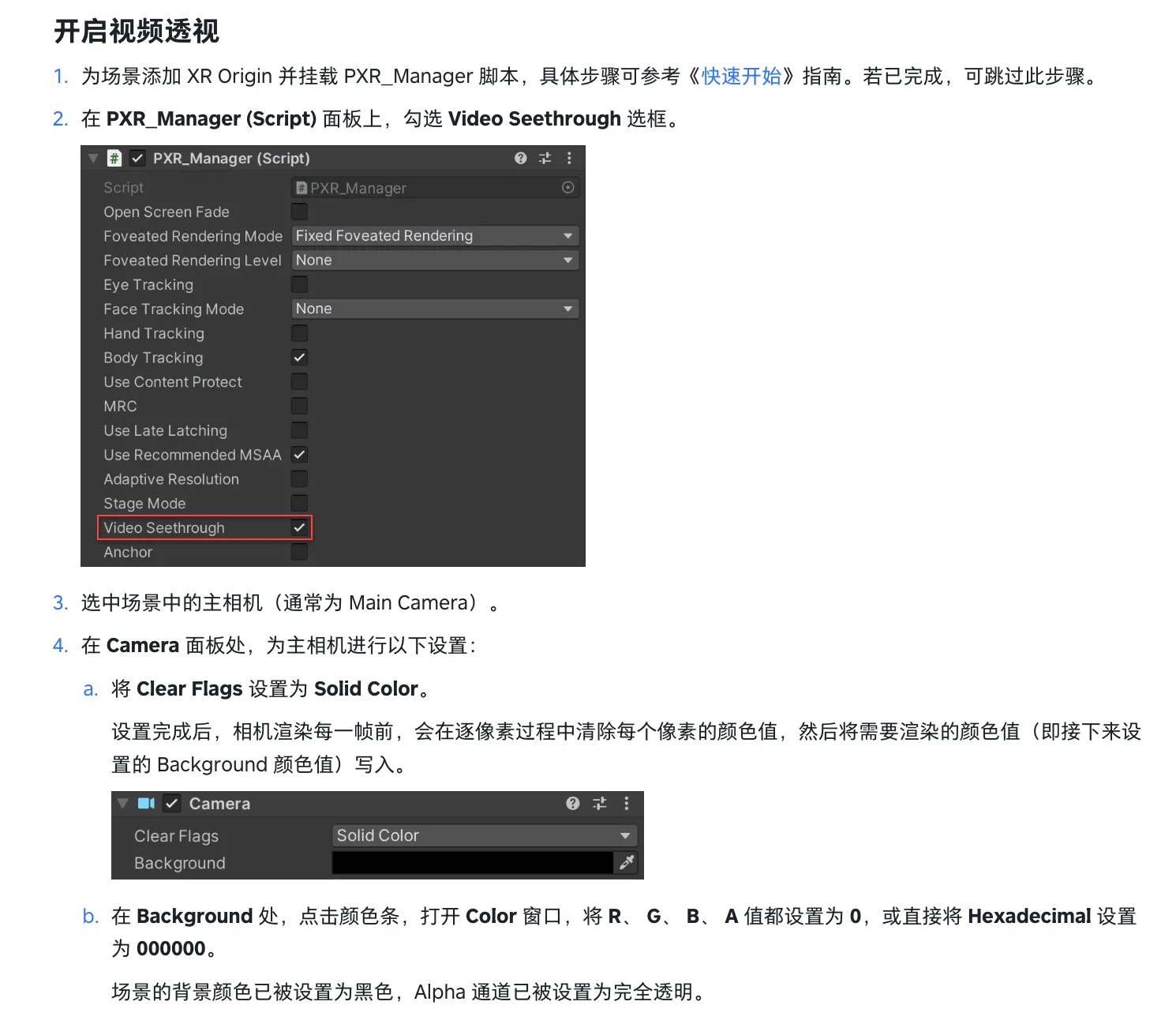

The next question: the original sample is a fully immersive VR application, but we want an MR application that shows the real world through video see-through.

Unity and the PICO SDK are designed so that many features are enabled through configuration rather than code. The catch is that features spanning several systems require changing many configuration items.

For example, the configuration guide for video see-through alone walks through four or five steps that touch several different settings.

PICO recognized this problem, and the latest PICO SDK, version 3.0.5, introduces a feature called PICO Building Blocks.

With it, configuration work that used to take many steps can be done with one click in the Unity Editor.

Building Blocks also checks the current project configuration and fixes settings that conflict with the selected feature, which greatly improves a beginner’s chances of getting the configuration right on the first try.
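To make the “check and fix” idea concrete, here is a hypothetical Python sketch of the pattern: each feature declares the settings it needs, and a single pass compares them against the project, repairs any mismatch, and logs what it changed. The setting keys and values are placeholders chosen for illustration, not PICO SDK or Unity setting names, and the sketch does not reflect how Building Blocks is actually implemented.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Requirement:
    """One setting a feature needs: key, required value, and why."""
    key: str
    expected: Any
    reason: str


# Hypothetical requirements for a "video see-through" block.
# The keys are placeholders, not real PICO SDK or Unity setting names.
SEE_THROUGH_REQUIREMENTS = [
    Requirement("xr_plugin", "PICO", "PICO XR plug-in must be active"),
    Requirement("video_see_through", True, "see-through must be switched on"),
    Requirement("camera_clear_mode", "solid_color", "camera must not draw a skybox"),
    Requirement("camera_background_alpha", 0.0, "background must be transparent"),
]


def check_and_fix(config: dict[str, Any], requirements: list[Requirement]) -> list[str]:
    """Apply every missing or mismatched requirement in place; return a change log."""
    changes = []
    for req in requirements:
        current = config.get(req.key)  # None if the setting is missing entirely
        if current != req.expected:
            config[req.key] = req.expected
            changes.append(f"{req.key}: {current!r} -> {req.expected!r} ({req.reason})")
    return changes


# Usage: a project that was set up for immersive VR.
project = {"xr_plugin": "PICO", "camera_clear_mode": "skybox", "camera_background_alpha": 1.0}
for line in check_and_fix(project, SEE_THROUGH_REQUIREMENTS):
    print(line)
```

Running the pass a second time returns an empty log, which is what makes a one-click fix safe to repeat.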

Besides video see-through, Building Blocks currently covers other involved setups such as controller tracking, controller & canvas interaction, gestures, and grab tracking, all of which can be configured just as quickly.

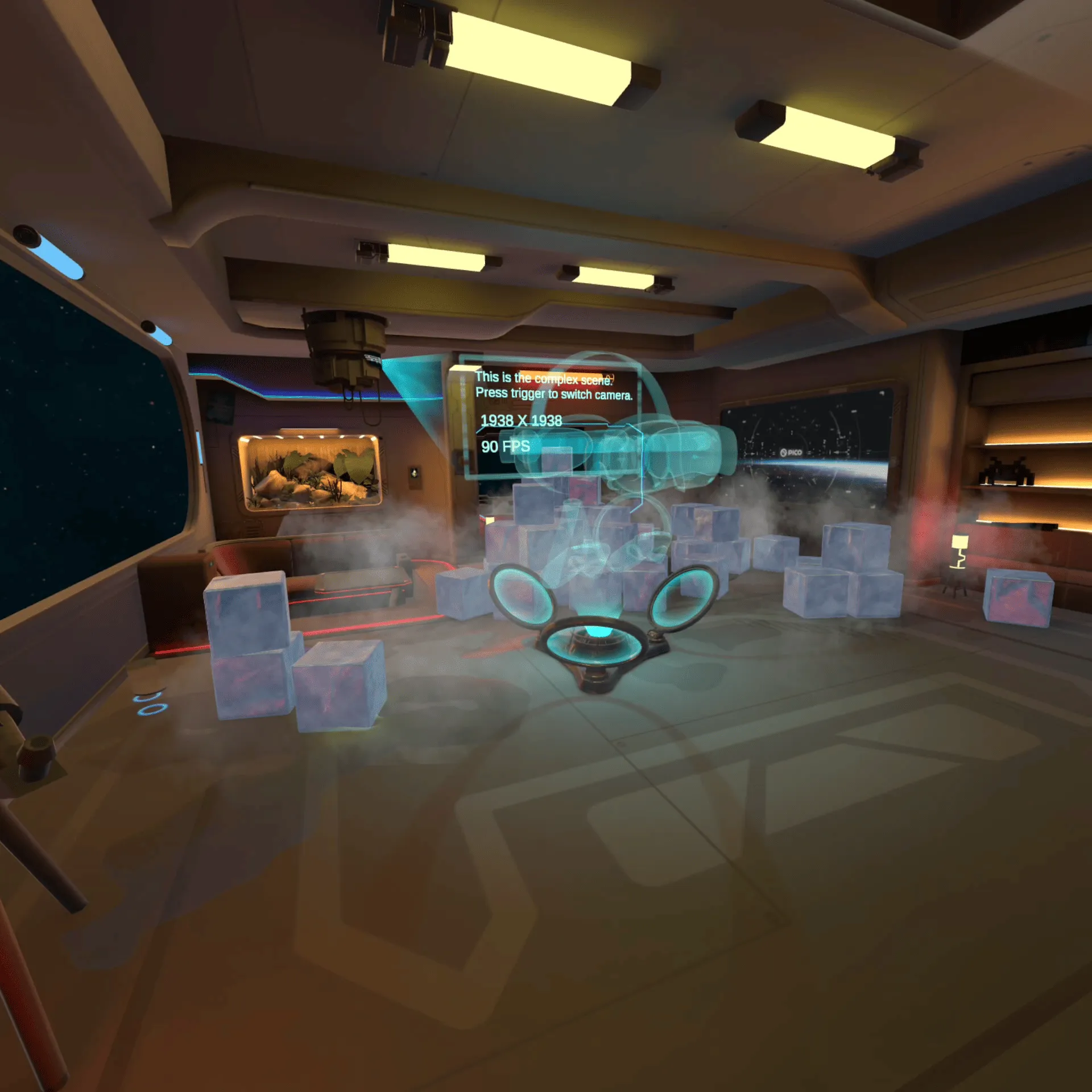

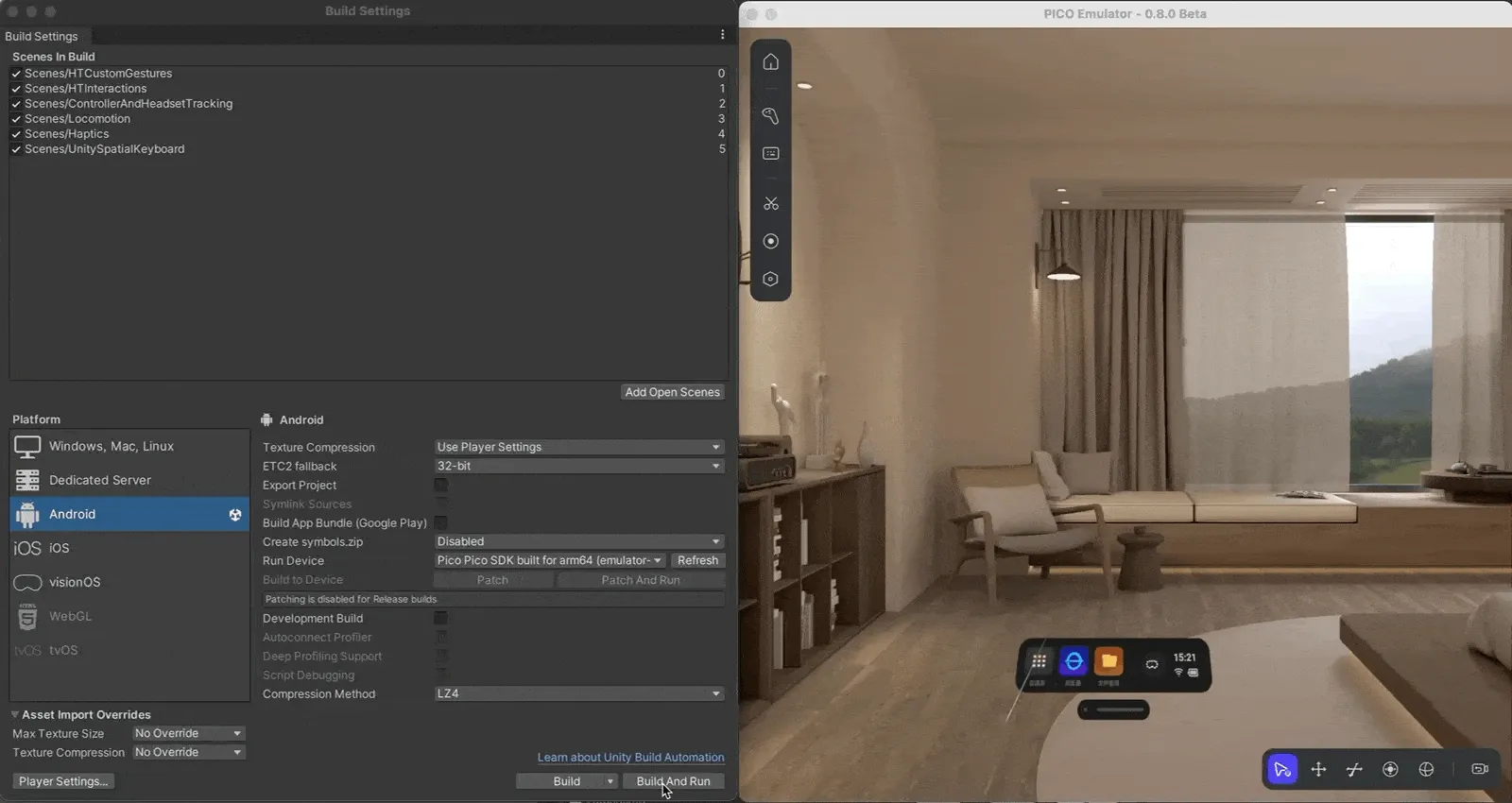

And as PICO’s developer tools keep improving, features like video see-through can now be verified directly in the PICO Emulator, without a real headset.

With PICO’s developer tools, the Unity configuration goes very quickly. But what about the parts that need code?

The answer: use Cursor! In the age of AI, writing basic code is often no longer a roadblock.

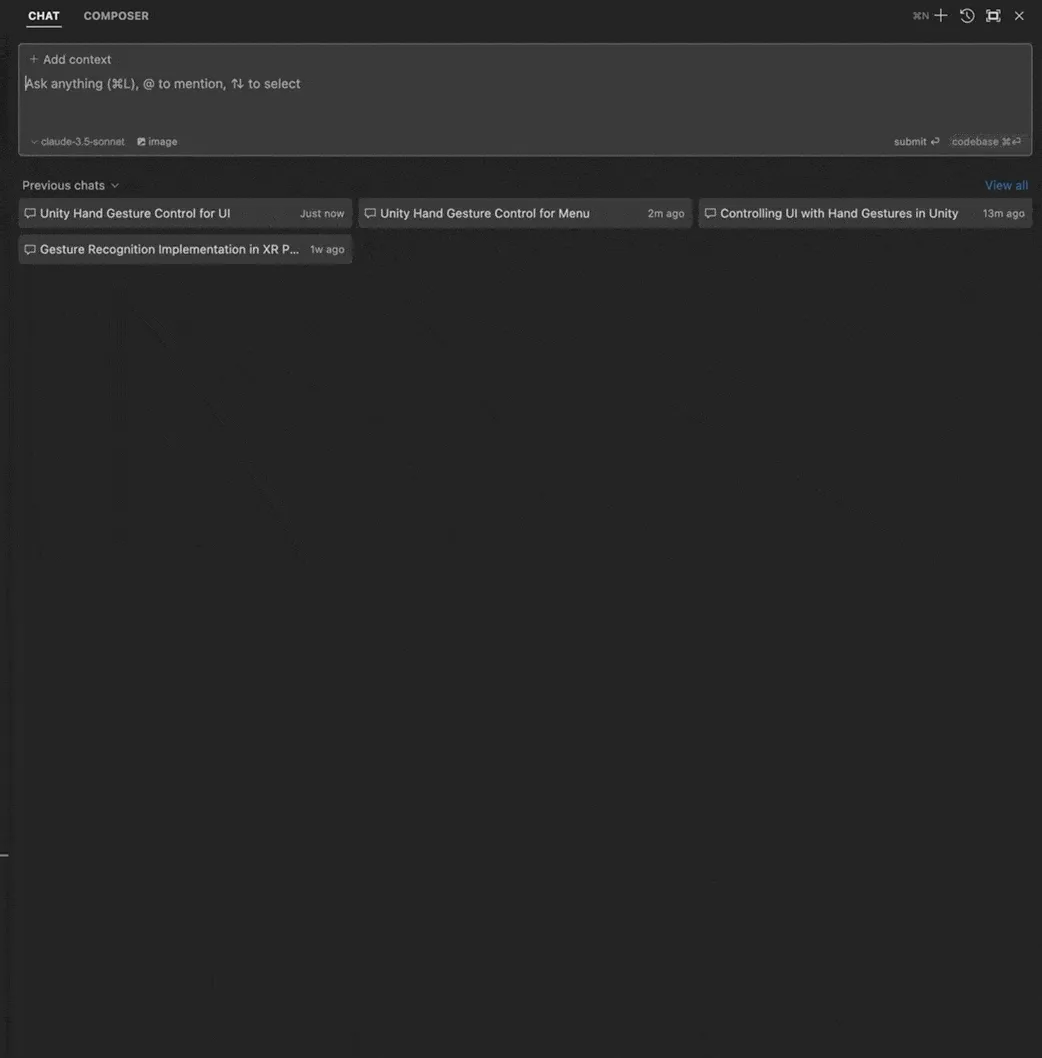

For example, to make the flame respond to the hand opening and closing, we can first use Cursor’s Chat feature to get a rough idea of how the sample implements the equivalent behavior.

By using the @Codebase command with a suitable prompt, we can find where the relevant code lives:

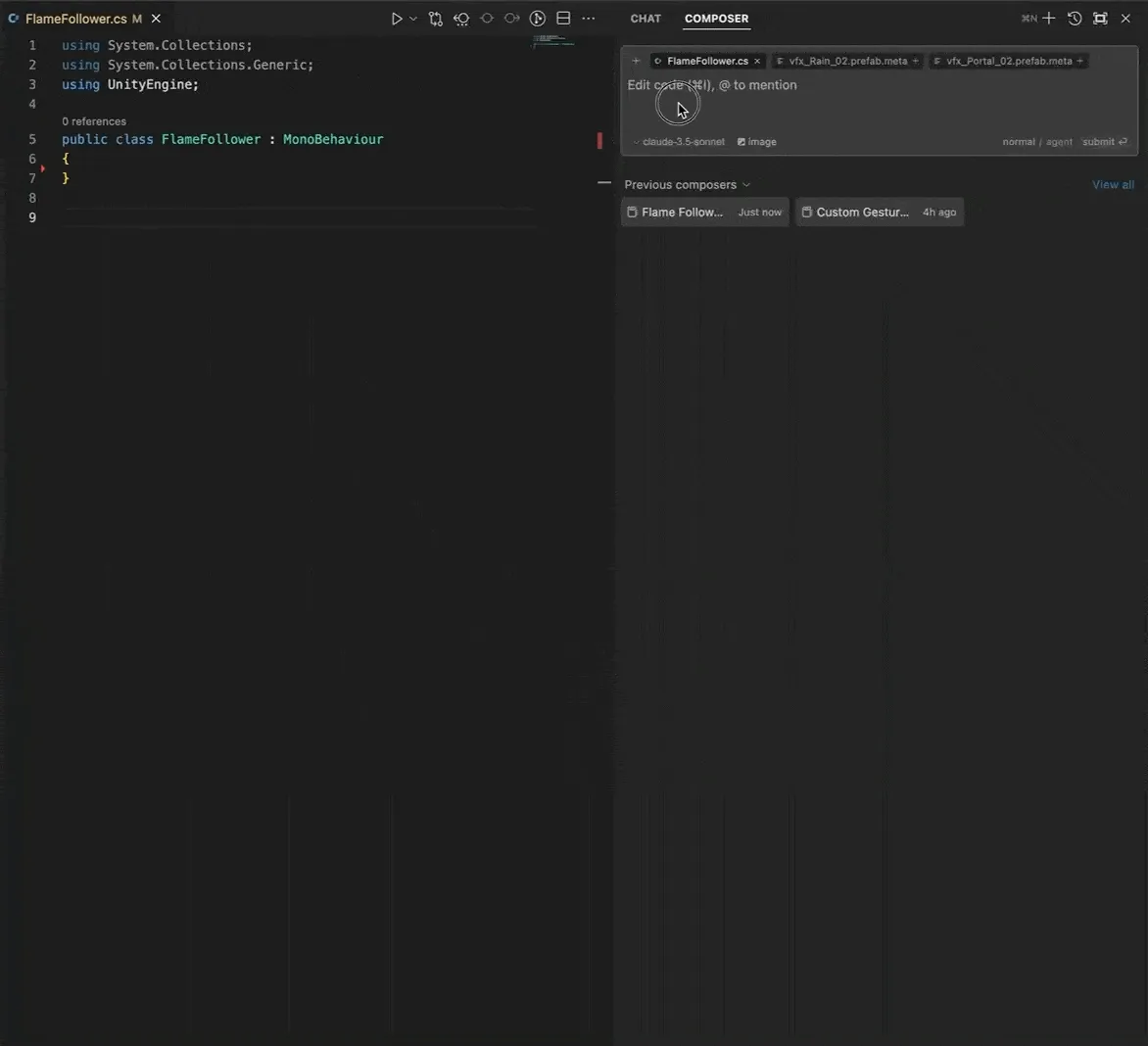

Next, we just need to create a new script for this behavior. We don’t even need to look up the C# APIs ourselves; we can leave all of that to Cursor!

We want this script to make the flame follow the hand. Even without knowing any C#, I can have Cursor do the development work with a prompt like this:

@Codebase I want this FlameFollower to have these properties:

- flame: for configuring the flame object
- flameFollower: for configuring the object that the flame object should track
- flameAudio: for making sound when the flame appears

I want to implement that flame follows flameFollower’s movement, and expose enable/disable Flame methods externally, when flame is enabled, it will play flameAudio

Cursor then generates the corresponding logic for us automatically.
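In Unity, a script like this would be a C# MonoBehaviour. To keep the example runnable outside the engine, the Python sketch below models only the behavior the prompt asks for; it is not the code Cursor produced. `SceneObject` and `AudioPlayer` are hypothetical stand-ins for a Unity GameObject and AudioSource, `tick()` plays the role of the per-frame `Update` (or `LateUpdate`) callback, and the fields `flame_follower` and `flame_audio` correspond to the prompt’s `flameFollower` and `flameAudio`.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    """Hypothetical stand-in for a Unity GameObject: a position plus an active flag."""
    position: tuple[float, float, float] = (0.0, 0.0, 0.0)
    active: bool = False


@dataclass
class AudioPlayer:
    """Hypothetical stand-in for an AudioSource; it only counts how often it played."""
    play_count: int = 0

    def play(self) -> None:
        self.play_count += 1


@dataclass
class FlameFollower:
    flame: SceneObject           # the flame effect to move around
    flame_follower: SceneObject  # the object the flame tracks, e.g. a palm anchor
    flame_audio: AudioPlayer     # sound to play when the flame appears

    def enable_flame(self) -> None:
        """Show the flame; play the sound only on the off -> on transition."""
        if not self.flame.active:
            self.flame.active = True
            self.flame_audio.play()

    def disable_flame(self) -> None:
        """Hide the flame."""
        self.flame.active = False

    def tick(self) -> None:
        """Per-frame step: while the flame is visible, snap it to its target."""
        if self.flame.active:
            self.flame.position = self.flame_follower.position


# Usage: the flame appears, then follows the hand as it moves.
hand = SceneObject(position=(0.1, 1.2, 0.3), active=True)
follower = FlameFollower(SceneObject(), hand, AudioPlayer())
follower.enable_flame()
hand.position = (0.2, 1.25, 0.35)  # the hand moves
follower.tick()
print(follower.flame.position)     # (0.2, 1.25, 0.35)
```

Guarding `enable_flame()` against repeated calls means the gesture code can call it every frame while the fist is held without restarting the audio.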

Finally, thanks to the Unity ecosystem’s rich assets, we can reuse the flame effect from the Unity Particle Pack directly, and there we have it: the superpower we imagined!

This was just a casual afternoon of exploring. To bring bigger ideas to life, PICO’s official samples can be a great help, such as this one that shoots spider webs from the right hand.

With a bit of modification, we could probably build a more complete Spider-Man experience like the ones already available on other platforms.

Beyond that, the more distinctive platform capabilities of the PICO developer SDK may make even bigger ideas easier to build.

For example, combining the PICO Unity Avatar SDK with Shared Spatial Anchors might one day make an in-person virtual cosplay exhibition possible.

And if you’re a web developer unfamiliar with game development, PICO also offers a comprehensive WebXR development platform for building XR applications on the web. It even curates resource lists such as Awesome Web XR Development to help you get started quickly.

Of course, bringing more creative ideas to life still depends on developers trying them out. If you’re interested in building MR applications on PICO, don’t forget to check out the content-rich PICO Developer Documentation. 😆


XReality.Zone
