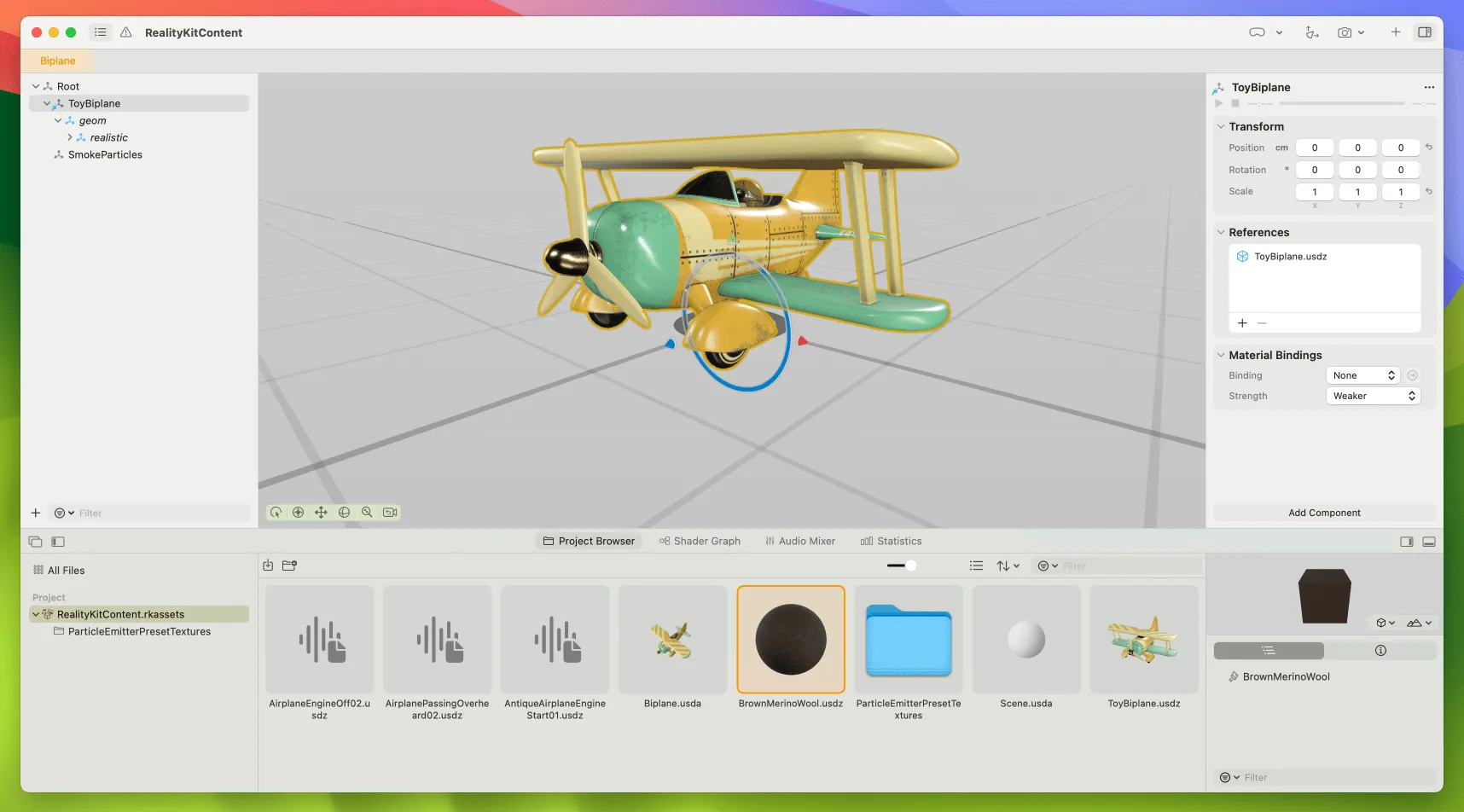

Open Source Framework RealityShaderExtension: Transfer Shaders from Unity and Unreal to visionOS

Shaders on visionOS

There are two ways to use Shaders on visionOS: Shader Graph, or LowLevelTexture (LowLevelMesh) combined with a Compute Shader.

- Shader Graph: Essentially MaterialX with specific extensions. It works with Apple’s Reality Composer Pro (RCP for short) to enable quick Shader creation by dragging and connecting nodes.

- Compute Shader: Requires writing Metal Shader code by hand. It is more complex but offers greater flexibility.

Unity has its own Shader Graph, and Unreal’s Blueprint system also allows node-based Shader creation. All three systems work in much the same way, so in theory migration should be straightforward. In practice, Unity and Unreal are mature game engines with rich material effects and supporting tools, and reproducing the same effects in Apple’s native RealityKit means migrating many Shaders by hand. Unity’s PolySpatial can automatically translate Unity Shader Graph into the MaterialX-based Shader Graph materials that visionOS supports, for both the simulator and devices. With native Apple development, however, PolySpatial can’t help, and every effect still has to be migrated manually.

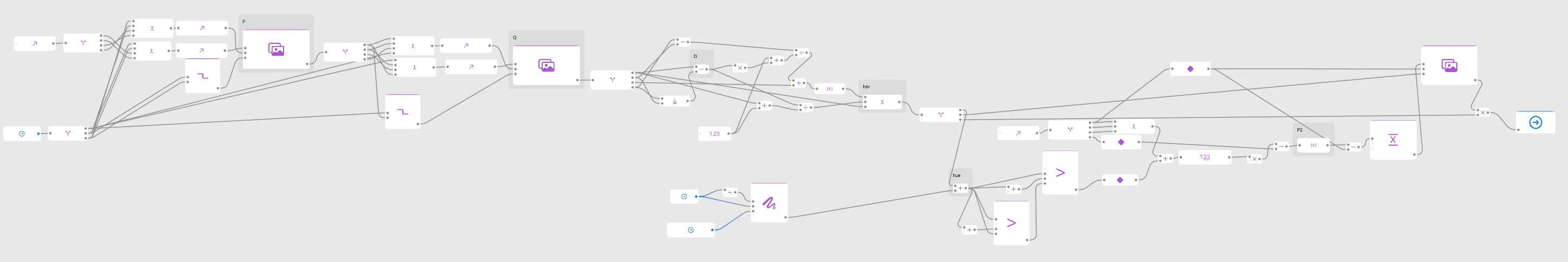

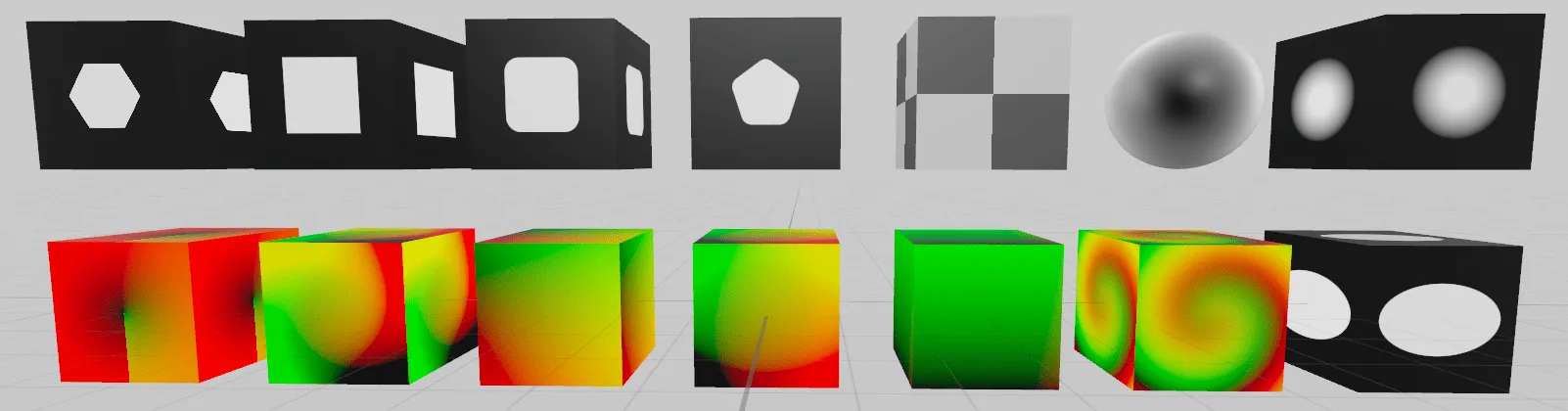

In my daily development, I frequently switch between Unity and Apple native development, and occasionally help with Unreal-to-native migrations as well. A common challenge is that many built-in Shader effect nodes in Unity and Unreal have no native equivalent in RCP and must be recreated by hand. This led me through a series of painful migrations, resulting in complex node connections like those shown in the image below, all manually migrated:

RealityShaderExtension

Believe me, after going through it once, you never want to experience the writing and debugging process again. So I organized these migrated nodes into an open-source project, RealityShaderExtension, so that other developers can more easily complete Shader migrations from Unity and Unreal to Apple’s native visionOS.

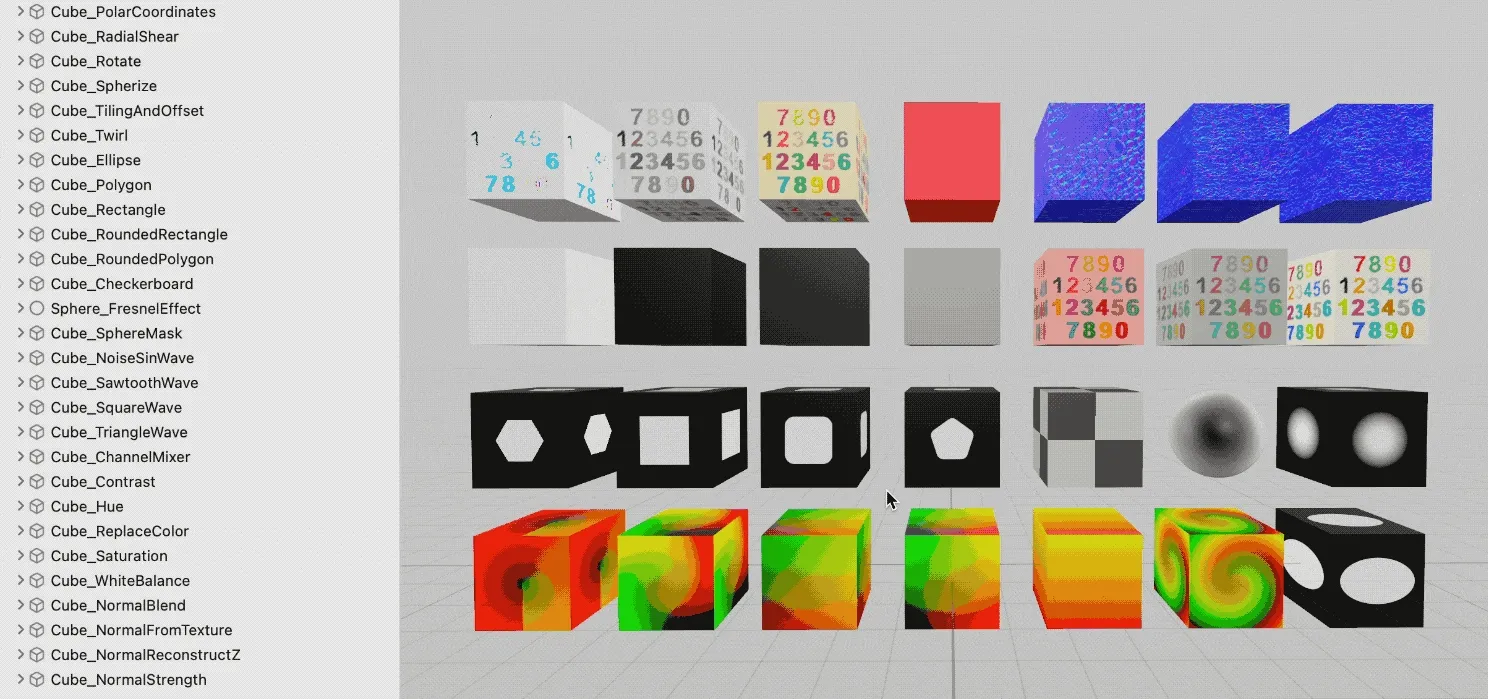

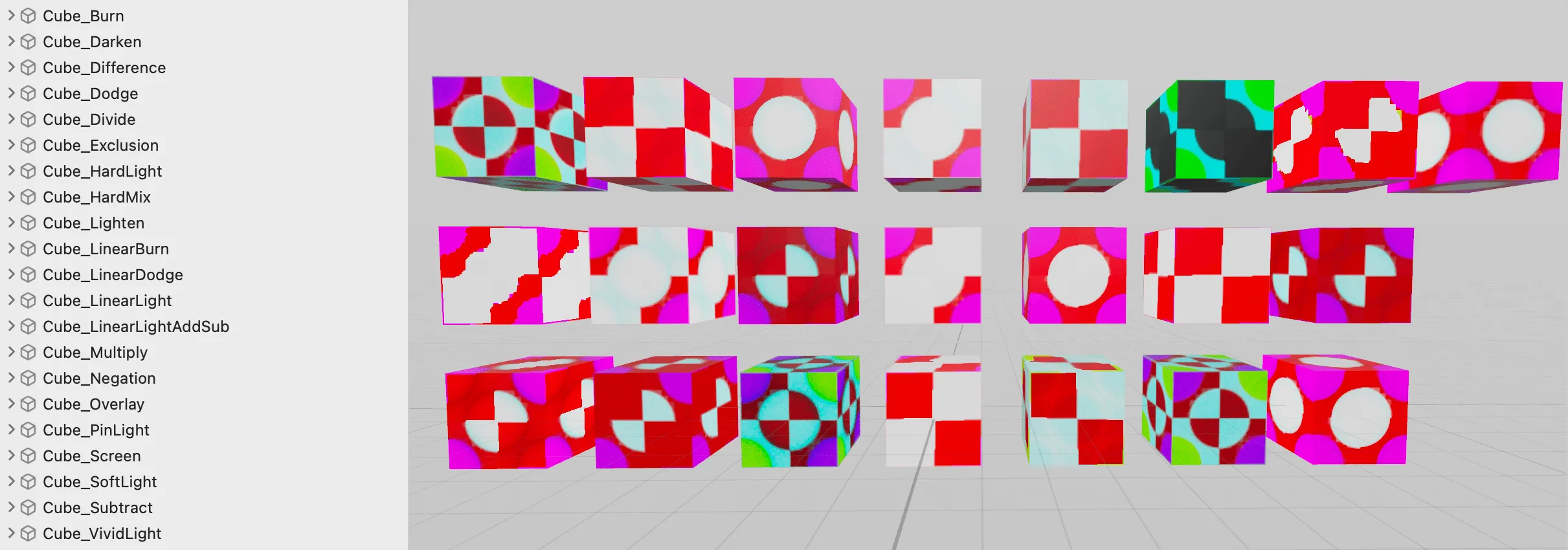

RealityShaderExtension has replicated 28 Shader Graph nodes from Unity and 28 Blueprint nodes from Unreal, plus over 20 color blend modes and 8 color space conversion nodes.

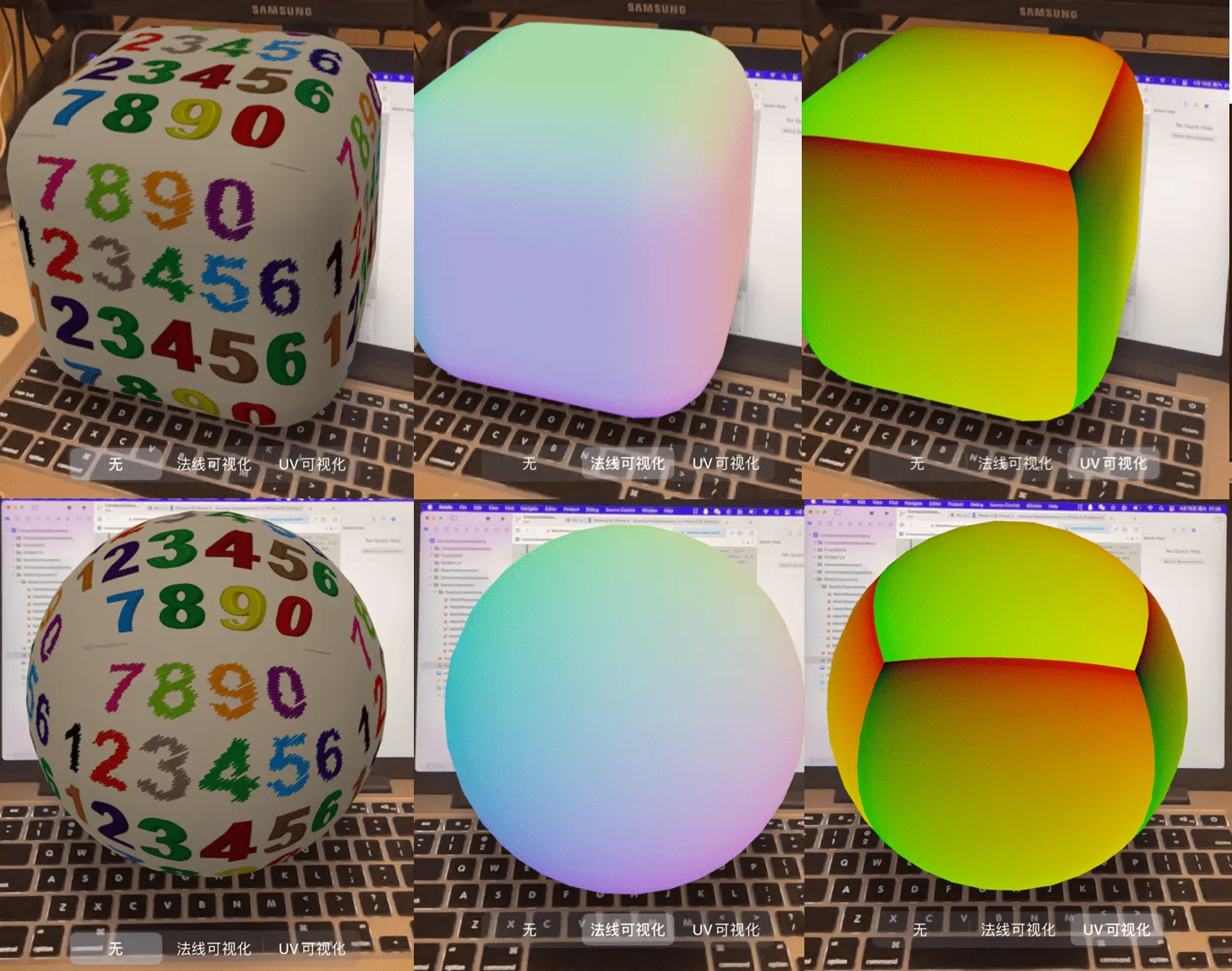

- Unreal node reference documentation: Unreal Engine Material Functions Reference. Replicated 28 Blueprint Shader nodes, example effects shown below:

- Unity node reference documentation: Unity Shader Graph Node Library. Replicated 28 Shader Graph nodes, example effects shown below:

- Color blend nodes, Unity reference documentation: Unity Blend Node; Unreal reference documentation: Unreal Blend Functions. Replicated over 20 color blend modes and 8 color space conversion nodes, example effects shown below:

Shader Graph Debugging

During the manual migration of these nodes, I encountered many bugs, which can be categorized into three types:

- Accidental mistakes

- Misunderstanding (misuse) of RCP node parameters

- RCP’s own bugs

Let’s discuss how to handle these bugs.

a. Accidental Mistakes

The simplest approach is to spend considerable time checking and comparing nodes and connections. We can also use “false-color images” to verify output values or intermediate values.

“False-color images” refers to mapping output values to the [0, 1] range and using them as RGB values for output.
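As a minimal sketch of that remapping, written in plain Swift rather than as Shader Graph nodes, the helper below normalizes a value from a known range into [0, 1] and reuses it for all three color channels. The name `falseColor` and the grayscale output are my own assumptions for illustration; in RCP the same arithmetic would be wired up from nodes.

```swift
/// Hypothetical helper: maps `value` from [minValue, maxValue] to [0, 1]
/// and returns it as a grayscale RGB triple for visual inspection.
func falseColor(_ value: Float, minValue: Float, maxValue: Float) -> (r: Float, g: Float, b: Float) {
    // Guard against a degenerate range to avoid dividing by zero.
    guard maxValue > minValue else { return (0, 0, 0) }
    // Normalize, then clamp so out-of-range values saturate to black or white.
    let t = (value - minValue) / (maxValue - minValue)
    let clamped = min(max(t, 0), 1)
    return (clamped, clamped, clamped)
}

// A value in [-1, 1] (for example a sine wave) becomes a visible gray level.
let color = falseColor(0.5, minValue: -1, maxValue: 1)
print(color) // r, g and b are all 0.75
```

Values outside the expected range show up as pure black or pure white, which is often the quickest hint that an intermediate result is off.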

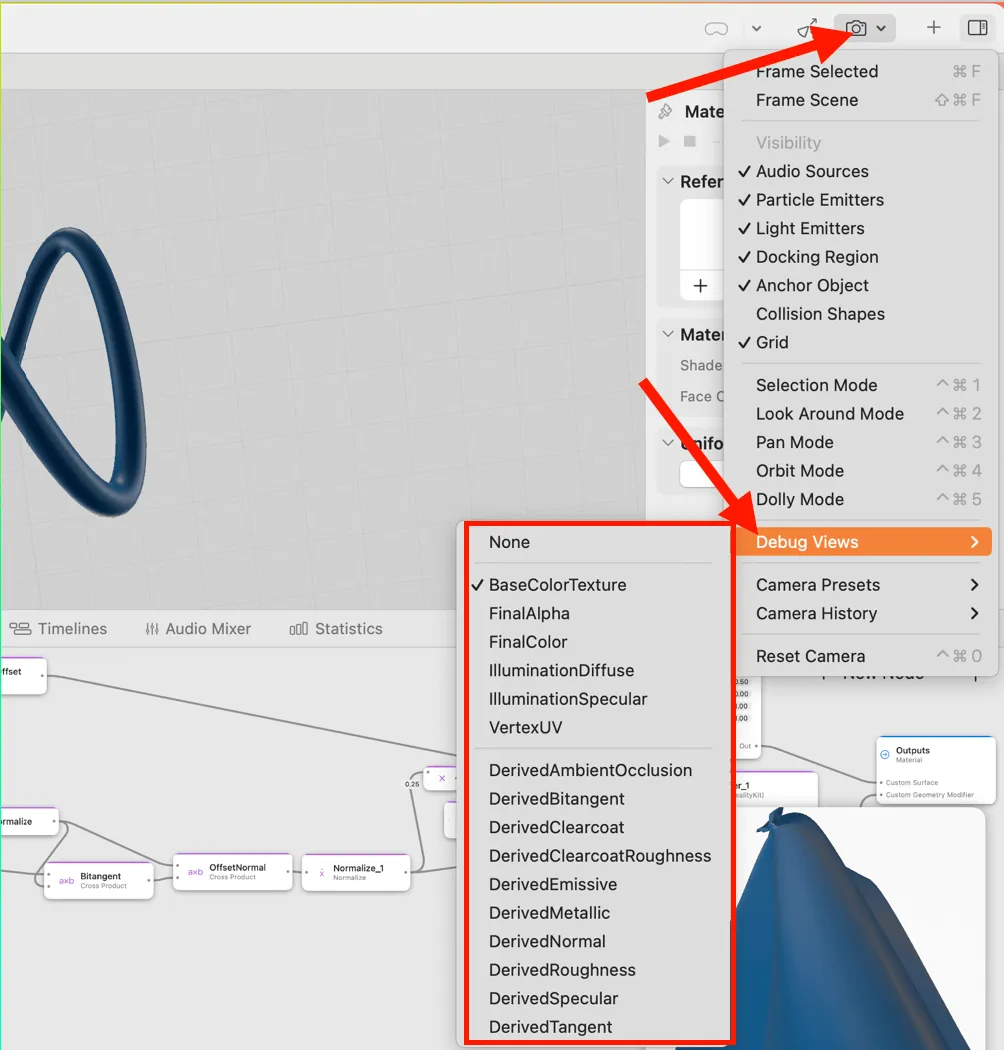

When writing code in Xcode, RealityKit’s built-in debug component ModelDebugOptionsComponent can display UV coordinates and normals in different colors.
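A minimal sketch of enabling this from code is shown below. The helper name `showDebugView` is hypothetical, and the entity is assumed to be one you already have in your scene; only `ModelDebugOptionsComponent` and its `visualizationMode` come from RealityKit.

```swift
#if canImport(RealityKit)
import RealityKit

/// Hypothetical helper: attaches RealityKit's debug component to an entity
/// so that it renders with the chosen visualization instead of its material.
@MainActor
func showDebugView(on entity: Entity,
                   mode: ModelDebugOptionsComponent.VisualizationMode) {
    entity.components.set(ModelDebugOptionsComponent(visualizationMode: mode))
}
#endif
```

For example, calling `showDebugView(on: model, mode: .normal)` displays normals as colors, and `.textureCoordinates` does the same for UVs.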

In RCP, there’s also a built-in Debug Views feature in the top-right corner that can display normals, UV coordinates, roughness, etc., in colors.

Besides the built-in methods, we can also manually convert values to colors for display. In the project examples, many output values are manually converted to RGB colors for more flexible visualization.

b. Misuse of RCP Node Parameters

The most common issue is that some RCP node parameters have different meanings compared to Unity and Unreal.

For example, RCP doesn’t have a lerp function, but you can use Mix instead. Note that Mix takes its two value inputs in the opposite order: its first input is the one returned when the mix factor is 1. The same goes for the Step function, whose two parameters are also swapped:

```
// HLSL (Unity / Unreal)
lerp(a, b, t) = b * t + a * (1 - t)
step(edge, x)

// RCP
mix(F, B, m) = F * m + B * (1 - m)
step(in, edge)
```
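To make the swap concrete, here is a small Swift sketch that mirrors the formulas above on plain floats. The function names are hypothetical stand-ins, not RCP or RealityKit APIs, and the Step behavior (1 when the input is at or above the edge) is my assumption.

```swift
/// HLSL-style lerp as used in Unity and Unreal: returns a at t = 0, b at t = 1.
func hlslLerp(_ a: Float, _ b: Float, _ t: Float) -> Float {
    return b * t + a * (1 - t)
}

/// RCP-style Mix, following the formula above: returns bg at m = 0, fg at m = 1.
func rcpMix(fg: Float, bg: Float, m: Float) -> Float {
    return fg * m + bg * (1 - m)
}

/// HLSL-style step: 1 when x >= edge, otherwise 0.
func hlslStep(edge: Float, x: Float) -> Float {
    return x >= edge ? 1 : 0
}

/// RCP-style Step with its inputs in the order listed above: (in, edge).
/// Assumption: it returns 1 when the input is at or above the edge.
func rcpStep(input: Float, edge: Float) -> Float {
    return input >= edge ? 1 : 0
}

// Porting lerp(a, b, t): a goes into Mix's background slot, b into foreground.
let a: Float = 2, b: Float = 10, t: Float = 0.25
let unityResult = hlslLerp(a, b, t)
let rcpResult = rcpMix(fg: b, bg: a, m: t)
print(unityResult == rcpResult) // true
```

In other words, when porting a graph, the value you passed first to lerp goes into Mix's second slot, and the value you passed second to step goes into Step's first slot.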

Another example is RCP’s conditional selection node MTLSelect, which outputs parameter B when the condition is true and parameter A when it is false. This is the reverse of the usual “true value first” ordering and requires special attention.
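The selection behavior described above can be sketched in Swift as follows. Both functions are hypothetical illustrations of the ordering, not real node APIs; `branch` shows one way to think about the mapping when porting a node that lists its true value first.

```swift
/// Sketch of the selection order described above:
/// returns b when the condition is true, a when it is false.
func mtlSelect<T>(_ a: T, _ b: T, _ condition: Bool) -> T {
    return condition ? b : a
}

/// Hypothetical wrapper with the "true value first" order,
/// showing which slot each value has to be connected to.
func branch<T>(_ condition: Bool, ifTrue: T, ifFalse: T) -> T {
    return mtlSelect(ifFalse, ifTrue, condition)
}

let picked = branch(true, ifTrue: "red", ifFalse: "blue")
print(picked) // red
```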

Some nodes also go by different names than the usual shader functions. The derivative functions ddx, ddy, and fwidth, for example, appear in RCP under their full names:

- ddx (dFdx): Screen-Space X Partial Derivative

- ddy (dFdy): Screen-Space Y Partial Derivative

- fwidth: Absolute Derivatives Sum
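If the relationship between these three is unfamiliar, the CPU-side sketch below approximates them with finite differences between neighboring pixels. On the GPU the derivatives come from the hardware, so this is only an illustration; `field` and the pixel coordinates are hypothetical stand-ins for any shader expression.

```swift
/// Hypothetical per-pixel value, standing in for any shader expression.
func field(_ x: Float, _ y: Float) -> Float {
    return 3 * x - 2 * y
}

/// ddx: change in the value between horizontally adjacent pixels.
func ddx(_ f: (Float, Float) -> Float, _ x: Float, _ y: Float) -> Float {
    return f(x + 1, y) - f(x, y)
}

/// ddy: change in the value between vertically adjacent pixels.
func ddy(_ f: (Float, Float) -> Float, _ x: Float, _ y: Float) -> Float {
    return f(x, y + 1) - f(x, y)
}

/// fwidth: the sum of the absolute values of both derivatives.
func fwidth(_ f: (Float, Float) -> Float, _ x: Float, _ y: Float) -> Float {
    return abs(ddx(f, x, y)) + abs(ddy(f, x, y))
}

let width = fwidth(field, 4, 7)
print(width) // 5.0
```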

c. RCP’s Bugs

During development, you might encounter some RCP bugs where effects don’t update automatically or incorrect values are provided. Common solutions include:

- Restarting RCP

- Recreating the problematic Node and reconnecting

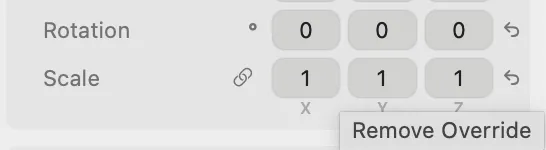

- Selecting the problematic node and clicking the Remove Override button ↩️ in the input/output panel

If you encounter particularly strange issues while writing Shader graphs where everything seems correct but the effect isn’t working properly, you’ll need to investigate - it’s likely that a node has malfunctioned. Deleting and recreating the node, or clicking the Remove Override button ↩️ to reset inputs and outputs might restore normal operation.

Instancing Technology

I used Instancing when creating Node Graphs in the project. Instancing is similar to a singleton pattern - it saves CPU and memory costs because it only loads one instance in memory and reuses it. However, this can make usage slightly inconvenient, so there are generally 3 ways to use nodes from RealityShaderExtension:

- a. Directly copy and paste all Node Graphs, including nested ones. No need to import source files, but requires manual copying of nested Nodes.

- b. Place source files in the project, right-click to create Instancing, then copy and paste Instancing nodes. Suitable for frequent reuse.

If you modify the original Node Graph, all Instancing content will synchronously change.

- c. Create Instancing, then disable Instancing. No need to import source files, equivalent to automatic copying.

If you modify the original Node Graph, disabled instances won’t change because they are different nodes.

Future Outlook

After completing the migration of nearly a hundred nodes in RealityShaderExtension, I believe the current Shader Graph has a low entry barrier suitable for beginners while being functionally complete enough to build complex effects.

However, RCP currently has some bugs and needs further functional improvements, such as:

- Enhanced collaboration with code in Xcode

- Improved bidirectional indexing of material files so that moving source files won’t affect files referencing them

Finally, I hope RealityShaderExtension will be helpful for everyone’s development!

References

- RealityShaderExtension

- Unreal Engine Material Functions Reference

- Unity Shader Graph Node Library

- Unity Blend Node

- Unreal Blend Functions
