XR World Weekly 010

Editor’s Note

Half a month has flown by, and quite a few interesting things happened in that time. For example, news that ByteDance planned to abandon its PICO business circulated widely in group chats, and it even reached overseas media:

- Reddit: PICO XR will be gradually shut down — ByteDance abandons the Metaverse [RUMOR]

- Mixed-News: Bytedance to gradually shut down Pico, report says - Bytedance denies

However, an official denial came soon after, and both stories on Reddit and Mixed-News were quickly labeled as fake news.

Setting aside what is true and what is false in this matter for now, the reaction of overseas media alone shows that PICO still holds a place in the international market. This is completely different from a decade ago, when smartphones were just taking off and Chinese manufacturers had almost no voice.

Coincidentally, Apple CEO Tim Cook visited China for the second time this year and said that Chinese developers will achieve global success in the AR era. (For reference: of the 1.1 trillion US dollars in developer revenue and sales on the App Store in 2022, Chinese developers contributed 523 billion.)

This suggests that in the XR era, developers from China will have ample opportunity to make their voices heard.

So, whether you are a newcomer to this industry or a veteran who has worked in it for many years, please don't be discouraged by news like the story at the beginning. There will always be twists and turns on the way to the summit, but as long as we keep our strength and a clear head, we will eventually reach the top.

Therefore, to mark the 10th issue of XR World Weekly, we would also like to offer everyone some encouragement in a special way.

We will run a lottery on five platforms: Weibo, Jike, our WeChat Official Account, Juejin, and Twitter. On each platform we will randomly select 3 winners to receive a genuine Apple thermos cup, for a total of 15 prizes!

So what conditions need to be met to participate in the lottery?

Comment on and repost the 10th issue of XR World Weekly, follow XRealityZone's social media accounts, and you will be entered into our lottery!

Table of Contents

BigNews

- Reality Composer Adds Object Capture functionality

Idea

- Exploring “true” 3D interactive design

- Playing with particles on visionOS

- If games have Object Capture…

- What is the experience of reading long replies in space?

Video

- How to shoot and produce 3D spatial images?

- Let’s Make A VR Game

Code

- RealityBounds—a convenient way for you to quickly view boundaries in visionOS

SmallNews

- John Riccitiello resigns as Unity CEO

BigNews

Reality Composer Adds Object Capture Functionality

Keywords: Reality Composer, Apple, Object Capture

In 2021, Apple introduced Object Capture, a technology that generates 3D models from photos (we also discussed it in our article “Before developing visionOS, you need to understand the full view of Apple AR technology”). Initially, Apple only provided this capability as an API on macOS and did not offer a complete app for regular users.

As a result, several third-party apps on the App Store, such as Amita Capture and Pixel 3D, have wrapped this technology and done a great job of turning it into polished products.

However, before iOS 17, using this feature required taking photos on the phone and then transferring them to a Mac for processing. The whole process was somewhat cumbersome.

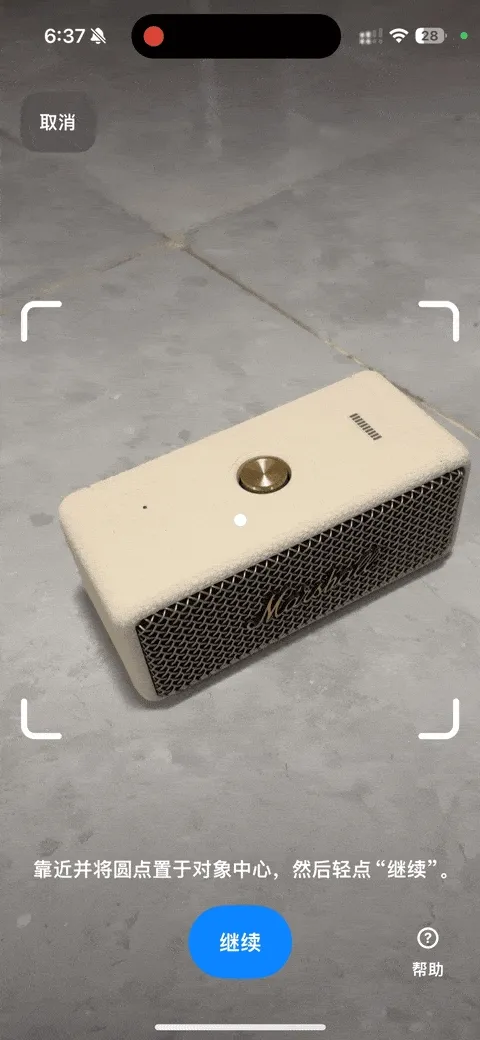

In iOS 17, Apple not only brought photo processing to the phone itself (see “Meet Object Capture for iOS” on wwdcnotes.com for details), but also released an official sample app with an improved capture experience. Whereas photo capture used to offer no guidance at all, the new sample shows a circular progress indicator so users can see how far along the capture is.

Not only that, Apple has even built this feature into the iOS version of Reality Composer.

It is worth noting that 3D models generated on the phone have lower accuracy. If you want a higher-accuracy model, you still need to process the photos on a Mac, either with a similar macOS app or directly with a command-line tool.

However, for ordinary users, even the lower-accuracy models produced on the phone look “deceptively real” in most cases. For example, take a look at the two PS5 controllers in the picture below. Can you guess which one is real and which one is the model?

Idea

Exploring “True” 3D Interaction Design

Keywords: 3D UX, Prototype, Hand Interactions, Eye Tracking

We often hear doubts like this: our phones, computers, and TVs already meet almost all of our needs, so why do we need Mixed Reality (MR)? The assumption seems to be that MR is just icing on the cake for existing needs. In reality, however, almost all of our interactions with machines today happen in two dimensions: tapping and swiping on phones, computers, and tablets, or pressing buttons on remote controls. Yet in the physical world we are far more familiar with three-dimensional interaction; previous technologies and platforms have simply locked us into two-dimensional interaction surfaces.

Now, with Video See-Through (VST) devices, we have the opportunity to experience three-dimensional clicking, rotating, and dragging in MR. Take Apple Vision Pro as an example: although Apple currently lets some existing apps run as windows, if every app stayed in window form, the platform would have little real appeal. As Gray Crawford, who previously worked as a designer/engineer at Ultraleap / Leap Motion, wrote in his master's thesis:

Even presented with this supremely expansive and unexplored domain of spatial interaction design and physical phenomena in immersive computing, designers will hamper themselves and their users by perpetuating old UI mechanics, turning VR etc into a rough simulacrum of the constrained physical world rather than the means for its transcendence.

Before we dive into the following topic, we strongly recommend reading this old but still relevant article to whet your appetite: “A Brief Rant on the Future of Interaction Design”.

In a visionOS Space, all known principles of interface design can be rewritten; this is how we imagine the future. Today, we introduce dmvrg, a designer from Tokyo whose explorations in 3D UX have given us a lot of inspiration. Below, we look at his thinking on design for spatial scenarios through three tweet series he shared on X.

Part 1: Gesture Interaction

https://twitter.com/dmvrg/status/1231916045503328261

In this series of tweets, dmvrg collected existing design principles and recommendations for gesture interaction from various companies, so we can learn from and compare them. Although many of them still focus on 2D interface concerns, such as scaling UI windows, some ways of interacting directly with objects through gestures are worth referencing:

- Microsoft MR Design Principles

- Meta Gesture Interaction Design Principles

- UltraLeap Gesture Interaction Design Principles

- Apple Gesture Interaction Design Principles

- PICO Gesture Interaction Design Suggestions

Here are some particularly inspiring ideas, which we have highlighted separately and showcased in the following image:

- Meta’s reference frame for scaling objects; pinch-and-pull sliding method

- Ultraleap’s use of a base for rotating objects; creating and manipulating objects with both hands; virtual character guidance design

- Apple’s hand-eye coordination operation; inclusive design for people with disabilities

- HoloLens’ use of bounding boxes for rotation and scaling; different gestures designed for objects of different sizes
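Several of the ideas above (Meta's scaling reference frame, Ultraleap's two-handed manipulation) reduce to a simple mapping from hand positions to object scale. The following is a minimal sketch, not any vendor's actual implementation: it assumes a hand tracker already gives us the 3D positions (in meters) of both pinch points, and it scales the object by the ratio of the current hand distance to the distance when the pinch began, clamped to a range. `TwoHandScaleGesture` and `handDistance` are hypothetical names.

```swift
/// Euclidean distance between two points (meters).
func handDistance(_ a: SIMD3<Float>, _ b: SIMD3<Float>) -> Float {
    let d = a - b
    return (d * d).sum().squareRoot()
}

/// Hypothetical two-handed "pinch and pull apart" scaling gesture.
/// Create it when both hands start pinching, then query it every frame.
struct TwoHandScaleGesture {
    let startDistance: Float
    let startScale: Float
    let minScale: Float
    let maxScale: Float

    init(leftPinch: SIMD3<Float>, rightPinch: SIMD3<Float>,
         currentScale: Float, minScale: Float = 0.1, maxScale: Float = 10) {
        // Guard against a zero distance if both pinch points coincide.
        startDistance = max(handDistance(leftPinch, rightPinch), 0.001)
        startScale = currentScale
        self.minScale = minScale
        self.maxScale = maxScale
    }

    /// Scale to apply to the object for the current pinch positions.
    func scale(leftPinch: SIMD3<Float>, rightPinch: SIMD3<Float>) -> Float {
        let ratio = handDistance(leftPinch, rightPinch) / startDistance
        return min(max(startScale * ratio, minScale), maxScale)
    }
}

// Hands start 20 cm apart, then move to 40 cm apart: the object doubles in size.
let gesture = TwoHandScaleGesture(leftPinch: [-0.1, 1.0, -0.5],
                                  rightPinch: [0.1, 1.0, -0.5],
                                  currentScale: 1.0)
let doubled = gesture.scale(leftPinch: [-0.2, 1.0, -0.5], rightPinch: [0.2, 1.0, -0.5])
print(doubled) // approximately 2.0
```

Using the distance ratio rather than an absolute distance keeps the gesture independent of how far apart the user's hands were when they started, and the clamp prevents an object from collapsing or exploding in size.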

Building on these gesture interaction design principles, dmvrg has designed a large number of interaction prototypes and compared and analyzed them in detail, as shown in the demo video below:

https://twitter.com/dmvrg/status/1706409567620596127

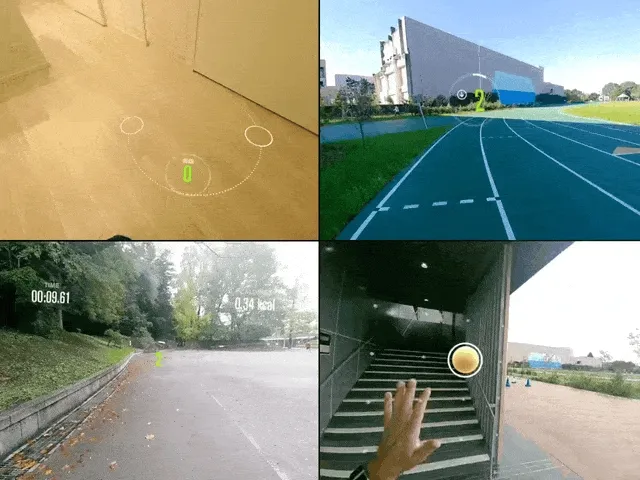

In it, we can see the interface flipping after a tap, pinch-to-zoom, and the interface following the finger's movement. We also see a pinch-and-pull interaction similar to the one Meta proposed. dmvrg has also designed a set of fitness-specific UX interfaces, such as guiding users to place their hands in the correct push-up position and making workouts more fun with guided virtual targets (although we don't recommend doing these exercises while wearing current headsets). We saw a similar app, Active Arcade, at last year's Apple Design Awards (ADA).

In addition, dmvrg recommended many books on gesture design. We have read through several of them, and these are the ones we highly recommend:

- “Hands” by John Napier

- “Brave NUI World: Designing Natural User Interfaces for Touch and Gesture” by Daniel Wigdor and Dennis Wixon

- “Game Feel: A Game Designer’s Guide to Virtual Sensation” by Steve Swink

These three recommended books, cover images from Amazon

Part 2: Eye-Tracking Interaction UX

https://twitter.com/dmvrg/status/1429042498790252550

The first item in this series of tweets on eye-tracking interaction UX is an overview article, “What is Eye Tracking”. We can also learn about gaze interaction design from the design guidelines of several major companies:

- Microsoft’s eye gaze design principles in MR

- Apple’s eye gaze interaction design guidelines

- Tobii’s eye gaze interaction design guide

Microsoft notes that gaze dwell time can be used to evaluate an app's design and to understand user preferences. However, due to privacy concerns, most vendors currently (as of October 2023) do not let developers access this data.
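To make “gaze dwell time” concrete, here is a minimal sketch built on a hypothetical stream of timestamped gaze samples, each naming the target the gaze ray hit (data that, as noted above, most platforms do not expose to developers). It totals dwell time per target, the kind of metric Microsoft describes for analytics, and also shows the classic dwell-to-select pattern. `GazeSample`, `dwellTimes`, and `firstDwellSelection` are illustrative names, not any platform's API.

```swift
/// A hypothetical gaze sample: which UI target (if any) the gaze ray hit at `time` (seconds).
struct GazeSample {
    let time: Double
    let targetID: String?
}

/// Total dwell time per target from a time-ordered list of samples.
/// Each sample's target is credited with the time until the next sample.
func dwellTimes(_ samples: [GazeSample]) -> [String: Double] {
    var totals: [String: Double] = [:]
    for (current, next) in zip(samples, samples.dropFirst()) {
        guard let id = current.targetID else { continue }
        totals[id, default: 0] += next.time - current.time
    }
    return totals
}

/// Dwell-to-select: the first target the gaze stays on continuously for `threshold` seconds.
func firstDwellSelection(_ samples: [GazeSample], threshold: Double) -> (target: String, time: Double)? {
    var currentTarget: String? = nil
    var dwellStart = 0.0
    for sample in samples {
        if sample.targetID != currentTarget {
            // Gaze moved to a different target (or to nothing): restart the timer.
            currentTarget = sample.targetID
            dwellStart = sample.time
        }
        if let id = currentTarget, sample.time - dwellStart >= threshold {
            return (id, sample.time)
        }
    }
    return nil
}

let samples = [
    GazeSample(time: 0.0, targetID: "A"), GazeSample(time: 0.1, targetID: "A"),
    GazeSample(time: 0.2, targetID: "B"), GazeSample(time: 0.3, targetID: "B"),
    GazeSample(time: 0.4, targetID: "B"), GazeSample(time: 0.5, targetID: "B"),
    GazeSample(time: 0.6, targetID: nil),
]
let totals = dwellTimes(samples)
let selection = firstDwellSelection(samples, threshold: 0.25)
print(totals, selection as Any)
```

The same dwell timer that powers analytics can also trigger selection, which is exactly why long dwell thresholds feel sluggish and short ones cause accidental activations.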

As you can see, there are still relatively few concrete guidelines for eye tracking. More exploratory ideas can be found in academic papers from conferences such as CHI, IEEE VR/3DUI, and ISMAR, for example:

- Gaze + pinch interaction in virtual reality, SUI 2017

- A Comparative Analysis of 3D User Interaction: How to Move Virtual Objects in Mixed Reality, VR 2020

- Radi-Eye: Hands-Free Radial Interfaces for 3D Interaction using Gaze-Activated Head-Crossing, CHI 2021

- Exploring 3D Interaction with Gaze Guidance in Augmented Reality, VR 2023

Similarly, building on the eye-tracking interaction design principles above, dmvrg has designed some eye-tracking interaction prototypes. Take a look at this demo:

https://twitter.com/dmvrg/status/1706409567620596127

Part 3: Other UX Prototypes

Beyond the interaction prototypes above, dmvrg has also designed some inspiring prototypes that fit into neither category, such as the Mirrored Word Prototype:

https://twitter.com/dmvrg/status/1440823006985478150

We think this could be combined with objects like magnifying glasses in puzzle games. Map apps could also let users look through a “window” at a spot on the street, for example to see what the area looked like in the past. Speaking of maps, dmvrg has also designed a navigation prototype, the Map Prototype. Although there is still room for improvement, it is another exploration of MR-based navigation that goes beyond AR on smartphones.

https://twitter.com/dmvrg/status/1304594664511516672

Hands and eyes (and even our feet), as our natural organs for perceiving the world, will be important carriers of interaction in spatial computing for the foreseeable future. We have shared how designers and developers in the community are designing and imagining these interactions. At the same time, we should note that these carriers are not as stable as we might imagine. As Google researcher Peyman Milanfar pointed out in a tweet:

“Even when we try to keep them perfectly steady, our hands and other muscles always shake a little bit. The natural tremor has a very small, random magnitude and frequency in the range of ~10 Hz. This motion consists of a mechanical reflex and a second component that causes micro-contractions in muscles.”

So we believe we should not design interactions that require users to stare at one spot or hold their hands still for long periods. Avoiding such designs may also reduce the computational load on hand- and eye-tracking systems, and it better matches our physical characteristics. As we gain a better understanding of how the human body works, we will be able to design and implement future 3D interactions more effectively.
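A common engineering response to the ~10 Hz tremor described above is to low-pass filter tracked hand positions before using them. The sketch below is an illustration, not what any headset vendor ships: a first-order low-pass filter with a cutoff well below 10 Hz, applied to a simulated 90 Hz stream of fingertip positions with a synthetic 1 mm, 10 Hz tremor. The type name and all numbers (2 Hz cutoff, 90 Hz sampling, tremor amplitude) are assumptions chosen for the demo.

```swift
import Foundation

/// First-order low-pass filter for a stream of 3D positions (meters).
struct LowPassFilter3D {
    let cutoffHz: Double
    private var state: SIMD3<Double>? = nil

    init(cutoffHz: Double) {
        self.cutoffHz = cutoffHz
    }

    /// Blend factor for a sample interval `dt` (seconds): alpha = dt / (dt + tau).
    func alpha(dt: Double) -> Double {
        let tau = 1.0 / (2.0 * Double.pi * cutoffHz)
        return dt / (dt + tau)
    }

    mutating func filter(_ x: SIMD3<Double>, dt: Double) -> SIMD3<Double> {
        guard let previous = state else {
            state = x  // first sample: nothing to smooth against yet
            return x
        }
        let y = previous + alpha(dt: dt) * (x - previous)
        state = y
        return y
    }
}

/// Length of a vector.
func length(_ v: SIMD3<Double>) -> Double { (v * v).sum().squareRoot() }

// Simulated steady fingertip at `rest` with a 1 mm, 10 Hz tremor along x, sampled at 90 Hz.
let rest = SIMD3<Double>(0, 1.2, -0.3)
let dt = 1.0 / 90.0
var lowPass = LowPassFilter3D(cutoffHz: 2.0)
var rawPeak = 0.0, filteredPeak = 0.0
for i in 0..<180 {
    let t = Double(i) * dt
    let raw = rest + SIMD3<Double>(0.001 * sin(2 * Double.pi * 10 * t), 0, 0)
    let smoothed = lowPass.filter(raw, dt: dt)
    if i >= 45 {  // ignore the first 0.5 s while the filter settles
        rawPeak = max(rawPeak, length(raw - rest))
        filteredPeak = max(filteredPeak, length(smoothed - rest))
    }
}
print("raw peak: \(rawPeak * 1000) mm, filtered peak: \(filteredPeak * 1000) mm")
```

The trade-off is latency: a lower cutoff suppresses more tremor but makes a cursor lag behind intentional movement. Adaptive filters such as the One Euro filter (Casiez et al., CHI 2012) raise the cutoff as hand speed increases to balance the two.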

Playing with Particles on visionOS

Keywords: visionOS, Reality Composer Pro, Particle System

After much anticipation, with the release of Apple Vision Pro, RealityKit and Reality Composer Pro finally have their own advanced particle system.

A ParticleSystem is a 3D tool used for creating and rendering phenomena such as rain, snow, debris, sparks, smoke, falling leaves, and floating dust. Apple provides 6 presets for particle effects:

Our good friend Yasuhito Nagatomo has also put together a PDF to help everyone quickly get started with this particle system. However, if you are a developer familiar with Unity or Unreal, you may find that the particle system on visionOS is not yet as mature, for example:

- Rendering options for particles:

  - Unity provides rendering modes such as Billboard, Stretched Billboard, Horizontal Billboard, Vertical Billboard, and Mesh.

  - In visionOS 1 Beta 4, there are only three rendering modes, all based on Billboard: Billboard, BillboardYAligned, and free.

The Billboard rendering mode refers to the technique where each particle always faces the camera. This is particularly useful for certain types of particle effects where a flat 2D texture (such as smoke, fire, or sparks) represents the particle itself. Facing the camera at all times ensures that these textures are always visible and prevents the user from seeing the particles from the edges, where they would be nearly invisible.
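The difference between Billboard and BillboardYAligned can be shown with a little vector math. This is a simplified sketch, not RealityKit's internal implementation: it assumes a quad whose front face points along +Z, and computes (a) the full-billboard facing direction, which points straight at the camera, and (b) the yaw angle used when rotation is restricted to the vertical (Y) axis. The function names are hypothetical.

```swift
import Foundation

/// Unit vector from the particle toward the camera: the normal of a full billboard quad.
func billboardFacing(particle: SIMD3<Float>, camera: SIMD3<Float>) -> SIMD3<Float> {
    let d = camera - particle
    let len = (d * d).sum().squareRoot()
    return len > 0 ? d / len : SIMD3<Float>(0, 0, 1)
}

/// Y-aligned billboard: rotate only around the Y axis, ignoring camera height.
/// Returns the yaw (radians) that turns a +Z-facing quad toward the camera,
/// since rotating (0, 0, 1) by yaw θ around Y gives (sin θ, 0, cos θ).
func yAlignedYaw(particle: SIMD3<Float>, camera: SIMD3<Float>) -> Float {
    let d = camera - particle
    return Float(atan2(Double(d.x), Double(d.z)))
}

let particle = SIMD3<Float>(0, 0, 0)
let cameraAbove = SIMD3<Float>(0, 5, 2)
// The full billboard tilts up toward the camera; the Y-aligned one stays upright (yaw 0).
print(billboardFacing(particle: particle, camera: cameraAbove))
print(yAlignedYaw(particle: particle, camera: cameraAbove))
```

Y-aligned billboards suit effects that should stay upright, such as flames or rising smoke, while full billboards suit round, orientation-free sprites such as sparks.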

- Number of associated particle emitters:

  - visionOS 1 Beta 4 supports at most two associated emitters: Main and Secondary (referred to as `mainEmitter` and `spawnedEmitter` in the code).

- The particle system does not support ShaderGraph.

  - Nagatomo has proposed a workaround for now: texture animation.

  - Using Blender: fluid simulation => export PNG images => create a texture atlas => use RealityKit's particle system to play the texture animation.
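Nagatomo's workaround plays a pre-rendered simulation as a flipbook from a texture atlas. Whatever engine feature ends up drawing it, the bookkeeping is the same: pick a frame from the elapsed time, then find that frame's rectangle in the atlas. Here is a minimal sketch, assuming frames are laid out row-major (left to right, top to bottom) with the UV origin at the top-left; `AtlasLayout` and its methods are hypothetical names.

```swift
/// A hypothetical flipbook texture atlas: `columns` x `rows` equally sized frames,
/// laid out row-major from the top-left corner.
struct AtlasLayout {
    let columns: Int
    let rows: Int
    var frameCount: Int { columns * rows }

    /// Frame to show at `time` seconds when playing at `fps`, looping forever.
    func frameIndex(time: Double, fps: Double) -> Int {
        let frame = Int((time * fps).rounded(.down))
        // Wrap negative values too, so the result is always in 0..<frameCount.
        return ((frame % frameCount) + frameCount) % frameCount
    }

    /// Normalized UV rectangle (origin at top-left) of frame `index`.
    func uvRect(frame index: Int) -> (u: Double, v: Double, width: Double, height: Double) {
        let width = 1.0 / Double(columns)
        let height = 1.0 / Double(rows)
        let column = index % columns
        let row = index / columns
        return (Double(column) * width, Double(row) * height, width, height)
    }
}

let atlas = AtlasLayout(columns: 4, rows: 4)  // e.g. 16 PNG frames exported from Blender
let frame = atlas.frameIndex(time: 1.05, fps: 12)
let rect = atlas.uvRect(frame: frame)
print(frame, rect)
```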

Of course, there is still much to explain about particles. If you want to know how to play with particles in visionOS and add more fun to your project, stay tuned for an upcoming article titled “Playing with Particles in visionOS”.

If games have Object Capture…

Keywords: MR, Object Capture

Earlier, we covered what Object Capture can do. So what scenarios can this technology be used for? 3D artist Sergei Galkin has shown us one possibility: capturing a digital copy of your car and driving it with a PS5 controller.

https://twitter.com/sergeyglkn/status/1714630699704086702

What is the experience of reading long replies in space?

Keywords: visionOS, Twitter Threads

What is the biggest surprise that spatial computing devices like Apple Vision Pro bring us? Surely it is that scenes are no longer bounded by a screen.

In such expansive scenes, many development paradigms originally designed for 2D screens may change. For example, on visionOS, you could read a long thread of tweet replies like this:

https://twitter.com/graycrawford/status/1714347211977605293

Video

How to shoot and produce 3D spatial videos?

Keywords: Binocular Stereo Vision, Depth Perception, Spatial Video

Our previous article, “What is spatial video on iPhone 15 Pro and Apple Vision Pro?”, was only a brief introduction to spatial video and left out many details about spatial video and 3D effects. So we also recommend a video series on shooting and producing 3D images: “GoneMeme’s series videos”.

Among them, there are a few episodes that we want to summarize:

V Series Episode 1: Introduction to Stereoscopic Imaging Principles

- Mechanism of human stereoscopic vision

-

Physiological characteristics

-

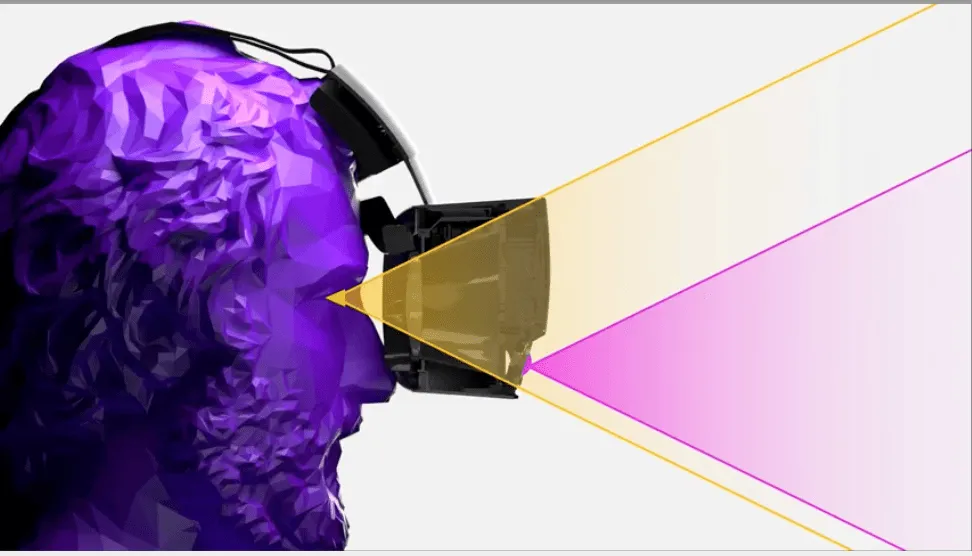

The physiological mechanisms for judging depth, in order of priority, include: binocular convergence, binocular disparity, monocular focusing (the tenseness of the ciliary muscles and the degree of blur in defocused areas when focusing)

Human eye equivalent aperture: F2.4 - F6.8

- The optical design focal length of Quest 2 is 1.3 meters (distance from standing or sitting position to the ground; 20 times the interpupillary distance (65mm))

-

-

Psychophysical characteristics

- Mechanisms of the brain in supplementing depth information

- Angular/affine/motion parallax (objects appear bigger as they get closer)

- Occlusion (near objects obstruct distant objects)

- Lighting and shadows (different lighting produces different patterns of shadows)

- Texture (regular distribution of features)

- Experience

- Mechanisms of the brain in supplementing depth information

-

- Binocular Convergence (Vergence)

- A large part of the human sense of depth perception comes from the perception of depth of the fixation point.

- Current mainstream display devices (including Quest, PICO, Apple Vision Pro) use optics with fixed focal lengths, which cannot solve the problem of mismatched focus depths (Vergence Accommodation Conflict, VAC). Future solutions:

- Use headsets with variable focus. In SIGGRAPH 23, Meta unveiled a prototype headset, Butterscotch Varifocal, which can physically adjust the focus. By tracking the wearer’s gaze, when the wearerWhen the wearer focuses on nearby objects, the head-mounted display physically moves the screen closer to the wearer’s eyes. This feature can help users enter a tense state of the ciliary muscle when their eyes are focused.

- The rendering of the fixation point simulates the blur of out-of-focus.
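To put numbers on vergence and VAC, here is a small sketch using the figures above: a 65 mm interpupillary distance and Quest 2's 1.3 m optical focal distance. It computes the vergence angle needed to fixate a point straight ahead and the vergence-accommodation mismatch in diopters (1/meters), a unit commonly used to express VAC. The geometry is simplified (a point on the midline, symmetric eyes), and the function names are our own.

```swift
import Foundation

/// Vergence angle (radians) to fixate a point straight ahead at `distance` meters,
/// for eyes `ipd` meters apart.
func vergenceAngle(distance: Double, ipd: Double = 0.065) -> Double {
    2 * atan((ipd / 2) / distance)
}

/// Vergence-accommodation mismatch in diopters: the eyes converge at `vergenceDistance`
/// while the fixed-focus optics keep the image at `focalDistance` (both in meters).
func vacMismatch(vergenceDistance: Double, focalDistance: Double) -> Double {
    abs(1 / vergenceDistance - 1 / focalDistance)
}

let focalDistance = 1.3  // Quest 2 figure quoted above, in meters
for distance in [0.3, 0.5, 1.3, 3.0] {
    let degrees = vergenceAngle(distance: distance) * 180 / Double.pi
    let mismatch = vacMismatch(vergenceDistance: distance, focalDistance: focalDistance)
    print(String(format: "%.1f m: vergence %.2f deg, VAC %.2f D", distance, degrees, mismatch))
}
```

The mismatch is zero for content placed at 1.3 m and grows quickly for close-up content (about 2.6 D at 30 cm), which is why near-field content is where VAC discomfort tends to show up. Comfort thresholds vary across studies, so none is hard-coded here.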

V Series Episode 3: Pupillary Distance

- Definitions

  - Pupillary distance: the distance between the pupils when the eyes look straight ahead at infinity.

  - Interpupillary distance (of a head-mounted display): the distance between the optical centers of the headset's two lenses.

  - For a stereo camera, the distance between the parallel optical axes of its two lenses is also sometimes called the pupillary distance; to distinguish the two, it is called the interaxial distance here:

    - Actual interaxial distance: that of a physical stereo camera

    - Virtual interaxial distance: that of a virtual stereo camera

- Why pupillary distance matters

  - If the pupillary distance is mismatched, i.e., the eyes and the lenses do not line up, the image may appear blurred and distorted, and depth perception may be abnormal.

  - If the pupillary distance and the interaxial distance do not match, depth perception may be abnormal.
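Why does a mismatch between camera interaxial distance and the viewer's pupillary distance distort depth? A first-order model helps. For a point at depth Z seen by two parallel cameras a baseline b apart, the angular disparity is roughly b / Z (small angles); with focal length f in pixels, the pixel disparity is d = f · b / Z, so Z = f · b / d. A viewer whose eyes are e apart reads that same angular disparity as depth e / (b / Z) = Z · e / b, so the scene appears scaled by about e / b: a wider camera baseline makes things look smaller and closer. This sketch assumes matched fields of view and ignores convergence and screen distance; the function names are hypothetical.

```swift
/// Depth (meters) from pixel disparity for a rectified, parallel stereo pair:
/// Z = f * B / d, with focal length `focalPx` in pixels and `baseline` in meters.
func depthFromDisparity(focalPx: Double, baseline: Double, disparityPx: Double) -> Double {
    focalPx * baseline / disparityPx
}

/// Approximate perceived depth when footage shot with camera baseline `cameraBaseline`
/// is viewed by eyes `viewerIPD` apart (small-angle model, matched field of view).
func perceivedDepth(trueDepth: Double, cameraBaseline: Double, viewerIPD: Double) -> Double {
    trueDepth * viewerIPD / cameraBaseline
}

let z = depthFromDisparity(focalPx: 1000, baseline: 0.065, disparityPx: 32.5)
let matched = perceivedDepth(trueDepth: z, cameraBaseline: 0.065, viewerIPD: 0.065)
let hyper = perceivedDepth(trueDepth: z, cameraBaseline: 0.13, viewerIPD: 0.065)
print(z, matched, hyper)  // a 2 m object appears at about 1 m when the baseline is doubled
```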

Importance of pupillary distance for MR (Mixed Reality):

In VST head-mounted displays, this issue directly affects the basic user experience. Apple Vision Pro places its two main cameras for image capture directly below the eyes, at roughly the average pupillary distance, and Meta Quest 3 has moved its color passthrough cameras from the sides (where Quest 2's sat) to positions at roughly the average pupillary distance. (For details, see our previous article “Detailed Analysis of the Upgrades of Meta Quest 3 in MR Technology”.)

In addition, Meta has published research on reprojection methods that keep the passthrough view aligned when the perspective changes, without requiring correction.

Beyond the episodes we summarized, the rest of the series covers the imaging principles and distortion correction of fisheye cameras, disparity analysis, the principles of VR 180 video, and a discussion of its shortcomings. It is packed with practical information and well worth watching for enthusiasts and practitioners in this field.

Let’s Make A VR Game

Keywords: VR, Tutorial, Game, Grab, Continuous Move

https://www.youtube.com/watch?v=pm9W7r9BGiA

If you want an easy way into VR game development, Valem Tutorials' Let's Make A VR Game series is a good introduction.

In this series of videos, Valem introduces how to make a VR game from scratch, covering the following topics:

- Project configuration

- Scene building

- Object grabbing

- Player movement

- Narrative logic

- Sound effects

These topics cover the basic skills needed to build a simple VR game, making the series well suited to anyone with some Unity experience who wants to learn the VR game development workflow.

Code

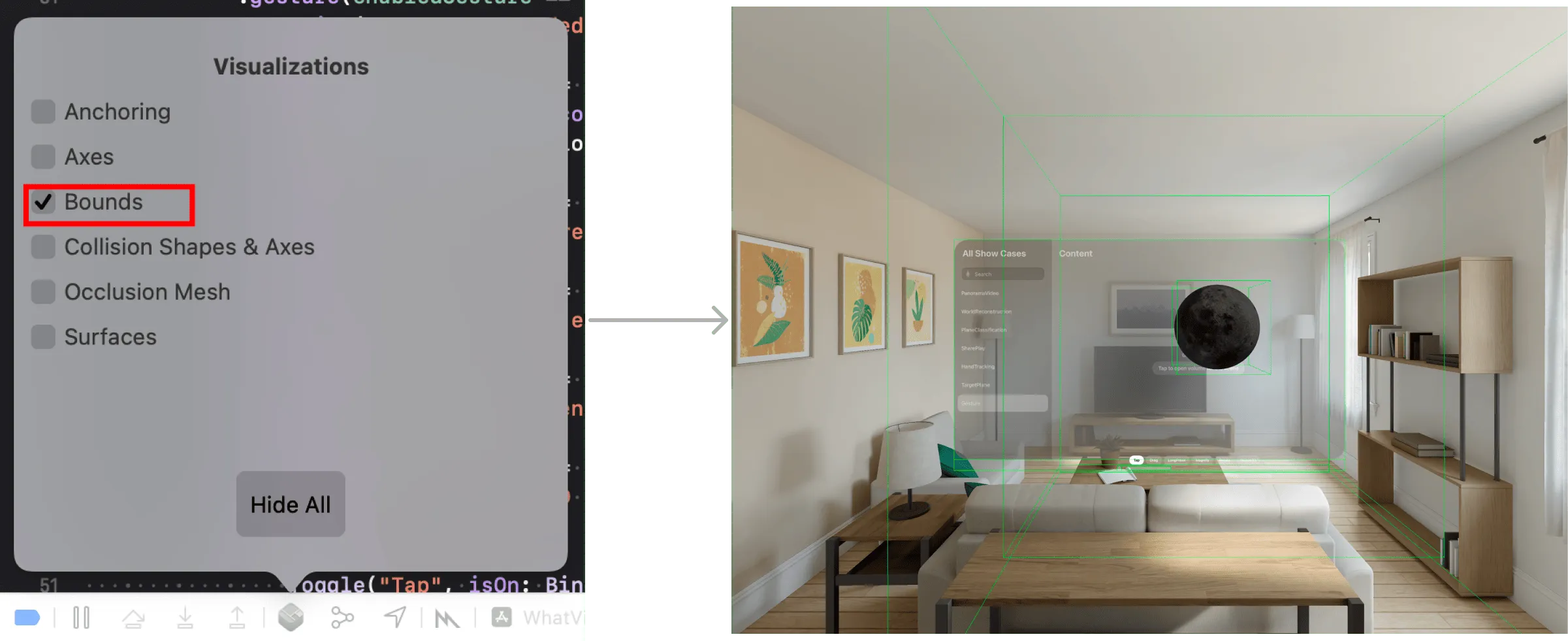

RealityBounds—a convenient way for you to quickly view boundaries in visionOS

Keywords: Volume, Swift Package, visionOS, Debug

For years, 3D software engineers around the globe have been trying to answer a seemingly simple question: “Is my content in the right spot?”

Indeed, front-end, iOS, and Android engineers may ask “Is my content in the right spot?” every day as well. In 2D UI development, however, mature debugging tools such as Web Inspector in browsers, Lookin on iOS, and Layout Inspector on Android let engineers working on a 2D plane locate problems quickly.

In the 3D world, and especially in the brand-new world of RealityKit + SwiftUI, such debugging tools are still lacking. Although Xcode offers a Bounds option in its debug visualizations, it only outlines an object's edges and cannot tell you the actual length of an edge.

Fortunately, developer Ivan Mathy recently open-sourced a Swift package called RealityBounds that draws view boundaries in visionOS, so we can check whether our scene extends beyond them.

The usage is also very simple. After importing the corresponding Swift Package, just add the following code in your code:

    import SwiftUI
    import RealityKit
    import RealityBounds

    struct GameWindow: View {
        var body: some View {
            RealityView { content in
                // Add RealityBounds' visualizer to draw the window/volume boundaries
                content.add(BoundsVisualizer())
            }
        }
    }

You can see the scene boundaries like this in the simulator:

However, with the default initialization parameters, the boundaries currently do not show up in a visionOS volume window, so for now we suggest initializing it with a slightly smaller value. We have also filed an issue on the repository.

    // The default bounds are [1, 1, 1]; a slightly smaller value stays visible in a volume
    content.add(BoundsVisualizer(bounds: [0.9, 0.9, 0.9]))
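Beyond drawing the boundary, you may want to check programmatically whether content fits. Here is a minimal sketch, assuming you already have an axis-aligned bounding box for your content in the volume's coordinate space (in RealityKit, `Entity.visualBounds(relativeTo:)` can provide one as a `BoundingBox`) and that the volume is centered on the RealityView's origin. The `AABB` type and `overflow` function are hypothetical helpers, not part of RealityBounds.

```swift
/// Hypothetical axis-aligned bounding box in meters (a stand-in for RealityKit's BoundingBox).
struct AABB {
    var min: SIMD3<Float>
    var max: SIMD3<Float>
}

/// How far `content` sticks out of a volume of size `volumeSize` centered at the origin,
/// per axis. A result of all zeros means the content fits.
func overflow(of content: AABB, inVolumeOfSize volumeSize: SIMD3<Float>) -> SIMD3<Float> {
    let half = volumeSize / 2
    let belowMin = pointwiseMax(-half - content.min, .zero)  // past the -x/-y/-z faces
    let aboveMax = pointwiseMax(content.max - half, .zero)   // past the +x/+y/+z faces
    return pointwiseMax(belowMin, aboveMax)
}

let volume = SIMD3<Float>(1, 1, 1)  // matches BoundsVisualizer's default [1, 1, 1]
let fits = AABB(min: [-0.2, -0.2, -0.2], max: [0.2, 0.2, 0.2])
let tooWide = AABB(min: [-0.3, -0.3, -0.3], max: [0.6, 0.2, 0.2])
print(overflow(of: fits, inVolumeOfSize: volume))
print(overflow(of: tooWide, inVolumeOfSize: volume))  // about 0.1 m past the +x face
```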

SmallNews

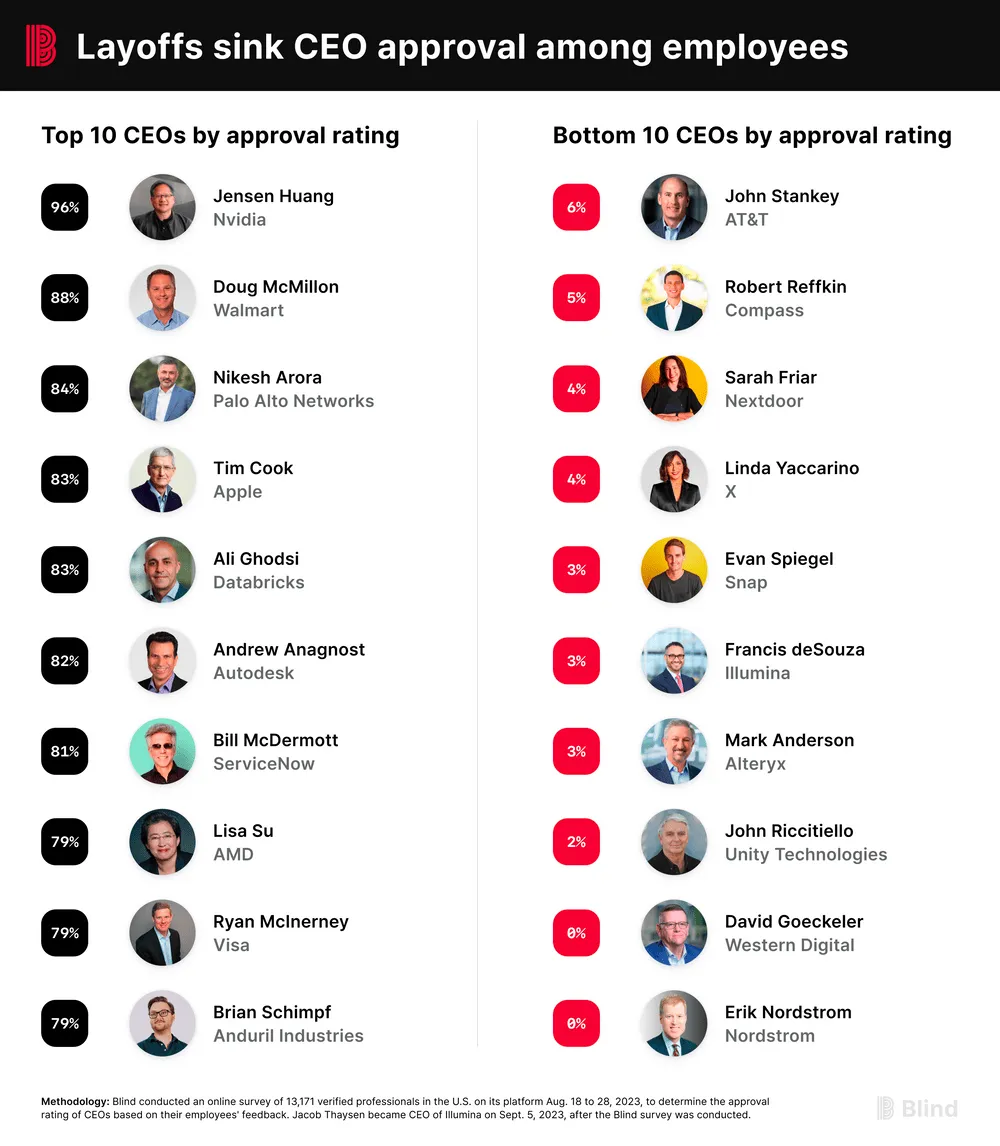

John Riccitiello resigns as Unity CEO

Keywords: Unity, CEO, John Riccitiello

Possibly as a result of the recent controversy over Unity's new fees, John Riccitiello, Unity's chairman and CEO, has resigned.

Coincidentally, in an earlier Blind survey of the most popular tech company CEOs, John Riccitiello ranked last, with an approval rating of only 2%.

Contributors for this issue

| Link | Image |
|---|---|
| Zion |  |
| Onee |  |


XReality.Zone
