XR World Weekly 012

Editor’s Note for This Issue

Another two joyful weeks have passed, as eventful as ever (I imagine many of you have been gripped by the twists and turns of the OpenAI saga). For us at XReality.Zone, the most exciting event of these two weeks was the launch of the Let’s xrOS project (yes, the name is a little joke: if you saw visionOS’s code name in early Xcode betas, you’ll know what xrOS means).

We started this project to give developers and friends interested in visionOS a handy way to share and talk about their apps. Because the project somewhat resembles an App Store, we drew some inspiration from the PICO and Quest app stores while designing it (hence this issue’s piece “What would the App Store on visionOS look like?”). It may not be perfect at first, but rest assured, we will keep iterating and fixing the small bugs in the experience.

That’s all for now. For more details, and more news from the XR world, read on in XR World Weekly Issue 012~

Table of Contents

BigNews

- What would the App Store on visionOS look like?

- Will ai pin be the next iPhone?

- Unity’s visionOS Beta Program Officially Released!

Idea

- Telegram will launch a visionOS version

- What’s it like to play XR while lying down?

Tool

- CSM.ai: Generating 3D models from a single image

- GPTs related to visionOS development

- Blockade Labs releases AI Scene Mesh Generator

- Gaussian Splatting can now run in browsers!

- The explosion of real-time AI drawing tools

- Using AI to generate 360° panoramic animation

Video

- The Secret Behind Portal Effect: How to create portal effects in Unity

Article

- Eyes & hands in AR: Exploring single-hand interaction in AR

Course

- XR Development for Beginners by Microsoft: Microsoft’s official introductory XR course

SmallNews

- Google launches geospatial-based AR hackathon

- 19 movies on Apple TV now support Apple Vision Pro 3D viewing

BigNews

What would the App Store on visionOS look like?

Keywords: Let’s xrOS, visionOS, App Store

With visionOS, its upcoming platform, Apple promises a newly designed App Store. The App Store helped turn the iPhone into the emblem of the mobile internet era, so what kind of experience will the new one bring? We don’t know yet, but we can get a glimpse from the app stores on existing XR devices.

- PICO’s app store

Screenshots don’t look any different, but on the actual device the promotional images appear three-dimensional, so it’s reasonable to guess that the images rendered for the left and right eyes are slightly offset (parallax) to create a sense of depth. Beyond that, the interactions are much the same as in a mobile app store. The advantage of this design is that users can get started with no learning curve; the downside is that the effective interaction area is small and the content is just enlarged 2D, which fails to take advantage of the infinite canvas. This is PICO’s definition and exploration of a spatial app store.

- Quest’s app store

By comparison, Quest’s app store explores spatial features a bit further. When opened, some apps show a three-layer presentation similar to visionOS app icons, as shown in the video below. The layers reflect the app’s character: Fruit Ninja, for example, uses a ninja house as the background, fruit and ninjas as the midground, and the store interface as the top layer. The advantage of this design is that it makes full use of 3D space to refresh the app store experience. It has drawbacks, though. First, Quest simply stacks 2D layers, so viewed from the side they look like flat cards, which badly breaks the illusion. Second, not every app has such an introduction page, so the overall experience lacks consistency.
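Why do stacked flat layers read as depth inside a headset at all? Each layer sits at a different distance, so the two eyes converge on it at a slightly different angle (binocular disparity). The minimal sketch below computes that disparity for a hypothetical three-layer icon; the 63 mm interpupillary distance and the layer depths are our assumptions, not figures from PICO or Quest.

```python
import math

def vergence_deg(depth_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two eyes' lines of sight when fixating a point straight ahead."""
    return math.degrees(2 * math.atan((ipd_m / 2) / depth_m))

def relative_disparity_deg(layer_depth_m: float, panel_depth_m: float, ipd_m: float = 0.063) -> float:
    """Disparity of a layer relative to the panel; positive means it appears in front (crossed)."""
    return vergence_deg(layer_depth_m, ipd_m) - vergence_deg(panel_depth_m, ipd_m)

# Hypothetical three-layer icon on a store panel 1.5 m away.
layers = {"background": 1.50, "midground": 1.45, "foreground": 1.40}
for name, depth in layers.items():
    arcmin = relative_disparity_deg(depth, panel_depth_m=1.50) * 60
    print(f"{name:<10} {arcmin:5.1f} arcmin")
```

Because every point on a flat layer shares one disparity, the eyes see a few discrete planes rather than a solid shape; a true 3D model would have disparity varying across its surface.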

So both stores are using different means to exploit 3D space, showing more of each app’s content to reinvent the store experience. For now, though, the novelty is purely visual. If a store went further, for example by letting users try an app’s core features right in their space before downloading or buying, that might be a truly new App Store. We’ll have to wait until early next year to see what the App Store on visionOS actually looks like, but if you want to try community-built visionOS demos right now, check out the Let’s xrOS project.

This is a creative-sharing platform launched by XReality.Zone (that’s us, haha) to inspire developers and share ideas. If you have a cool demo you’d like to share with the community, just submit the project’s binary (no need to open-source the code!). What are you waiting for? Let’s xrOS!

Will ai pin be the next iPhone?

Keywords: Humane, AI, Hardware

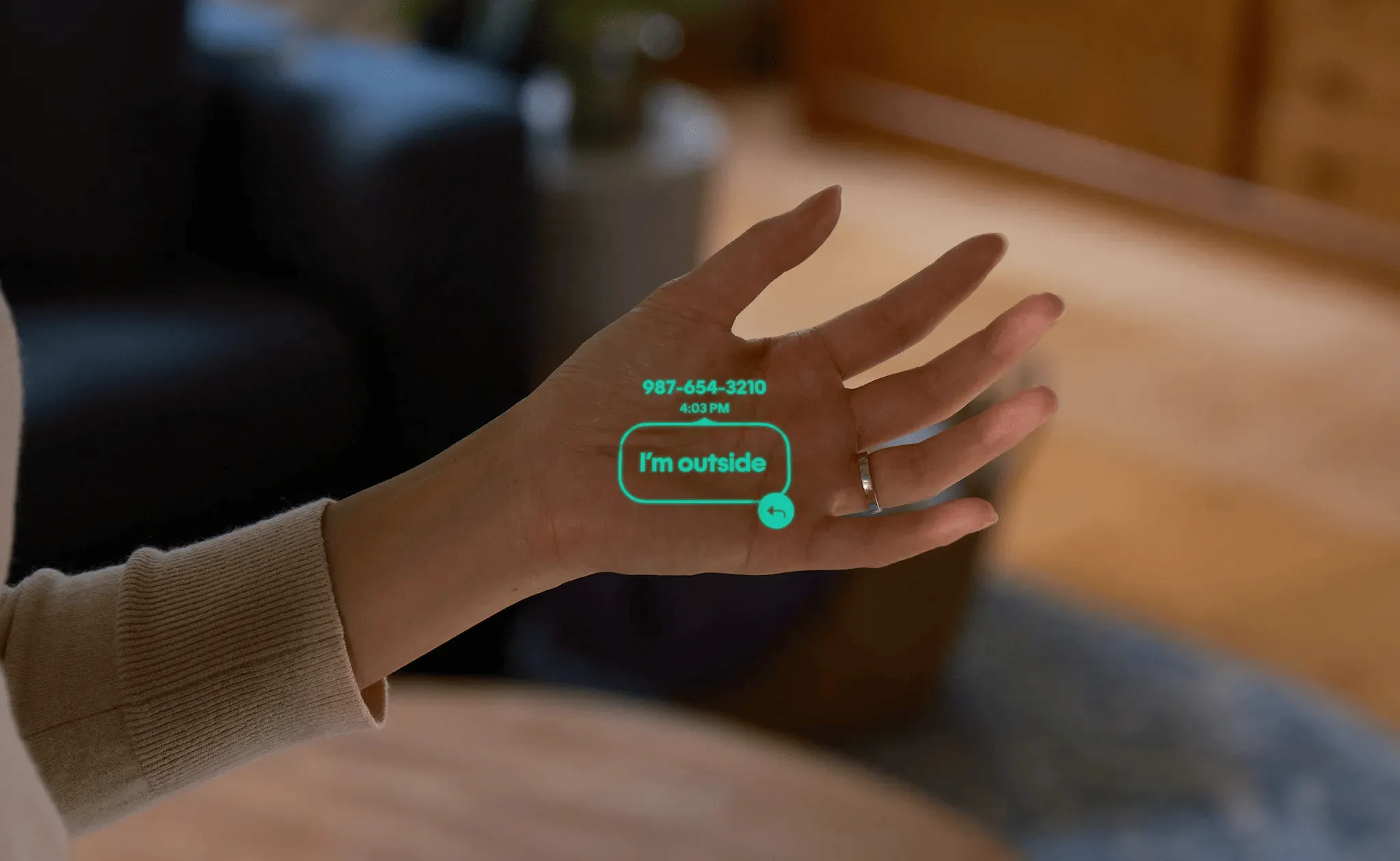

Do you remember the sci-fi movie “Her,” the story of a romance between a man and an artificial intelligence (the image above shows the film’s protagonist wearing the AI device on his chest)?

A decade later, an AI device like the one in the movie has become a reality: the ai pin, released by the American company Humane.

Humane is a very Apple-like company, as its founders Imran Chaudhri and Bethany Bongiorno both worked at Apple. They established this company with a focus on the importance of human connections, aiming to create a smarter, more personalized future. Ai pin is their first AI + AR product embodying this ambition.

Ai pin is a wearable smart device with a small battery in its body, supplemented by a magnetically attached battery pack, and it can be clipped anywhere on your clothing. It relies mainly on voice for AI interaction, a camera for perceiving the environment, and computation for interaction. Ai pin made its public debut at this year’s spring fashion show in Paris, and its founders demonstrated some of its features in a TED talk in May. The product was officially launched on November 9, 2023. The device has no screen, puts privacy first, and needs no wake word: it is activated by a physical press, so it doesn’t need to be ‘always listening’. Its use cases include:

1. Making Calls and Sending/Receiving Text Messages

When a call comes in, ai pin automatically screens it so that only important and urgent contacts can reach you. Text messages are filtered too: you can read them by raising your hand, have the AI summarize them, or search them for the useful parts. Replies can be entered entirely by voice, with no keyboard needed.

2. Language Translation with User’s Voice Characteristics

When ai pin detects that the person you are talking to speaks a different language from yours, it translates automatically. As the conversation continues, it also translates what you say into the other person’s language, and does so in your own voice.

3. Catch me up

This special phrase has ai pin pick out what needs your attention from all the data it can access, including your schedule, plans, and text messages. Think of it as a Siri with access to all your data that summarizes what is going on and what is coming up.

4. Staying Healthy

Using the camera, it can analyze the food and products in front of you to tell whether they are safe for you to eat (for example, to avoid allergens), and it can track your daily intake to help prevent overeating. The camera also handles other visual tasks, such as product analysis, and a double tap captures photos and videos.

5. Enjoying Music Anytime, Anywhere

Playing music anytime, anywhere may not sound novel, but ai pin can also generate music directly from the user’s request. And of course, everything can be controlled by voice or by hand.

In short, this is a brand-new AI interaction device: the “ideal” basic functionality we have always expected from Siri, a thoughtful AI assistant, and a more convenient ChatGPT that isn’t limited to typing on a computer. However, some see it as just a GPT product in disguise that can’t justify its $699 price plus a $24 monthly fee for connectivity and GPT API access. Some critics even call it a glorified surveillance device.

Because of the founders’ Apple background, many naturally compare ai pin with the upcoming Apple Vision Pro. Past criticism of Apple Vision Pro focused on technology getting in the way of human interaction, such as a father wearing Apple Vision Pro to record spatial video at his daughter’s birthday (now you can record with an iPhone!), which completely cuts him off from his daughter. By contrast, ai pin aims to help people make decisions, solve problems, and organize their thoughts intelligently, quickly, and effectively. Of course, besides its high price, it is also criticized for its limited battery capacity and features, with some calling it just a ChatGPT in a box bought from Alibaba. So, looking ahead, would you rather have a Vision Pro on your head or an ai pin on your chest?

Unity’s visionOS Beta Program Officially Released!

Keywords: Unity, visionOS, PolySpatial

Earlier this year, at WWDC, Unity announced platform support for Apple Vision Pro and introduced Unity PolySpatial to the world, allowing for the creation of unique spatial experiences that can run alongside other apps in Apple Vision Pro’s shared space.

Finally, in the early morning of November 17, 2023 (Beijing time), Unity announced that the Unity visionOS Beta Program is officially open to all Unity Pro, Unity Enterprise, and Unity Industry customers.

With this beta, Unity brings familiar workflows and powerful creative tools to developers building immersive games and apps for Apple’s new spatial computing platform, Apple Vision Pro. Unity offers three main ways to create immersive spatial experiences on visionOS:

- Port existing VR games or create a brand-new fully immersive experience, replacing the player’s surroundings with your own environment.

- Use passthrough to blend content, creating immersive experiences that merge digital content with the real world.

- Run multiple immersive apps side by side with passthrough in the Shared Space.

Developers can also build windowed apps, whose content runs in windows that users can resize and reposition in their space. This is the simplest way to bring existing mobile and desktop apps to visionOS, and it is the default mode when visionOS is selected as the target platform.

We’ve also compiled some relevant documents and news links for easy reference!

- Unity’s official announcement link for visionOS Beta Program

- Unity Pro 30-day free trial registration

- Unity visionOS Beta Program instructions (video version)

- PolySpatial (version 0.62) documentation

- Unity’s issue tracker

- Unity’s Bug Reporter

- Unity’s feedback

Idea

Telegram to Launch visionOS Version

Keywords: visionOS, Telegram

Pavel Durov, CEO of Telegram, announced on his Telegram channel that a visionOS version of the Telegram app is coming, along with a preview video. The video was also widely shared by users on X.

The trailer shows large-screen video playback, as well as AR stickers whose animations burst beyond the boundaries of the app window for an immersive feel. It also shows messages being sent via Siri. It’s encouraging to see more major companies adapting their apps to this new platform and rethinking how they are used around its features. Note, however, that for now major companies’ products are still designed mainly around windowed interfaces and haven’t moved beyond traditional 2D flat interaction.

You can read more about explorations of eye-and-hand interaction on spatial computing platforms in Issue 010 of our newsletter.

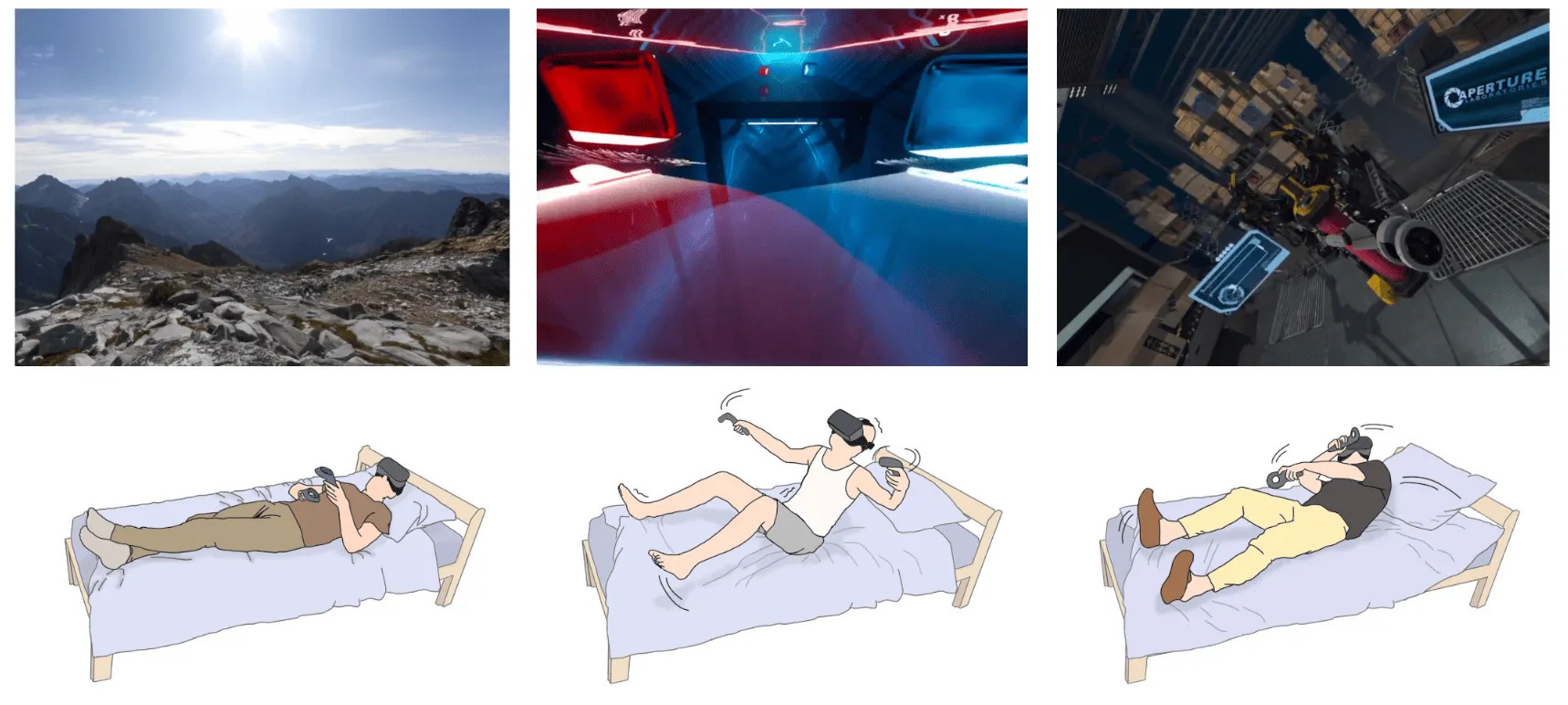

What’s it Like to Play XR While Lying Down?

Keywords: Pillow, lying down, Quest 3

Standing and sitting seem to have become the default postures for VR/XR. Have you ever wondered what it would be like to play while lying down?

The answer: a pretty terrible experience (😂). A CHI 2023 paper, “Towards a Bedder Future: A Study of Using Virtual Reality while Lying Down,” shows that for VR to work well while lying down, existing applications need to be rethought; otherwise the result is a poor user experience or even dizziness.

Perhaps having seen this paper, Lucas Rizzotto recently launched an app called “Pillow” in the Quest store, designed specifically for playing while lying down. Its features include:

- Extremely comfortable experience: no dizziness, and comfortable even with the headset’s weight resting on you while lying down;

- Novel gaming perspective: playing while lying down offers a completely different experience;

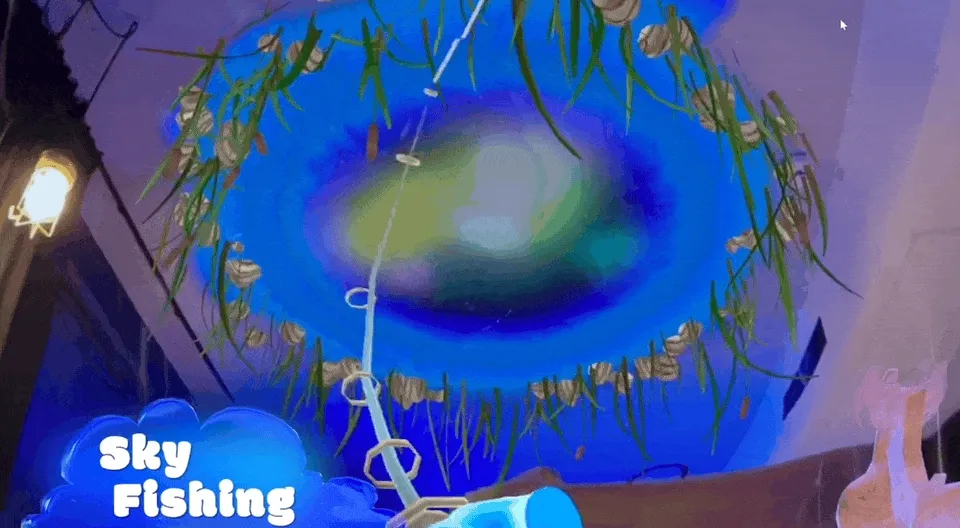

The app includes four scenes, each offering a dream-like experience (the following videos are taken from the app’s store page):

- Fishing

Fish swimming through the air guide you through reviewing and reflecting on your day.

- Starry Sky

Open up the ceiling of your room to gaze at an Arctic sky.

- Bedtime Story

Read a bedtime story and drift off into a beautiful dream.

- Deep Breathing

This app shows us more possibilities in XR. If you happen to have a Quest device, you can try it out; it’s currently priced at $9.99.

Tool

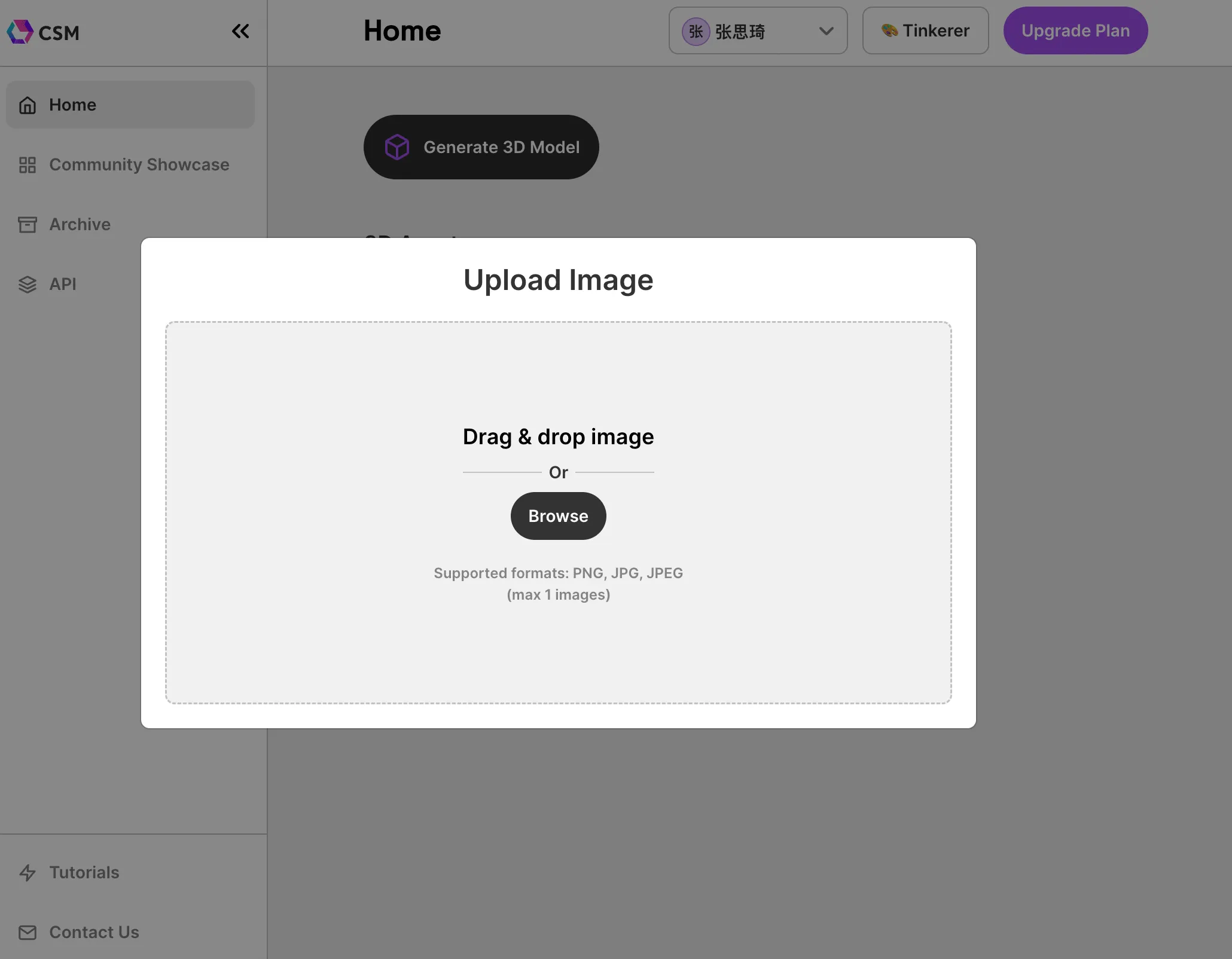

CSM.ai: Generating 3D Models from a Single Image

Keywords: AI, 3D Assets Generation

Tools that generate 3D models from images have been emerging one after another lately, and CSM.ai is a newcomer in this category.

The tool is very user-friendly. You can use it through its web page: upload an image, select the desired model, and generate a 3D asset. Alternatively, much like Midjourney, you can drive it with commands on Discord. Notably, its output formats include common ones like OBJ and GLB, as well as USDZ. However, because the output textures have lighting baked in and lack PBR material maps, they may need manual adjustment before practical use.

Regarding the quality of the generated models, it’s a bit like opening a mystery box. While I’ve seen impressive results online, such as the test results by @cocolitron, my own high-definition photos didn’t yield ideal results (a friend reminded me that it might not be the tool’s issue but rather that I’m too abstract…).

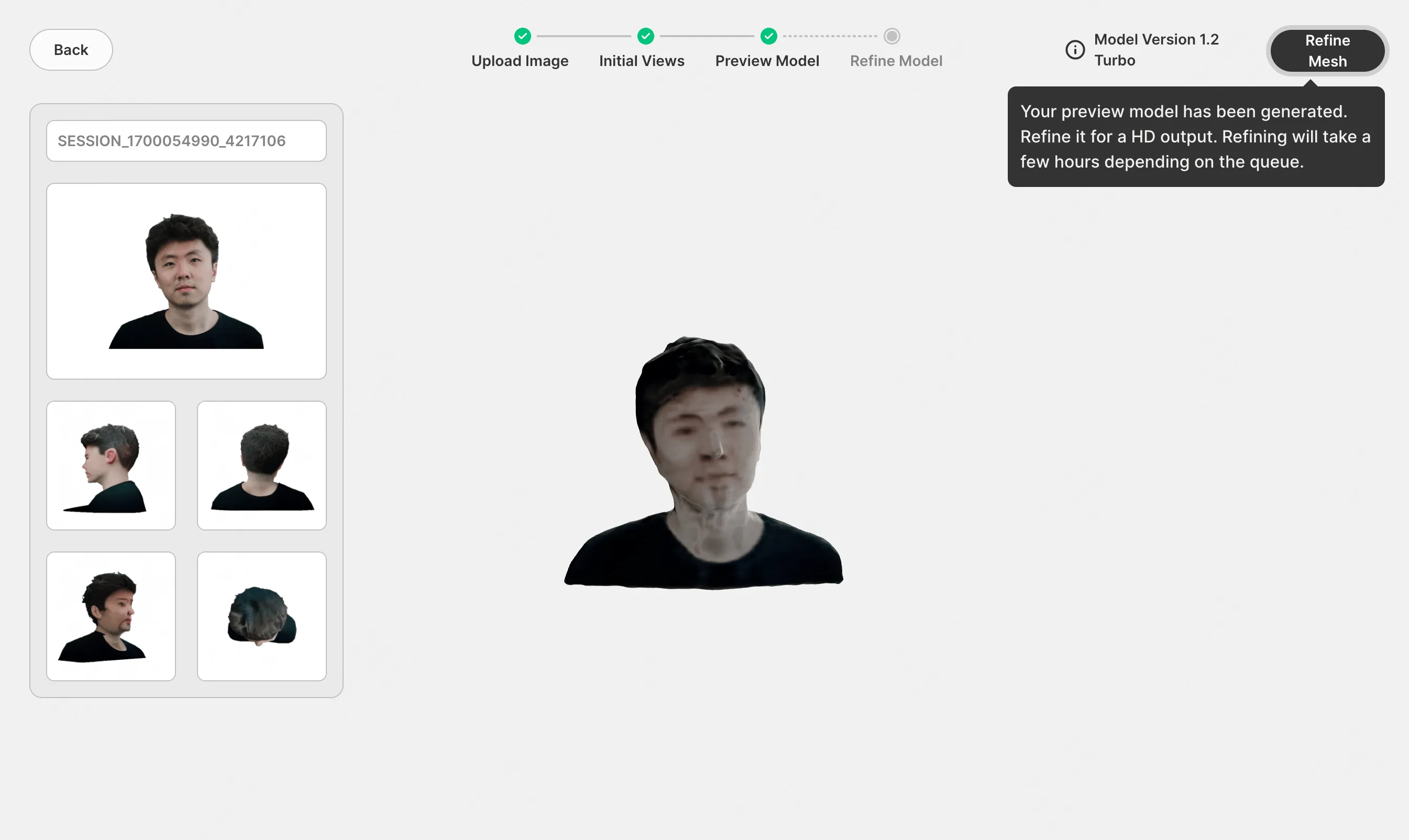

Most current text-to-image and image-to-image algorithms are built on Stable Diffusion. The core of image-to-3D also borrows Stable Diffusion’s framework, but the network is fine-tuned to generate views from several specific viewpoints given a single front view.

For instance, given a left-side view of a computer, the widely used Zero123 can generate its right-side view. Combining this view generator with 3D reconstruction methods such as NeRF, plus some algorithmic tricks, yields a complete image-to-3D pipeline. If you’re interested in this technology, threestudio, SyncDreamer, and DreamGaussian cover the mainstream methods in this category. However, they all require a powerful graphics card (at least a GeForce RTX 3060) to run.
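Zero123 conditions its diffusion model on the relative camera change between the input view and the view it should generate. The sketch below builds that conditioning vector in the form we understand the Zero-1-to-3 reference code to use: change in polar angle, sine and cosine of the change in azimuth, and change in radius. Treat the exact layout as an assumption and check the repository before relying on it; the diffusion model itself is not shown, and `SphericalCamera` is a hypothetical helper.

```python
import math
from dataclasses import dataclass

@dataclass
class SphericalCamera:
    polar_rad: float    # angle from the vertical axis of the object-centred orbit
    azimuth_rad: float  # rotation around the object's vertical axis
    radius: float       # distance from the object centre

def relative_view_embedding(src: SphericalCamera, dst: SphericalCamera) -> list[float]:
    """Relative pose (d_polar, sin d_azimuth, cos d_azimuth, d_radius).

    Encoding the azimuth change as sin/cos keeps it continuous across the
    0 / 2*pi wrap-around, so a full turn maps to the same vector as no turn.
    """
    d_polar = dst.polar_rad - src.polar_rad
    d_azimuth = (dst.azimuth_rad - src.azimuth_rad) % (2 * math.pi)
    d_radius = dst.radius - src.radius
    return [d_polar, math.sin(d_azimuth), math.cos(d_azimuth), d_radius]

# "Left view -> right view": same elevation and distance, rotated 180 degrees.
left = SphericalCamera(polar_rad=math.radians(90), azimuth_rad=math.radians(-90), radius=1.5)
right = SphericalCamera(polar_rad=math.radians(90), azimuth_rad=math.radians(90), radius=1.5)
print(relative_view_embedding(left, right))
```

In a full pipeline, this small vector is combined with the input image’s features so the fine-tuned network knows which viewpoint to render.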

GPTs Related to visionOS Development

Keywords: visionOS, GPTs, OpenAI

On November 6 this year, at OpenAI DevDay, Sam Altman announced many new features and improvements that excited the entire tech world!

As an XR application developer, though, what I care about most is which tools can make me more productive, and GPTs look like the most direct option. OpenAI has said the GPT store will launch by the end of the year, but for now there is no official way to discover all the GPTs available online.

Fortunately, Airyland | code.bit launched GPTs Hunt, a Product Hunt-style website that lists GPTs collected from various channels. Among them, we found several related to visionOS development (Swift, SwiftUI, etc.):

- SwiftUI GPT Tools

- SwiftUIGPT

- Apple Architect

- Swift Learner

- Swift Copilot

- Swift Scarlett

- SwiftGPT

- Swift GPT

In addition, Airyland has made the collected data publicly available (see details on X), so you can follow along for ongoing updates and more information.

Blockade Labs Releases AI Scene Mesh Generator

Keywords: Skybox AI, Mesh Generation, AIGC

Remember Blockade Labs, which we covered in Newsletter 003 for its AI-generated panoramic images? The tool has recently gained an AI World Mesh feature: it turns a text prompt into a panoramic image and then, through depth-map-guided deformation, into a mesh with a sense of depth.

We tried it too, for example entering “beautiful grassland” and viewing the exported mesh in GLB Viewer. The results currently seem to come from deforming a fixed-topology spherical mesh and can look distorted away from the center, but they offer plenty of fun for fantastical, open scenes.
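To illustrate what fixed-topology deformation of a spherical grid might mean, here is a minimal sketch: build a UV sphere whose vertices map one-to-one onto equirectangular pixels, then move each vertex along its viewing ray to the distance read from a depth map. This is our guess at the general idea, not Blockade Labs’ actual pipeline; the grid size and the `ground_plane_depth` function are hypothetical.

```python
import math
from typing import Callable

Vec3 = tuple[float, float, float]

def displaced_sphere(rows: int, cols: int, depth_at: Callable[[float, float], float]) -> list[Vec3]:
    """Vertices of a UV sphere whose radius at each vertex comes from a depth map.

    depth_at(u, v) takes equirectangular texture coordinates in [0, 1] and returns
    a distance in metres (a stand-in for a predicted depth map). The rows x cols
    grid connectivity never changes; only the vertex positions move.
    """
    vertices: list[Vec3] = []
    for r in range(rows + 1):
        v = r / rows                        # 0 at the zenith, 1 at the nadir
        lat = math.pi / 2 - v * math.pi     # +90 deg .. -90 deg
        for c in range(cols + 1):
            u = c / cols                    # 0..1 around the horizon
            lon = u * 2 * math.pi - math.pi
            d = depth_at(u, v)
            # Unit viewing direction for this pixel (y up), scaled to the sampled depth.
            x = math.cos(lat) * math.sin(lon)
            y = math.sin(lat)
            z = math.cos(lat) * math.cos(lon)
            vertices.append((d * x, d * y, d * z))
    return vertices

def ground_plane_depth(u: float, v: float) -> float:
    """Hypothetical depth: flat ground 1.6 m below the viewer, sky clamped to 50 m."""
    lat = math.pi / 2 - v * math.pi
    if lat < -0.05:                         # looking downward: the ray hits the ground
        return min(1.6 / math.sin(-lat), 50.0)
    return 50.0

verts = displaced_sphere(rows=32, cols=64, depth_at=ground_plane_depth)
print(len(verts))  # 2145 vertices: (32 + 1) * (64 + 1)
```

Since the grid connectivity never changes, a sharp jump in depth (say, a tree against the sky) stretches the triangles that straddle it, which may be one source of the distortion we noticed.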

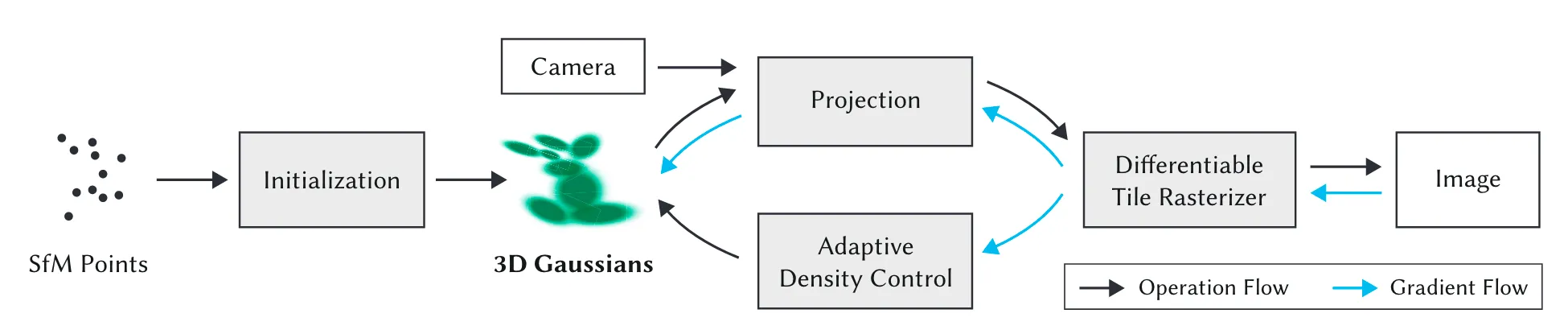

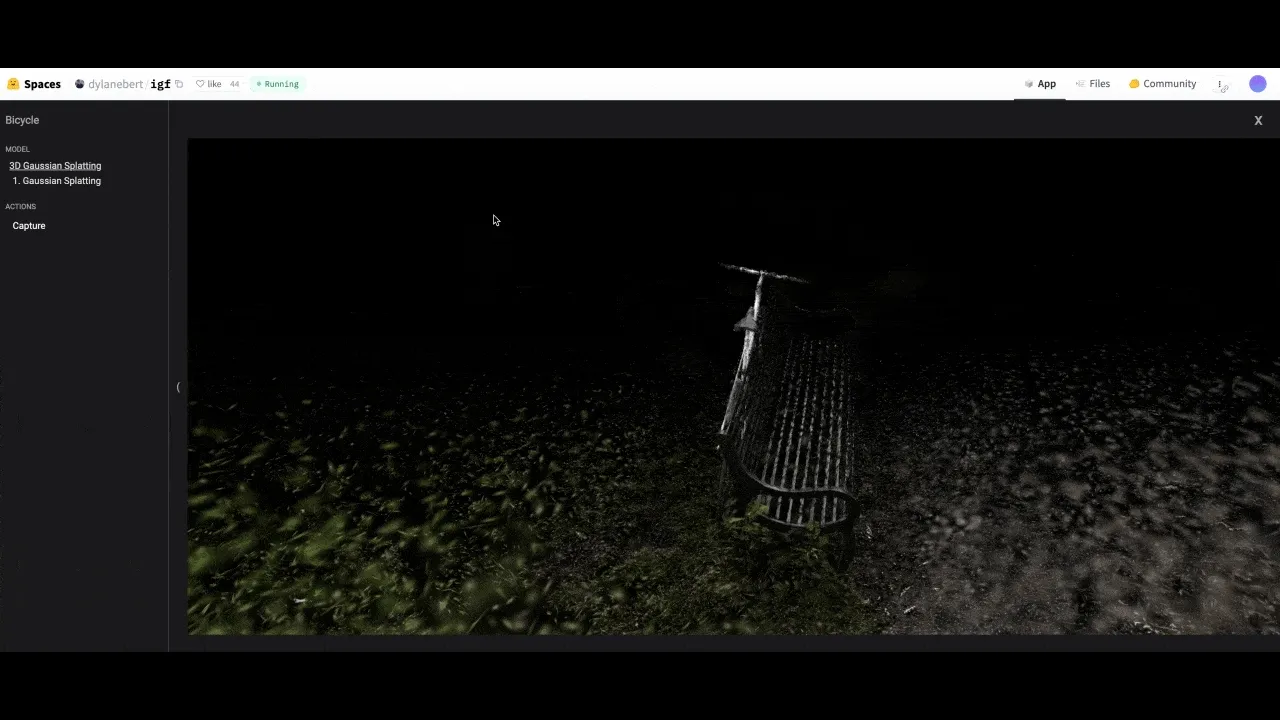

Gaussian Splatting Now Runs in Browsers!

Keywords: Gaussian Splatting, Scene Reconstruction, JavaScript

If you follow AI scene reconstruction with neural radiance fields (such as NeRF), you may have heard of Gaussian Splatting, which has recently taken off. Both LumaAI and PolyCam support it; it offers very fast reconstruction and rendering, letting users render reconstructed radiance-field scenes in real time on an iPhone.

The technique reconstructs user-captured real scenes with anisotropic 3D Gaussians (imagine small, colored, semi-transparent ellipsoids) and uses a differentiable tile-based rasterizer to splat these Gaussians onto the 2D image plane of a given viewpoint for rendering. If you want the details, this introduction video is a good place to start.
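The splatting step ends in ordinary alpha blending. The sketch below shows, for a single pixel, how depth-sorted 2D Gaussians (already projected from 3D) are composited front to back, following the blending rule from the Gaussian Splatting paper: the pixel color is the sum over splats of color_i · alpha_i · T_i, where T_i is the product of (1 − alpha_j) over all splats j in front of splat i. Projection, tiling, and the differentiable backward pass are omitted, and `Splat2D` is a hypothetical structure.

```python
import math
from dataclasses import dataclass

@dataclass
class Splat2D:
    mean: tuple[float, float]            # projected centre in pixels
    inv_cov: tuple[float, float, float]  # inverse 2x2 covariance [[a, b], [b, c]] as (a, b, c)
    opacity: float                       # learned opacity in [0, 1]
    color: tuple[float, float, float]    # RGB in [0, 1]
    depth: float                         # view-space depth used for sorting

def shade_pixel(px: float, py: float, splats: list[Splat2D]) -> tuple[float, float, float]:
    """Front-to-back alpha compositing of Gaussian splats at one pixel."""
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0                              # fraction of light still passing through
    for s in sorted(splats, key=lambda s: s.depth):  # nearest splat first
        dx, dy = px - s.mean[0], py - s.mean[1]
        a, b, c = s.inv_cov
        # Gaussian falloff: exp(-0.5 * d^T Sigma^-1 d)
        power = -0.5 * (a * dx * dx + 2 * b * dx * dy + c * dy * dy)
        alpha = min(0.99, s.opacity * math.exp(power))
        if alpha < 1 / 255:                          # negligible contribution: skip
            continue
        for k in range(3):
            rgb[k] += s.color[k] * alpha * transmittance
        transmittance *= 1 - alpha
        if transmittance < 1e-4:                     # pixel is effectively opaque: stop early
            break
    return (rgb[0], rgb[1], rgb[2])

red_near = Splat2D((5.0, 5.0), (1.0, 0.0, 1.0), 0.5, (1.0, 0.0, 0.0), depth=1.0)
blue_far = Splat2D((5.0, 5.0), (1.0, 0.0, 1.0), 0.5, (0.0, 0.0, 1.0), depth=2.0)
print(shade_pixel(5.0, 5.0, [blue_far, red_near]))  # input order does not matter
```

The 0.99 alpha cap, the 1/255 skip threshold, and the early-exit transmittance are values we believe the reference rasterizer uses; treat them as tunable assumptions.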

Last week, Dylan Ebert from Hugging Face packaged this rendering technology into a JavaScript library, gsplat.js, making it easier for developers to incorporate reconstructed scenes into their creative work (thanks also to Kevin Kwok and Michał Tyszkiewicz, who first adapted it to WebGL).

The Explosion of Real-Time AI Drawing Tools

Keywords: Real-time AI Art, Latent Consistency Model, Stable Diffusion

Recently, many people have been wowed by real-time AI drawing tools like KREA AI but can’t try them firsthand without an invitation code. AI drawing, however, has always been driven by open source, and this real-time acceleration of Stable Diffusion actually comes from the Latent Consistency Model, an open-source project from Tsinghua’s MARS Lab. So if you have a capable graphics card (at least a GeForce RTX 3060), why not try deploying it locally?
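Why is a Latent Consistency Model fast enough for real-time drawing? A consistency model learns a function that jumps from any noisy latent straight to an estimate of the clean latent, so sampling needs only a handful of network calls (the LCM work reports good results with around 2 to 4) instead of dozens. The toy loop below sketches multistep consistency sampling; `toy_consistency_fn` is a hypothetical stand-in for the trained network, the latent is a plain list of floats, and the noise schedule and timesteps are illustrative rather than Stable Diffusion’s exact ones.

```python
import math
import random
from typing import Callable

Latent = list[float]

def alpha_bar(t: int, num_train_steps: int = 1000) -> float:
    """Cumulative product of (1 - beta) up to step t for a linear beta schedule (illustrative)."""
    beta_start, beta_end = 0.00085, 0.012
    prod = 1.0
    for i in range(t + 1):
        beta = beta_start + (beta_end - beta_start) * i / (num_train_steps - 1)
        prod *= 1.0 - beta
    return prod

def multistep_consistency_sample(
    consistency_fn: Callable[[Latent, int], Latent],
    timesteps: list[int],
    dim: int,
    seed: int = 0,
) -> Latent:
    """Few-step sampling: jump to a clean estimate, re-noise to a lower level, repeat."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]   # pure noise at timesteps[0]
    x0 = consistency_fn(x, timesteps[0])             # one call: noise -> clean estimate
    for t in timesteps[1:]:
        ab = alpha_bar(t)
        noise = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        # Forward-diffuse the current estimate to noise level t ...
        x = [math.sqrt(ab) * a + math.sqrt(1.0 - ab) * n for a, n in zip(x0, noise)]
        # ... and map it straight back to a refined clean estimate.
        x0 = consistency_fn(x, t)
    return x0

# Toy stand-in for the trained network: always predicts the same clean latent.
target = [0.1, -0.2, 0.3, 0.0]
calls: list[int] = []

def toy_consistency_fn(x: Latent, t: int) -> Latent:
    calls.append(t)
    return list(target)

out = multistep_consistency_sample(toy_consistency_fn, timesteps=[999, 759, 499, 259], dim=4)
print(out, len(calls))
```

Four network evaluations per image, instead of the 25 to 50 a typical sampler uses, is what makes interactive frame rates plausible on a single consumer GPU.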

Creating 360° Panoramic Animations with AI

Keywords: 360° AI Art, AI Animation, AnimateDiff, Stable Diffusion

A user named Baku shared a recipe for AI-generated 360° panoramic animations: combining a 360 LoRA (a diffusion model fine-tune for generating 360° equirectangular (ERP) panoramas) with AnimateDiff (a module that adds animation to diffusion models) produces immersive, animated panoramic scenes. With so many AI tools available, how to integrate them well into your applications is a key consideration.
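An ERP (equirectangular projection) panorama maps longitude and latitude linearly onto the image’s x and y axes, which is why a 360° frame is twice as wide as it is tall and has to wrap at its left and right edges. The helper functions below sketch the round trip between a viewing direction and a pixel; the axis convention (y up, +z at the image centre) is our assumption, since tools differ.

```python
import math

def direction_to_erp(x: float, y: float, z: float, width: int, height: int) -> tuple[float, float]:
    """Map a view direction (y up, +z at the image centre) to ERP pixel coordinates."""
    norm = math.sqrt(x * x + y * y + z * z)
    lon = math.atan2(x, z)                  # -pi..pi, 0 = straight ahead
    lat = math.asin(y / norm)               # -pi/2..pi/2, 0 = horizon
    u = (lon + math.pi) / (2 * math.pi) * width
    v = (math.pi / 2 - lat) / math.pi * height
    return u, v

def erp_to_direction(u: float, v: float, width: int, height: int) -> tuple[float, float, float]:
    """Inverse mapping: pixel coordinates back to a unit view direction."""
    lon = u / width * 2 * math.pi - math.pi
    lat = math.pi / 2 - v / height * math.pi
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))

W, H = 2048, 1024                               # 2:1 aspect ratio, as ERP requires
print(direction_to_erp(0.0, 0.0, 1.0, W, H))    # straight ahead -> (1024.0, 512.0)
```

Because column 0 and column `width` describe the same direction, every generated frame needs matching left and right edges, or a visible seam appears behind the viewer.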

Video

The Secret Behind Portal Effect: How to Create Portal Effects in Unity

Keywords: Portal, XR, Unity

“Portal” effects often appear in XR content, and advanced portals even feature real-time depth of field changes. YouTuber Valem shared how to make this effect:

Method 1: Place a second camera in the destination space that exactly follows the player’s movement. However, using two cameras adds complexity and some production limitations.
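Method 1 boils down to one transform: express the player’s camera relative to the entry portal, then apply that same relative pose to the exit portal. Below is a minimal 2D sketch (position on the ground plane plus yaw); an engine would do the same with full 3D transforms. Many portal setups also add a 180° turn so the player looks out of the exit portal’s front face; that is left out here, and `Pose2D` and `portal_camera` are hypothetical names.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose2D:
    x: float
    z: float
    yaw: float  # radians, rotation about the vertical axis

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Apply `other`, expressed in this pose's local frame, on top of this pose."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose2D(self.x + c * other.x - s * other.z,
                      self.z + s * other.x + c * other.z,
                      self.yaw + other.yaw)

    def inverse(self) -> "Pose2D":
        """Pose that undoes this one, so p.inverse().compose(p) is the identity."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose2D(-(c * self.x + s * self.z), -(-s * self.x + c * self.z), -self.yaw)

def portal_camera(player_cam: Pose2D, entry: Pose2D, exit_: Pose2D) -> Pose2D:
    """Second camera: same offset from the exit portal as the player has from the entry portal."""
    relative = entry.inverse().compose(player_cam)
    return exit_.compose(relative)

entry = Pose2D(0.0, 0.0, 0.0)
exit_ = Pose2D(10.0, 5.0, math.pi / 2)
player = Pose2D(0.0, -2.0, 0.0)            # standing 2 m from the entry portal
print(portal_camera(player, entry, exit_))
```

Running this every frame keeps the second camera locked to the player’s movement, which is exactly the extra bookkeeping the two-camera approach costs.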

Method 2: Use a stencil shader. Simply put, a stencil shader acts like a “mask”: by defining a shape on the mask, the system renders only the parts that fall within that shape.

This method’s advantage is that it doesn’t require a second camera and can present the effects of multiple spaces simultaneously, creating impressive visual spectacles. The well-received game “Moncage” likely uses a similar method.
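The stencil idea can be simulated on a tiny character grid. Pass 1 draws only the portal’s shape and writes a reference value into the stencil buffer; pass 2 shows the other world only where the stencil equals that reference. This is a CPU illustration of the two-pass logic, not shader code (on the GPU the same comparison is set in the shader’s stencil state), and the oval scene is hypothetical. A second portal would simply use a different reference value, which is how several spaces can be shown at once.

```python
from typing import Callable

Shader = Callable[[int, int], str]   # returns one "pixel" character for (x, y)

def render_with_stencil(width: int, height: int, portal_mask: Callable[[int, int], bool],
                        room: Shader, other_world: Shader, ref: int = 1) -> list[str]:
    # Stencil buffer cleared to 0.
    stencil = [[0] * width for _ in range(height)]

    # Pass 1: rasterize only the portal's shape, writing `ref` into the stencil (no color).
    for y in range(height):
        for x in range(width):
            if portal_mask(x, y):
                stencil[y][x] = ref

    # Pass 2: each pixel shows the other world where the stencil test "equal to ref"
    # passes, and the room everywhere else.
    frame = []
    for y in range(height):
        frame.append("".join(other_world(x, y) if stencil[y][x] == ref else room(x, y)
                             for x in range(width)))
    return frame

def oval(x: int, y: int) -> bool:
    """Hypothetical oval portal centred in a 16 x 8 frame."""
    return ((x - 7.5) / 4) ** 2 + ((y - 3.5) / 2.5) ** 2 <= 1.0

frame = render_with_stencil(16, 8, oval, room=lambda x, y: ".", other_world=lambda x, y: "*")
print("\n".join(frame))
```

No second camera is involved: the other world is drawn from the player’s own viewpoint and merely clipped by the mask.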

Article

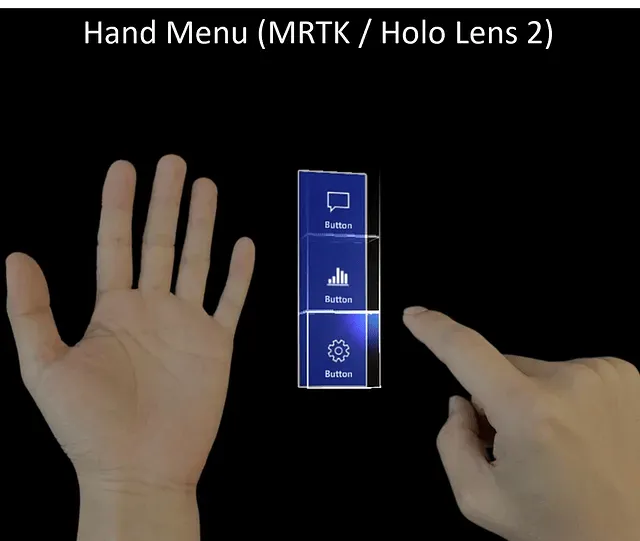

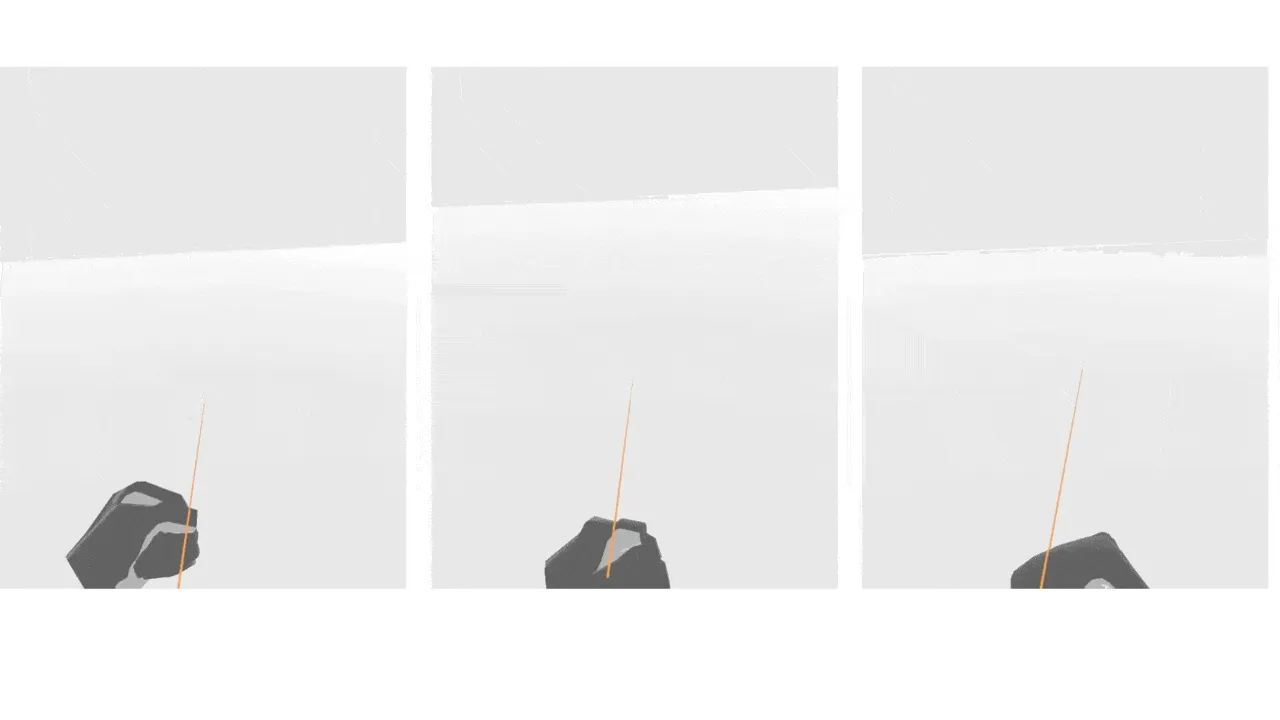

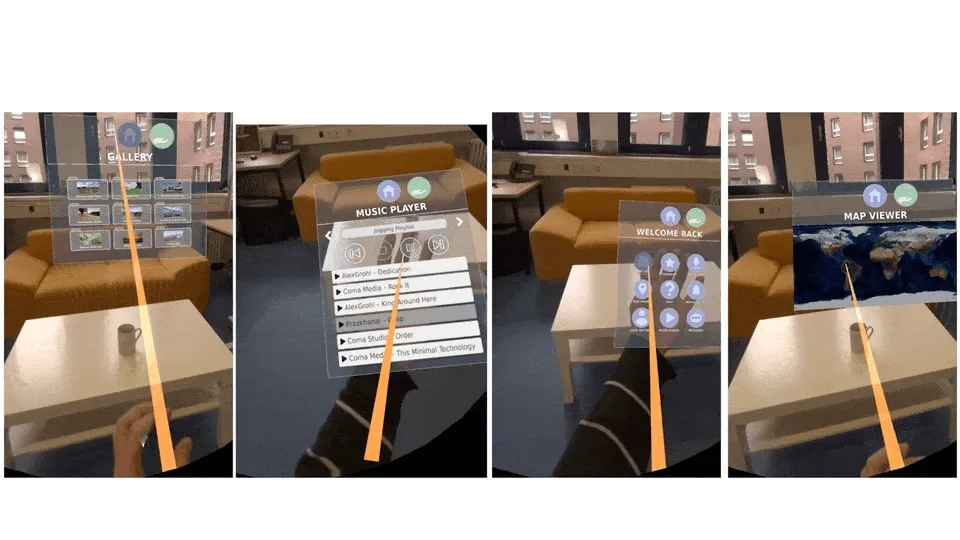

Eyes & Hands in AR: Exploring Single-Hand Interaction in AR

Keywords: AR, UX, UI

Computer Association member Ken Pfeuffer published research on single-hand interaction (paper link). Whether on familiar smartphones or in cool sci-fi movies, smooth operations and impressive effects are often achieved with one hand. Many mainstream XR devices support the “pinch” gesture, a typical single-hand interaction. More complex tasks, like bringing up a menu and making a selection, usually require both hands: for example, turning the left wrist and tapping with the right hand to bring up a menu. This isn’t complicated, but when you are holding a coffee or carrying something in one hand, two-handed operations become inconvenient.

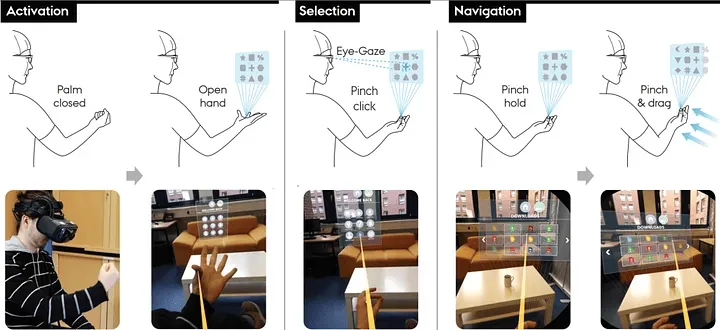

The author introduces PalmGazer, a single-hand UI concept for XR devices that combines hand gestures with eye tracking so users can control a hand menu with just one hand. Refined through multiple design iterations, the system supports the following with eye movement plus single-hand gestures:

- Summon menu: Open palm to call up the hand menu.

- Selection: Pinch to select.

- Navigation: Pinch and drag to scroll the menu. This is where PalmGazer innovates: normally a hand menu moves along with the hand, which makes scrolling it difficult.
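To make the three gestures concrete, here is a hypothetical per-frame state machine: an open palm summons the menu, a short pinch selects the item under the user’s gaze, and moving the hand while pinched scrolls the menu. Measuring the drag relative to where the pinch started, in world space, is one way to scroll a menu that itself follows the hand. The thresholds, the use of vertical hand motion, and all names are our assumptions, not the paper’s implementation.

```python
import math
from dataclasses import dataclass, field
from typing import Optional

Vec3 = tuple[float, float, float]

PINCH_THRESHOLD_M = 0.02   # assumed: thumb and index tips closer than 2 cm count as a pinch
DRAG_THRESHOLD_M = 0.01    # assumed: pinch movement beyond 1 cm is a drag, not a tap

@dataclass
class HandFrame:
    palm_open: bool
    thumb_tip: Vec3
    index_tip: Vec3

@dataclass
class HandMenu:
    items: list[str]
    visible: bool = False
    scroll_m: float = 0.0                  # scroll offset in metres of hand travel
    selected: Optional[str] = None
    _pinch_start_y: Optional[float] = field(default=None, repr=False)
    _scroll_at_start: float = field(default=0.0, repr=False)
    _dragged: bool = field(default=False, repr=False)

    def update(self, hand: HandFrame, gazed_index: Optional[int]) -> None:
        """Advance one tracking frame; gazed_index is the item under the user's gaze, if any."""
        if not self.visible:
            self.visible = hand.palm_open                  # summon: open palm shows the menu
            return
        pinching = math.dist(hand.thumb_tip, hand.index_tip) < PINCH_THRESHOLD_M
        pinch_y = (hand.thumb_tip[1] + hand.index_tip[1]) / 2
        if pinching and self._pinch_start_y is None:       # pinch just started
            self._pinch_start_y = pinch_y
            self._scroll_at_start = self.scroll_m
            self._dragged = False
        elif pinching:                                     # pinch held: drag scrolls
            delta = pinch_y - self._pinch_start_y
            self._dragged = self._dragged or abs(delta) > DRAG_THRESHOLD_M
            self.scroll_m = self._scroll_at_start + delta
        elif self._pinch_start_y is not None:              # pinch released
            if not self._dragged and gazed_index is not None:
                self.selected = self.items[gazed_index]    # short pinch selects the gazed item
            self._pinch_start_y = None

menu = HandMenu(items=["Play", "Next", "Shuffle"])
apart = HandFrame(True, (0.0, 0.0, 0.0), (0.05, 0.0, 0.0))
pinch = HandFrame(True, (0.0, 0.0, 0.0), (0.01, 0.0, 0.0))
for frame, gaze in [(apart, None), (pinch, 1), (apart, 1)]:
    menu.update(frame, gaze)
print(menu.selected)
```

Because the gaze supplies the target and the pinch only confirms it, the same hand can hold the menu and operate it.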

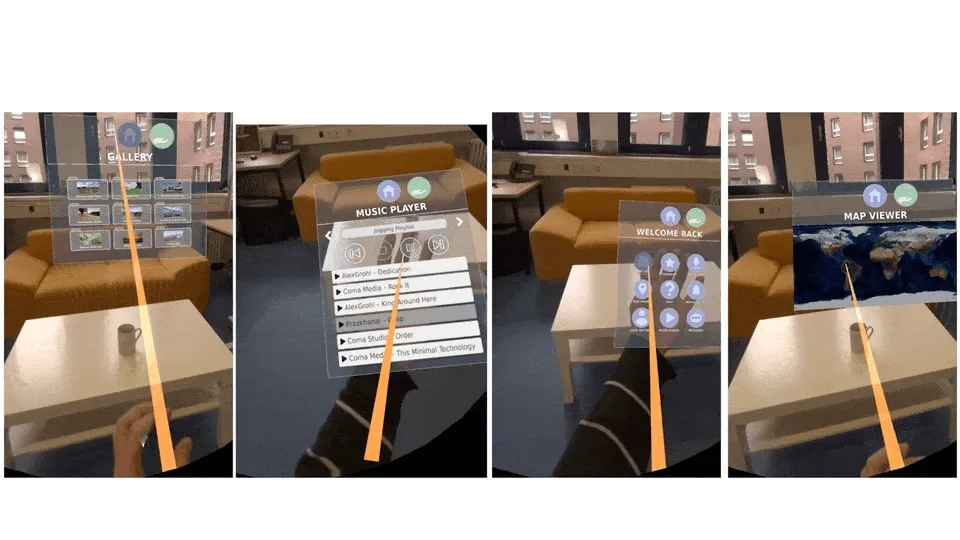

With this method, you can hold a coffee in one hand and switch the playlist in your AR glasses with the other. The following shows the combination of gesture and eye movement (rays in the video).

Based on this interaction method, many regular operations can be completed, for example:

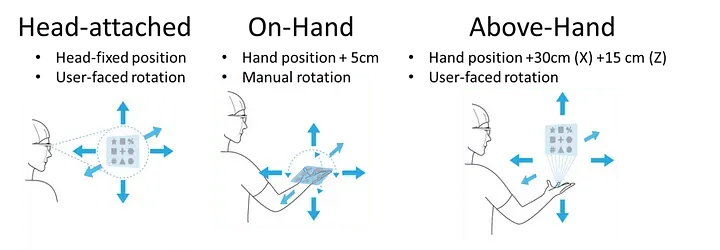

Moreover, the author discussed three UI placement designs:

- Head-attached: the UI is anchored to the head, so the panel stays fixed in view and moves with the head. This setup may obstruct the view.

- On-hand: the UI is anchored 5 cm above the hand, and the panel moves with the hand.

- Above-hand: the UI is anchored above the hand (30 cm along the x-axis, 15 cm along the z-axis), and the panel moves with the head.
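The three placements differ only in which body part the panel follows and by what offset. A minimal sketch using the offsets quoted above (5 cm above the hand; 30 cm on x and 15 cm on z for above-hand), with an assumed 50 cm head-attached distance and a y-up frame. The description doesn’t say which frame the offsets live in, so adding them to the hand position along world axes is our assumption.

```python
from enum import Enum

Vec3 = tuple[float, float, float]

class Placement(Enum):
    HEAD_ATTACHED = "head-attached"
    ON_HAND = "on-hand"
    ABOVE_HAND = "above-hand"

HEAD_DISTANCE_M = 0.5                        # assumed: head-attached panel 50 cm ahead
ON_HAND_OFFSET: Vec3 = (0.0, 0.05, 0.0)      # 5 cm above the hand (from the description)
ABOVE_HAND_OFFSET: Vec3 = (0.30, 0.0, 0.15)  # 30 cm on x, 15 cm on z (frame assumed)

def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def panel_anchor(placement: Placement, head_pos: Vec3, head_forward: Vec3, hand_pos: Vec3) -> Vec3:
    """World-space anchor point of the menu panel for one frame (y up, metres)."""
    if placement is Placement.HEAD_ATTACHED:
        # Follows the head: always in view, but can cover what the user is looking at.
        fx, fy, fz = head_forward
        return _add(head_pos, (fx * HEAD_DISTANCE_M, fy * HEAD_DISTANCE_M, fz * HEAD_DISTANCE_M))
    if placement is Placement.ON_HAND:
        return _add(hand_pos, ON_HAND_OFFSET)
    # ABOVE_HAND: position derived from the hand; per the description the panel's
    # orientation follows the head (orientation is not computed here).
    return _add(hand_pos, ABOVE_HAND_OFFSET)

head, forward, hand = (0.0, 1.6, 0.0), (0.0, 0.0, -1.0), (0.2, 1.1, -0.3)
for p in Placement:
    print(p.value, panel_anchor(p, head, forward, hand))
```

Both hand-based variants copy the raw hand position every frame, so any hand tremor moves the panel; a real implementation would probably smooth the hand position first.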

According to feedback from 18 participants, this method is intuitive and easy to use, with smooth pinching and dragging operations, though left-right dragging is somewhat challenging.

Among the three UI placements, Head-attached was the most popular, offering the most stable performance, while the Above-hand approach tended to wobble.

However, the downside of these operations is that relying entirely on one hand might become tiring.

Course

XR Development for Beginners by Microsoft: Microsoft’s Official XR Beginner Course

Keywords: Microsoft, Beginner, XR

Impressed by the OpenAI Developer Conference, I thought about diving deeper into AI and found that Microsoft has released a new generative AI course: generative-ai-for-beginners. Scrolling through the documentation, I was surprised to discover that Microsoft has built courses in many fields, including IoT, ML, AI, Data Science, and, of course, XR!

According to the commit history of XR Development for Beginners, the last update was around June last year, so the material is a bit dated. Still, trusting Microsoft, I spent an afternoon reading through all of it; after all, learning never ends.

Overall, this material is concise and straight to the point, presenting key concepts and core content. Reading through it once will help you establish the basic concepts and considerations for XR development. The first four chapters focus on theory and design, while the last four are more about development and hands-on content.

What impressed me were the ‘Gaze and Commit’ and ‘Hands-free’ chapters, which mentioned a few small but very helpful points. For example:

Users often fail to find UI elements located either too high or low in their field of view. Most of their attention ends up on areas around their main focus, which is approximately at eye level. Placing most targets in some reasonable band around eye level can help. When designing UI, keep in mind the potential large variation in field of view across headsets.

I’m not sure whether Apple’s Human Interface Guidelines (HIG) cover these points, but I have found this advice very relevant when developing visionOS apps. Developing in the simulator, I hadn’t paid much attention to keeping the UI at eye level. On a real device, though, you quickly realize that UI placed away from eye level causes problems: users have to look up or down to find the interface, which easily leads to discomfort (like a sore neck) after prolonged use. Keeping the main UI elements at the user’s eye level is therefore essential.
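The eye-level advice can be turned into a quick layout check: compute each element’s vertical angle relative to the eye’s horizontal plane and flag anything outside a comfort band. In this sketch the ±15° band, the 1.6 m eye height, and the element positions are placeholder assumptions, not values from the Microsoft course or Apple’s HIG.

```python
import math

Vec3 = tuple[float, float, float]

def elevation_deg(eye: Vec3, target: Vec3) -> float:
    """Angle of the target above (+) or below (-) the eye's horizontal plane; y is up."""
    dx, dy, dz = target[0] - eye[0], target[1] - eye[1], target[2] - eye[2]
    return math.degrees(math.atan2(dy, math.hypot(dx, dz)))

def out_of_band(eye: Vec3, ui: dict[str, Vec3],
                low_deg: float = -15.0, high_deg: float = 15.0) -> dict[str, float]:
    """UI elements whose elevation falls outside [low_deg, high_deg], mapped to their angles."""
    result = {}
    for name, pos in ui.items():
        angle = elevation_deg(eye, pos)
        if not low_deg <= angle <= high_deg:
            result[name] = angle
    return result

eye = (0.0, 1.6, 0.0)                  # assumed standing eye height of 1.6 m
ui = {
    "main_window": (0.0, 1.5, -1.5),   # slightly below eye level
    "toolbar": (0.0, 0.9, -1.0),       # far below: the user must look down
    "banner": (0.0, 2.4, -1.2),        # far above: the user must look up
}
print(sorted(out_of_band(eye, ui)))    # ['banner', 'toolbar']
```

Running a check like this against your scene layout catches elements that only feel wrong once you are wearing a headset, which is exactly the gap the simulator leaves.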

Why not spend some time quickly going through this material yourself? It’s worth it.

If you don’t have the time, why not feed this material to your GPTs? After all, once they’ve learned it, it’s as if you have too!

SmallNews

Google Launches Geospatial AR Hackathon

Keywords: Google, Hackathon, Geospatial

Google has launched Google’s Immersive Geospatial Challenge, a hackathon for designing immersive, location-based experiences. Participants are invited to use Google Photorealistic 3D Tiles, Google’s photorealistic 3D mapping data, together with the Geospatial Creator tool to design experiences in categories such as entertainment, advertising, travel, production, and sustainability. Projects can be built with Adobe Aero or Unity, and the related videos and source code must be submitted by November 21. The competition offers 11 prizes, with a total prize pool of $70,000.

According to Google’s blog (cloud.google.com), Google Photorealistic 3D Tiles let virtual buildings overlap and blend with real ones, enabling a “SimCity”-like experience in AR glasses.

19 Movies on Apple TV Now Support Apple Vision Pro 3D Viewing

Keywords: Apple Vision Pro, 3D

According to flatpanels, after updating to the latest version, 19 movies in the Apple TV app now support 3D viewing, including “Shrek,” “Kung Fu Panda 3,” “Jurassic World Dominion,” and “Pacific Rim: Uprising.” Judging from earlier job postings, Apple may be pushing ahead with integrating Vision Pro and Apple TV.

The list of 19 movies includes:

- 47 Ronin

- Cirque Du Soleil: Worlds Away

- Everest

- Hansel and Gretel Witch Hunters

- Jurassic World Dominion

- Kung Fu Panda 3

- Mortal Engines

- Pacific Rim Uprising

- Sanctum

- Shrek

- Skyscraper

- Tad the Lost Explorer and the Secret of King Midas

- The Boss Baby: Family Business

- The Little Princess

- The Nut Job 2: Nutty by Nature

- The Secret Life of Pets 2

- Trolls

- Trolls World Tour

- Warcraft

Contributors to This Issue

| Contributor |
|---|
| Onee |
| SketchK |
| Zion |
| ybbbbt |
| Faye |

XReality.Zone
