XR World Weekly 005

Highlights

- Create 3D content with one line of code, Unity launches AI tools “Muse” and “Sentis”

- Now you can run your Unity projects on the visionOS simulator

- Karl Guttag’s series of analysis articles on Apple Vision Pro

Big News

Create 3D content with one line of code, Unity launches AI tools “Muse” and “Sentis”

Keywords: Unity, AIGC

Unity announced two new AI products:

- Unity Muse, a broad platform for AI-assisted creation that covers AI-powered model and texture generation, similar to an enhanced Midjourney or Stable Diffusion;

- Unity Sentis, which lets developers embed neural networks into their builds to enable real-time experiences that were previously out of reach. Put simply, it is like pairing ChatGPT with animated characters for conversational role-play.

Both products are currently in closed beta, with a global launch planned for later in 2023.

Unity Muse

Unity Muse is an AI creation platform that accelerates 3D asset creation for real-time 3D applications such as games and digital twins. Muse’s end goal is to let developers create almost anything in the Unity Editor using natural inputs like text prompts and sketches.

Unity also provides Muse Chat, a key capability of the Muse platform. Muse Chat uses AI-based search to retrieve well-structured, up-to-date and accurate information from Unity’s documentation, training resources and support content, including code samples, to speed up development and troubleshooting.

According to Unity’s promotional video, once a user asks a question, Muse Chat responds quickly with relevant suggestions that help resolve the issue or spark ideas:

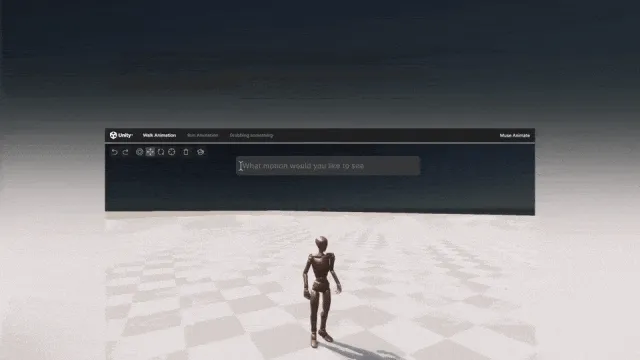

Muse’s text-to-animation generation is very impressive. For example, entering “do a backflip” makes the character model perform the move smoothly and naturally:

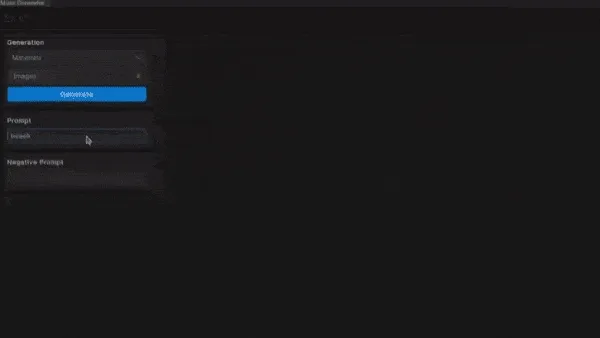

It can also quickly generate new 2D sprite assets from natural-language prompts, much like Stable Diffusion, and generate texture maps directly.

Unity Sentis

Unity sees Sentis as a true “game changer”. On a technical level, it connects neural networks with the Unity Runtime, unlocking more possibilities.

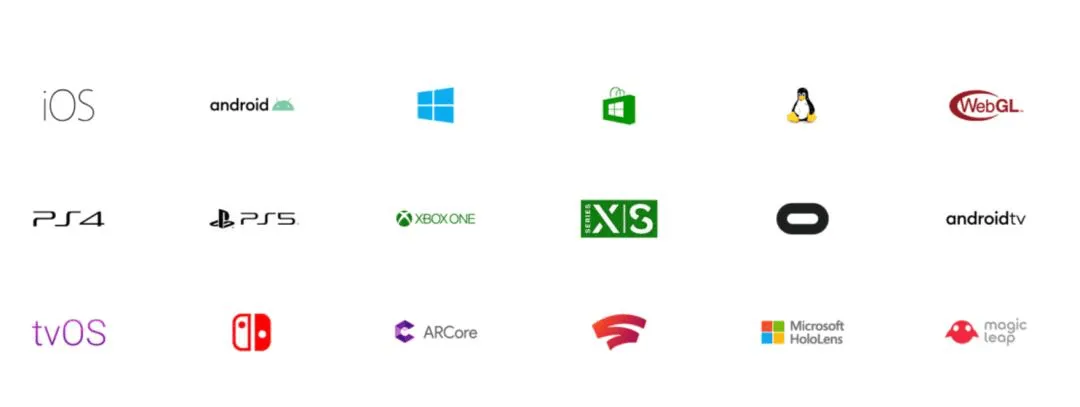

Sentis allows AI models to run on any device that Unity supports. Unity describes it as the first and only cross-platform solution for embedding AI models into a real-time 3D engine. You build once and embed models that run across platforms, from mobile, PC and the web to popular game consoles such as Nintendo Switch™ and Sony PlayStation®. Because the model runs locally on the user’s device, there is no cloud hosting to set up, no network latency and no extra cloud service cost.

We can hold conversations with AI characters, for example asking about hairstyles or discussing the secrets of life.

More AI solutions on Unity Asset Store

Unity also offers AI solutions on the Unity Asset Store: third-party packages that meet Unity’s highest quality and compatibility standards. You can now find professional-grade AI solutions from providers like Convai, Inworld AI, Layer AI, Leonardo Ai, LMNT, Modl.ai, Polyhive, Replica Studios and Zibra AI. These solutions cover AI-driven intelligent NPCs, AI-generated VFX, textures, 2D sprites and 3D models, generated speech, AI-based in-game testing and more, all aimed at accelerating the creation process.

Now you can run your Unity projects on the visionOS simulator

Keywords: Unity, visionOS, InputSystem

Although applications for the official PolySpatial beta are still rarely approved, which means we can’t yet create Volume or Immersive apps for visionOS in Unity, the release of Unity 2022.3.5f1 finally lets all Unity developers try running their projects in windowed mode on visionOS.

If you want to give it a try, you’ll need:

- Xcode 15 beta (downloadable from Apple’s official website)

- The international (global) version of Unity Hub

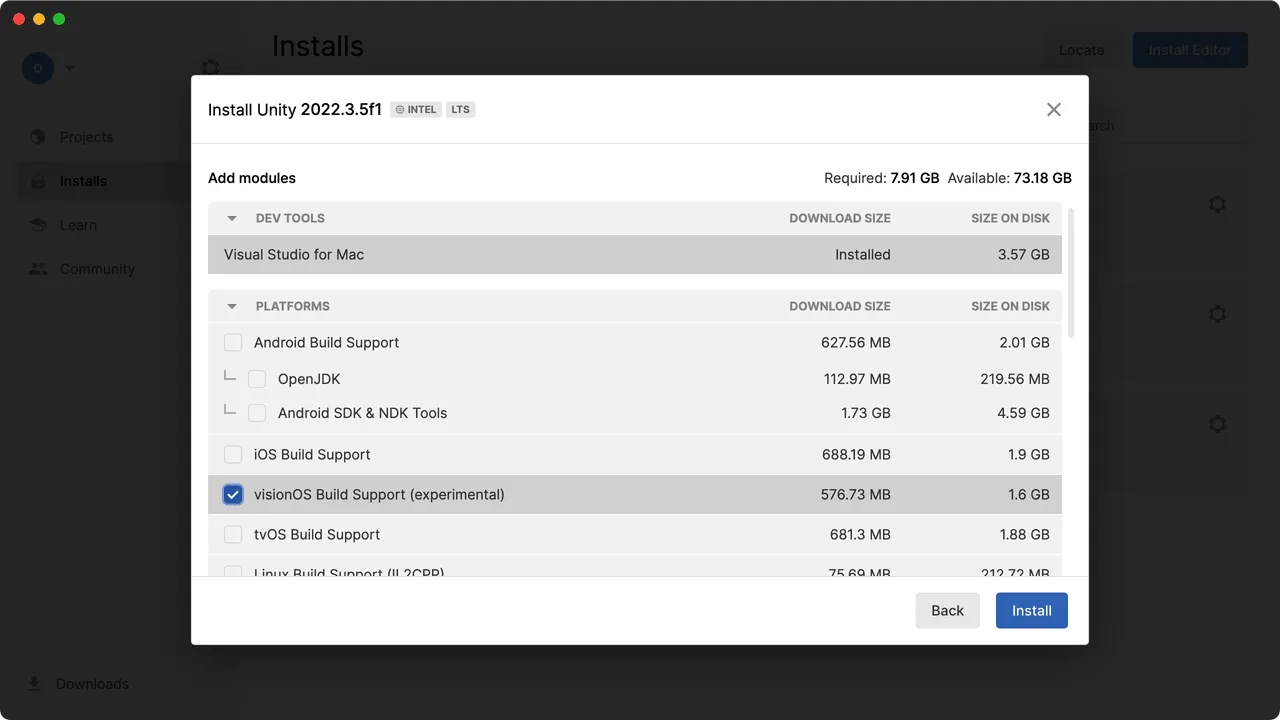

After installing these tools, install Unity 2022.3.5f1 in Unity Hub with visionOS Support selected:

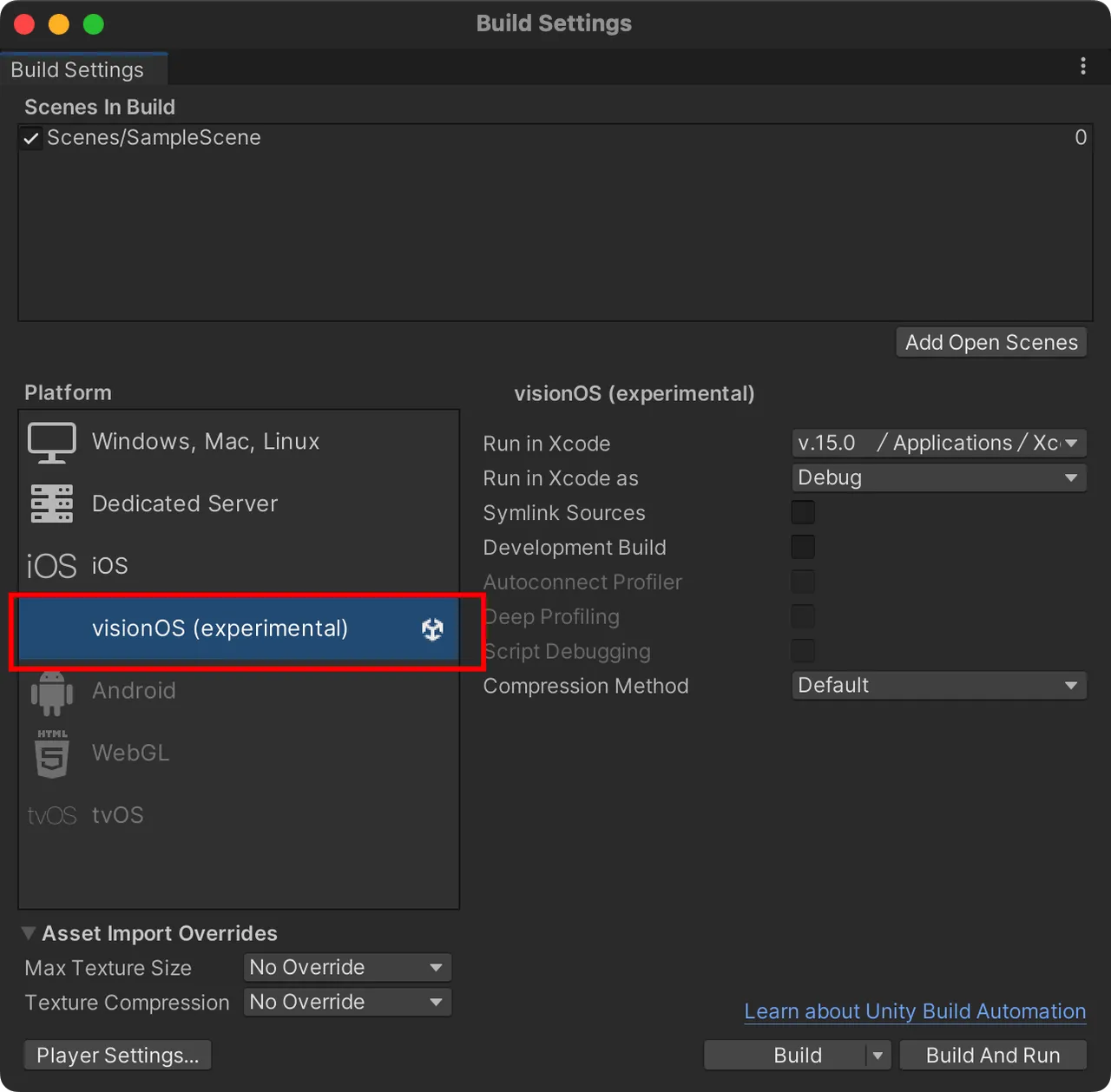

You can then select visionOS under Platforms for your Unity project:

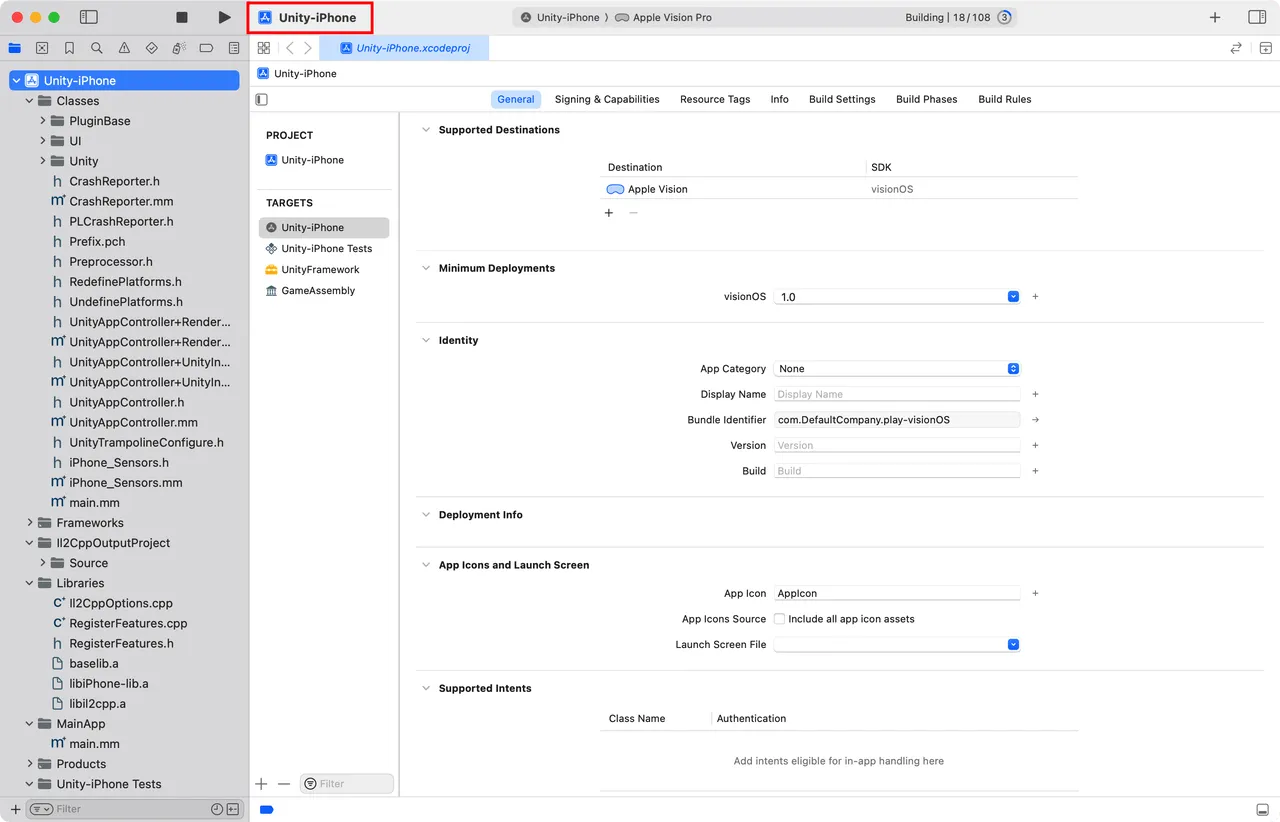

With everything set up, choose Build and Run and Xcode beta will open. The whole process is not just similar to building for iOS, it’s identical (note the Unity-iPhone👀):

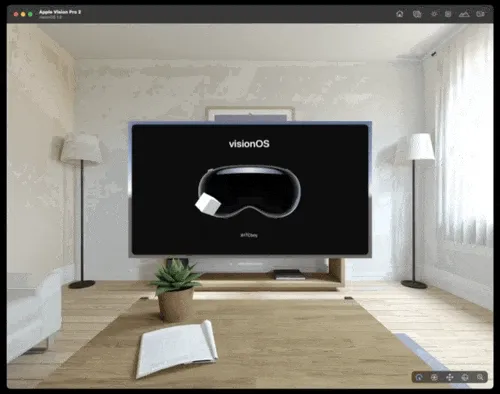

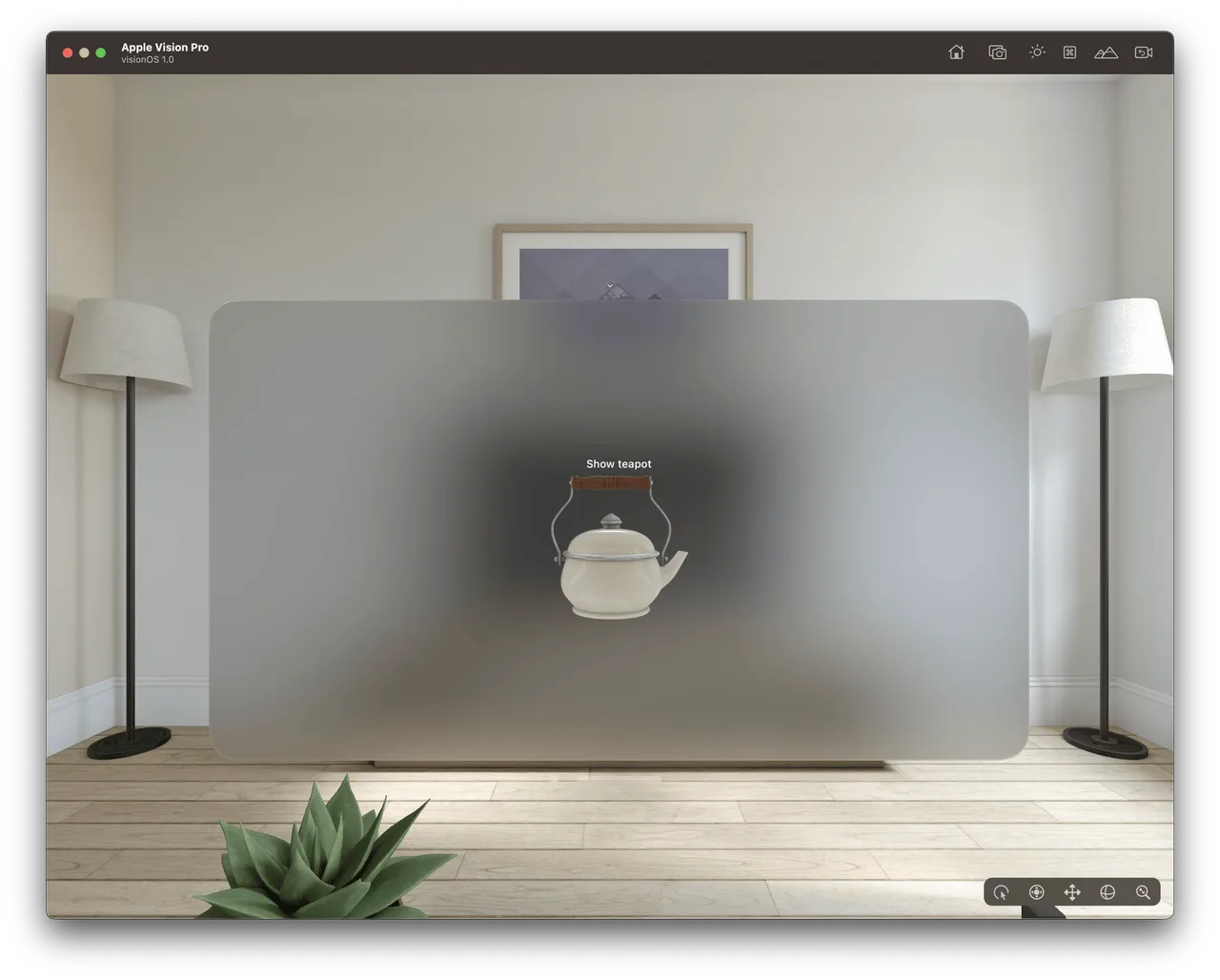

Once Xcode beta finishes building, you can see your project running as a Window in the visionOS simulator:

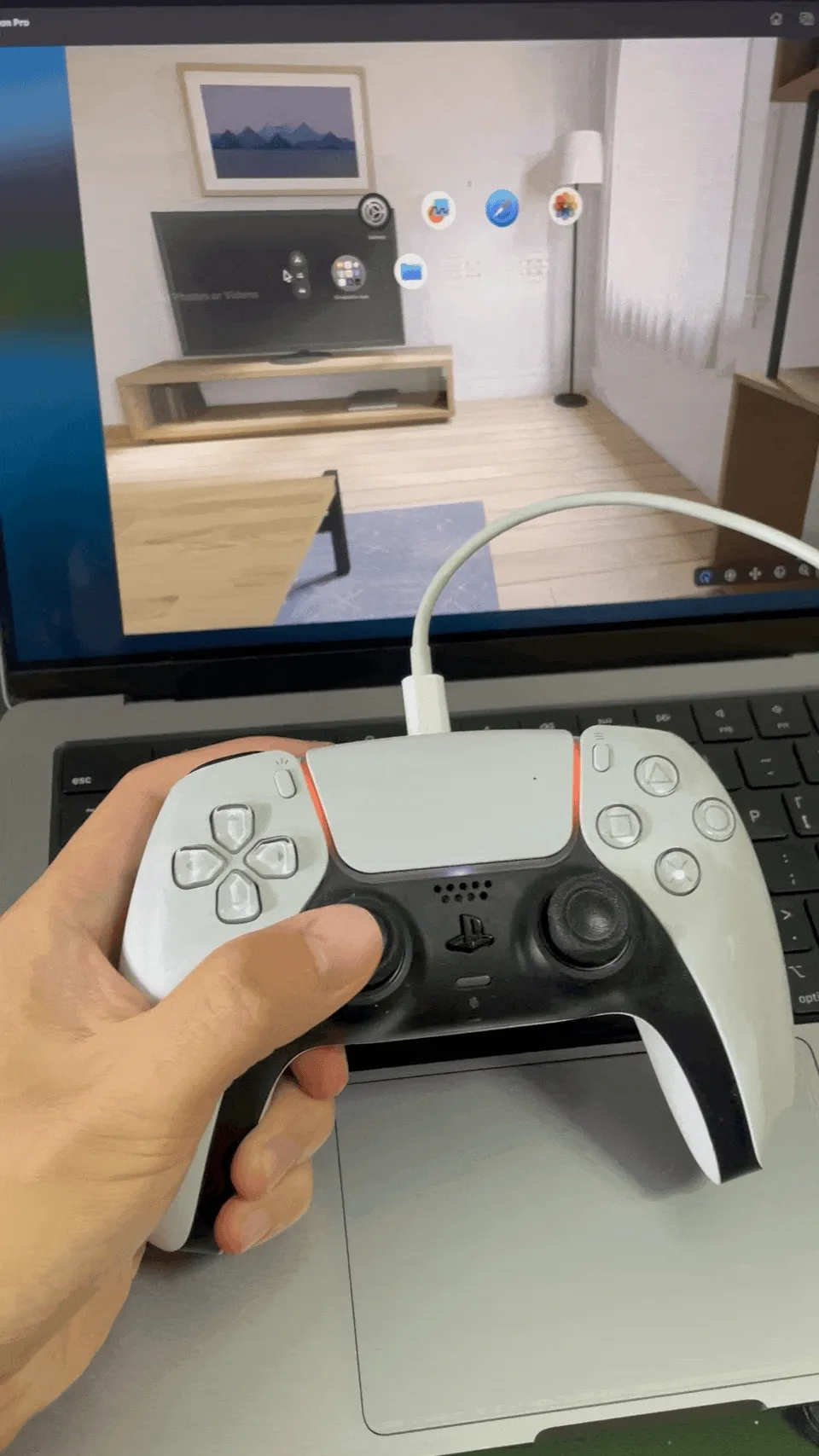

Although only Window apps can be developed for now, that doesn’t mean developers have nothing to work on. First, since visionOS supports game controllers, developers can add controller support just as they would for an iOS/iPadOS game (Apple thoughtfully provides Unity plugins for this).

Additionally, if your project isn’t using the Input System yet, you can start migrating now, since an official Apple session has already stated that Unity projects on visionOS require the Input System. In our visionOS Accelerator circle on Yixiaoke, we’re already seeing developers such as iHTCboy make their first attempts.

You can now develop MR apps on Meta Quest with AR Foundation!

Keywords: Unity, AR, VR

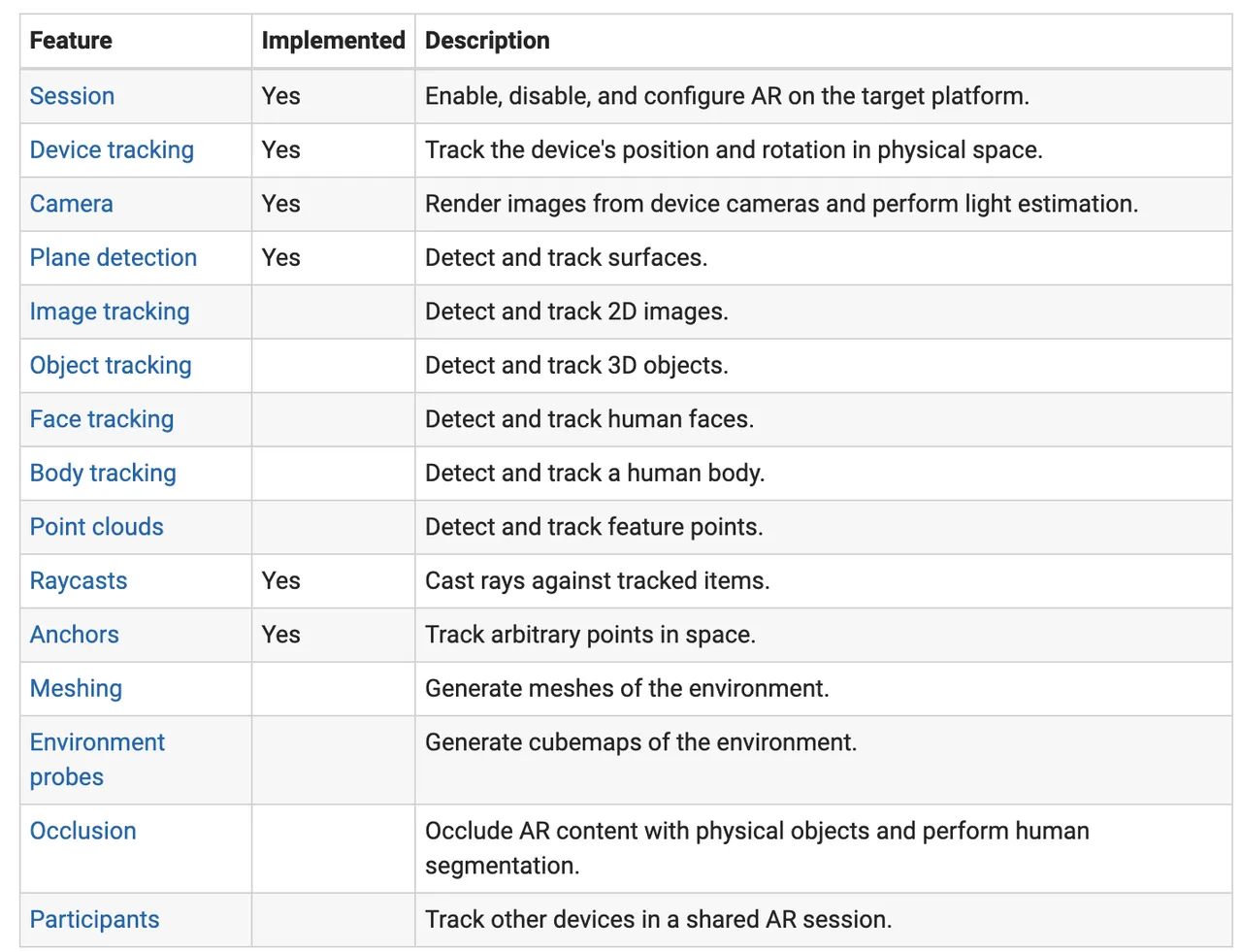

Unity’s AR Foundation is a cross-platform framework that many Unity developers use to build AR apps for iOS (based on ARKit), Android (based on ARCore) and HoloLens (based on OpenXR).

Now, with the release of Meta’s OpenXR Feature package, AR Foundation can also be used for developing MR apps on Meta Quest!

You can start development with:

- Unity 2021.3 or later

- AR Foundation 5.1.0-pre.6 or later

- OpenXR 1.7 or later

However, Meta Quest support in AR Foundation is still quite limited in the current versions, covering only a few capabilities such as 6DoF tracking, plane detection, anchors and raycasting. If your app relies heavily on other AR Foundation features, keep an eye on subsequent releases.

Idea

Daft Punk 10th anniversary AR album cover / What’s the music listening experience like on Apple Vision Pro?

Keywords: AR, Apple Vision Pro, music

What can AR, or spatial computing, bring to our lives? Most people first think of its ability to place objects in space. For artists, though, that vast space is a canvas for striking effects that flat surfaces cannot deliver.

Although Daft Punk disbanded in 2021, their status in electronic music remains undisputed. Their fourth studio album, Random Access Memories, is considered a masterpiece by many fans.

In 2023, on the 10th anniversary of Random Access Memories, Daft Punk released a commemorative edition. Unlike previous editions, this one came with a special AR effect on Snapchat tied to the album. Fans can use Snapchat to see Daft Punk’s iconic robot helmets on the album:

https://twitter.com/SnapAR/status/1656207544380006401

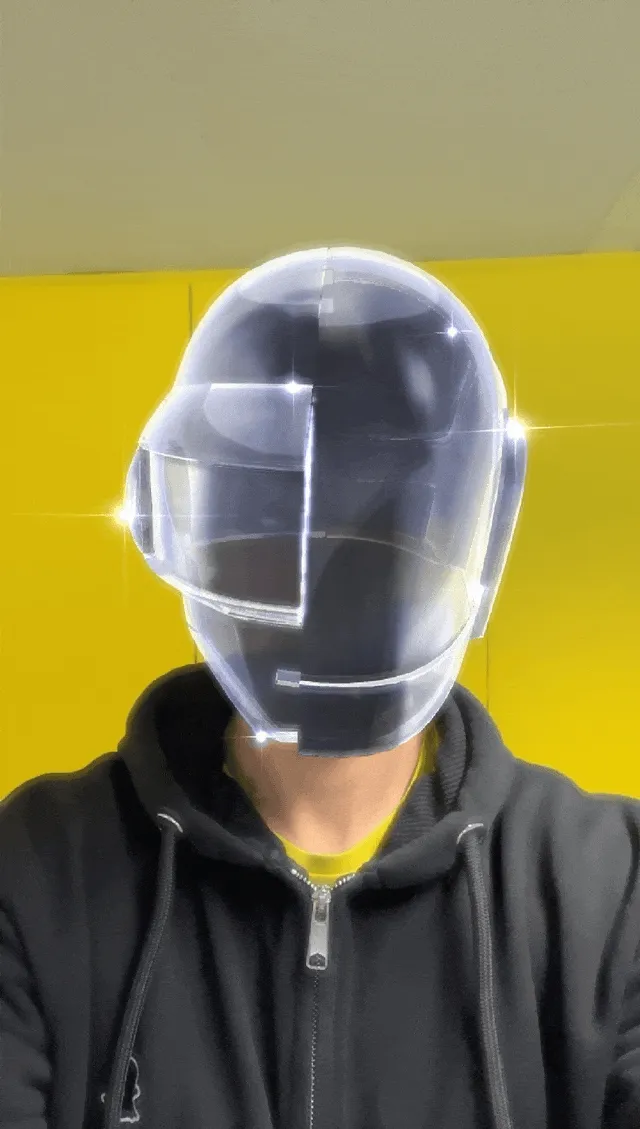

They can also use the Snapchat filter to wear the iconic robot helmet themselves.

If the AR examples above bring only small enhancements to the music experience, this short prototype video by Dev Khanna highlights the huge potential of spatial computing to transform it. In the video, a real speaker is overlaid with a music player interface in Apple Vision Pro. As the music plays, the speaker grilles even pulsate like water ripples in time with the sound pressure of the track.

https://twitter.com/CurieuxExplorer/status/1684089364731826176

Although it is just a simple concept demo, it already feels very different. I believe that in future spatial computing scenarios, music and effects will enable many “Wow” use cases.

Showcasing AR products with AI-generated QR codes

Keywords: AI-Generated, QR Code, AR

https://twitter.com/XRarchitect/status/1674472885090672640

Recently, a novel form of QR code has gone viral online. These codes are no longer traditional black-and-white squares; they look like attractive images that catch the eye at first glance. Remarkably, scanning them with a phone still leads to a valid website. Now, I▲N CURTIS has combined such AI-generated QR codes with AR to expand their use cases further.

Five cases of applying head-mounted displays in the creative industry

Keywords: VR, XR, creative industry

Varjo is an AR/VR device manufacturer from Finland. They recently showcased five AR/VR application scenarios on their website, which may offer practical inspiration to practitioners in the field (including you, reading this article).

https://www.youtube.com/watch?v=7Eo3WdOKYQk

Art in the Metaverse

Jani Leinonen is one of the most prominent figures in Finland’s contemporary art scene. Using Varjo XR-3 and Unity engine, he pioneered new forms of expression, bringing NFTs into the tangible realm, and unifying digital and real art in unprecedented ways.

VR art project: “Modules”

Digital artist Maxim Zhestkov launched his VR art experience “Modules” in Helsinki. “Modules” offers an immersive virtual environment that blends architecture, sculpture, sound, film and movement into an intense interactive experience for all visitors.

Experiencing history first-hand

Alma Hoque, Pauliina Lahti, Isra Rab and Lotta Veromaa developed a VR concept game called Ingeborg for the National Museum of Finland, aimed at building a bridge between history and modern technology. The game transports players to 16th-century Häme Castle to interact with the armed noblewoman Ingeborg Åkesdotter Tott.

Experiencing the thrill of extreme watersports

Cliff diving as an extreme sport is clearly not for the faint of heart. Even if you have the courage, it’s not easy to get to locations for such bold jumps. In a special exhibit called “Water — Breaking the Surface”, Red Bull lets visitors experience the realism of extreme watersports by Red Bull athletes through headsets.

Opera creation through VR

After two years of production, the Finnish National Opera and Ballet’s Tundra premiered on January 27, 2023. This work is the first opera globally conceived, prototyped and produced end-to-end using immersive technologies. Leveraging the powerful capabilities of VR headsets, the creative team significantly reduced costs and time, while effectively enhancing workplace safety.

Code

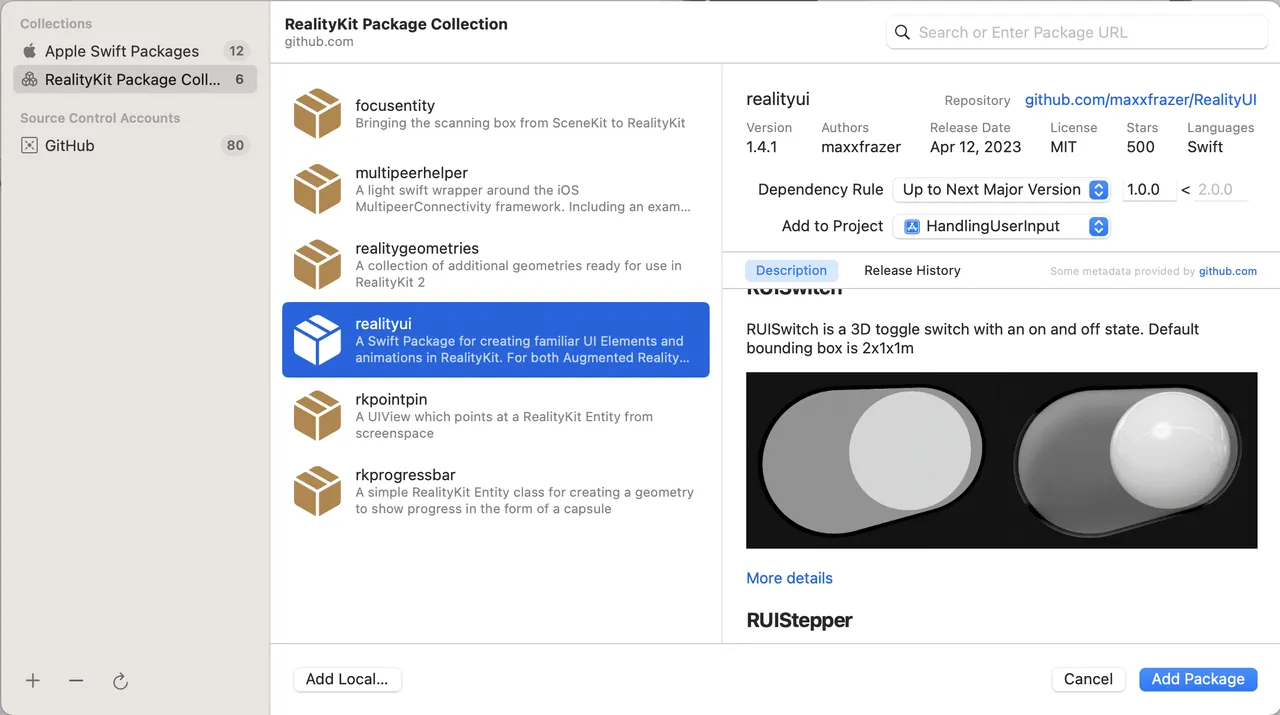

RealityKit Swift Package Collection

Keywords: RealityKit, Swift Package

Some readers may recall that we previously recommended RealityUI, a framework by AR developer Max Cobb (@maxxfrazer). He has written more than that one AR framework: FocusEntity, ARKit-SCNPath and others are also his. Now, using Apple’s Swift Package Collection feature, he has consolidated his RealityKit frameworks into one collection. Adding this single collection gives you access to all of his existing RealityKit frameworks, and you won’t miss new ones.

visionOS_30Days

Keywords: visionOS, ARKit

Many developers who follow ARKit have probably heard of AR_100Days, the wildly creative project by Hattori Satoshi. He recently started a visionOS 30-day challenge as well, open-sourced on GitHub. The challenge starts with simple text and 3D model displays and gradually digs deeper into visionOS capabilities and creative ideas, which makes it great for beginners who want to learn visionOS development by following along.

Swift3D 📐🔷

Keywords: SwiftUI, Metal

SwiftUI has never had a great out-of-the-box 3D view. SceneKit is still based on UIKit and requires bridging, and the RealityKit view brought to SwiftUI at WWDC 2023 focuses on AR scenes and is less customizable. Swift3D offers another option.

Swift3D is a custom 3D engine built on Metal whose syntax closely matches the SwiftUI DSL, so you can use it like any other SwiftUI view. With SwiftUI + Metal, it can efficiently render complex 3D scenes with custom lighting, transition animations and shaders for visual effects.

The framework can be installed with Swift Package Manager and is a good way to learn SwiftUI and Metal, especially for developers who have some 3D knowledge but are unfamiliar with SwiftUI.

Article

Tutorial: How to Make WebXR Games With A-Frame

Keywords: WebXR, Oculus Rift, HTC Vive, A-Frame

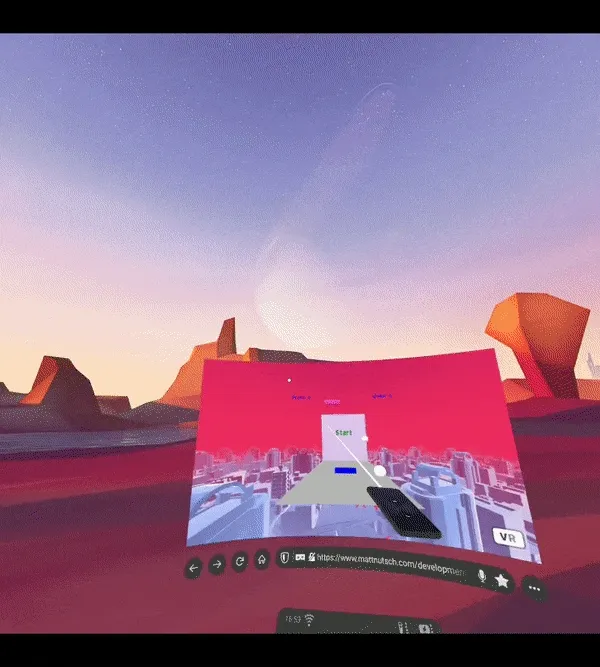

This article teaches how to build a WebXR game with JavaScript and Mozilla’s A-Frame library. The game can run on WebXR-capable devices such as Oculus Rift and HTC Vive.
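To give a feel for what A-Frame content looks like, here is a minimal sketch in TypeScript that generates the markup for a small brick wall and a ball. It is not taken from the tutorial. The tags (`a-scene`, `a-box`, `a-sphere`, `a-sky`) and the `position`, `color`, `width`, `height`, `depth` and `radius` attributes are standard A-Frame primitives, while the helper names `brickMarkup` and `sceneMarkup`, the `BrickGrid` type and all layout values are assumptions made up for illustration.

```typescript
// Hypothetical helper types and functions, not code from the tutorial.
// Only the A-Frame tag and attribute names are real; the layout is invented.
interface BrickGrid {
  rows: number;
  cols: number;
  spacing: number; // distance between brick centres, in metres
}

// Builds one <a-box> element per brick, centred horizontally around x = 0.
function brickMarkup(grid: BrickGrid): string[] {
  const bricks: string[] = [];
  for (let row = 0; row < grid.rows; row++) {
    for (let col = 0; col < grid.cols; col++) {
      const x = (col - (grid.cols - 1) / 2) * grid.spacing;
      // Rows stack upward from y = 1.5, at half the horizontal spacing.
      const y = 1.5 + row * grid.spacing * 0.5;
      bricks.push(
        `<a-box position="${x.toFixed(2)} ${y.toFixed(2)} -3" ` +
          `width="0.4" height="0.2" depth="0.2" color="#4CC3D9"></a-box>`
      );
    }
  }
  return bricks;
}

// Wraps the bricks, a ball and a dark sky in an <a-scene> element.
function sceneMarkup(grid: BrickGrid): string {
  const lines: string[] = ["<a-scene>"];
  for (const brick of brickMarkup(grid)) {
    lines.push("  " + brick);
  }
  lines.push('  <a-sphere position="0 1 -2" radius="0.1" color="#EF2D5E"></a-sphere>');
  lines.push('  <a-sky color="#111111"></a-sky>');
  lines.push("</a-scene>");
  return lines.join("\n");
}

const markup = sceneMarkup({ rows: 2, cols: 3, spacing: 0.5 });
console.log(markup);
```

Pasting the generated markup into a page that loads the A-Frame script should be enough to display a static scene. Game logic such as ball movement and collisions would be added with A-Frame components, which this sketch does not attempt.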

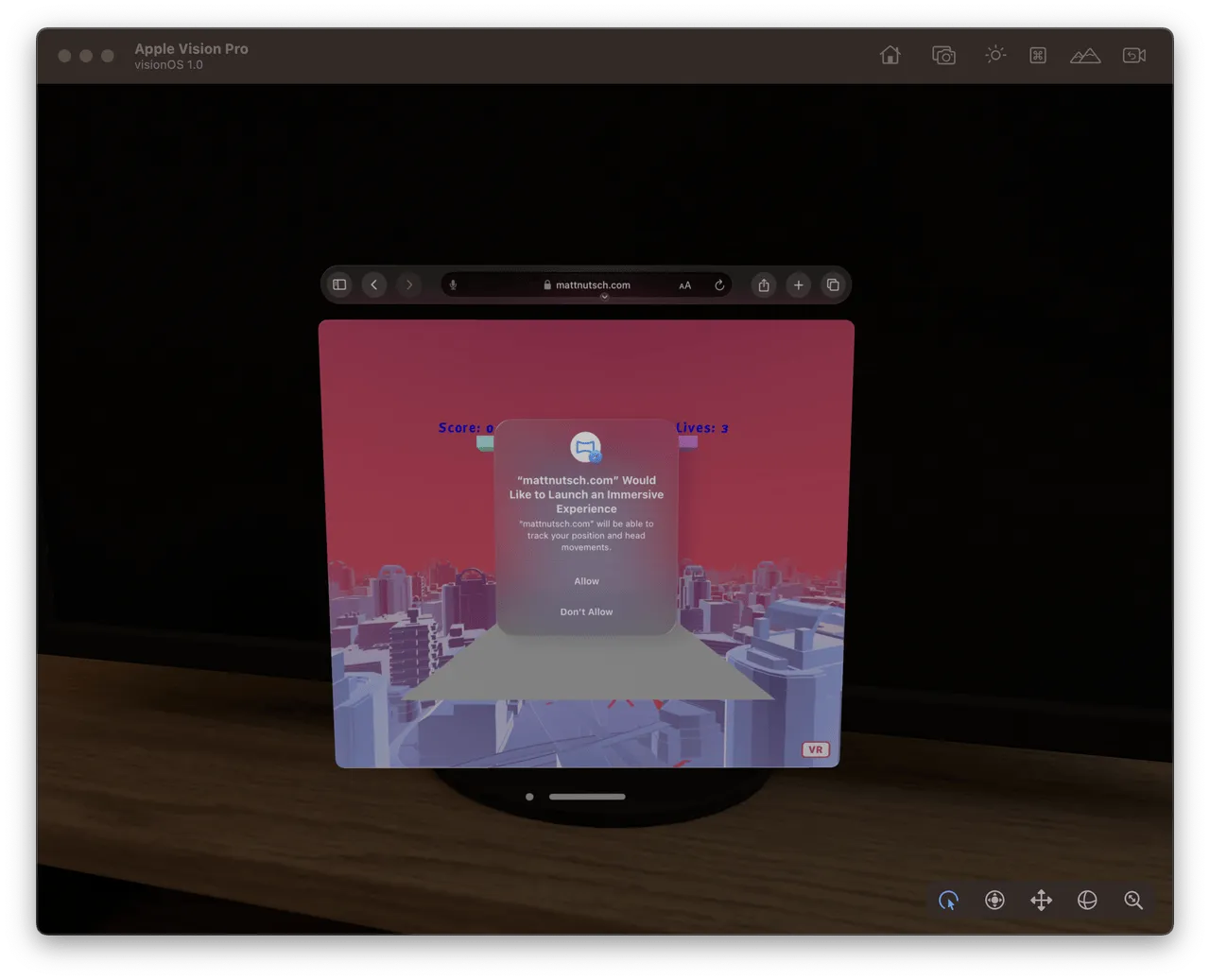

Additionally, Apple has stated that existing websites should work in Safari on visionOS, since it is a full browser. To go beyond flat web pages, Safari on visionOS also includes WebXR support for immersive experiences.

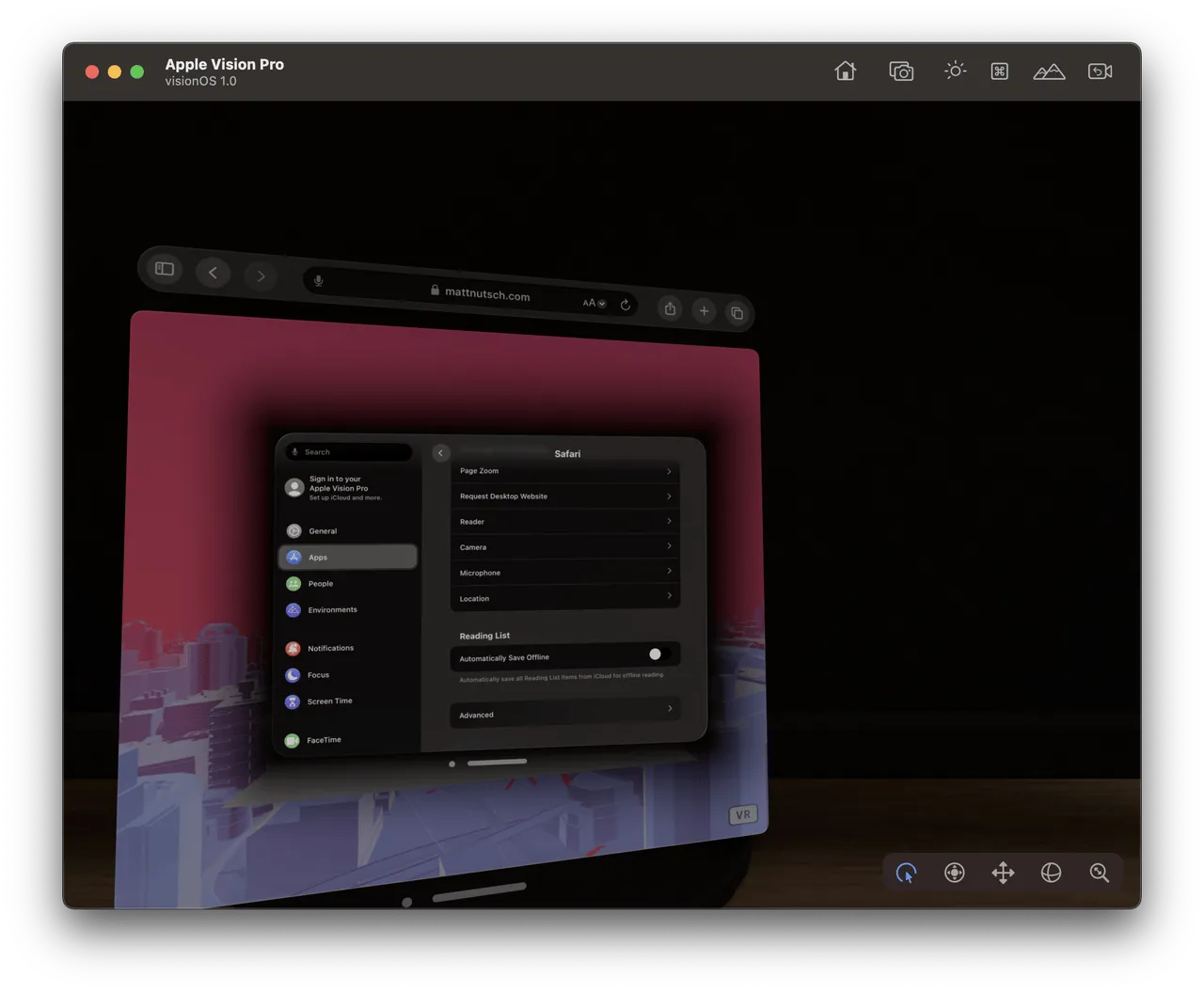

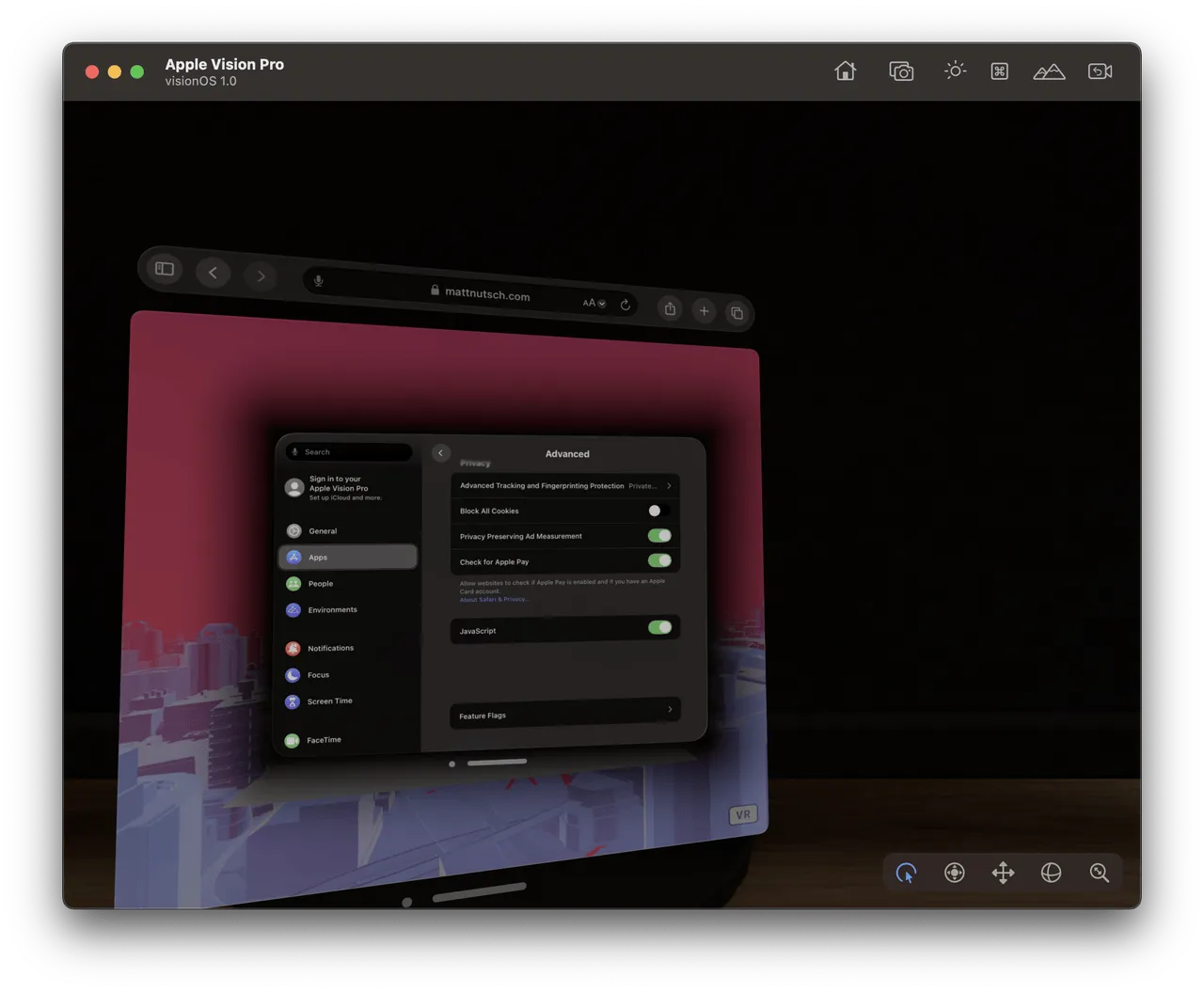

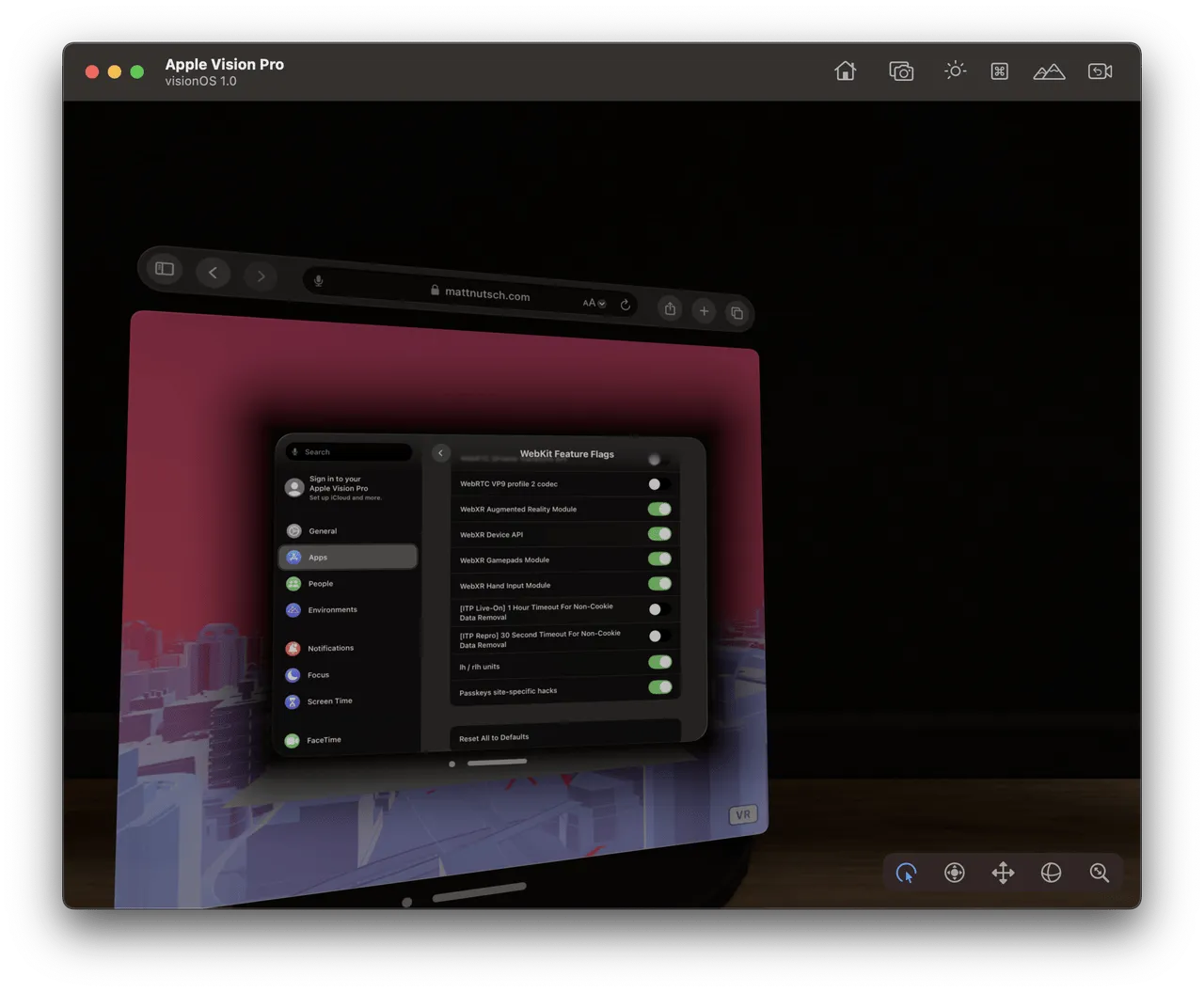

So the article is also relevant to Apple Vision Pro, and the game should eventually run on it too. For now, when testing in the visionOS simulator with WebXR enabled in the Safari settings, VR mode can be entered but the game cannot yet be interacted with, so further adaptation is needed.

Demo url: https://www.mattnutsch.com/breakoutwebxr/

Steps to enable WebXR in visionOS Safari: open Settings > Apps > Safari, scroll to the bottom and open Advanced > Feature Flags, then scroll to the bottom to find the WebXR toggles and enable them.

Close Settings and return to Safari. Refresh the page, then click the VR button at the bottom right to enter VR mode.
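A page can also check for WebXR itself before showing a VR button. The sketch below is a hedged example of such a check. `navigator.xr.isSessionSupported("immersive-vr")` is the standard WebXR Device API call; the function name `checkImmersiveVr`, the `NavigatorLike` and `XRSystemLike` types and the `VrSupport` result values are assumptions introduced here so that the code compiles and runs without browser typings.

```typescript
// Minimal structural types (hypothetical) so the sketch compiles without DOM typings.
interface XRSystemLike {
  isSessionSupported(mode: string): Promise<boolean>;
}
interface NavigatorLike {
  xr?: XRSystemLike;
}

type VrSupport = "supported" | "unsupported" | "no-webxr";

// Reports whether immersive VR sessions look available on the given navigator.
async function checkImmersiveVr(nav: NavigatorLike): Promise<VrSupport> {
  // navigator.xr is absent when WebXR is disabled or not implemented.
  if (!nav.xr) {
    return "no-webxr";
  }
  try {
    const ok = await nav.xr.isSessionSupported("immersive-vr");
    return ok ? "supported" : "unsupported";
  } catch (_err) {
    // The promise can reject, for example when a permissions policy blocks XR.
    return "unsupported";
  }
}

// Stand-in for the browser's navigator so the example also runs outside a browser.
const fakeNavigator: NavigatorLike = {
  xr: { isSessionSupported: async (mode: string) => mode === "immersive-vr" },
};

checkImmersiveVr(fakeNavigator).then((result) => console.log(result)); // logs "supported"
```

In a real page you would pass the browser's own `navigator` object instead of `fakeNavigator`. A result of `"no-webxr"` in visionOS Safari would suggest that the feature flags are still off.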

Karl Guttag’s series of analysis articles on Apple Vision Pro

Keywords: Apple Vision Pro, Quest Pro, Karl Guttag

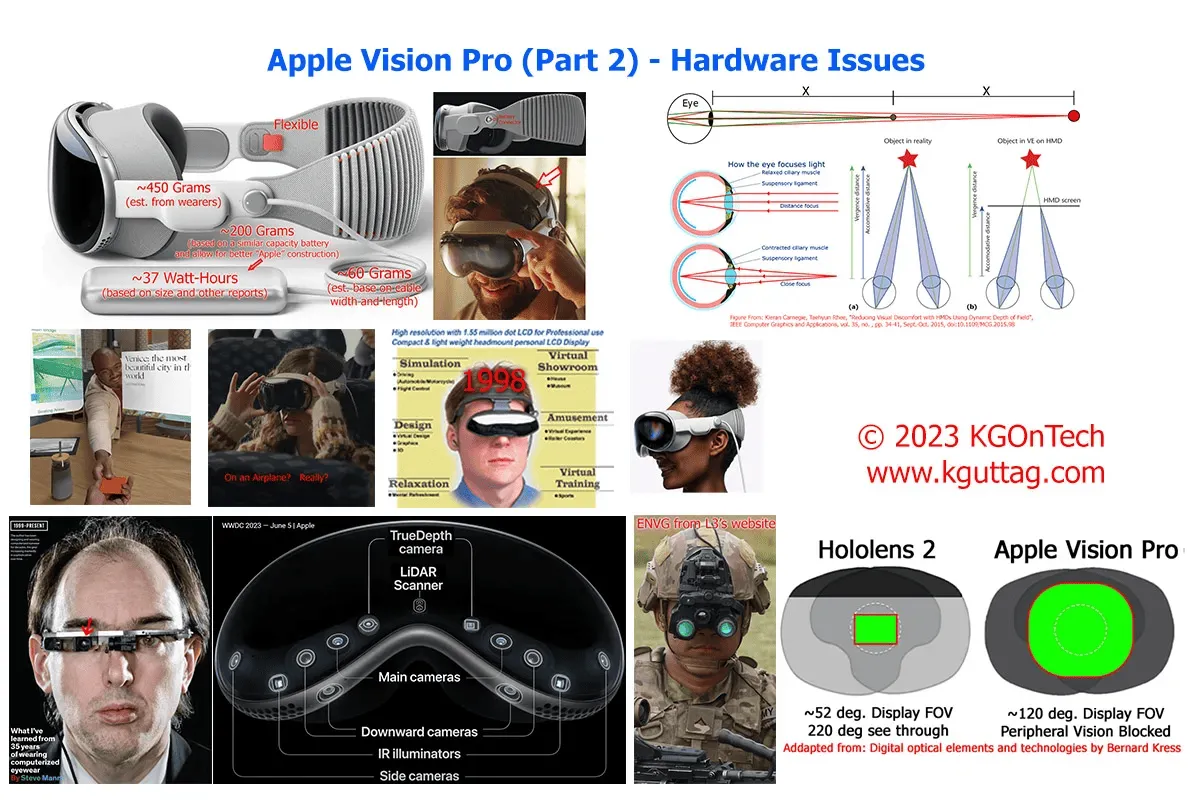

Karl Guttag is an expert with 40 years of consumer electronics industry experience. He has extensive expertise in graphics and image processors, digital signal processing (DSP), memory architectures, display devices (LCOS and DLP) and display systems including augmented reality and virtual reality headsets. His blog KGOnTech serves as a platform for sharing technology perspectives, aggregating his knowledge across display devices, headsets, projectors, graphics accelerators and video game consoles.

After the Apple Vision Pro unveiling, Karl Guttag wrote four articles analyzing the product from different angles, providing valuable industry insights.

In “What Apple Got Right Compared to The Meta Quest Pro”, Karl Guttag compares Apple’s Vision Pro and Meta’s Quest Pro headsets, focusing on differences in video see-through (VST) perception, commercial applications, display contrast, optics, gesture tracking, eye tracking, processing power, battery life and more. He believes Vision Pro does better than Quest Pro overall, but that proving the prospects of VST will take time. The human visual system is complex, and current AR/VR optical display technology still cannot break physical limits, so solving these problems will take more talent, time and money.

In “Hardware Issues”, Karl Guttag points out some deficiencies in Apple’s Vision Pro, including vergence-accommodation conflict (VAC), foveated rendering, safety and ergonomics. He thinks that, at this stage, Vision Pro still has many issues, particularly in striking the right balance between VST perception and appearance. Although Vision Pro’s perception far exceeds that of Quest Pro, some basic safety problems remain unresolved.

In “Why It May Be Lousy for Watching Movies On a Plane”, Karl Guttag explores the problems of using VR headsets for inflight movies, including FOV size, resolution, vestibulo-ocular reflex (VOR) effects and ergonomics. He argues that VR viewing still cannot fully replace traditional movie viewing. Although Vision Pro is better than competitors in aspects like FOV and resolution, challenges like eye tracking and battery life still need addressing.

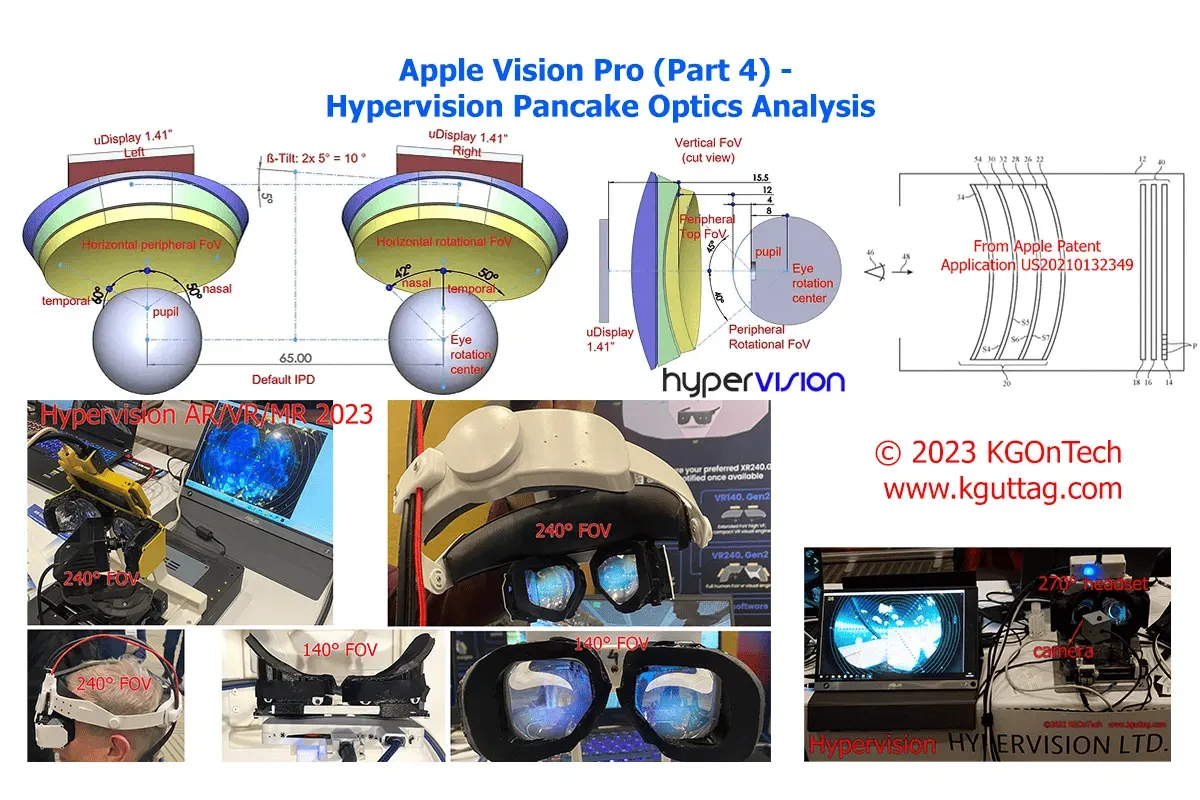

In “Hypervision Pancake Optics Analysis”, Karl Guttag presents Hypervision’s analysis of the Vision Pro lenses. Vision Pro adopts a three-lens configuration with 2 asymmetric freeform lenses having rotational symmetry. The predicted binocular horizontal FOV is 100 degrees and the vertical FOV is 90 degrees. The estimated pixels per degree (PPD) is around 34, with a difference of around 10% in PPD at the center sweet spot. The pancake lens has high magnification and relies on alignment of the visual axis and the optical axis, so it is potentially limited by the eye’s range of motion.
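For readers unfamiliar with PPD, the arithmetic behind such estimates can be sketched as follows. This is a simplified illustration, not Hypervision’s method: it treats PPD as a uniform average (pixels across a field of view divided by that field of view in degrees), whereas real pancake optics vary across the field, as the roughly 10% difference mentioned above shows. The function names and the pixel count are hypothetical, and the FOV figures quoted in the article are binocular estimates, so they are used here only as sample inputs.

```typescript
// Average pixels per degree: pixels spanning a field of view divided by that
// field of view in degrees. Real optics are not uniform, so this is only a mean.
function averagePpd(pixelsAcross: number, fovDegrees: number): number {
  if (pixelsAcross <= 0 || fovDegrees <= 0) {
    throw new RangeError("pixelsAcross and fovDegrees must be positive");
  }
  return pixelsAcross / fovDegrees;
}

// Inverse relation: pixel count implied by an average PPD over a field of view.
function pixelsForPpd(ppd: number, fovDegrees: number): number {
  if (ppd <= 0 || fovDegrees <= 0) {
    throw new RangeError("ppd and fovDegrees must be positive");
  }
  return Math.round(ppd * fovDegrees);
}

// Hypothetical inputs for illustration only, not measured Vision Pro specs.
const examplePixelsAcross = 3400;
const exampleFovDegrees = 100;

console.log(averagePpd(examplePixelsAcross, exampleFovDegrees)); // 34
console.log(pixelsForPpd(34, 90)); // 3060
```

The point of the sketch is the trade-off it exposes: for a fixed panel resolution, widening the FOV lowers the average PPD, which is why FOV and sharpness estimates are usually quoted together.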

XReality.Zone