XR World Weekly 004

Highlights

- Apple unveils visionOS, the operating system for its first-generation AR headset, at WWDC23

- Apple releases Xcode 15 beta 4, provides visionOS SDK and simulator

- Apple Design Resources releases design drafts for visionOS

Big News

Apple unveils visionOS, the operating system for its first-generation AR headset, at WWDC23

Keywords: visionOS, XR, Apple Vision Pro, ARKit, RealityKit, Unity

Apple’s defined spatial immersion

After watching WWDC23’s keynote, as a developer, are you also eager to unlock your talent tree in visionOS? Although the actual device won’t be available until next year, this doesn’t stop us from learning as much as possible about this entirely new computing platform based on what Apple has revealed so far.

To kick off this series, let’s try to understand visionOS at a high level, as far as we can from a developer’s point of view.

visionOS provides users with an infinite spatial canvas. We can imagine it as the display being “bent” into a ring surrounding the user.

To create realistic and rich immersive experiences, Apple defined three concepts:

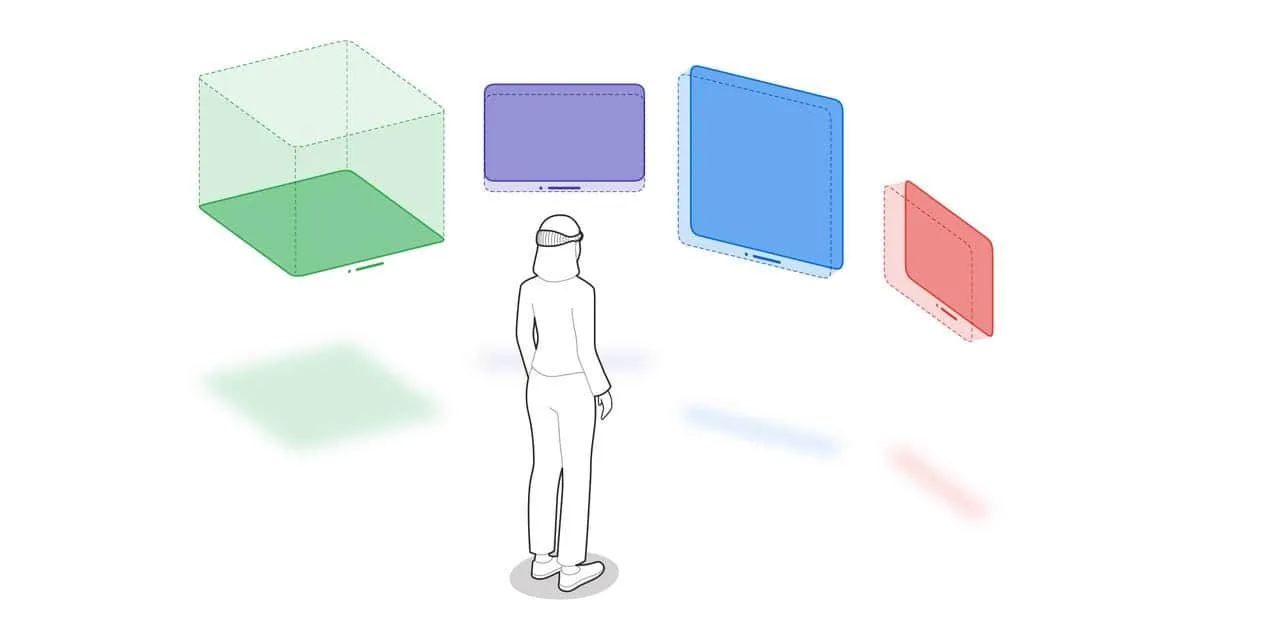

- Window

- Volume

- Space

Let’s first look at Windows. As with traditional windows, each app in visionOS can create multiple windows, each of which is a scene built with SwiftUI. They are placed in the user’s physical space in a certain order, like the purple, blue and red windows in the image above, and they may belong to the same app or to different apps. Windows can display 2D or 3D content, and we can adjust their position and size. In short, using a window in visionOS is almost the same as using one in macOS.

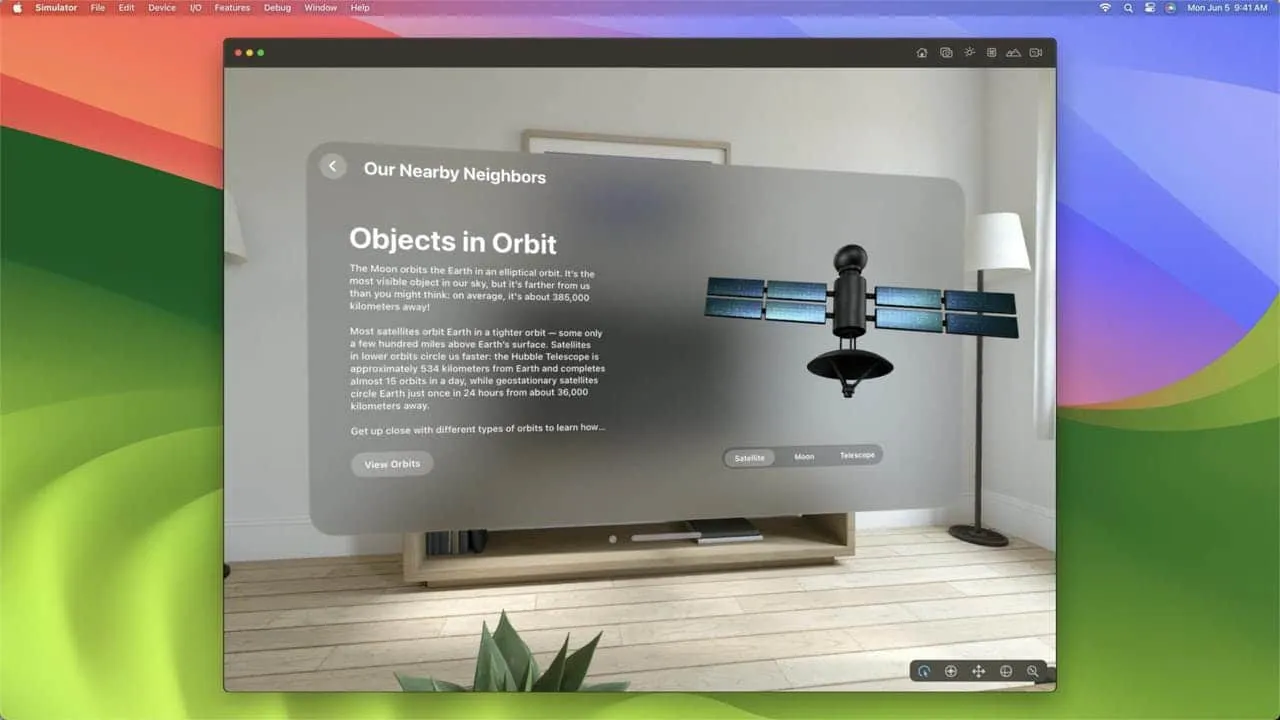

For example, the image below shows a visionOS window loaded with both 2D and 3D content.

Let’s now look at Volume, which we can think of as the container in visionOS dedicated to presenting 3D objects, i.e. the green part in the image above. Objects loaded into a Volume can be observed from a 360° perspective through Apple Vision Pro. Volume is also a SwiftUI-built scene, so we can use the same layout techniques as traditional UIs to constrain its form. For rendering, Volume uses RealityKit, allowing a 3D object to look more realistic in physical space.

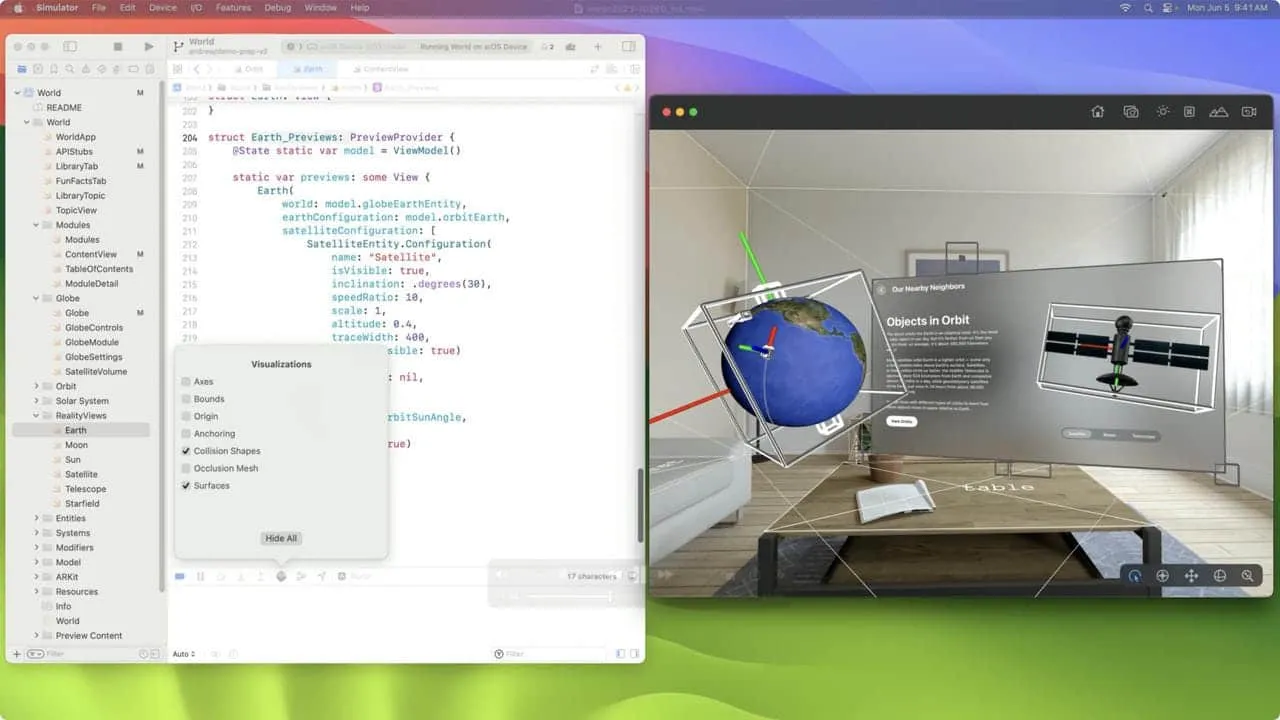

For example, the container on the left holding the globe in the image below is a Volume.

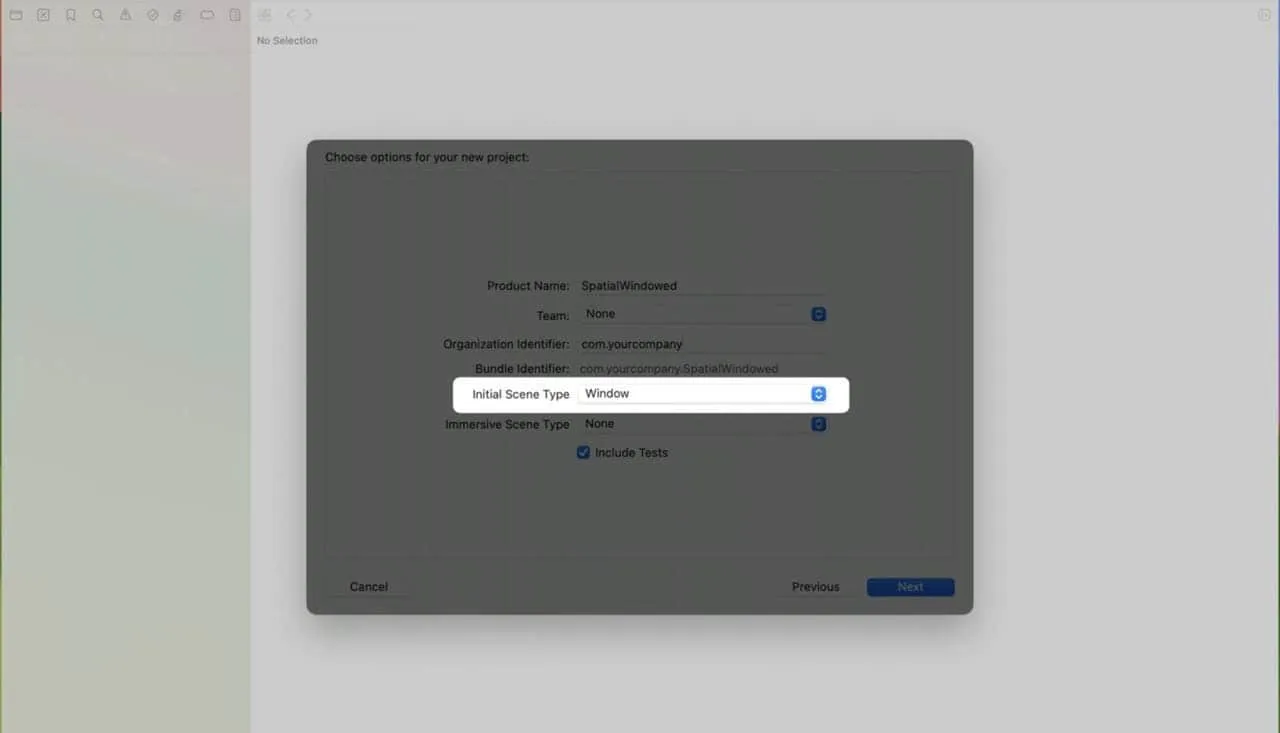

When creating a visionOS app, we can choose either Window or Volume as the initial carrier, and Xcode will generate different template code. Of course, we can continue adding other needed containers afterwards.

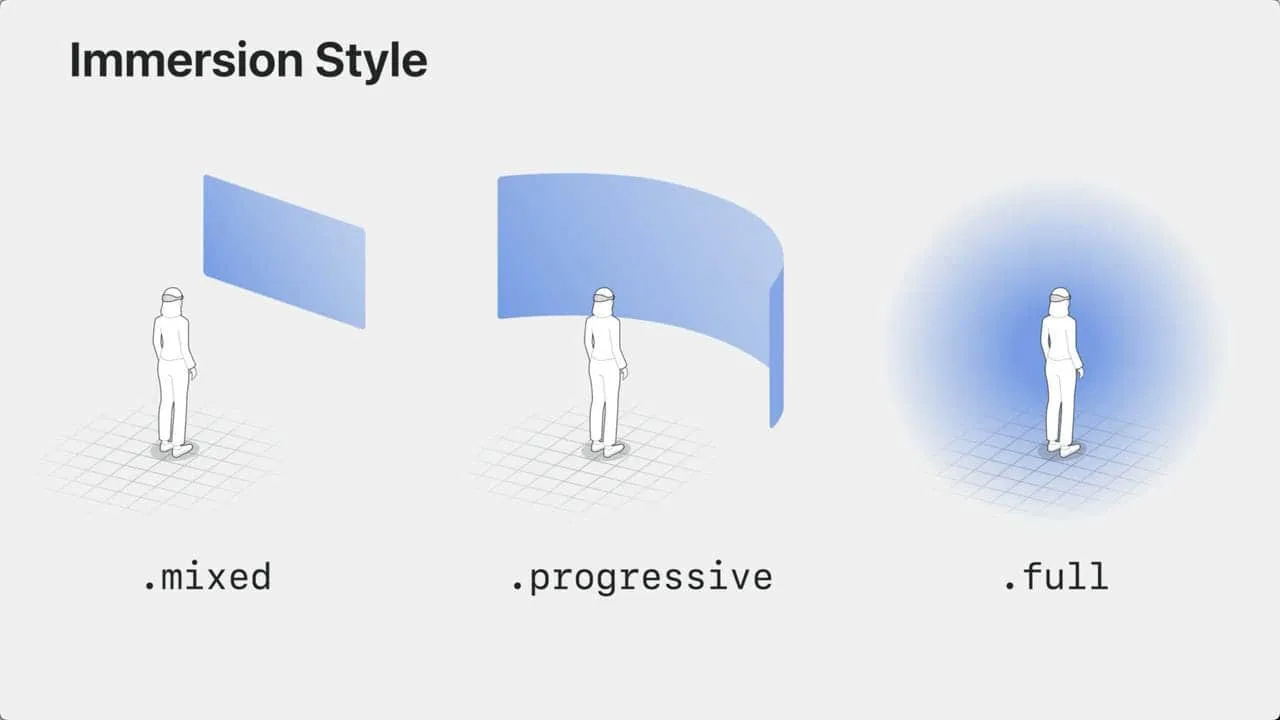
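As a minimal sketch of how a Window and a Volume might be declared side by side in SwiftUI (not the exact code Xcode’s templates generate): the scene identifiers "main" and "globe" and the asset name "Globe" are hypothetical, and the code assumes the visionOS SDK.

```swift
import SwiftUI
import RealityKit

@main
struct WindowAndVolumeSketchApp: App {
    var body: some Scene {
        // A regular window: SwiftUI content, positioned and resized by the user.
        WindowGroup(id: "main") {
            LauncherView()
        }

        // A volume: a window group with the volumetric style and a 3D default size.
        WindowGroup(id: "globe") {
            // "Globe" is a hypothetical model name bundled with the app.
            Model3D(named: "Globe")
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.6, height: 0.6, depth: 0.6, in: .meters)
    }
}

struct LauncherView: View {
    // Environment action used to open another scene by its identifier.
    @Environment(\.openWindow) private var openWindow

    var body: some View {
        Button("Show globe") {
            openWindow(id: "globe")
        }
        .padding()
    }
}
```

Starting from a Window and opening the Volume on demand mirrors the idea above that other containers can be added after the initial one.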

The last concept is Space, which we can understand as visionOS’ two different modes for presenting content.

One mode is called shared space, which we can think of as a “productivity mode”. This mode wraps our desktop around us, giving us an almost infinitely large display to work with, and app windows appear in that space one after another. This is also the default option when creating a visionOS app in Xcode.

The other mode is called full space, which we can understand as an “entertainment” mode. In this mode, one app occupies the user’s entire space, exclusively showing its own Windows and Volumes. Moreover, in this mode, users can not only use predefined spatial gestures, but also create specific interactions through the hand skeleton API provided by ARKit. When we select this mode in Xcode, an isolated scene is added to the project specifically for defining “spatial exclusive” content. For this, visionOS provides two different forms of exclusivity:

- One is called Passthrough, where Apple Vision Pro works in AR mode. Users stay in contact with the real world, but the only virtual content in it is what your app creates. For example, a home furnishing app can show users the final furnished look this way. In dedicated rooms such as recording studios, adding Apple’s spatial audio can make the blend of real and virtual more seamless, so users feel the content is already surrounding them.

- The other is called Fully Immersive, where Apple Vision Pro works in VR mode. It can render the user’s space into a specific scene, such as: movie theater, starry sky, nature, cockpit, etc. At this point users are temporarily isolated from the real world, fully immersed in the virtual world created by the app.

Of course, these two forms are not mutually exclusive; an app can transition from one to the other.
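The sketch below shows one way the “isolated scene” and the transition between the two forms could look in SwiftUI, assuming the release visionOS SDK, where passthrough corresponds to the `.mixed` immersion style and fully immersive to `.full`. The scene identifier "scene" and all view names are hypothetical.

```swift
import SwiftUI
import RealityKit

@main
struct FullSpaceSketchApp: App {
    // The current immersion style; changing it transitions between the two forms.
    @State private var style: ImmersionStyle = .mixed

    var body: some Scene {
        WindowGroup {
            ControlPanel(style: $style)
        }

        // The separate scene that holds the app's exclusive spatial content.
        ImmersiveSpace(id: "scene") {
            RealityView { content in
                let sphere = ModelEntity(
                    mesh: .generateSphere(radius: 0.1),
                    materials: [SimpleMaterial(color: .blue, isMetallic: false)]
                )
                sphere.position = [0, 1.5, -1] // roughly eye height, 1 m ahead
                content.add(sphere)
            }
        }
        .immersionStyle(selection: $style, in: .mixed, .full)
    }
}

struct ControlPanel: View {
    @Binding var style: ImmersionStyle
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace
    @Environment(\.dismissImmersiveSpace) private var dismissImmersiveSpace

    var body: some View {
        VStack {
            Button("Enter full space") {
                Task { _ = await openImmersiveSpace(id: "scene") }
            }
            Button("Passthrough") { style = .mixed }
            Button("Fully immersive") { style = .full }
            Button("Back to shared space") {
                Task { await dismissImmersiveSpace() }
            }
        }
        .padding()
    }
}
```

Keeping the style in a single piece of state lets the system animate the change rather than the app tearing down and rebuilding its content.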

Therefore, when building a visionOS app, how to start with a Window, how to showcase 3D content with Volumes, how to smoothly and naturally switch between “productivity” and “entertainment” modes, and how to make good use of user space, are four questions Apple has raised for us. We might think about our own visionOS apps starting from these perspectives.

Apple’s defined spatial interactions

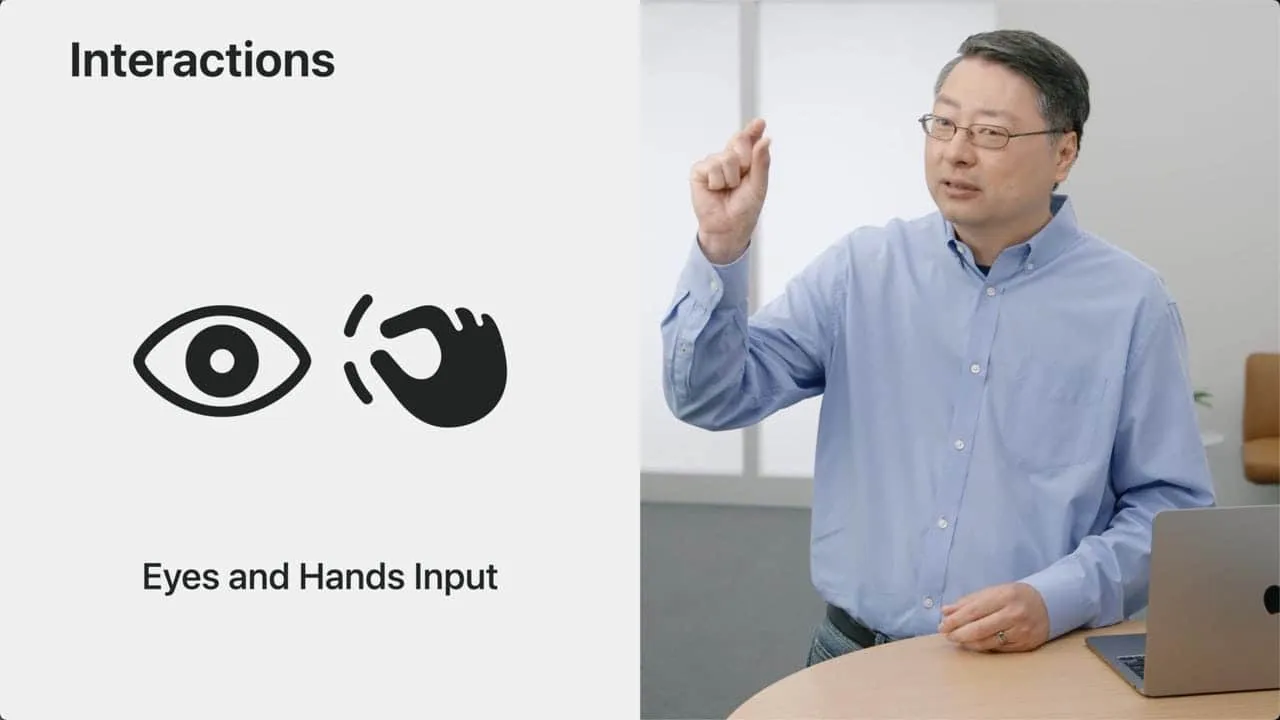

When our interactions migrate from a flat surface to a 3D space, some ways of interaction will naturally be extended. For operating a 3D spatial system, Apple has defined the following common interactions:

First, looking at an area and then pinching two fingers together selects an object.

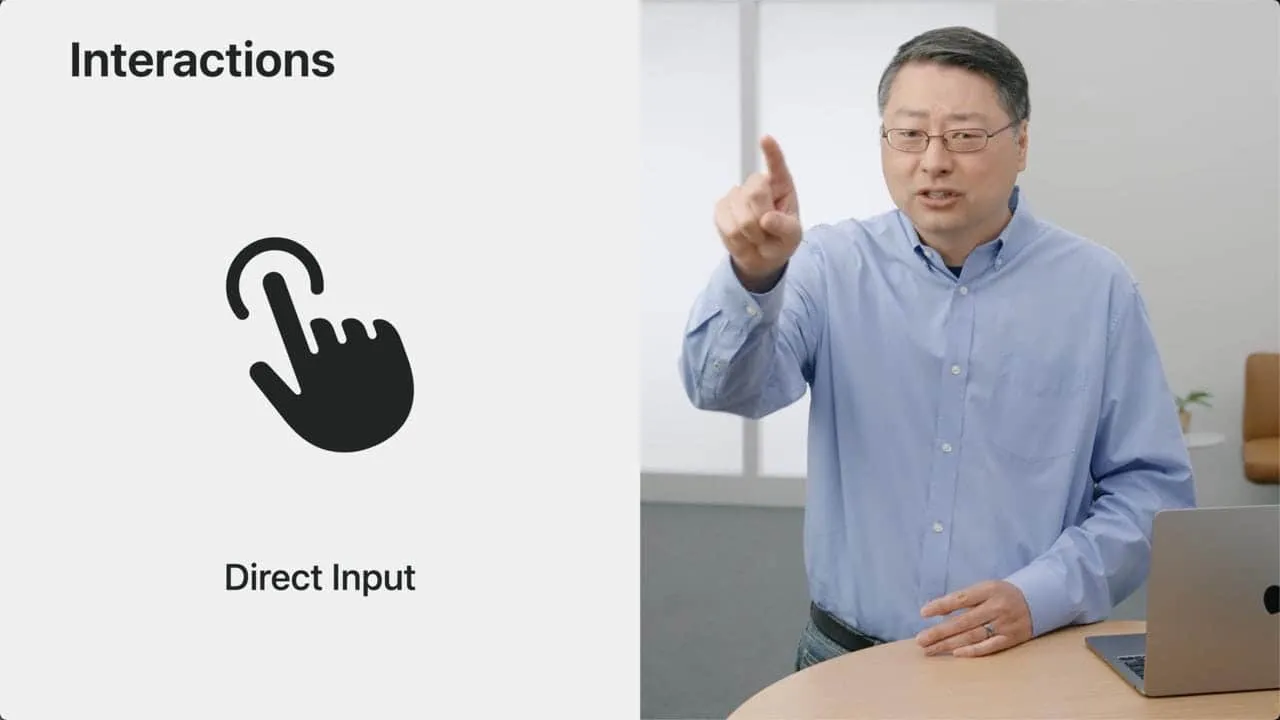

Next, looking at an object and then tapping it directly with a hand.

It’s easy to imagine that since the above two operations are supported, visionOS can of course also support gestures like long press, drag, rotate, zoom, etc. The system can automatically recognize them and pass corresponding touch events to apps.

Moreover, these gestures can not only manipulate the UI, but also interact with RealityKit objects, such as: adding elements to 3D objects, manipulating 3D objects, playing with 3D Rubik’s Cubes or chess pieces, etc.
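To make this concrete, here is a small sketch of a system tap gesture targeting a RealityKit entity, assuming the release visionOS SDK. The view name and the “grow on tap” behaviour are purely illustrative.

```swift
import SwiftUI
import RealityKit

struct TappableCubeView: View {
    var body: some View {
        RealityView { content in
            let cube = ModelEntity(
                mesh: .generateBox(size: 0.2),
                materials: [SimpleMaterial(color: .orange, isMetallic: false)]
            )
            // An entity needs an input target and a collision shape
            // before the system will route gestures to it.
            cube.components.set(InputTargetComponent())
            cube.generateCollisionShapes(recursive: false)
            content.add(cube)
        }
        .gesture(
            SpatialTapGesture()
                .targetedToAnyEntity()
                .onEnded { value in
                    // Illustrative reaction: enlarge the tapped entity by 20%.
                    value.entity.scale *= 1.2
                }
        )
    }
}
```

The same pattern applies to drag, rotate and magnify gestures: the app receives the recognized gesture and the targeted entity, not raw hand or eye data.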

Third, when an app runs in full space mode, developers can also leverage ARKit’s hand skeleton tracking to develop custom gestures. For example, blocks can be smashed with a horizontal swipe of the hand.
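A rough sketch of reading hand skeleton data follows, assuming the ARKit API as it shipped in the release visionOS SDK (names differed in the early betas), an open full space, and a hand-tracking usage description in Info.plist. The function name, the 5 cm threshold and the very naive swipe check are hypothetical and only illustrate where custom gesture logic would go.

```swift
import ARKit
import simd

// Hypothetical helper: watches the right index fingertip and reports
// large horizontal jumps between consecutive updates.
func watchForHorizontalSwipes() async {
    guard HandTrackingProvider.isSupported else { return }

    let session = ARKitSession()
    let hands = HandTrackingProvider()

    do {
        try await session.run([hands])
    } catch {
        print("Could not start hand tracking: \(error)")
        return
    }

    var lastX: Float?
    for await update in hands.anchorUpdates {
        let anchor = update.anchor
        guard anchor.chirality == .right,
              anchor.isTracked,
              let skeleton = anchor.handSkeleton else { continue }

        let tip = skeleton.joint(.indexFingerTip)
        guard tip.isTracked else { continue }

        // Joint transform expressed in world (origin) space.
        let originFromTip = anchor.originFromAnchorTransform * tip.anchorFromJointTransform
        let x = originFromTip.columns.3.x

        // Assumed threshold: 5 cm of sideways movement between updates.
        if let previous = lastX, abs(x - previous) > 0.05 {
            print("Possible horizontal swipe")
        }
        lastX = x
    }
}
```

A real recognizer would also look at timing and direction over several frames; this only shows where the joint positions come from.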

Lastly, support for traditional input peripherals:

For example: keyboard, trackpad, and game controllers. This is essential for working with Apple Vision Pro and playing traditional games. So applications in visionOS do not have to abandon traditional input devices. This is something to note when developing a visionOS app.

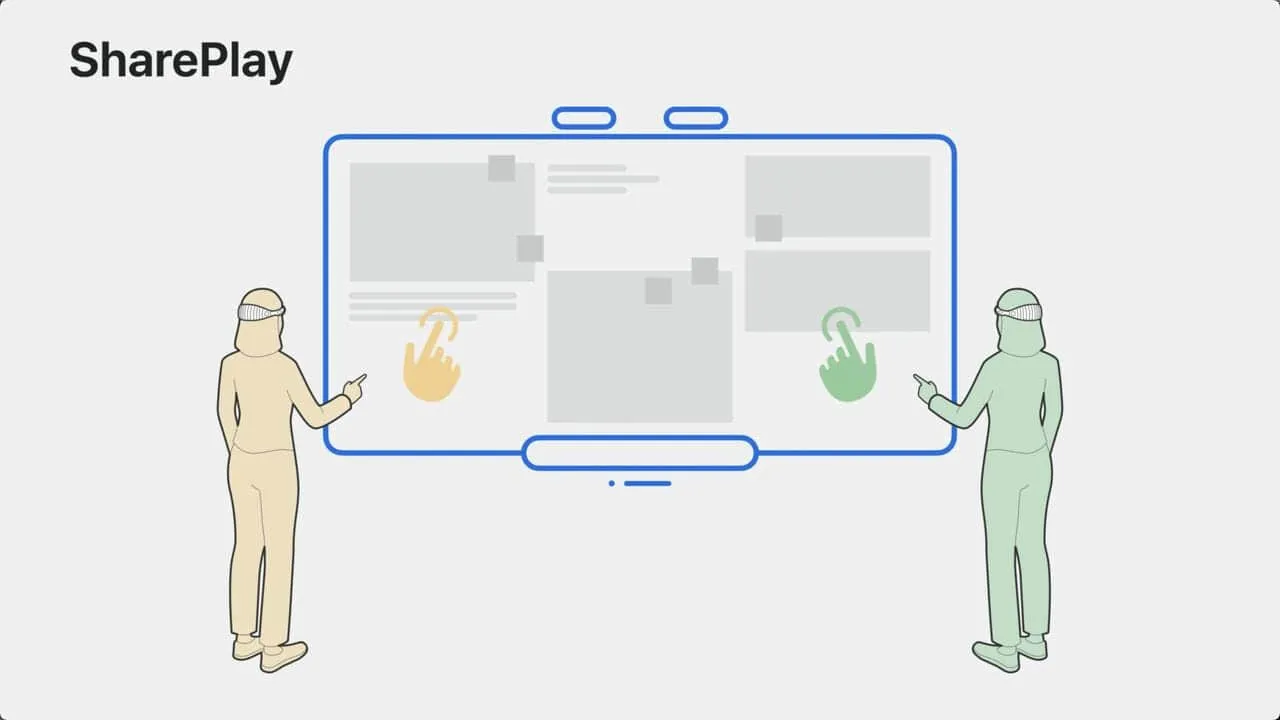

The above describes actions by a single user of Apple Vision Pro. But in Apple’s view, spatial interactions also include multi-user collaboration. This collaboration has two aspects:

- One is synchronizing the presented content. For example, when one person rotates a model, the others should see the same rotation.

- The other is synchronizing interactions, which can create the effect of people discussing and communicating together in person.

Both capabilities are enabled through SharePlay, which is built on the GroupActivities framework. Our current task is not to explore the details; it is enough to understand that Apple’s defined spatial interactions cover both individual operation and multi-user collaboration.
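For orientation only, this is roughly what synchronizing a model rotation over SharePlay could look like with the GroupActivities framework. The activity `ModelReview`, the message type `RotationMessage` and both function names are hypothetical.

```swift
import GroupActivities

// Hypothetical activity describing a shared model-review session.
struct ModelReview: GroupActivity {
    var metadata: GroupActivityMetadata {
        var metadata = GroupActivityMetadata()
        metadata.title = "Review model"
        metadata.type = .generic
        return metadata
    }
}

// Hypothetical payload: the model's rotation around the vertical axis.
struct RotationMessage: Codable {
    var yawRadians: Double
}

// Offer the activity to the people in the current call.
func startSharing() async {
    do {
        _ = try await ModelReview().activate()
    } catch {
        print("Could not activate SharePlay: \(error)")
    }
}

// Join incoming sessions and apply rotations sent by other participants.
// Sketch only: this handles one session at a time.
func observeSessions(apply: @escaping (Double) -> Void) async {
    for await session in ModelReview.sessions() {
        let messenger = GroupSessionMessenger(session: session)
        session.join()

        for await (message, _) in messenger.messages(of: RotationMessage.self) {
            apply(message.yawRadians)
        }
    }
}
```

The local side would call `messenger.send(RotationMessage(...))` whenever the user rotates the model, so every participant converges on the same presentation.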

Apple’s defined spatial privacy

After discussing interactions, let’s talk about user privacy, which Apple always emphasizes. visionOS is no exception: by default it gives apps only the information they need to support interaction. For example:

- User hand motions are parsed into tap or touch events, apps do not get access to detailed data about the forearm, wrist and hand;

- User eye motions are parsed into focused windows, apps do not know the actual content seen by the user in physical space;

If apps need further information, they must request the user’s permission.
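As an illustration, requesting access to detailed hand data through ARKit might look like the sketch below, assuming the release visionOS SDK and a matching usage description in Info.plist. The function name is hypothetical.

```swift
import ARKit

// Hypothetical helper: asks the user for hand-tracking access and
// reports whether it was granted.
func requestHandTrackingAccess() async -> Bool {
    let session = ARKitSession()

    // Presents the system permission prompt if the user has not decided yet.
    let results = await session.requestAuthorization(for: [.handTracking])

    return results[.handTracking] == .allowed
}
```

If the user declines, the app still receives the system gestures described earlier; it just cannot read the raw skeleton data.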

This concludes the essential theoretical knowledge for learning visionOS development. Next, let’s talk about what we can specifically do before getting the actual device.

Which technologies to focus on now

For frameworks, we can start gaining knowledge in these four areas. First let’s talk about the most important one:

- SwiftUI: According to Apple, the aforementioned Window / Volume / Space can all be created with SwiftUI, and existing iOS or iPadOS apps made with SwiftUI can also seamlessly run in visionOS. This year, SwiftUI also added support for depth, gestures and 3D immersion. In summary, SwiftUI is the best choice for building visionOS apps. Even if you’ve been entrenched in the UIKit camp, now is the time to consider expanding your skillset.

Next are three that may be unfamiliar if you have been a conventional app developer, so start getting up to speed early.

- RealityKit: If you’ve done 3D development on iOS before, think of it as SceneKit in the AR domain, and many of the programming concepts are similar. Of course, no prior knowledge is required. Just think of it as a rendering framework that makes 3D models look and feel more realistic in the real world.

- ARKit: You may not have used it, but you must have heard about it at WWDC over the years. It solves spatial awareness problems for us, such as fast plane detection, scene reconstruction after the user’s location changes, image anchors, world tracking and skeleton tracking. These are core to the AR experience, so it’s safe to say that building on ARKit gives your app a robust AR foundation.

- Accessibility: When building visionOS apps, accessibility should be the first consideration. Apple Vision Pro is a device that can be operated entirely with eyes and voice, and some users may also choose a finger, wrist or head for pointer control. These features build on the same technologies used on Apple’s other platforms. If you haven’t paid much attention to them before, now is the time to focus on this topic. In visionOS, accessibility is no longer an optional niche feature, but a first-class citizen of the interaction experience.

Tools to acquire

After frameworks, let’s discuss tools.

- Xcode: At the top of the list is naturally Xcode. Until Xcode 15 is officially released, we can experience visionOS through the simulator in the Xcode beta versions (likely the only way for some time).

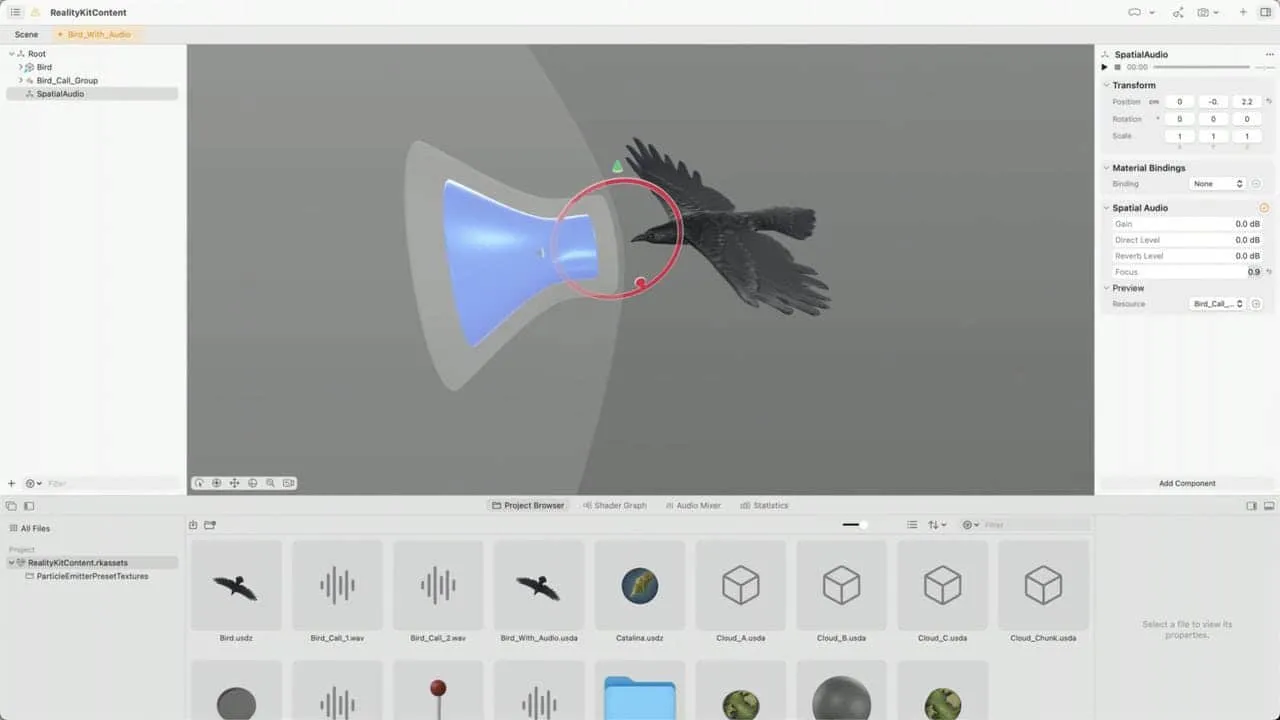

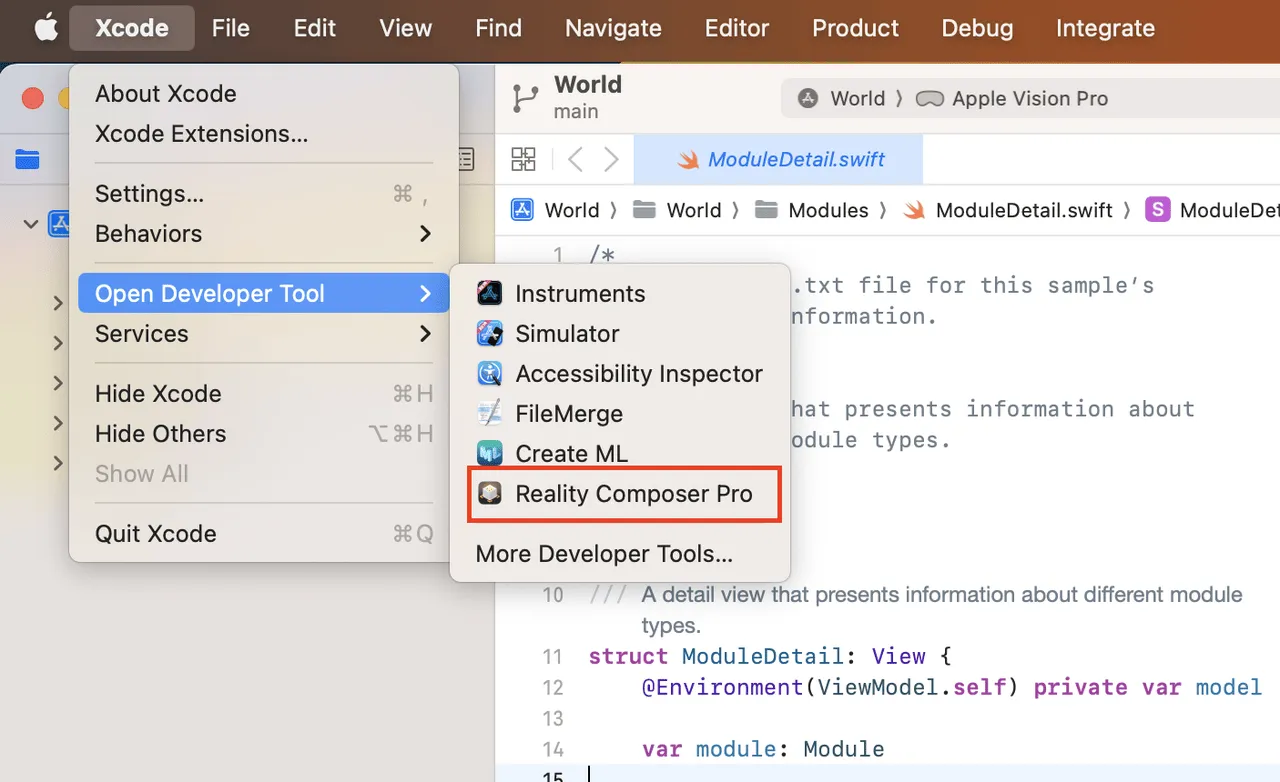

- Reality Composer Pro: This is an enhanced version of the previous Reality Composer. Whether or not you’ve used Reality Composer before, think of it as Interface Builder for 3D content. It is an app you’ll inevitably work with, whether you like it or not.

- Unity: We mentioned earlier that Unity-created 3D content can be loaded into Volumes. For visionOS “entertainment mode”, Unity has obvious innate advantages. Unity has also opened up applications for the Spatial Creation version. For most non-gaming developers, learning Unity is a long-term process, so leave ample time.

How to get started

If you’ve never done AR development before, following some beginner tutorials to complete an AR app with SwiftUI on iPhone should be a good knowledge building process. This can help concretely understand the specific concepts involved in AR development. And this app can also be smoothly ported to visionOS in the future.

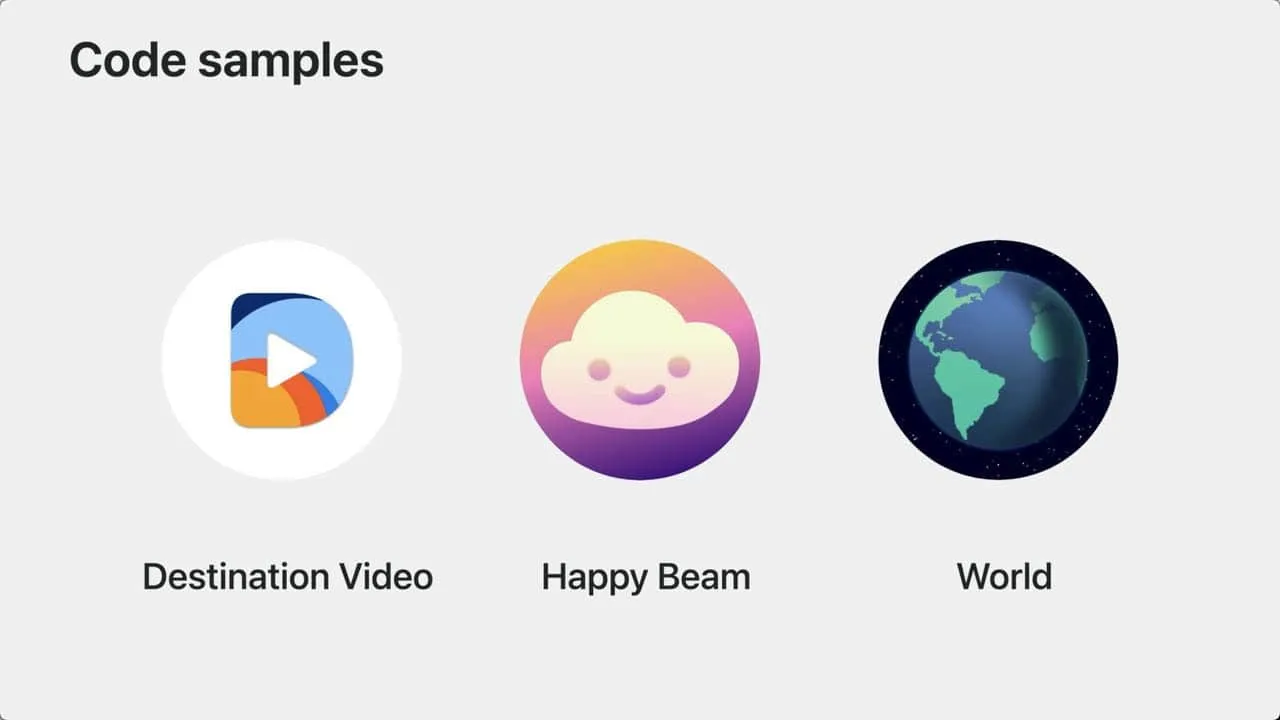

Of course, if you already have some AR experience, this year’s WWDC provides three visionOS demos specifically:

- Destination Video demonstrates playing 3D video in shared space and using spatial audio for immersive effects;

- Happy Beam shows custom gestures in full space for gaming with friends;

- Hello World is the introductory sample for visionOS, covering the basic concepts of visionOS app development. Some screenshots in this article are from this app.

Finally, to facilitate systematic visionOS learning, Apple also provides a visionOS topic page based on WWDC23 content. Sessions are categorized and organized by design, tools, gaming, productivity, web, app migration, etc.

Additionally, we’ve also watched all these sessions and summarized a Session Watching Roadmap based on our understanding, to help better go through these sessions.

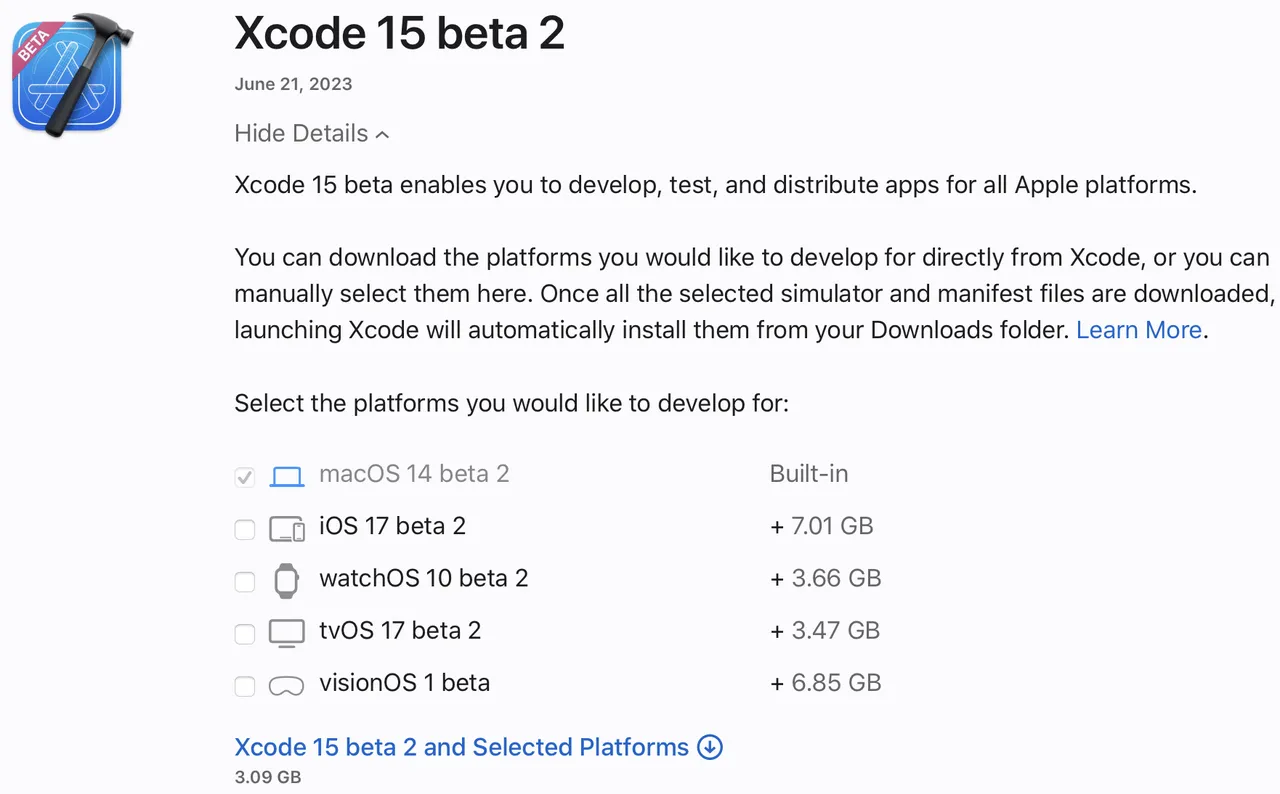

Apple releases Xcode 15 beta 4 with visionOS SDK and simulator

Keywords: Xcode, visionOS, XR, Apple Vision Pro, ARKit, RealityKit

The visionOS SDK and simulator arrived as scheduled by the end of June. Apple’s developer website now offers Xcode 15 beta 4 with the visionOS SDK and Reality Composer Pro. To try the new SDKs, you need to upgrade your system to macOS 13.4 or above and download Xcode 15 (beta 2 is recommended for now, for the reasons in the note below).

Note:

Although the latest Xcode version is beta 4, Swift feature support issues in Xcode 15 beta 3/4 (for example, @Observable in SwiftData) prevent the official visionOS demos from compiling. Xcode 15 beta 2 is therefore still recommended for testing visionOS demos. See the Xcode Release Notes for the differences between versions (Xcode 15 beta 1 does not support the visionOS SDK).

Apple’s app testing platform TestFlight also subsequently enabled submission of visionOS beta apps.

visionOS Introduction Page

Apple launched a dedicated visionOS development introduction page: developer.apple.com/visionos/

The page brings together a basic introduction to visionOS, the development frameworks and tools involved, and downloads for the WWDC23 visionOS sessions and demos.

Vision Pro Simulator

The Vision Pro SDK and simulator are included in Xcode 15 beta 2. To speed up the download, it’s recommended to uncheck the iOS 17 and visionOS 1 SDKs during installation and download them separately afterwards.

Once Xcode and the visionOS SDK have finished downloading, you can get the demos Apple provided at WWDC:

- Hello World: 3D display of the Earth and its satellites

- Diorama: Terrain and landmarks in sandbox mode

- Happy Beam: Make a heart-shaped gesture to eliminate clouds

- Destination Video: Video playback

You can then see interface renderings in the simulator.

Apple also made short clips for each demo. Hello World uses a Window, a Volume and a Space in turn to showcase solar system models.

Diorama shows a simple 3D sandbox with landmarks on it.

Happy Beam demonstrates gesture recognition in a simple monster-battling game.

Destination Video shows a video player use case with an Immersive Space.

New Reality Composer Pro

The new visual editor Reality Composer Pro also arrived with Xcode 15 beta 2. It can be opened from the top-left menu: Xcode > Open Developer Tool > Reality Composer Pro.

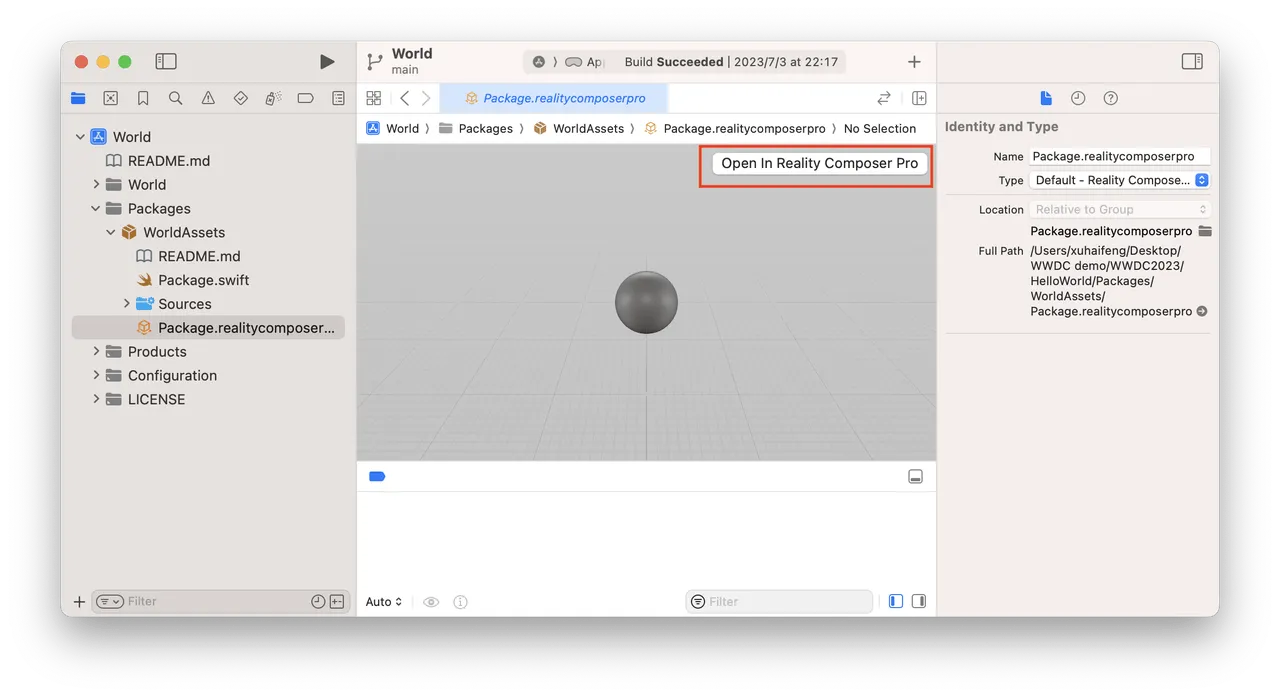

If the open Xcode project already contains Reality Composer Pro files, the tool can also be opened from within Xcode, as in Apple’s Hello World demo project.

The way Reality Composer Pro works with Xcode projects has also changed. Previously, dragging Reality Composer files into Xcode automatically generated Swift code, whereas Reality Composer Pro files are embedded directly as Swift packages.

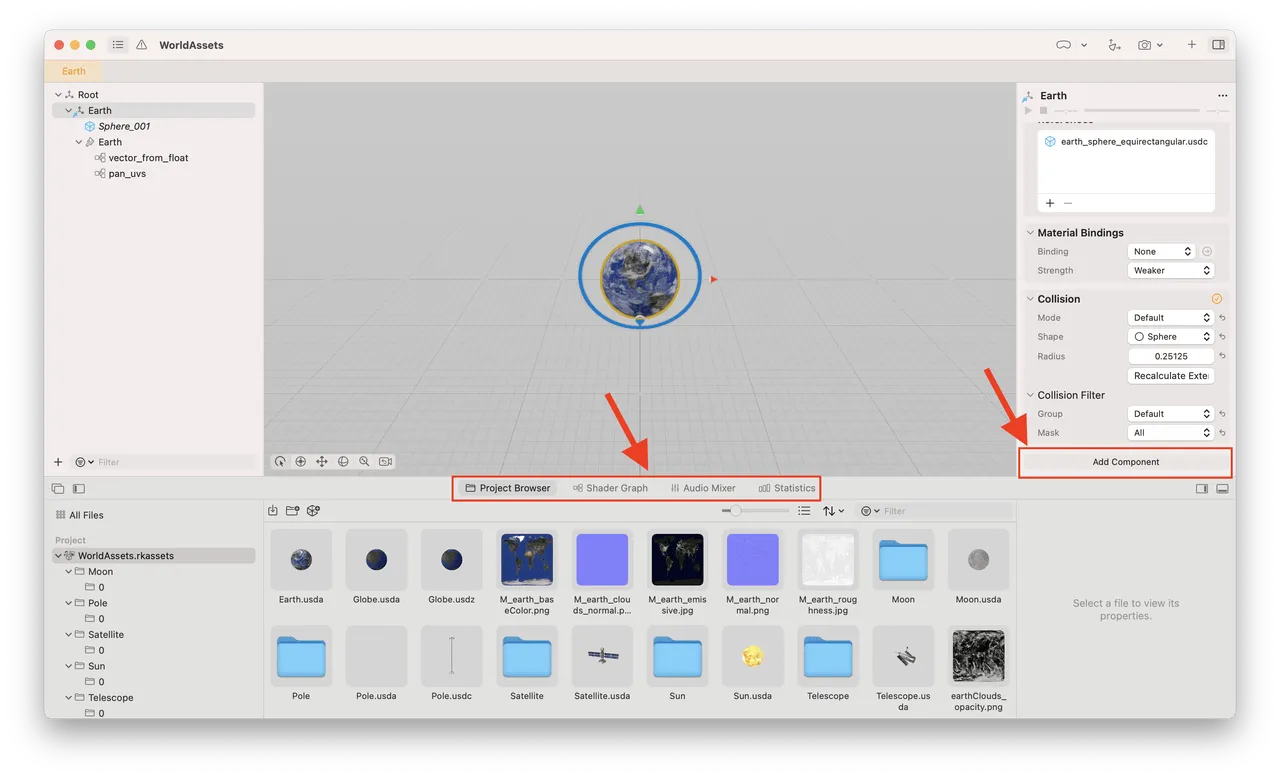

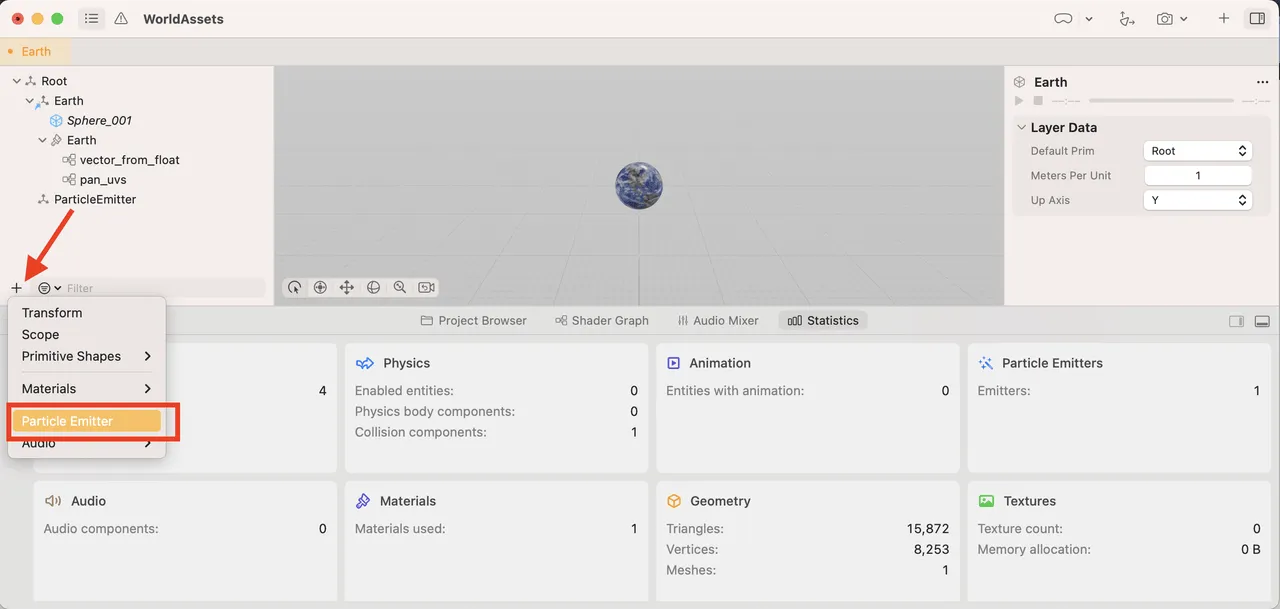

The Reality Composer Pro interface and capabilities have also evolved, and the app now broadly resembles the Unity editor. Most notably, the bottom workspace now has 4 tabs: Project Browser, Shader Graph, Audio Mixer and Statistics. The right sidebar also has a new “Add Component” button for adding custom components written in Xcode.

Another major upgrade is that particle systems, long available in SceneKit, are finally supported.

Tool

Apple Design Resources releases visionOS design drafts

Keywords: Figma, Sketch, visionOS

Every year, after the system updates announced at WWDC, Apple releases matching design resources for designers to use and reference. This year is no exception, and the visionOS material has been published as usual.

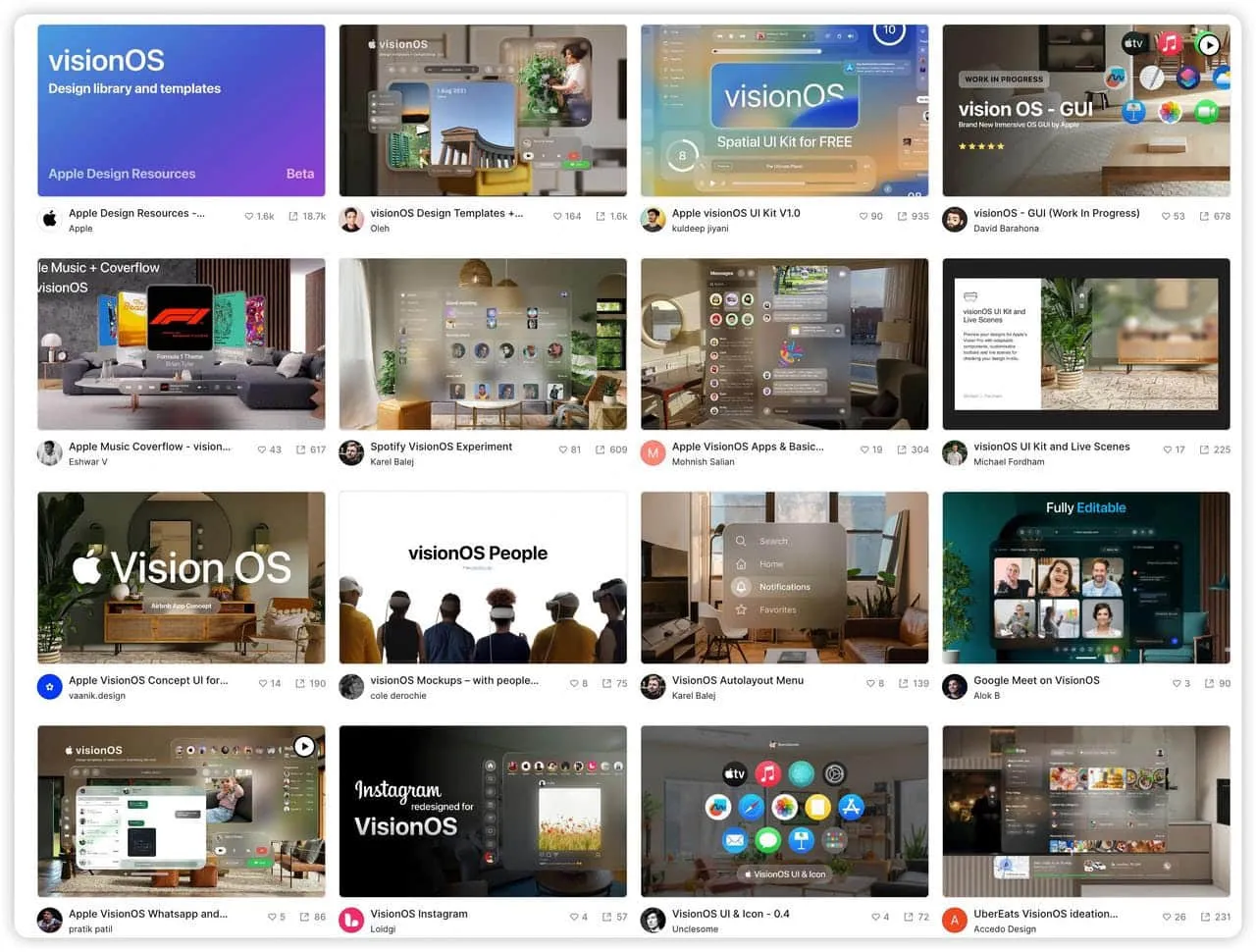

In addition, if you search for visionOS in the Figma Community, you can already see many designers unleashing their “superpowers” to create beautiful visionOS app concepts, most of them highly polished (and only missing the development work).

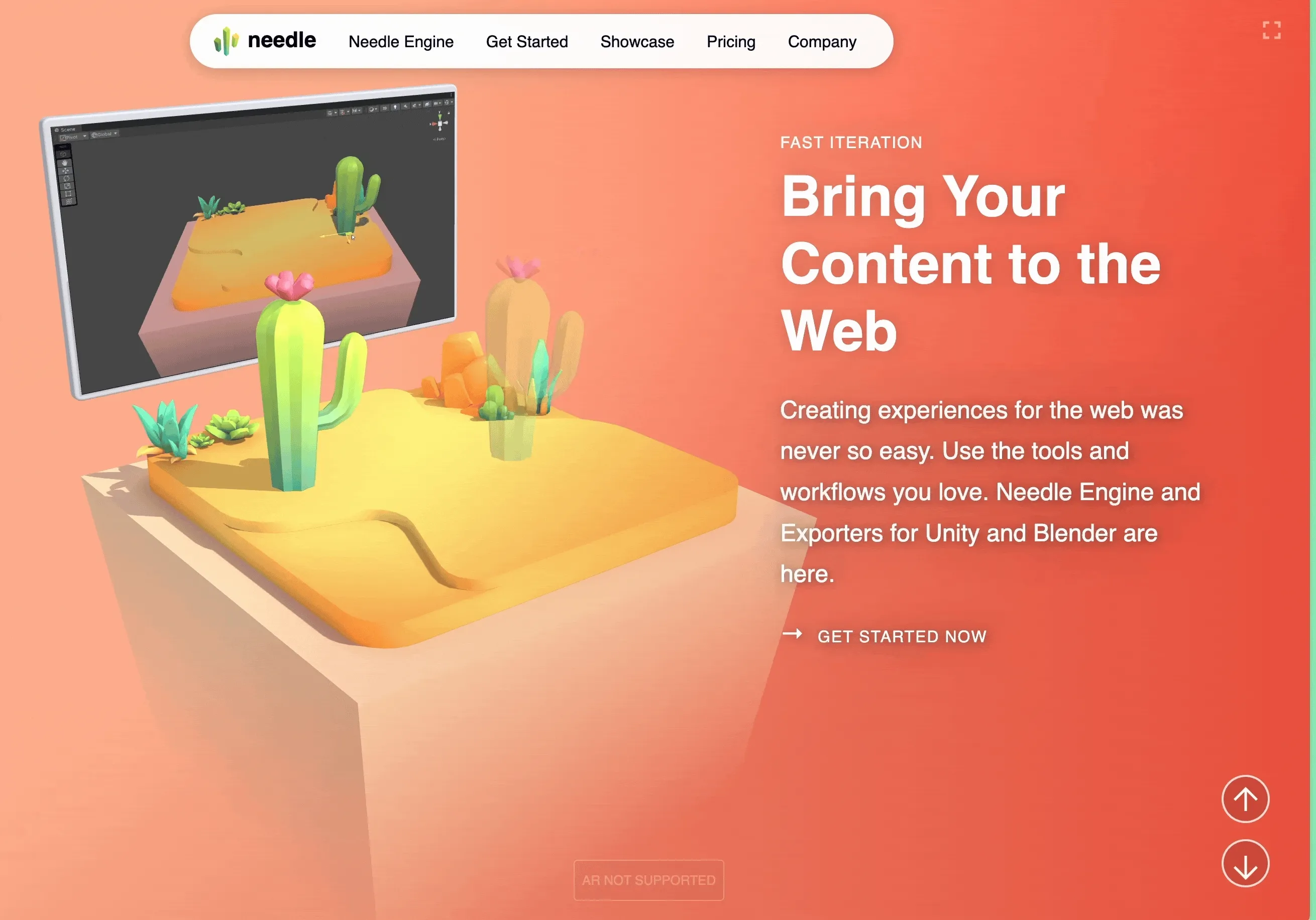

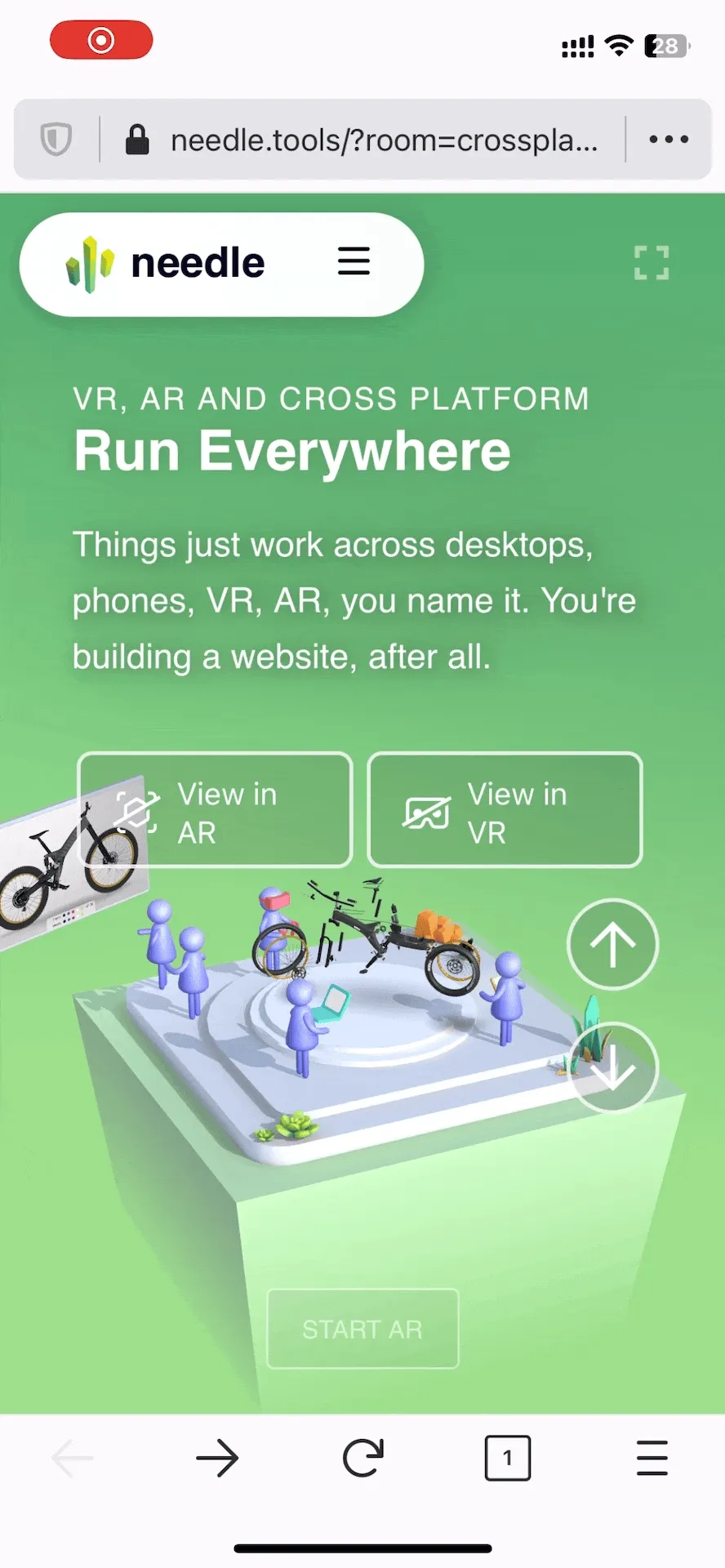

Needle — Web-based, XR-friendly 3D App Runtime

Keywords: Unity, Web

If you are a Unity VR/AR developer, and want to create some web-based projects for easy distribution, then Needle will be a handy tool for you.

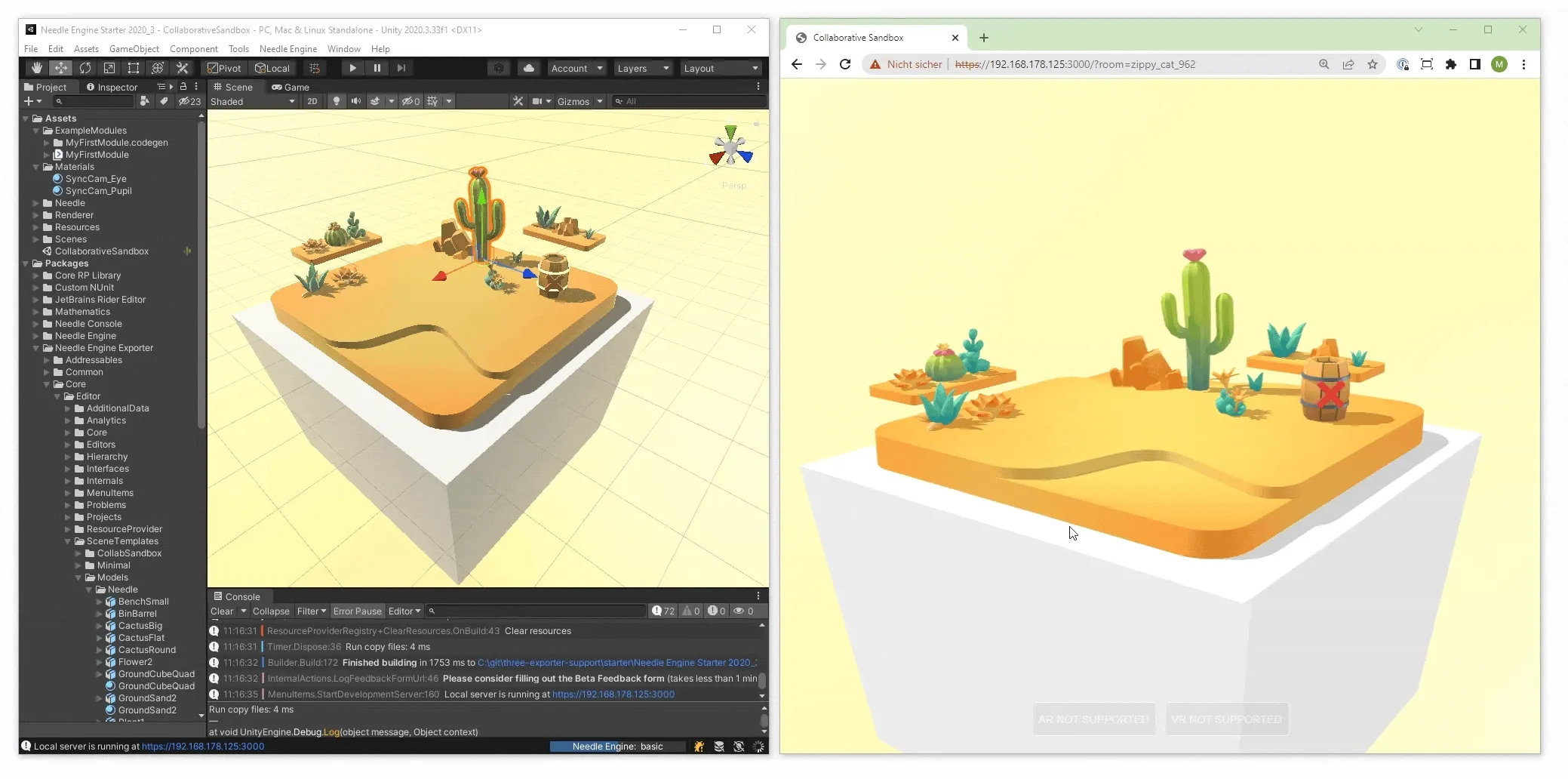

What is Needle? According to their website, Needle is a workflow that helps you bring 3D scenes onto the Web. It’s called a workflow because Needle does not intend to build a new editor, but rather a set of tools for quickly bringing content from your existing creative tools onto the Web. For example, with Needle’s tools you can quickly package a Unity project as a web project and preview it in the browser, and modifications made in Unity are reflected in the browser in real time.

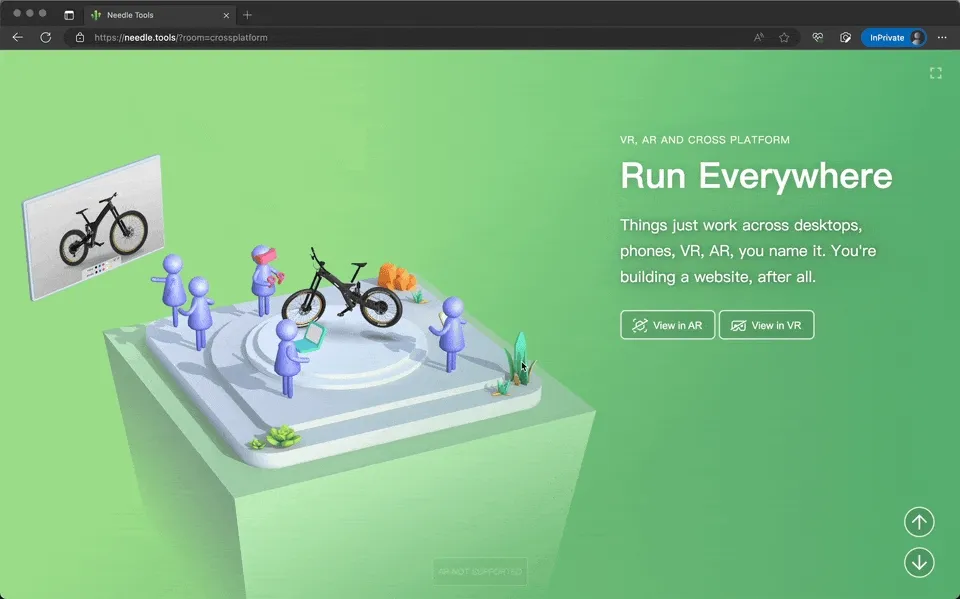

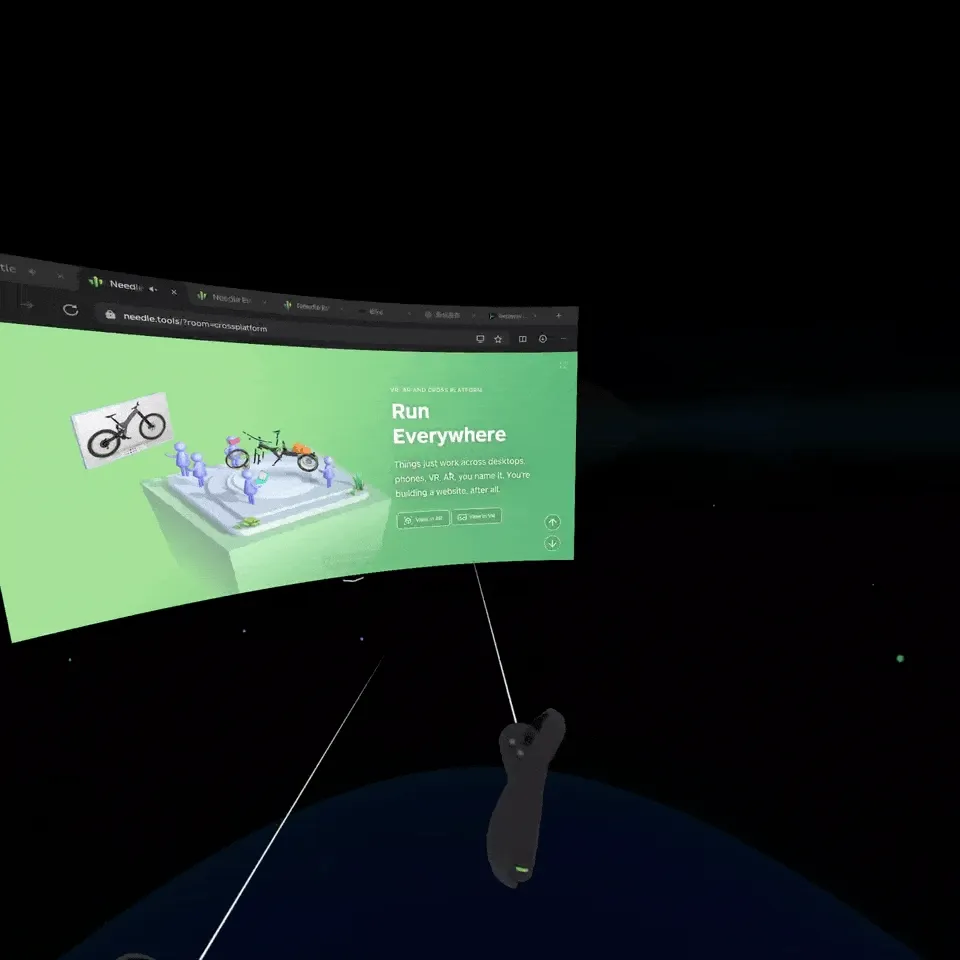

In addition, the Needle team also believes new VR and AR devices will expand the boundaries of the Web. So WebXR support is built into Needle, allowing the same project to run on the Web, on WebXR-enabled VR devices, and in AR on mobile devices.

If you’re interested in Needle’s capabilities, you can get started quickly with their beginner-friendly documentation.

Video

The game engine that changed the world by accident / In-depth analysis: How far has today’s game technology really come?

Keywords: game engines, UE5, popular science

Whether for VR or AR, one key player in building the whole ecosystem is unavoidable: game engines. Simulating a realistic 3D world is exactly what game engines have been steadily improving at, and both VR and AR need it.

So how does a good game engine simulate a realistic 3D world? You’ll get a general idea from these two Bilibili videos.

In the first video, the creator starts with Unreal Engine’s Lumen system, and walks us through the history of computer graphics, as well as the progression of global illumination tech from simple to complex, from classical to modern.

In the second video, the creator not only explains rendering techniques representing realism, but also introduces motion capture for more realistic character animations, physics simulation for more realistic scene interactions, and AI tech for more realistic NPC interactions.

If you’re interested in game development but don’t know where to start, these two videos will help establish basic conceptual understanding.

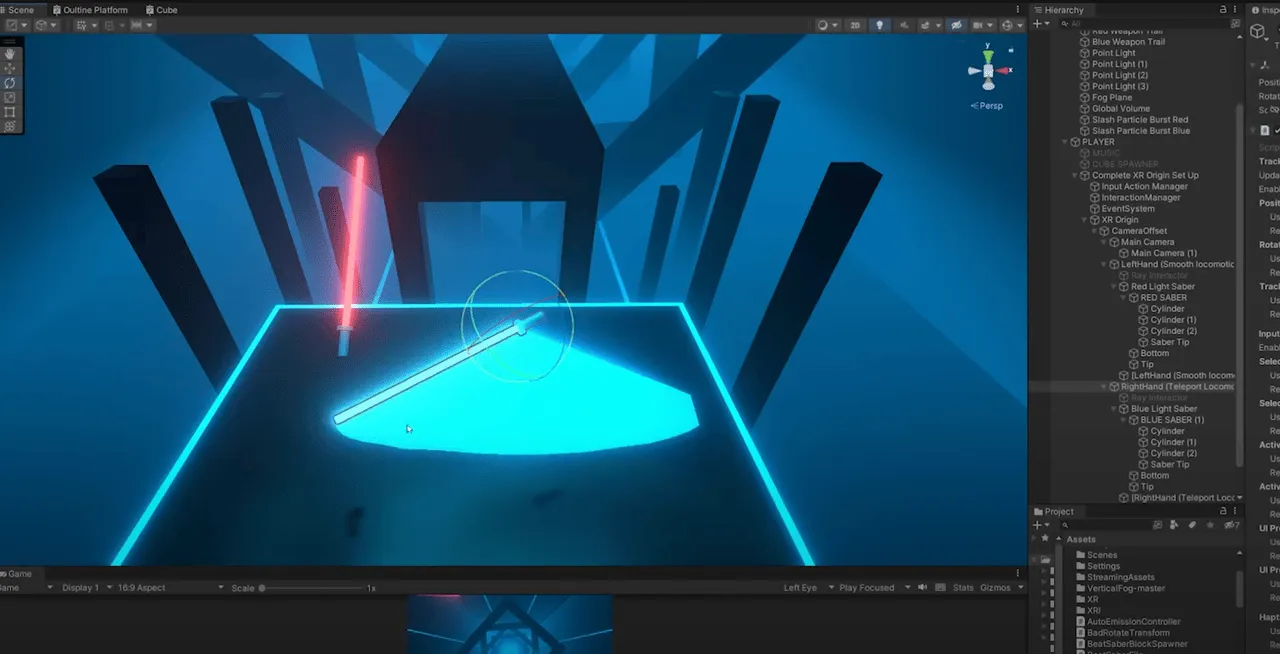

Recreating Lightsabers in Unity with ChatGPT

Keywords: ChatGPT, VR development process

ChatGPT keeps getting more powerful. Recently, the developer Valembois had GPT-4 walk through the entire process of recreating the classic VR game “Beat Saber”, including code, VFX and 3D models. The efficiency of this workflow is striking, and it suggests that technical staff need not only technical skills but also the ability to integrate and leverage resources. It opens up more possibilities for game development and gives technical professionals more room to explore.

They completed this project in 3 days. If you want to see AI capabilities, or make another Beat Saber, check out Valembois’ conversation with ChatGPT here.

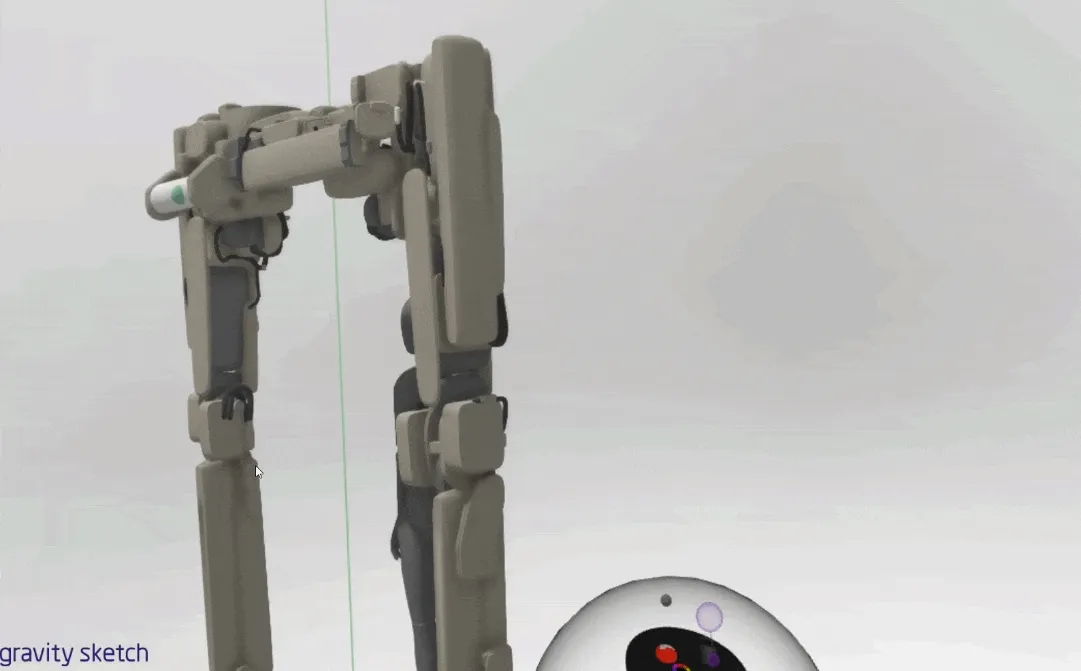

Wood — A Stylish VR Art Piece

Keywords: VR art appreciation, technical implementation

https://www.youtube.com/watch?v=xk6vUPk222A

We’ve probably seen many unique painting styles, but unique VR artists may be less common.

Minhang is a stylistically unique VR artist from Vietnam, whose work is now on par with the output of traditional 3D software in quality and visuals, with an even stronger artistic style. And because VR painting has an inherent sense of presence, the painting process itself is also very interesting, like constructing a brand new world.

In addition to artistic works, VR painting has also garnered appreciation from some creators during prototyping stages. For example, the concept design in the movie “The Wandering Earth 2” utilized VR software. The impressive doorframe robots in the movie leveraged Medium and Gravity Sketch, two VR drawing tools, for early concept exploration. Here’s a detailed article on the design process.

XReality.Zone
