XR World Weekly 019

Cover of this Issue

This issue’s cover features Mike Stang’s creation, Instagram 4 VisionOS. Many apps on Apple Vision Pro still use a single-panel design similar to iPad apps and don’t take full advantage of “space” as a larger “screen”. We hope to see more multi-panel apps like Instagram 4 VisionOS in the future.

Foreword

Hi everyone, surprised to see XR World Guide this Thursday?😉

Yes! So that we can meet you every week, we’re switching from biweekly to weekly updates starting with issue 019. Barring unforeseen circumstances (like typhoons or volcanic eruptions), XR World Guide will arrive every Thursday.

Of course, no one person could keep up a weekly schedule alone, so we’d like to thank all the evangelists at XReality.Zone. If you are passionate about the XR world and want to share its fun through XR World Guide, you’re welcome to join the XReality.Zone evangelist team.

How to join? You can contact us via WeChat (add CallMeOnee) or email ([email protected])~

Without further ado, let’s see what this issue of XR World Guide brings you~

Table of Contents

BigNews

- Let’s visionOS: A playground for visionOS creators, hosted by us at the end of March

Idea

- Bezel: Show Your iPhone in Apple Vision Pro

- Dino: Helping you type Chinese on Apple Vision Pro

Tool

- Unity provides a new visionOS Project Template for developers

- SplineMirror: Now you can view 3D models created in Spline on visionOS

- Spatial: A free command-line tool for Mac to convert between MV-HEVC and regular formats

Code

- HandsRuler: An app for measuring object lengths with both hands is now open source

- ShaderGraphCoder: Writing ShaderGraph with code

- ALVR for Apple visionOS: SteamVR streaming software now supports Vision Pro!

QuickNews x 🔟6️⃣

BigNews

Let’s visionOS: A playground for visionOS creators, hosted by us at the end of March

Keywords: Apple Vision Pro, visionOS Creators

Here it comes! 🌟 The first Let’s visionOS Creator Conference opens in Beijing at the end of March. 🌆 From March 30 to 31, your friends at XReality.Zone invite you to focus on Apple Vision Pro and visionOS and explore new trends in spatial computing. This is more than a gathering: it’s a great opportunity to connect in depth with innovators and pioneers from around the world who love to tinker. You’ll also have the chance to try Apple Vision Pro yourself and get a glimpse of the future📍

The XReality.Zone team has invited many developers from China and abroad🌍 to share their insights on Apple Vision Pro, including:

- Jordi Bruin: A creative independent developer. His app Navi was nominated for an Apple Design Award in 2022 and transforms communication with real-time translated subtitles for video calls.

- Devin Davies: An outstanding iOS developer. His visionOS recipe app Crouton was praised by The Wall Street Journal for its unique AR timer feature, and his playful AR stress-relief app Plate Smasher, which lets you smash plates, further shows his talent for app innovation.

- Hidde van der Ploeg: A product designer with ten years of experience. His visionOS app NowPlaying gives Apple Vision Pro users an upgraded music playback experience and has become a standout app featured by the App Store.

- Adam Watters: A pioneer in game development. His visionOS app, built with the Godot game engine, shows the developer community a wider range of development options and opens a new path for building high-quality apps with non-mainstream engines.

- Hai Xin and A Wen: Leading AI visual artists in China and the first AI spatial video creators to appear on the Spring Festival Gala stage.

Over these two days, we will explore the many possibilities that visionOS opens up. If you are:

- Engineer: You will explore the cutting-edge technology, challenges, and solutions of the new platform and broaden your technical horizons.

- Designer: You will explore new design concepts in spatial computing, draw inspiration from peers on five continents, and boost your creativity.

- Entrepreneur: You will connect with emerging partners in the early days of the new platform and look for inspiration and business opportunities.

- Tech enthusiast: You will stay at the technological forefront, expand your horizons, and ignite your passion and drive to innovate for the future.

🌟 Embrace an international perspective and seize overseas opportunities: join us in Beijing on March 30 to 31 to open a new chapter of technological innovation. 🚀 Click the link to secure your spot and step into the new era of spatial computing.

Idea

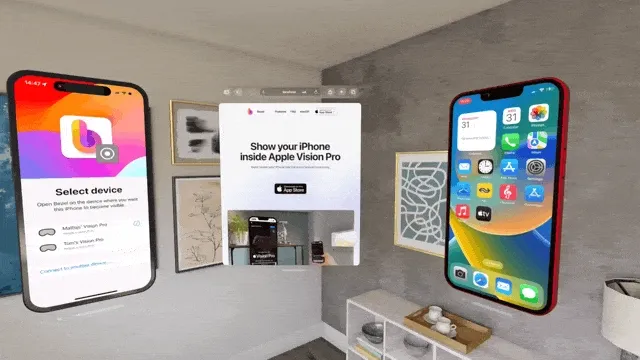

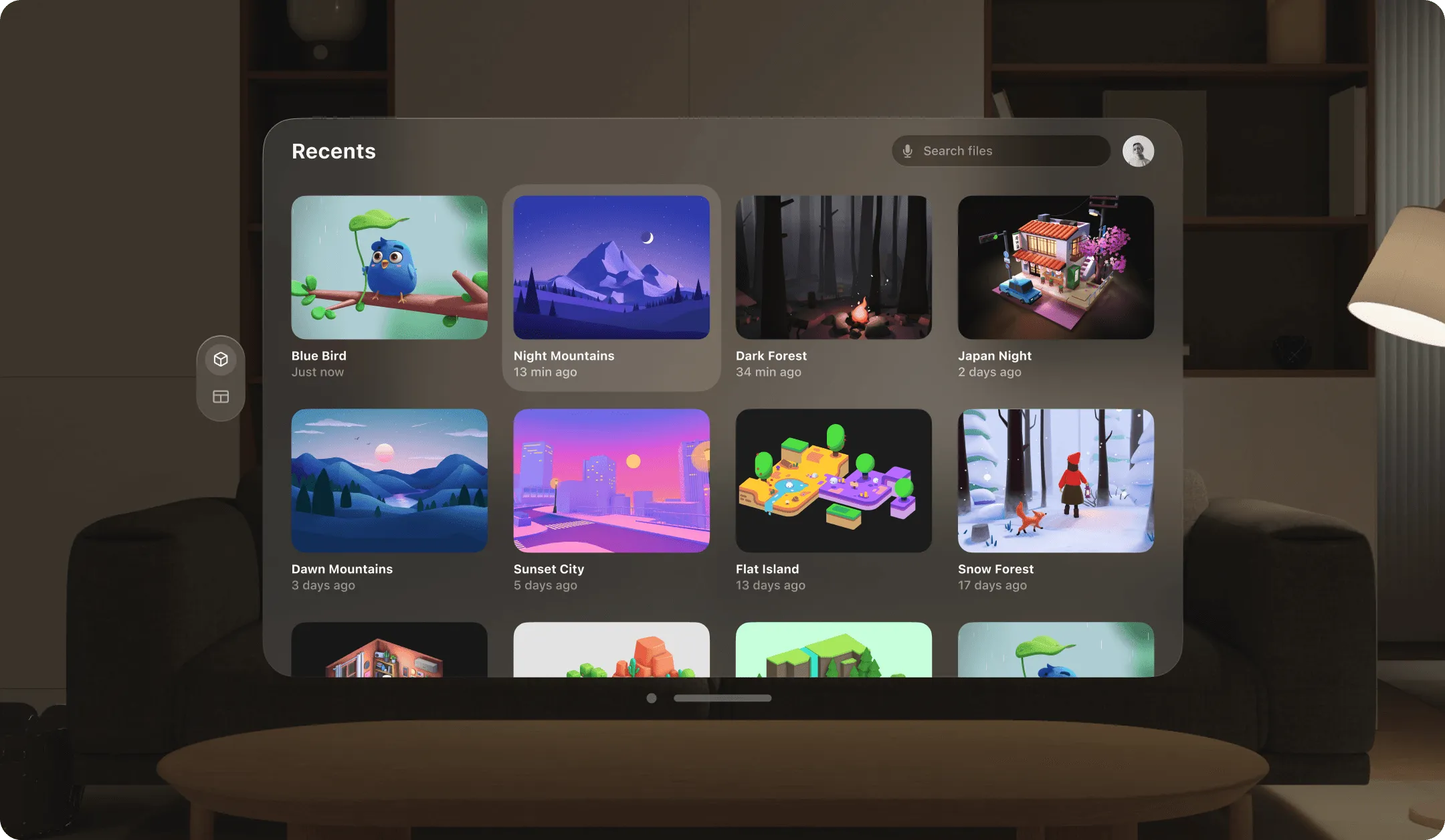

Bezel: Show Your iPhone in Apple Vision Pro

Keywords: Apple Vision Pro, iPhone, Mirror

Bezel is an app that mirrors your iPhone screen on your Mac. It recently launched an Apple Vision Pro version that shows your iPhone in its own window.

If you want to use apps that are currently only available on iPhone alongside other windows on Apple Vision Pro, as shown in the images above, give this app a try.

And just like the Mac app, Bezel offers beautifully crafted iPhone frames, only this time they are fully 3D!

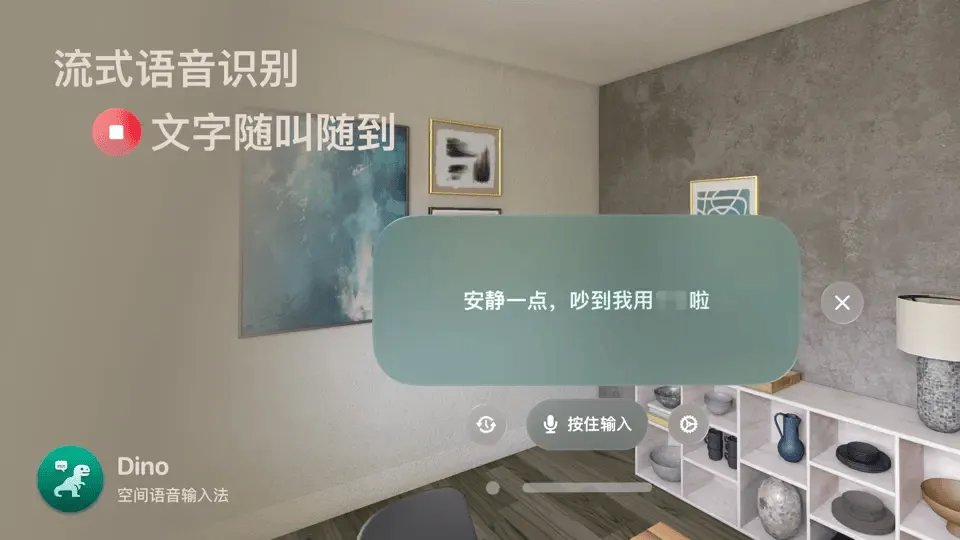

Dino: Helping you type Chinese on Apple Vision Pro

Keywords: Apple Vision Pro, Chinese Input

Even though Apple Vision Pro has not yet launched in mainland China, Chinese developers and consumers are as enthusiastic about it as ever🤏.

However, once people get their Apple Vision Pro, they find that visionOS currently offers neither a Chinese input method nor voice recognition for Chinese, which makes typing Chinese on the device very difficult.

Dino was built to solve this problem. With Dino, you can enter Chinese by voice, even offline. When you finish, Dino automatically copies the text to the clipboard, and you can also drag and drop it into any text field.

Kudos to the author, Ciyou, for the idea and for making it happen👍.

Tool

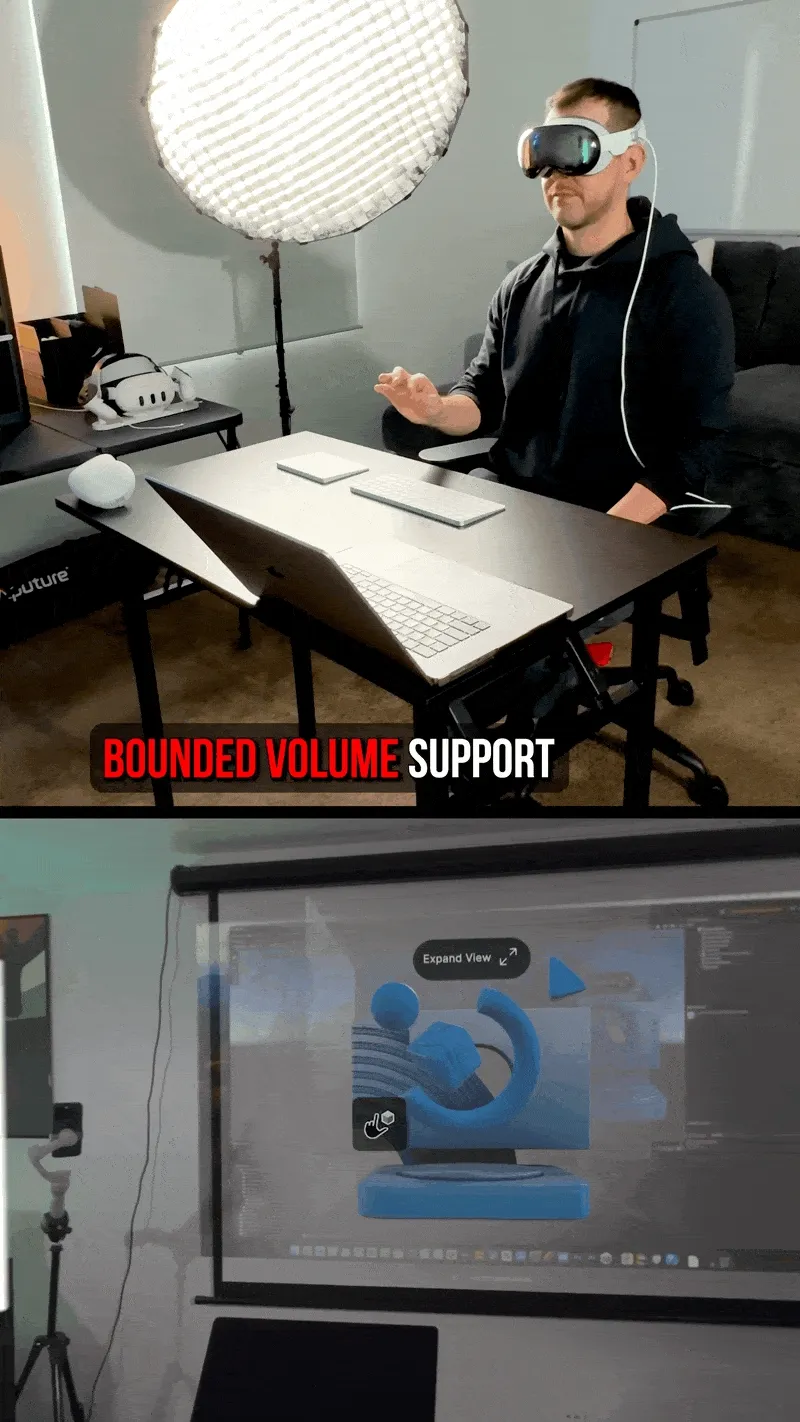

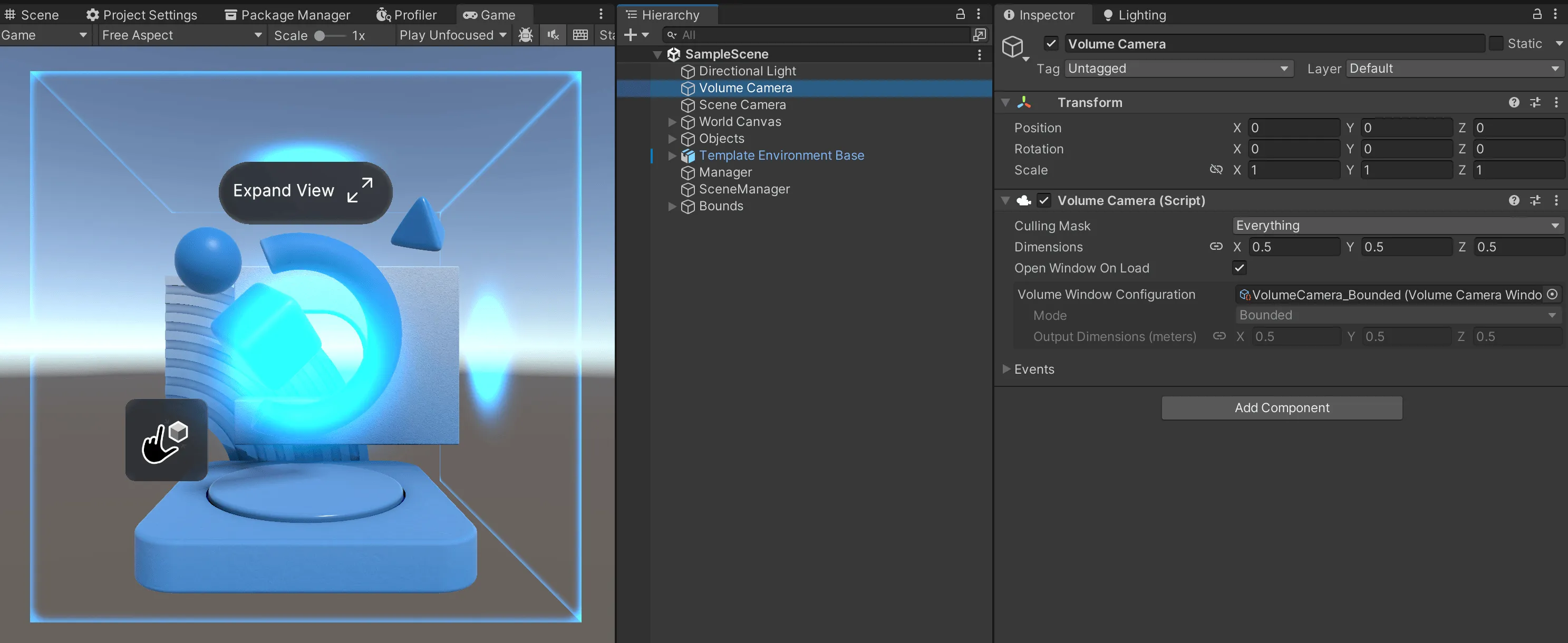

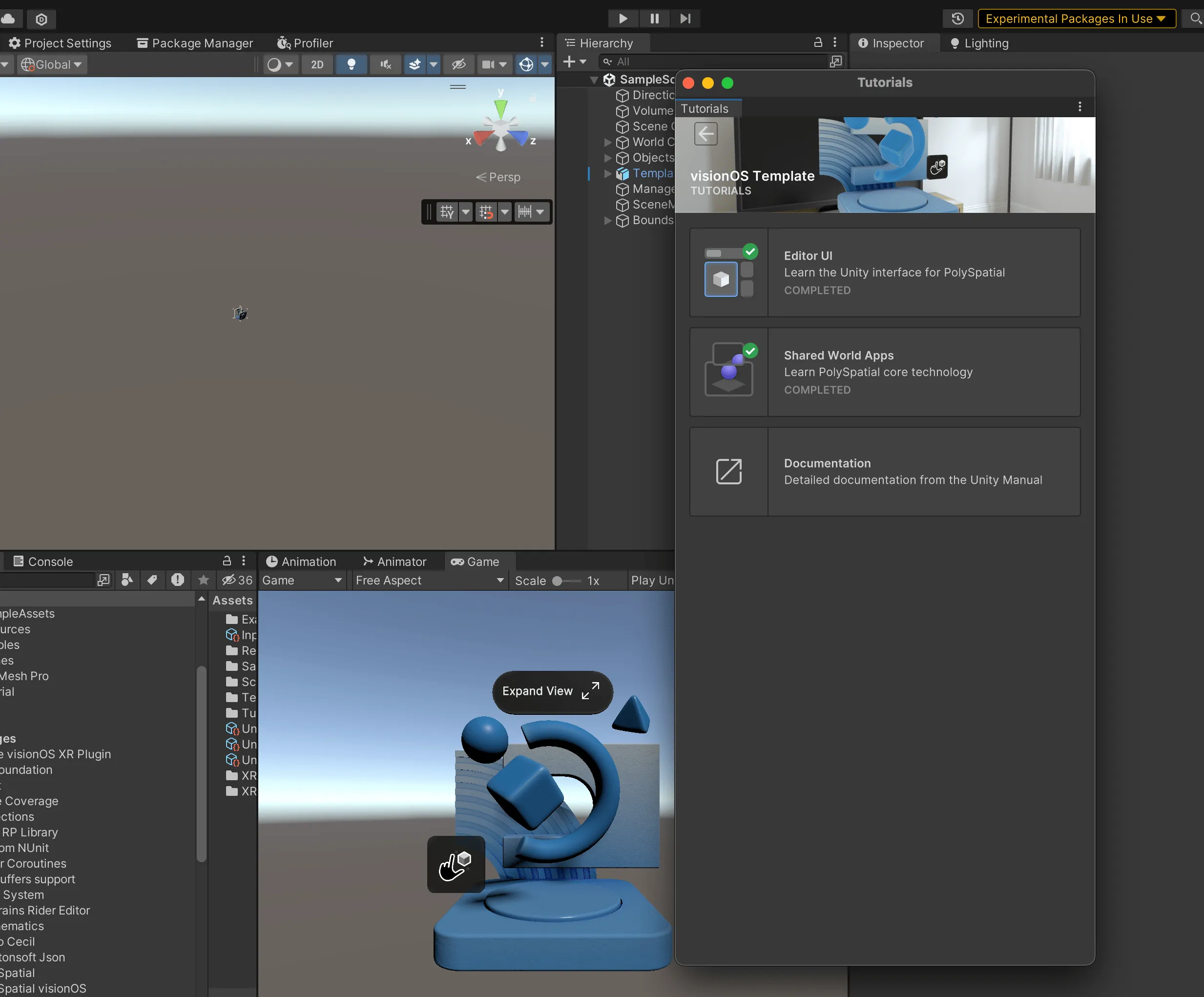

New visionOS Project Template from Unity for Developers

Keywords: Unity, visionOS

Unity now offers a visionOS Template Project for developers looking to start developing apps on visionOS. Dilmer showcased some demos running on Apple Vision Pro in his video.

This Template includes a Shared Space scene and a Full Space scene.

In native visionOS development, we typically place an app’s 3D content in a Volumetric Window when running in the Shared Space. In PolySpatial, the VolumeCamera component achieves the same effect. As with a native Volumetric Window, the Dimensions parameter of VolumeCamera sets the physical size of the 3D volume in mixed reality. When the VolumeCamera mode is set to Bounded, as the name suggests, the 3D content is displayed inside a volume of fixed size. When the mode is changed to Unbounded, the app switches from the Shared Space to a Full Space, and its 3D content is no longer confined to a volume, entering an immersive mode.
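To make the Bounded/Unbounded distinction concrete, here is a minimal pure-Swift model. It is not PolySpatial’s API, which is C# in Unity. The types VolumeMode and AppSpace and both helper functions are hypothetical. The model assumes a bounded volume is an axis-aligned box centered at the origin, with dimensions in meters, and that content outside the box is hidden.

```swift
// Hypothetical model of the VolumeCamera modes described above.
// Not the PolySpatial API: names and behavior are illustrative assumptions.

/// Which space the app runs in.
enum AppSpace: Equatable {
    case shared
    case full
}

/// Simplified stand-in for a VolumeCamera configuration.
enum VolumeMode {
    /// Content is confined to a box with these dimensions (meters).
    case bounded(dimensions: SIMD3<Float>)
    /// Content is not confined; the app takes over a Full Space.
    case unbounded
}

/// Bounded volumes live in the Shared Space; Unbounded switches to a Full Space.
func space(for mode: VolumeMode) -> AppSpace {
    switch mode {
    case .bounded: return .shared
    case .unbounded: return .full
    }
}

/// Returns true if a point (meters, relative to the volume center) would be
/// visible under this model. Assumes an axis-aligned box centered at the origin.
func isVisible(_ point: SIMD3<Float>, in mode: VolumeMode) -> Bool {
    switch mode {
    case .unbounded:
        return true
    case .bounded(let dimensions):
        let half = dimensions / 2
        // The point must lie within the half-extent on every axis.
        return abs(point.x) <= half.x && abs(point.y) <= half.y && abs(point.z) <= half.z
    }
}
```

For example, with a 1 m cube, a point 0.4 m from the center is inside the volume and a point 0.6 m away is not. In the Unbounded case the check always passes.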

In the template’s Bounded scene, you can move the floating blocks and other 3D objects using both indirect interaction (look and pinch from a distance) and direct interaction (reaching out and touching). This scene also shows how to reproduce visionOS’s native interaction patterns in Unity. You can switch between the template’s two scenes at runtime: by loading scenes dynamically or changing the VolumeCamera mode, the app moves between the Shared Space and a Full Space. The Unbounded scene demonstrates plane detection and hand gesture recognition.

In addition, the template project includes an interactive tutorial that walks you through PolySpatial’s development components step by step.

If you want to get started quickly with visionOS development in Unity, don’t miss this template project~

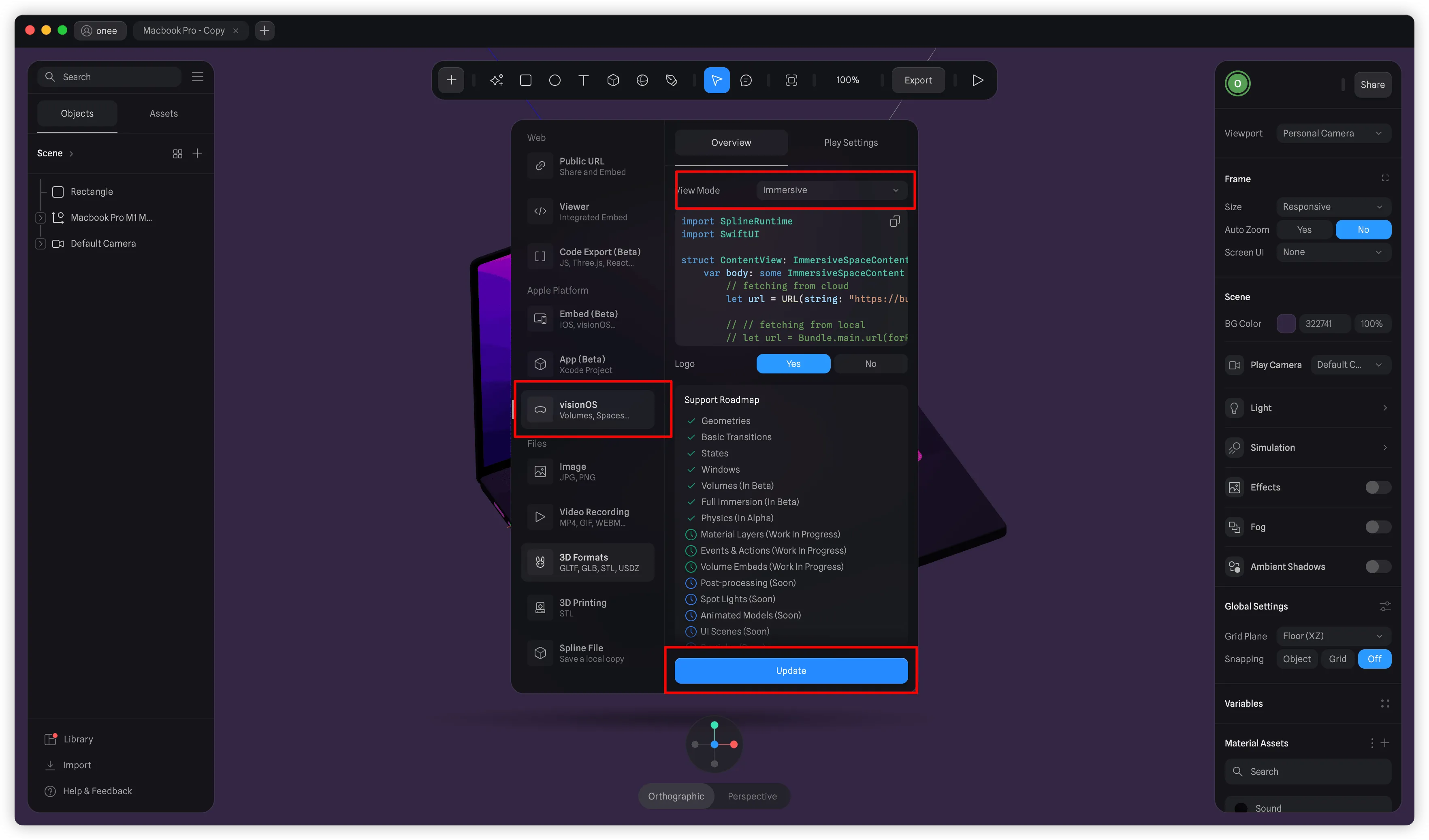

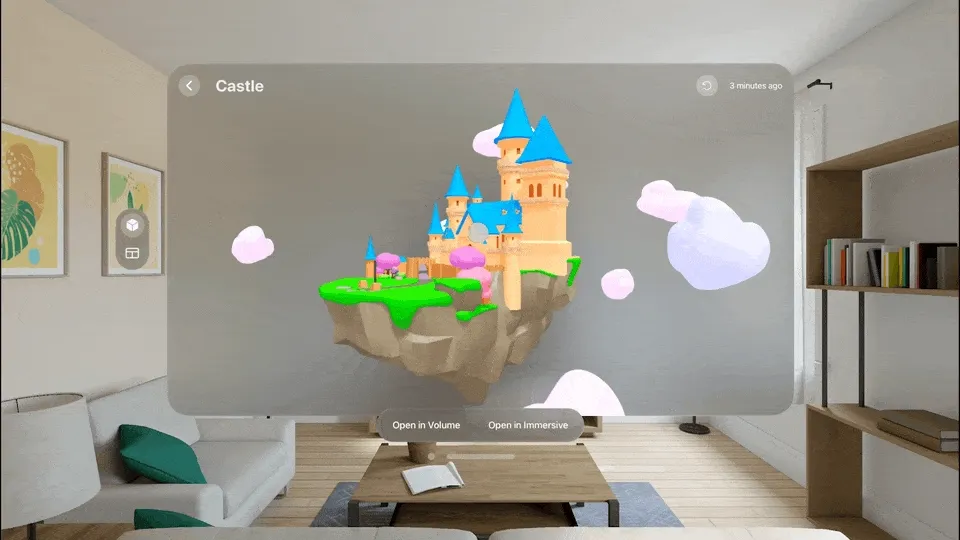

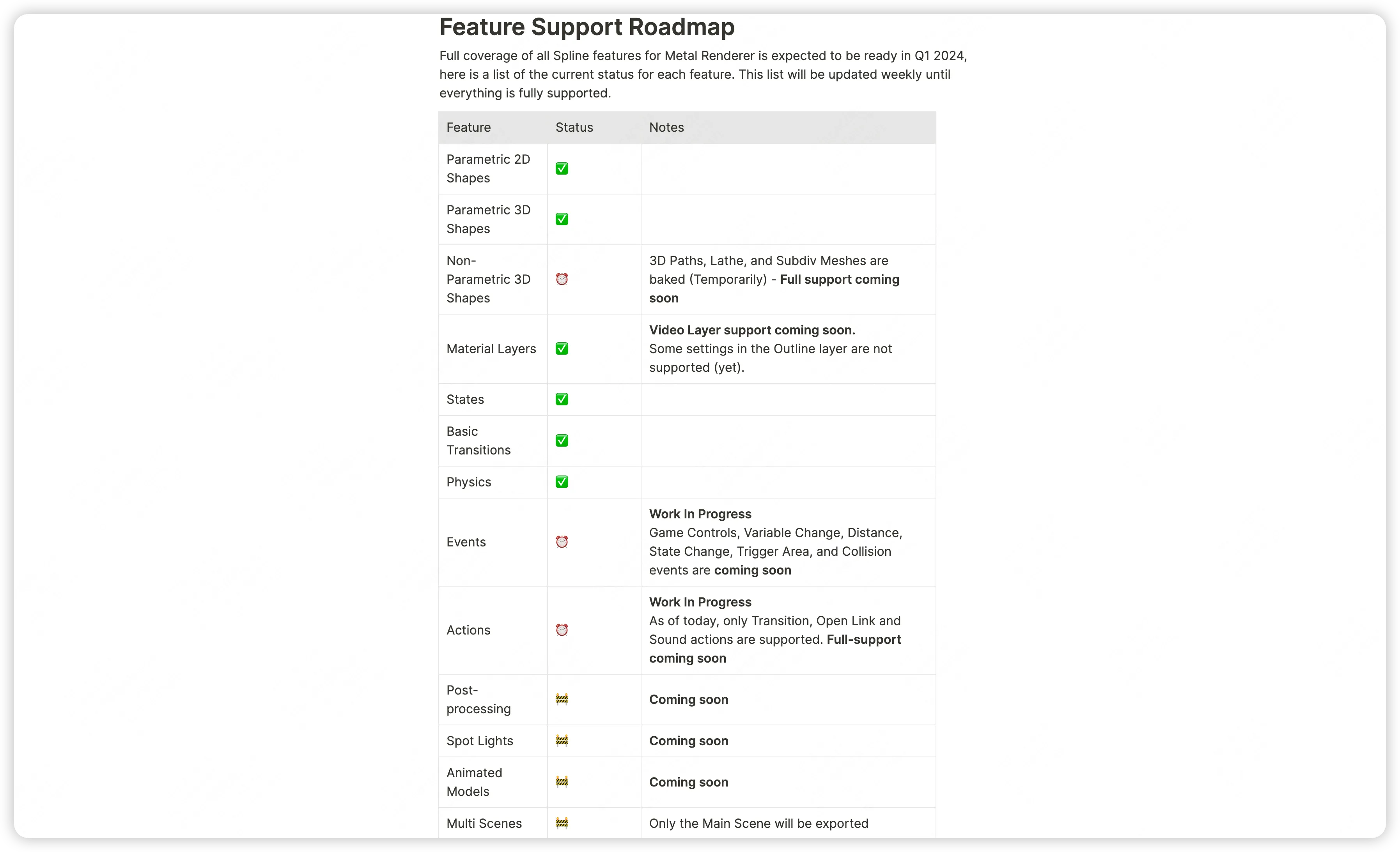

SplineMirror: View 3D Models from Spline on visionOS

Keywords: Spline, 3D Editor, visionOS

As a 3D artist, once you’ve finished a creation you’re proud of in the editor, it’s a thrill to view it in true 3D.

Spline Mirror on Apple Vision Pro now makes this possible. After you finish editing a scene in the Spline editor, click Export -> visionOS -> Update to export the scene in a format suitable for previewing on visionOS.

Next, open Spline Mirror on Apple Vision Pro, and the scene appears under Recent.

Select the scene to view the model in three different forms: Window, Volume, and Immersive Space.

At this stage, Spline Mirror does not support every feature of Spline files. According to the official documentation, many features, such as interactive events and scene-wide post-processing, are not yet supported.

Still, previewing 3D models on Apple Vision Pro greatly improves the experience for Spline users. We look forward to further updates from Spline~

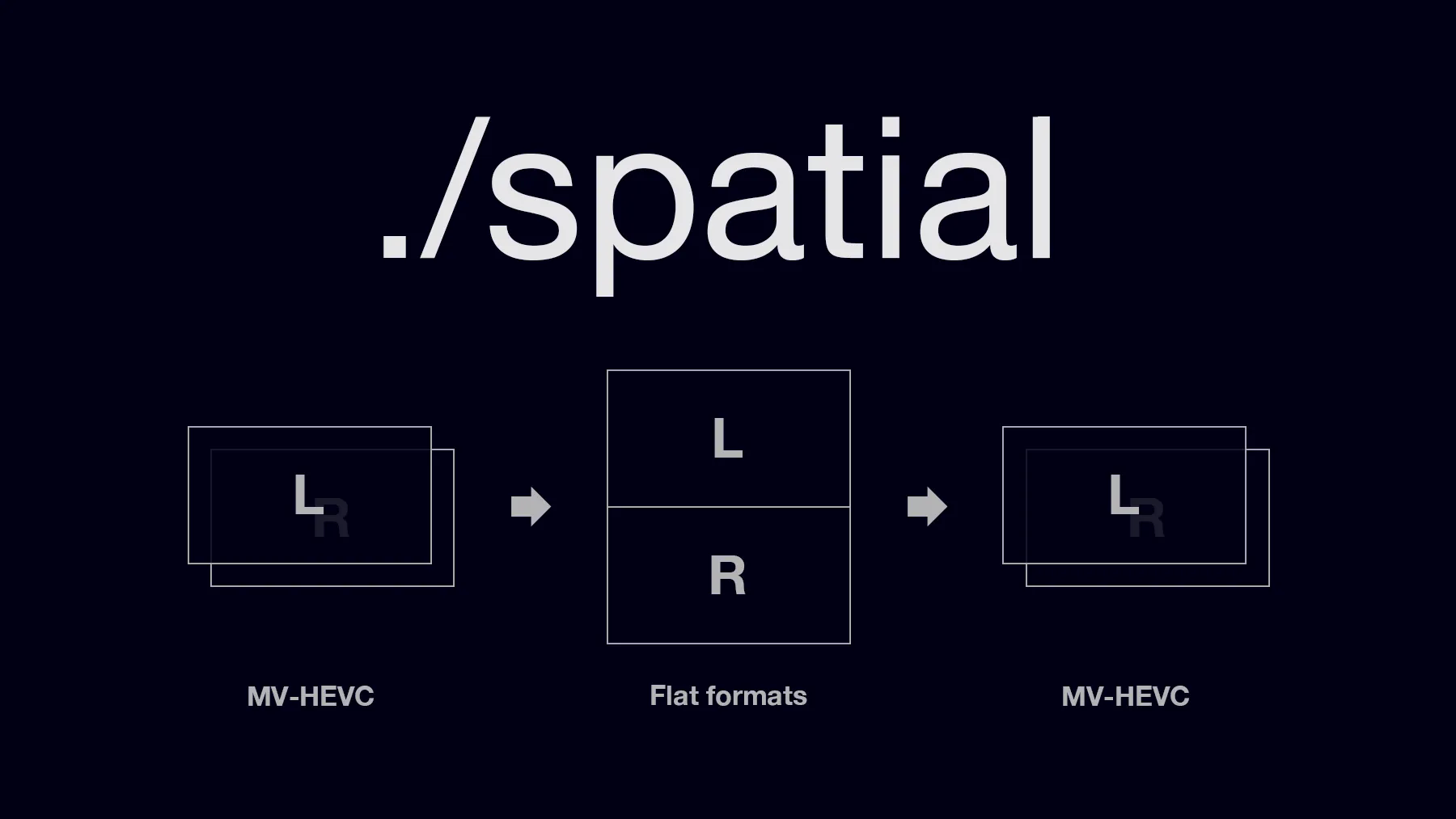

Spatial: Free Command-Line Tool for Converting MV-HEVC on Mac

Keywords: Spatial Video, MV-HEVC

In previous issues we recommended many tools for Apple spatial video, such as HaloDepth, Owl3D, and Depthify.ai in issue 018.

This time we recommend spatial, a Mac command-line tool that makes it easy to convert between spatial videos and regular stereo videos such as SBS (side-by-side). If you’re unfamiliar with SBS, see What is Spatial Video on iPhone 15 Pro / Apple Vision Pro? for a quick review.

The usage is very simple. If you have Homebrew installed, just execute the following command in the terminal:

```shell
brew install spatial
```

Once installed, it provides four subcommands:

- info: Get information about an MV-HEVC file

- export: Convert an MV-HEVC file into a regular stereo video (such as SBS)

- make: Convert a regular stereo video (such as SBS) into an MV-HEVC file

- metadata: Get and modify the metadata in an MV-HEVC file

For example, the following command converts an MV-HEVC spatial video into a regular stereo video. Here -f ou selects an over-under layout (one view stacked above the other) rather than side-by-side:

```shell
spatial export -i spatial_test.mov -f ou -o over_under.mov
```

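The following command converts that over-under video back into an MV-HEVC spatial video. The extra flags supply stereo metadata that a regular video lacks: camera baseline (--cdist), horizontal field of view (--hfov), horizontal disparity adjustment (--hadjust), primary eye (--primary), and projection type (--projection):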

```shell
spatial make -i over_under.mov -f ou -o new_spatial.mov --cdist 19.24 \
  --hfov 63.4 --hadjust 0.02 --primary right --projection rect
```
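The -f flag in these commands chooses how the two views are packed into one ordinary frame. The hypothetical helper below illustrates the geometry of side-by-side versus over-under packing by computing where each view sits in a packed frame. StereoLayout, PixelRect, and viewRects are invented names and are not part of spatial. The sketch assumes both views are the same size and that each fills exactly half the frame. It labels the views only as first and second, because it does not decide which eye comes first.

```swift
/// Hypothetical packing layouts for a stereo frame (illustrative, not the tool's code).
enum StereoLayout {
    case sideBySide   // views placed left and right
    case overUnder    // views stacked top and bottom
}

/// A pixel rectangle whose origin is the top-left corner of the frame.
struct PixelRect: Equatable {
    var x: Int
    var y: Int
    var width: Int
    var height: Int
}

/// Splits a packed frame into the rectangles of its first and second view.
/// Assumes equal-sized views and an even size along the split dimension.
func viewRects(frameWidth: Int, frameHeight: Int,
               layout: StereoLayout) -> (first: PixelRect, second: PixelRect) {
    switch layout {
    case .sideBySide:
        let w = frameWidth / 2
        return (PixelRect(x: 0, y: 0, width: w, height: frameHeight),
                PixelRect(x: w, y: 0, width: w, height: frameHeight))
    case .overUnder:
        let h = frameHeight / 2
        return (PixelRect(x: 0, y: 0, width: frameWidth, height: h),
                PixelRect(x: 0, y: h, width: frameWidth, height: h))
    }
}
```

For instance, a 1920 × 2160 over-under frame splits into two 1920 × 1080 views, with the second view starting at row 1080.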

Although Quest can play MV-HEVC spatial videos directly as of v62, this command-line tool remains very practical when you need to process a large number of video files. Many thanks to the author, Mike Swanson, for such a convenient tool!
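For batch jobs like these, one option is to drive the CLI from a small Swift script. The sketch reuses the export flags from the example above (-i, -f ou, -o). The function names and the "_ou.mov" output naming scheme are hypothetical choices. It runs the binary through /usr/bin/env, which assumes spatial is on your PATH (for example, after the Homebrew install). By default it only prints the commands it would run.

```swift
import Foundation

/// Builds the arguments for `spatial export` using the same flags as the
/// example above. The "_ou.mov" naming scheme is a hypothetical choice.
func exportArguments(for input: String) -> [String] {
    let base = NSString(string: input).deletingPathExtension
    return ["export", "-i", input, "-f", "ou", "-o", base + "_ou.mov"]
}

/// Runs one `spatial` invocation through /usr/bin/env so the binary is found
/// on PATH, and returns its exit status.
func runSpatial(_ arguments: [String]) throws -> Int32 {
    let process = Process()
    process.executableURL = URL(fileURLWithPath: "/usr/bin/env")
    process.arguments = ["spatial"] + arguments
    try process.run()
    process.waitUntilExit()
    return process.terminationStatus
}

// Dry run: print the command for each input file.
let inputs = ["trip/day1.mov", "trip/day2.mov"]
for input in inputs {
    print((["spatial"] + exportArguments(for: input)).joined(separator: " "))
    // To actually convert, call: _ = try runSpatial(exportArguments(for: input))
}
```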

Code

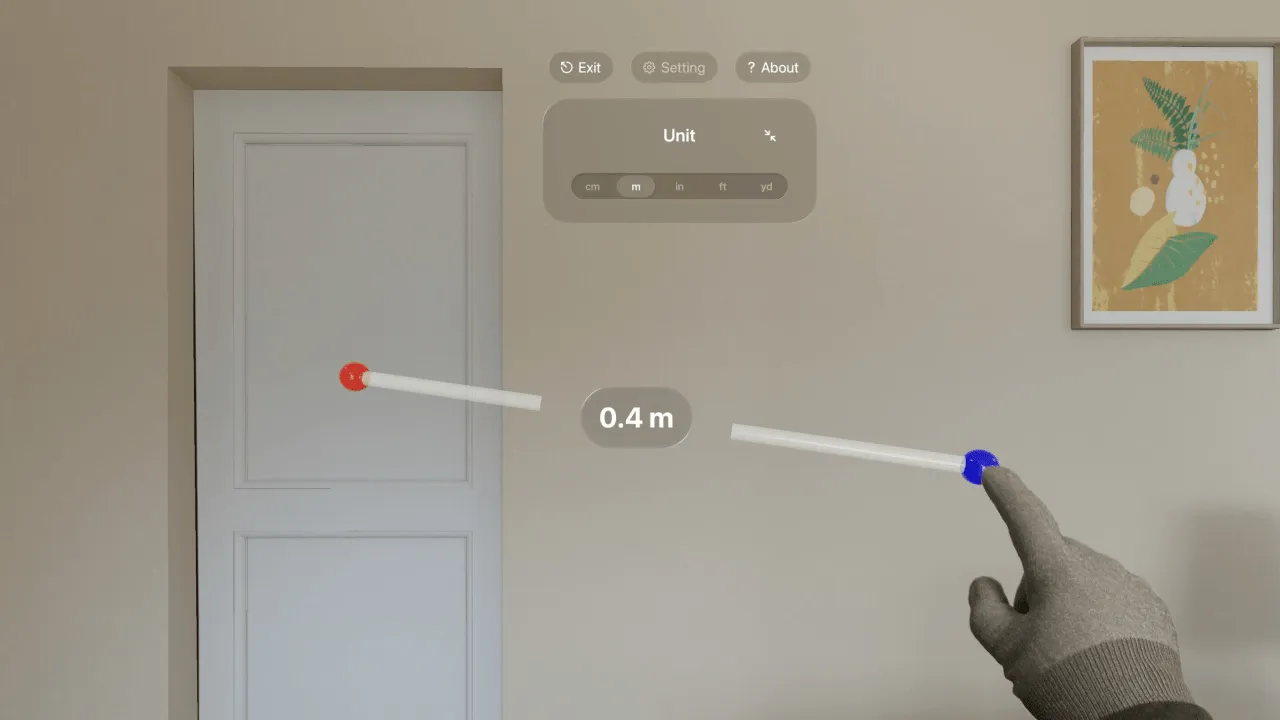

HandsRuler: Open-Source App for Measuring Object Lengths with Hands

Keywords: Ruler, visionOS

HandsRuler is a free app that uses hand tracking to measure lengths, and it is now available on the App Store. The author has also open-sourced the project code for everyone to learn from, available at HandsRuler. If you are interested in visionOS development, this codebase is a good reference for the basics of building and shipping a visionOS app.
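At its core, a two-handed ruler computes the Euclidean distance between two tracked 3D points. This pure-Swift sketch is not taken from the HandsRuler source. It assumes you already have the two positions in meters, such as the two index fingertips (on visionOS these would come from hand tracking), and it formats the result in centimeters. The function names are hypothetical.

```swift
/// Hypothetical sketch: straight-line length between two tracked points,
/// e.g. the left and right index fingertips, both given in meters.
func distance(_ a: SIMD3<Float>, _ b: SIMD3<Float>) -> Float {
    let d = a - b
    // sqrt(dx² + dy² + dz²)
    return (d * d).sum().squareRoot()
}

/// Formats a length in meters as centimeters rounded to one decimal place.
func centimeters(_ meters: Float) -> String {
    let cm = (meters * 1000).rounded() / 10
    return "\(cm) cm"
}

let leftTip = SIMD3<Float>(0, 0, 0)
let rightTip = SIMD3<Float>(0.3, 0.4, 0)
print(centimeters(distance(leftTip, rightTip)))
```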

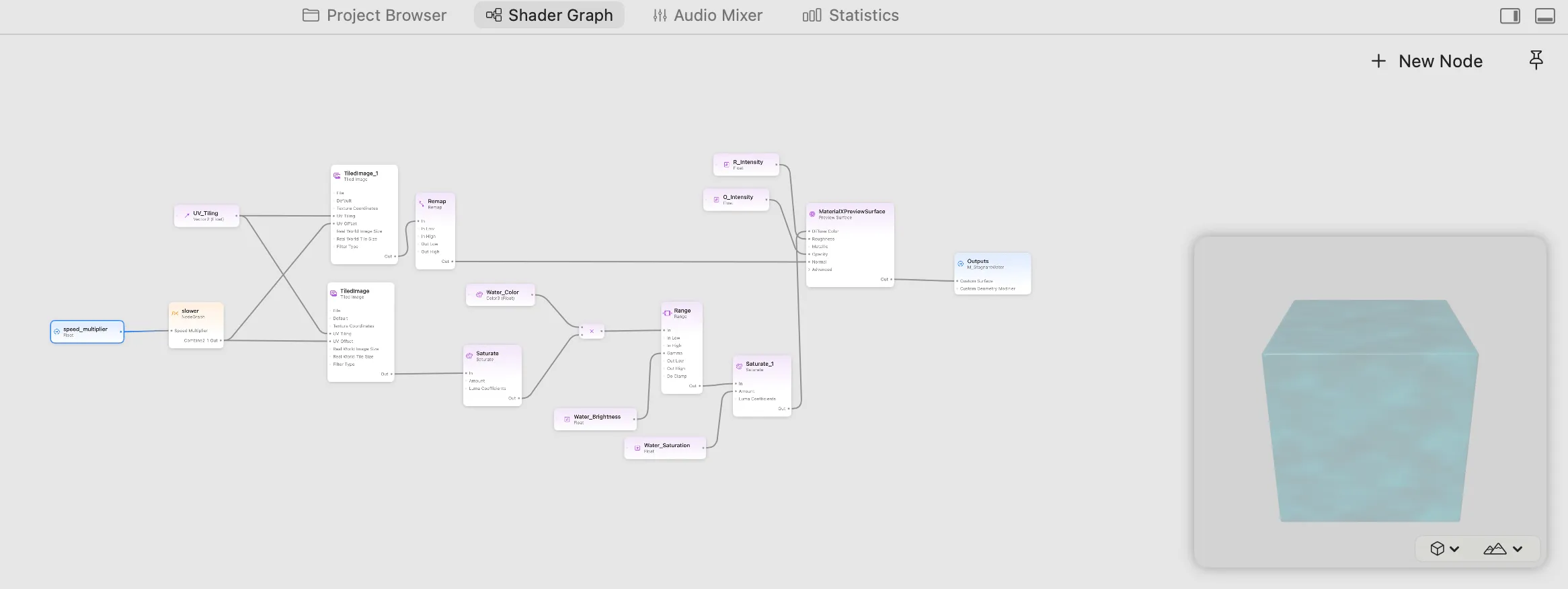

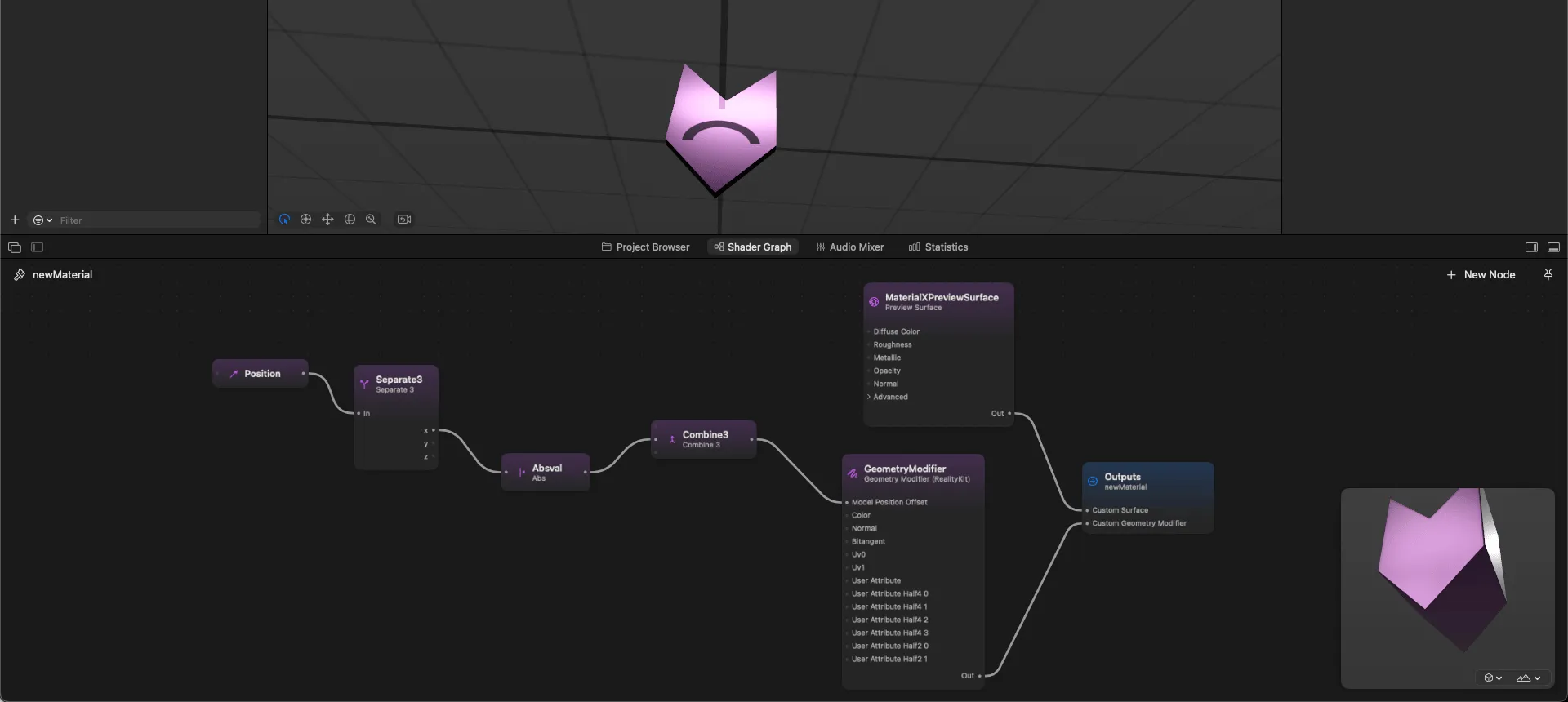

ShaderGraphCoder: Write ShaderGraph with Code

Keywords: visionOS, ShaderGraph, USD

visionOS natively supports importing and using ShaderGraph materials, but Xcode currently offers no way to write them in code. To use ShaderGraph, you have to build the graph in an external tool (such as Reality Composer Pro, Blender, or Maya) by dragging and connecting nodes, and then import it via a USDZ file.

The ShaderGraphCoder project lets you write ShaderGraph materials in code in Xcode. It works as follows:

- Write the shader graph in code using the provided SGValue types, which together make up a graph

- Write the graph to a USDA file in a predetermined format (USDA is the plain-text form of USD, somewhat like a JSON file)

- Load the USDA file to obtain a usable ShaderGraphMaterial
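The second step above, turning a graph into USDA text, can be illustrated with a toy serializer. This is a hypothetical sketch, not ShaderGraphCoder’s code. The ConstantColor and Surface types are invented for illustration. The output is simplified USDA that omits the node IDs and connections a real ShaderGraph material needs, so RealityKit would not load it as a material.

```swift
import Foundation

/// Tiny, hypothetical graph: a constant color feeding a surface's base color.
/// Not ShaderGraphCoder's types; just enough to show graph -> USDA text.
struct ConstantColor {
    var r: Float, g: Float, b: Float
}

struct Surface {
    var baseColor: ConstantColor
}

/// Serializes the graph into simplified USDA text. A real ShaderGraph USDA
/// file also needs node IDs and input/output connections, omitted here.
func usda(materialName: String, surface: Surface) -> String {
    let c = surface.baseColor
    return """
    #usda 1.0
    def Material "\(materialName)"
    {
        def Shader "Surface"
        {
            color3f inputs:baseColor = (\(c.r), \(c.g), \(c.b))
        }
    }
    """
}

print(usda(materialName: "SolidRed",
           surface: Surface(baseColor: ConstantColor(r: 1, g: 0, b: 0))))
```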

Most common shader operations and input types are supported. Example usage is as follows:

```swift
import RealityKit
import ShaderGraphCoder  // assumes the package's module is named ShaderGraphCoder

// Create a solid red material for visionOS
func solidRed() async throws -> ShaderGraphMaterial {
    let color: SGColor = .color3f([1, 0, 0])
    let surface = SGPBRSurface(baseColor: color)
    return try await ShaderGraphMaterial(surface: surface, geometryModifier: nil)
}

// Create a pulsing blue material for visionOS
func pulsingBlue() async throws -> ShaderGraphMaterial {
    // Named material parameter (default 2) that controls the pulse rate
    let frequency = SGValue.floatParameter(name: "Frequency", defaultValue: 2)
    // Scale blue by a sine wave over time so the color pulses
    let color: SGColor = .color3f([0, 0, 1]) * sin(SGValue.time * frequency * (2*Float.pi))
    let surface = SGPBRSurface(baseColor: color)
    return try await ShaderGraphMaterial(surface: surface, geometryModifier: nil)
}
```
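To see what the pulsing material computes each frame, the sketch below evaluates the same expression on the CPU. It assumes SGValue.time is measured in seconds and Frequency in cycles per second, with the factor 2π converting cycles to radians. The sine goes negative for half of each period, so the blue multiplier is negative half the time. This sketch does not check how the renderer treats negative base-color values. The function name is hypothetical.

```swift
import Foundation

/// CPU-side evaluation of the brightness factor used by pulsingBlue():
/// sin(time * frequency * 2π), with time in seconds and frequency in Hz (assumed).
func pulseFactor(time: Float, frequency: Float = 2) -> Float {
    sin(time * frequency * (2 * Float.pi))
}

// With the default frequency of 2, one period is 0.5 s. Sampling every quarter
// period gives roughly 0, 1, 0, -1 (up to floating-point error).
for t: Float in [0, 0.125, 0.25, 0.375] {
    print(t, pulseFactor(time: t))
}
```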

ALVR for Apple visionOS: SteamVR Streaming Software Now Supports Vision Pro!

Keywords: SteamVR, ALVR

The well-known streaming software ALVR recently announced official support for the Apple Vision Pro headset. Players can use it to stream and play SteamVR games on Vision Pro. However, the app is not yet available on the App Store, so users need to download the project from GitHub and build and deploy it manually. Visit the project page to view the code, and follow the build steps to install it on Apple Vision Pro.

The main problem the project faces right now is the lack of compatible controllers, since Apple doesn’t ship any with Vision Pro. Players therefore need to use a PS5 DualSense controller or buy separate VR controllers to play games.

Quick News

Welcome to our new section, “Quick News”, a quick roundup of industry news from the past week.

Tips

If you don’t want to read the text, you can also listen to the podcast “XR Product Talk” - “Early Knowhow of XR Dynamics”.

Search for “XR Product Talk” on Apple Podcasts or go directly here

Search for “XR Product Talk” on Xiaoyuzhou or click here

- Apple releases visionOS 1.1 Beta 3, improving the placement of volumetric apps and the process for resetting a forgotten headset passcode;

- Apple Vision Pro supports ultra-wide-screen movies;

- New Apple patent: handheld controllers for Vision Pro, Mac, and other products;

- Kuo Ming-chi: Vision Pro expected to launch in more markets before WWDC 2024;

- Meta Quest applies to support receiving via Apple’s AirPlay;

- Meta requires Oculus accounts to migrate to Meta accounts by end of March;

- Quest rejects Google’s upcoming Android XR platform;

- Meta releases XR Core SDK v62:

  - Introduces Multimodal mode, allowing Quest 3 and Quest Pro apps to use controllers and hand tracking simultaneously;

  - Adds Wide Motion Mode;

- Meta releases the source code of its AR game Cryptic Cabinet;

- Meta may release a neural wristband that tracks finger movements;

- Meta plans to showcase its own AR glasses prototype in the fall;

- Meta partners with LG on a next-generation XR headset; the LG-produced Quest Pro 2 is expected to launch in the first half of 2025;

- Thunderbird Innovation completes a new round of billion-level financing;

- Disney’s tenth accelerator program: four AI companies, one VR company;

- Sony testing PS VR2 support for PC;

- “Dune 2” partners with Snapchat on a promotional AR experience;

Contributors to This Issue


XReality.Zone
