XR World Weekly 013

This Issue’s Cover

This issue’s cover features Vektora’s concept design for live sports broadcasting on visionOS.

Contents

BigNews

- The next version of Unity will be known as Unity 6

- Spline now also supports Gaussian Splatting

Idea

- Tracking Craft: Remote control racing cars, be careful

- Mobile version of AR Cubism?

Tool

- sudo.ai: Create a 3D model in 60 seconds from a photo

- Swift Angel: A GPT that helps you develop visionOS apps with Swift

Article

- Apple visionOS and iOS SwiftUI development (Augmented Reality AR)

Code

- Vision Pro Agora Sample App: Agora SDK’s Vision Pro integration demo

- Grape: A Swift library for force simulation and graphics visualization

- GoncharKit: A toolkit developed specifically for RealityKit on visionOS

SmallNews

- Apple releases spatial video examples

- Apple’s new patent application: Cross-device multiplayer games in FaceTime, but with real objects (I really have printed playing cards in my hand!)

- TikTok adds AR filter editing to its mobile version

- Microsoft: AR plus AI for industry, a two-pronged boost to efficiency!

- Apple’s new patent: From the Genius Bar to your living room, using AR to promote new product purchases

BigNews

The Next Version of Unity will be Known as Unity 6

Keywords: Unity, Developer Community

“A new broom sweeps clean”: this time, Unity’s version numbering may be the first thing new interim CEO Jim Whitehurst sweeps away.

Since 2017, Unity has used year-based major version numbers, such as Unity 2017.1, 2017.2, and 2017.3, a scheme that continues today with versions like 2020.3 and 2021.3. Before that, from Unity 1.0 in 2005 to version 5.4 in 2016, Unity used conventional, purely numeric version numbers.

However, at Unite 2023, Unity announced that its next version will be Unity 6, due in 2024.

The new CEO also said he didn’t like the original yearly naming model:

“Frankly I never liked the yearly naming model, because it’s always this weird thing where the LTS came out in a different year than the one it was named for,” he said. He said the new naming system should be more “consistent and clean.”

Spline now also supports Gaussian Splatting

Keywords: Spline, Gaussian Splatting

In Issue 012, we covered Gaussian Splatting, a technique in which each data point is represented as a shape with a Gaussian distribution (a “splat”) rather than as a single point or a polygon. This lets point clouds and other data sets be rendered more smoothly and continuously, improving both visual quality and how well the data can be interpreted. Some 3D scanning tools, such as Polycam and Luma, can already export scenes in Gaussian Splatting format.
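To make the “splat” idea concrete, here is a minimal Python sketch of how a renderer might shade a single pixel. It assumes the splats have already been projected to 2D screen space and sorted nearest-first; each one contributes an opacity weighted by its Gaussian falloff, and the results are alpha-blended front to back. The dictionary layout and the 0.99 alpha cap are illustrative assumptions, not the internals of Polycam, Luma, or Spline.

```python
import math


def splat_weight(px, py, mean, cov):
    """Evaluate an unnormalized 2D Gaussian at pixel (px, py).

    mean: (mx, my) center of the projected splat.
    cov:  ((a, b), (b, c)) symmetric 2x2 screen-space covariance.
    """
    dx, dy = px - mean[0], py - mean[1]
    (a, b), (_, c) = cov
    det = a * c - b * b
    # Inverse of the symmetric 2x2 covariance matrix
    ia, ib, ic = c / det, -b / det, a / det
    # Squared Mahalanobis distance from the splat center
    d2 = dx * (ia * dx + ib * dy) + dy * (ib * dx + ic * dy)
    return math.exp(-0.5 * d2)


def composite(px, py, splats):
    """Front-to-back alpha compositing of depth-sorted splats at one pixel.

    Each splat is a dict with 'mean', 'cov', 'color' (r, g, b) and 'opacity'.
    Returns the accumulated color and the remaining transmittance.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for s in splats:
        # Cap alpha so a single splat never becomes fully opaque
        alpha = min(0.99, s["opacity"] * splat_weight(px, py, s["mean"], s["cov"]))
        for i in range(3):
            color[i] += transmittance * alpha * s["color"][i]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque; stop early
            break
    return tuple(color), transmittance
```

Because every splat fades out smoothly instead of ending at a hard edge, neighboring splats blend into a continuous surface, which is where the smoother look comes from.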

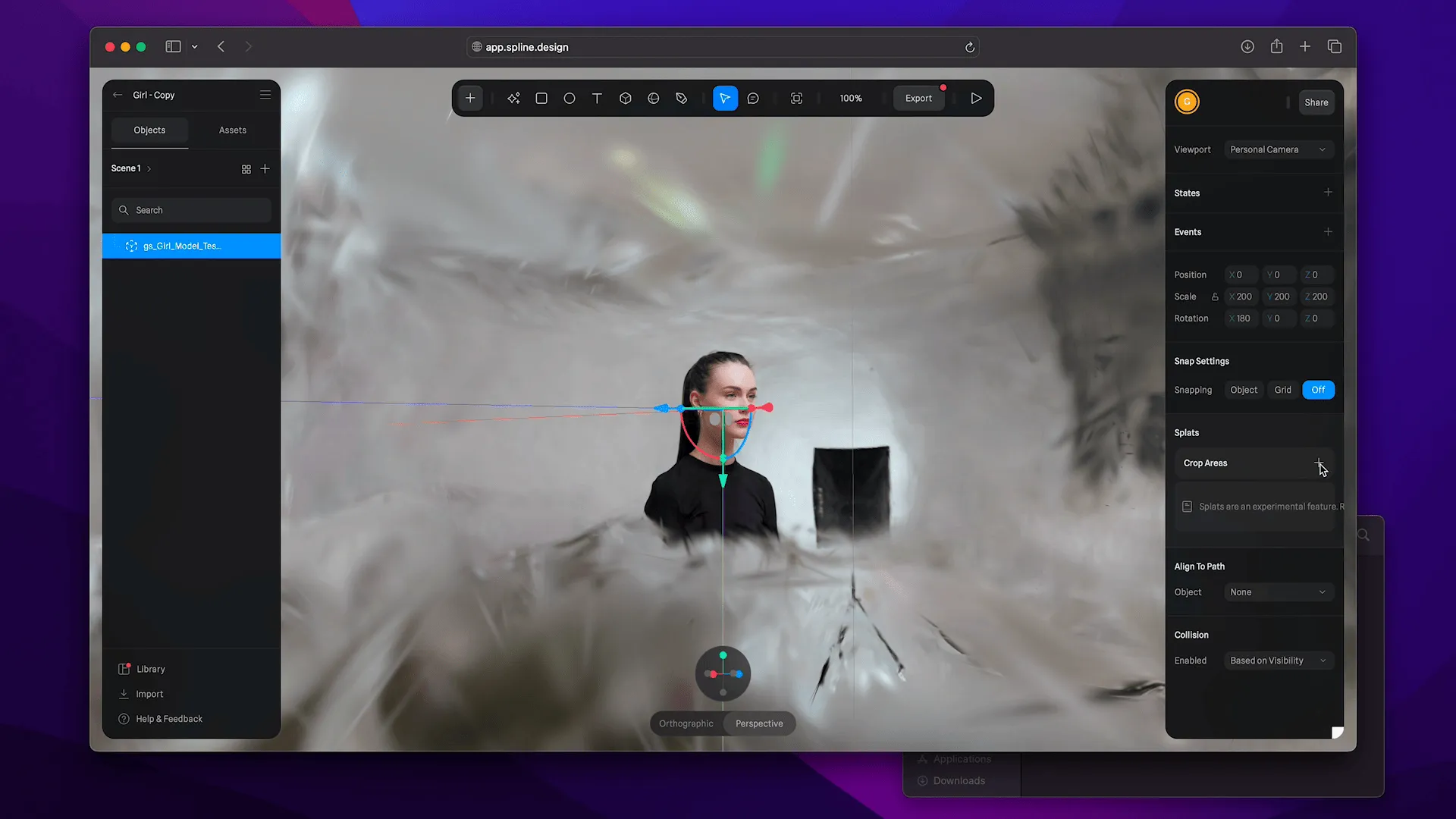

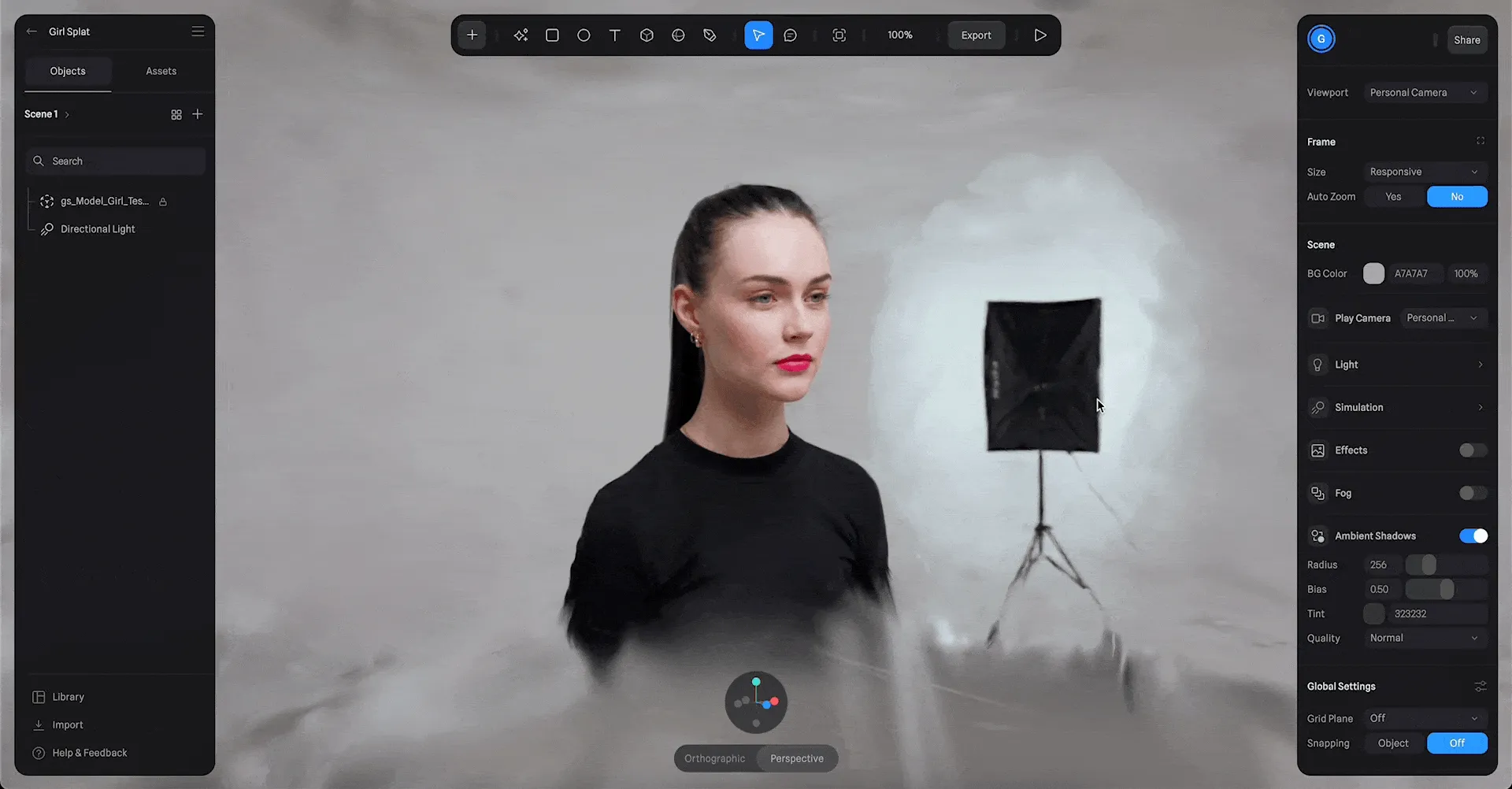

Spline, the online 3D design tool we recommended in Issue 001, now supports the Gaussian Splatting format as well, as the example work above shows.

Using it is simple: drag a .ply file exported from Polycam, or a Gaussian Splat captured with Luma, straight into Spline (note: do not export the .ply file on a mobile device), and you can use the splat in your Spline scene right away, trimming its edges as needed:

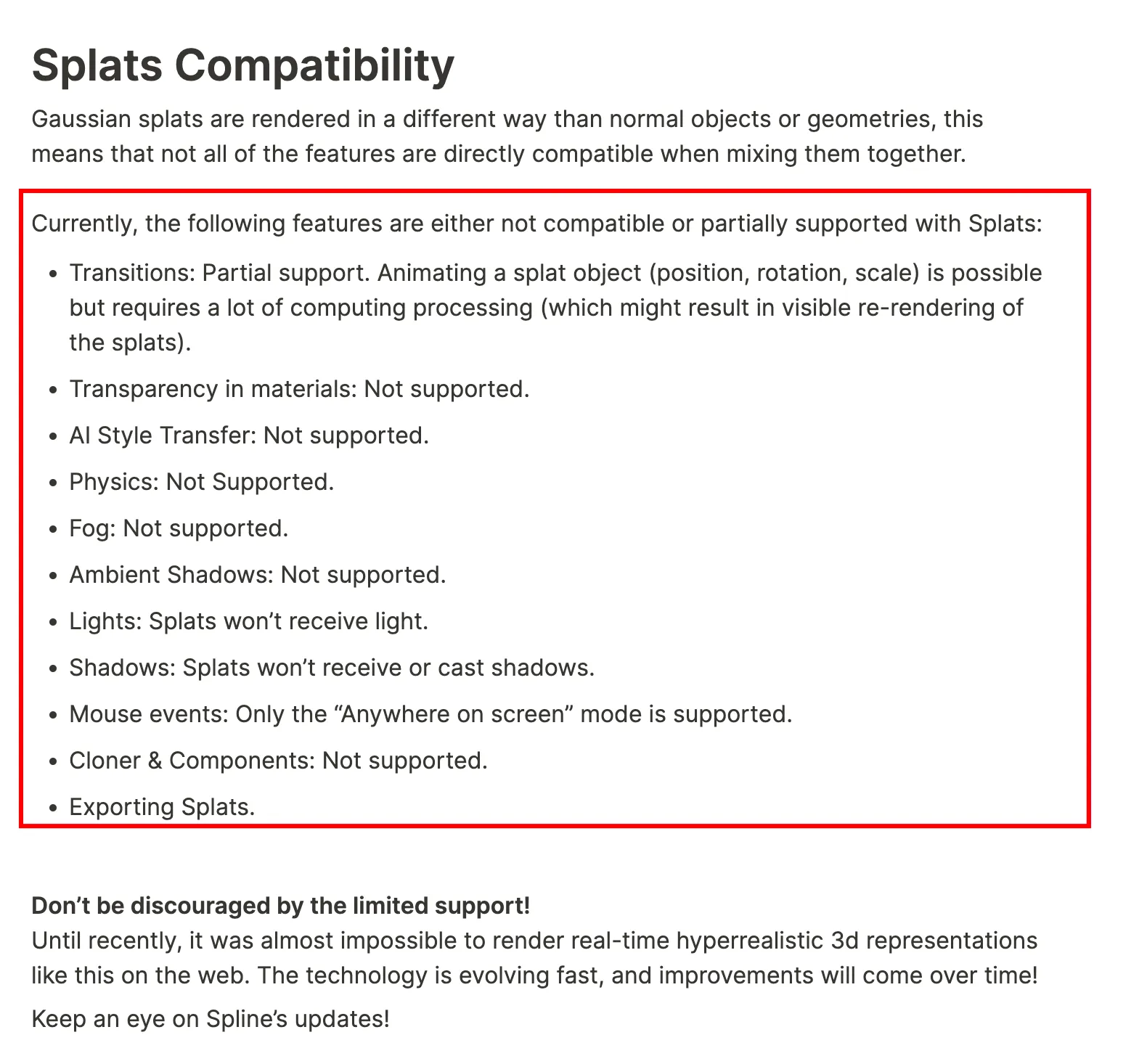
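If you are not sure whether a .ply file holds a Gaussian Splat or an ordinary point cloud, its plain-text header gives a hint. The Python sketch below parses that header. The check for `opacity`, `scale_0`, and `rot_0` vertex properties reflects the naming commonly seen in files produced by the reference 3D Gaussian Splatting training code; treat it as an assumption, since exporters such as Polycam or Luma may use different layouts.

```python
def read_ply_header(data: bytes):
    """Parse the ASCII header of a PLY file.

    Returns (format, elements, header_length), where elements maps each
    element name to (count, [property names]) and header_length is the byte
    offset at which the vertex data starts.
    """
    end_marker = b"end_header\n"
    end = data.find(end_marker)
    if not data.startswith(b"ply") or end < 0:
        raise ValueError("not a PLY file")
    fmt = None
    elements = {}
    current = None
    for line in data[:end].decode("ascii").splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "format":
            fmt = parts[1]  # e.g. ascii, binary_little_endian
        elif parts[0] == "element":
            current = parts[1]
            elements[current] = (int(parts[2]), [])
        elif parts[0] == "property" and current is not None:
            # The last token is the property name (also for list properties)
            elements[current][1].append(parts[-1])
    return fmt, elements, end + len(end_marker)


def looks_like_gaussian_splat(elements):
    """Heuristic (assumption): splat PLYs carry opacity, scale and rotation per vertex."""
    _, props = elements.get("vertex", (0, []))
    return {"opacity", "scale_0", "rot_0"}.issubset(props)
```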

However, because Gaussian Splatting is rendered very differently from conventional meshes, Spline’s support for it still has limitations. For example, Spline can crop an imported .ply file and transform it (translate, scale, rotate), but shadows, physics simulation, lighting, and similar features are not supported.
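Cropping and transforming are comparatively easy to support because they only touch each splat’s center and covariance (its ellipsoid shape). The sketch below shows the underlying math under stated assumptions: splats are hypothetical dicts with a `pos` vector and a 3×3 `cov` matrix, cropping keeps splats whose center lies inside an axis-aligned box, and a rotation `R`, uniform scale `s`, and translation `t` map `p` to `s·R·p + t` and `Σ` to `s²·R·Σ·Rᵀ`. This is not Spline’s implementation.

```python
def _matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def crop(splats, lo, hi):
    """Keep splats whose center lies inside the axis-aligned box [lo, hi]."""
    return [s for s in splats
            if all(lo[i] <= s["pos"][i] <= hi[i] for i in range(3))]


def transform(splats, R, s, t):
    """Rotate by R, scale uniformly by s, then translate by t.

    Centers:     p' = s * R p + t
    Covariances: S' = s^2 * R S R^T  (each ellipsoid turns and grows with its center)
    """
    Rt = [list(row) for row in zip(*R)]  # transpose of R
    out = []
    for sp in splats:
        p = sp["pos"]
        rp = [sum(R[i][k] * p[k] for k in range(3)) for i in range(3)]
        pos = [s * rp[i] + t[i] for i in range(3)]
        cov = _matmul(_matmul(R, sp["cov"]), Rt)
        scaled = [[s * s * c for c in row] for row in cov]
        out.append({**sp, "pos": pos, "cov": scaled})
    return out
```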

Still, the Spline team notes that the technology is moving fast and these gaps may be closed one day, so don’t be discouraged, and keep an eye on Spline.

Idea

Tracking Craft: Remote Control Racing Cars, Be Careful

Keywords: MR, Cars, Quest 3

Hey, kids, do you want to play remote control racing cars in your living room?

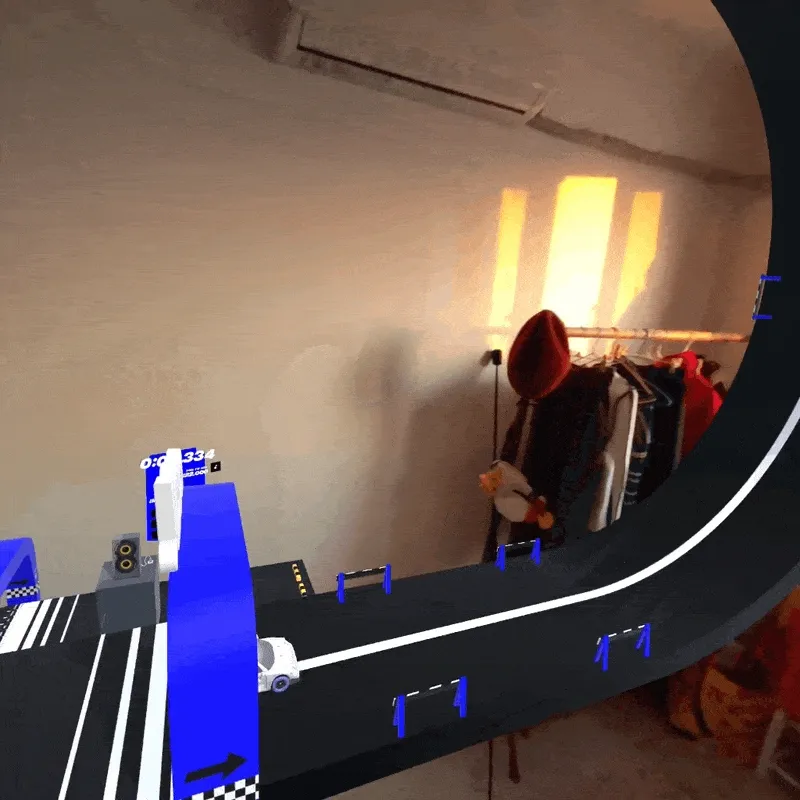

In the past, you might have needed your parents to buy you lots of toy cars and tracks to live out this dream. With the MR app Tracking Craft, all you need is a Quest 3.

Tracking Craft is an MR game in which you drive a remote control car through a series of fun levels. Your task is to work out each level’s physics and get the car from the start gate to the finish gate within the time limit.

And thanks to Quest 3’s spatial awareness, you can also let the car run wild around your living room, as the official demo video shows:

In actual play, the key to clearing a level is making good use of each car’s characteristics and observing the level’s unique physics. For example, in the level below, as long as the car keeps moving, it will not fall off the track even when upside down, so you can take the inverted section at a fairly low speed.

Tracking Craft is currently a free download on both Meta App Lab and SideQuest, and the developer says it will launch on PICO in early 2024. If you have a Quest 3, we highly recommend giving it a try.

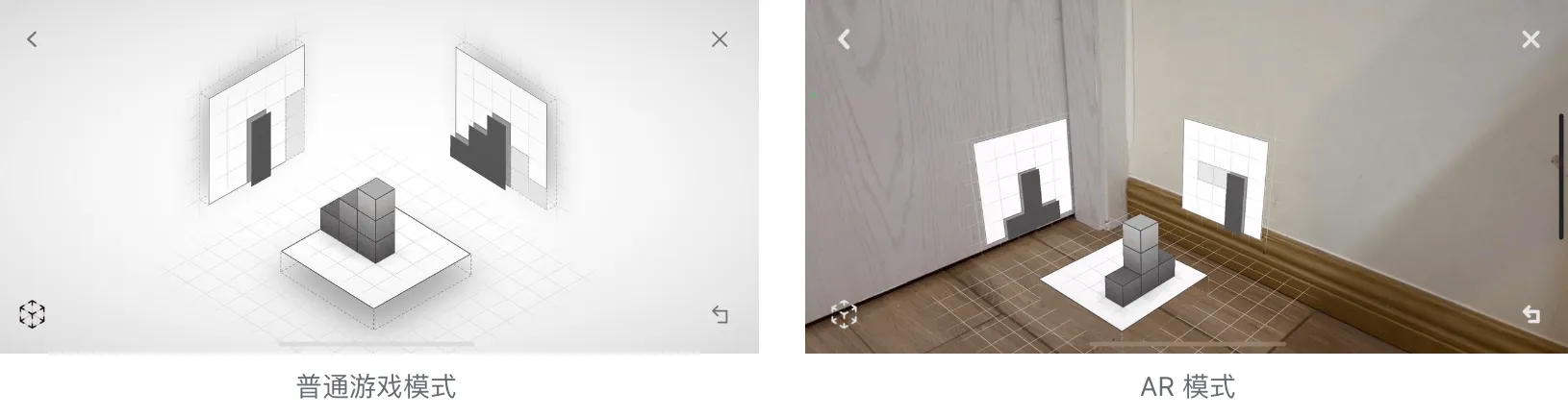

Mobile Version of AR Cubism?

Keywords: AR, Cubism, Building Blocks

A few days ago, while browsing Artifact, I came across a casual game with an AR mode called Projekt. Much like building with blocks, you follow prompts from different directions so that your build’s projections match both the left and right views. (It reminds me of being tormented by three-view engineering drawings in my freshman year, and the art style closely resembles the schematic diagrams I used to draw at school.) It is similar to Cubism on Quest and PICO. Made with Unity, it also supports an AR mode on mobile phones (just as Cubism supports an MR mode), and it is quite relaxing. If you are interested, give it a try.

What, you say this doesn’t show off what makes MR/AR special? Then you haven’t discovered the fun of Lego and Jenga~
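Projekt’s core rule, as described above, boils down to comparing silhouettes: a build is correct when its orthographic projections match the target views. The Python sketch below checks this for a set of unit cubes on an integer grid. The y-up axis convention and the three view names are assumptions for illustration, not code from Projekt or Cubism.

```python
def projections(voxels):
    """Orthographic silhouettes of unit cubes at integer (x, y, z), y pointing up."""
    front = {(x, y) for x, y, z in voxels}  # viewed along the z axis
    side = {(z, y) for x, y, z in voxels}   # viewed along the x axis
    top = {(x, z) for x, y, z in voxels}    # viewed along the y axis
    return front, side, top


def matches(voxels, front=None, side=None, top=None):
    """True if every target view that is given equals the build's silhouette."""
    actual_front, actual_side, actual_top = projections(voxels)
    pairs = ((front, actual_front), (side, actual_side), (top, actual_top))
    return all(target is None or target == actual for target, actual in pairs)


# Hypothetical puzzle: an L-shaped build of three cubes
build = {(0, 0, 0), (1, 0, 0), (0, 1, 0)}
print(matches(build, front={(0, 0), (1, 0), (0, 1)}, side={(0, 0), (0, 1)}))  # prints True
```

Comparing whole sets rather than counting cubes means a build with extra hidden cubes still passes, which is also what makes these puzzles allow several valid solutions.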

Tool

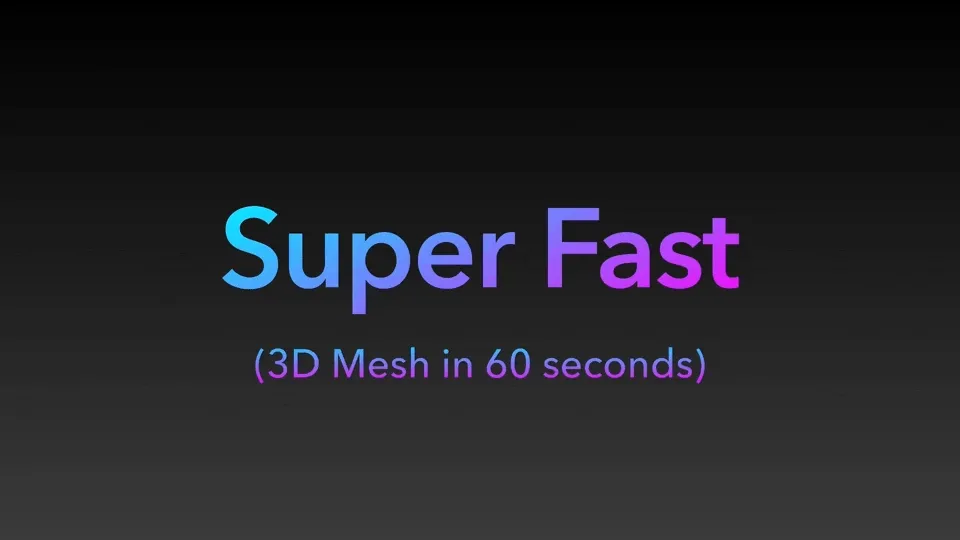

sudo.ai: Create a 3D Model in 60 Seconds from a Photo

Keywords: AI, 3D Assets Generation

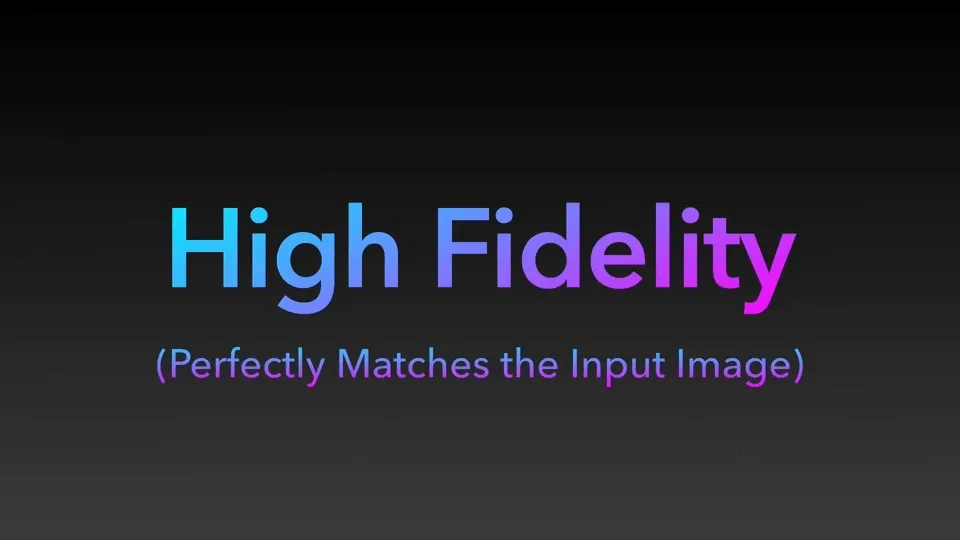

sudo.ai is an online platform for generating 3D models and is currently free to try. Its main feature is creating a 3D model from a photo or a text description. Its advantages include:

- A single photo is enough: it identifies the main subject, infers the shape of the unseen back, and generates a matching 3D model.

- It is fast, producing a usable model within 60 seconds, and it can also create a 360-degree turntable video for sharing on social networks.

- It automatically recommends similar models based on shape and color, so there is no need to search by keyword.

The official promotional video also highlights how fast sudo.ai is:

It also emphasizes how faithfully sudo.ai’s models match the original images:

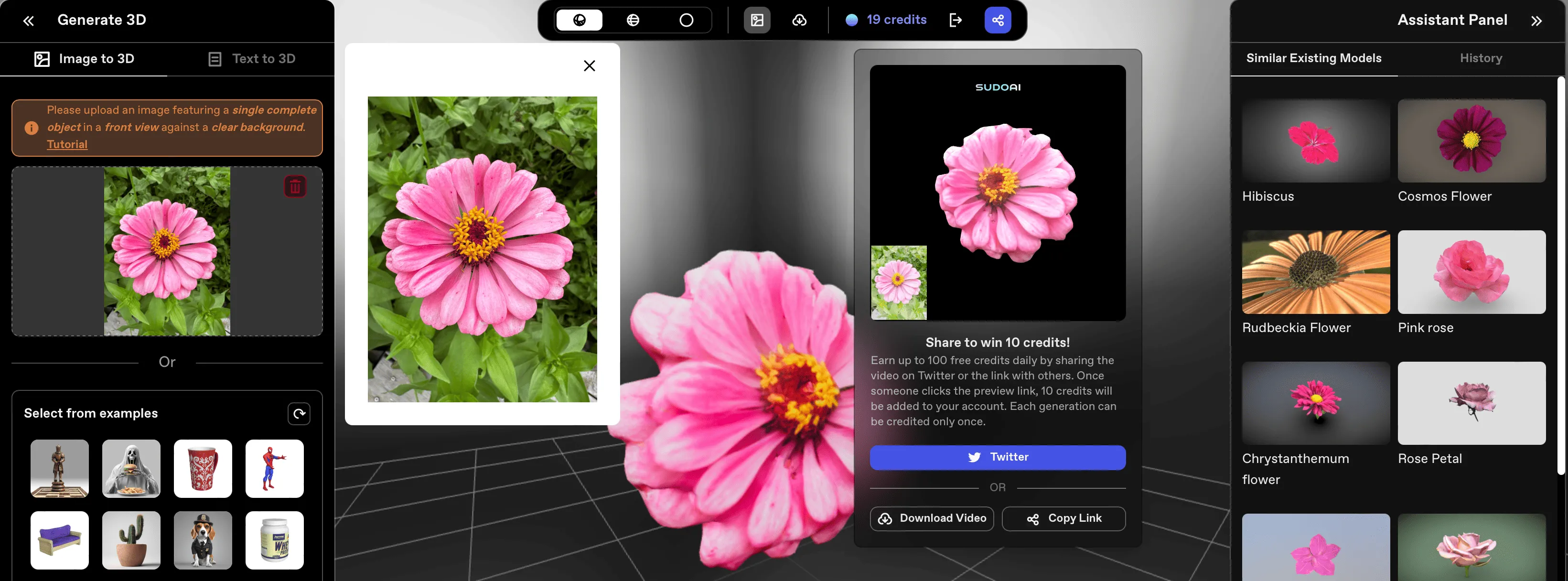

We also tested sudo.ai ourselves. We uploaded a photo of a flower taken with a phone (left side of the sudo.ai interface). It automatically identified the flower as the main subject, discarded the leaves in the background, and generated a model of the flower. Once generation finished, similar models were recommended on the right.

If you remember CSM.ai, which we recommended in Issue 012, the two work on essentially the same principle: first generate multi-view images from the uploaded picture, then reconstruct a 3D model with techniques such as NeRF. sudo.ai has open-sourced its multi-view image generation code as Zero123++, with an online demo. The 3D generation part is so far available only as a paper, with no plans to open-source the code in the short term.
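The first stage of this pipeline needs images of the subject from known viewpoints around it, and the same ring of viewpoints is what a 360-degree turntable video sweeps through. The Python sketch below computes such a ring: camera positions evenly spaced in azimuth at a fixed elevation and distance, each with a unit vector pointing back at the object. The view count, radius, and elevation are hypothetical parameters, not the camera settings Zero123++ or CSM.ai actually use.

```python
import math


def orbit_cameras(n_views, radius, elevation_deg, center=(0.0, 0.0, 0.0)):
    """Return (position, forward) pairs for cameras evenly spaced in azimuth
    on a ring around `center` (y is up). `forward` is a unit vector pointing
    from the camera toward the center."""
    el = math.radians(elevation_deg)
    cams = []
    for i in range(n_views):
        az = 2 * math.pi * i / n_views
        pos = (center[0] + radius * math.cos(el) * math.sin(az),
               center[1] + radius * math.sin(el),
               center[2] + radius * math.cos(el) * math.cos(az))
        # Each camera sits exactly `radius` away, so dividing by it normalizes
        fwd = tuple((c - p) / radius for c, p in zip(center, pos))
        cams.append((pos, fwd))
    return cams


# e.g. 36 frames (one every 10 degrees) for a turntable clip
frames = orbit_cameras(36, radius=2.5, elevation_deg=15.0)
```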

Testing with the same flower photo, sudo.ai (left) is faster and produces a smoother model, but it currently exports only .glb; CSM.ai (right) is a bit slower but captures more detail and supports more 3D formats.

Swift Angel: A GPT that Helps You Develop visionOS Apps with Swift

Keywords: AI, Swift, visionOS, GPTs

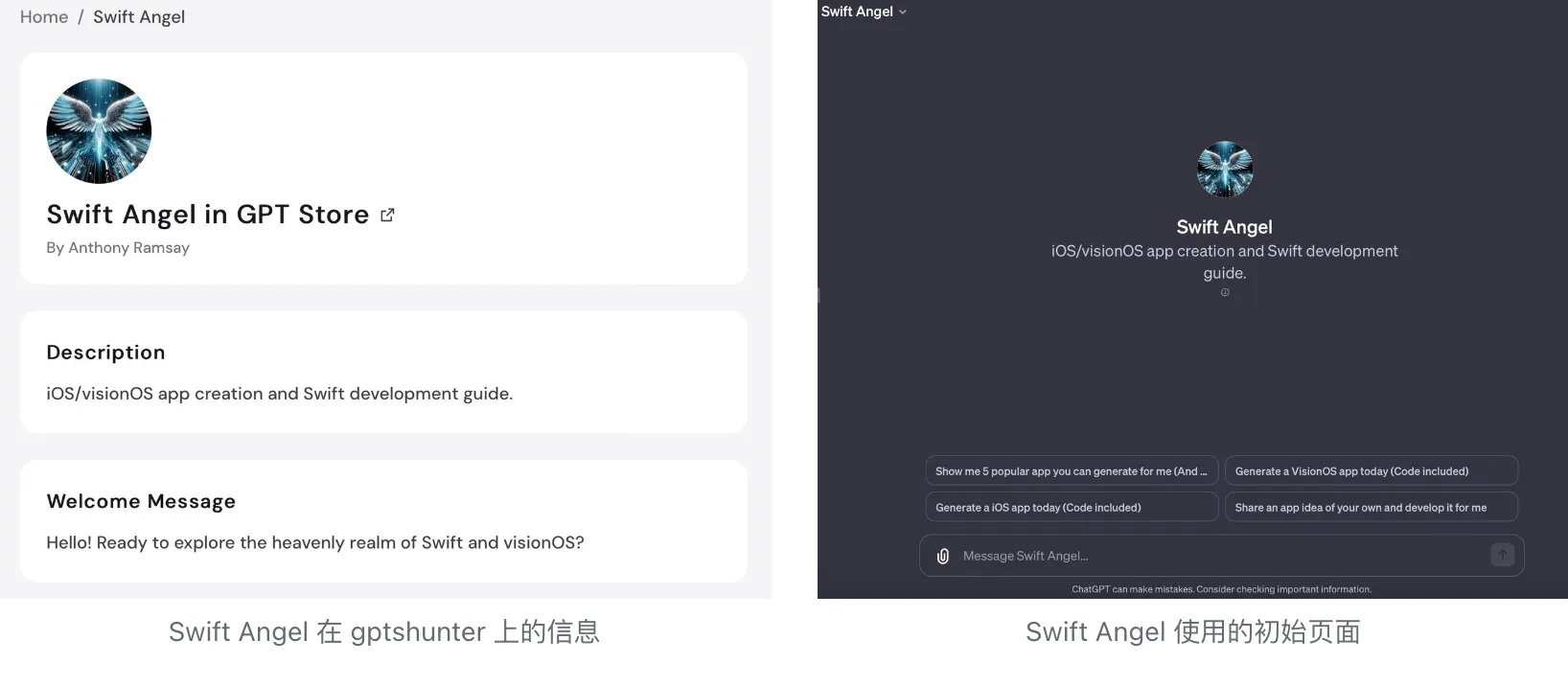

Since OpenAI launched GPTs, users have created a number of Swift GPT assistants that can help speed up iOS and visionOS app development with Swift and SwiftUI. We introduced some of them in the previous issue.

This time, the only programming novice on our editorial team personally tested how well several of these assistants write code and communicate. Due to time constraints, we did not test every GPT that claims to help write Swift apps. Below is a brief account of using one assistant that we tested and found workable.

Before ChatGPT could handle image recognition and web browsing at the same time, the author built the front-end interface of a simple visionOS app (very simple, mostly windows, with a volume in a few places) through a text-only conversation with plain GPT-4. Because GPT-4’s knowledge base had not yet been updated (the author found it still stuck in 2020), long before visionOS was announced, the author had to feed it much of the visionOS documentation during debugging. Overall, GPT-4 “learned” and “understood” quickly, and, as a bonus, the author’s written English, out of practice since they stopped writing papers, improved a great deal.

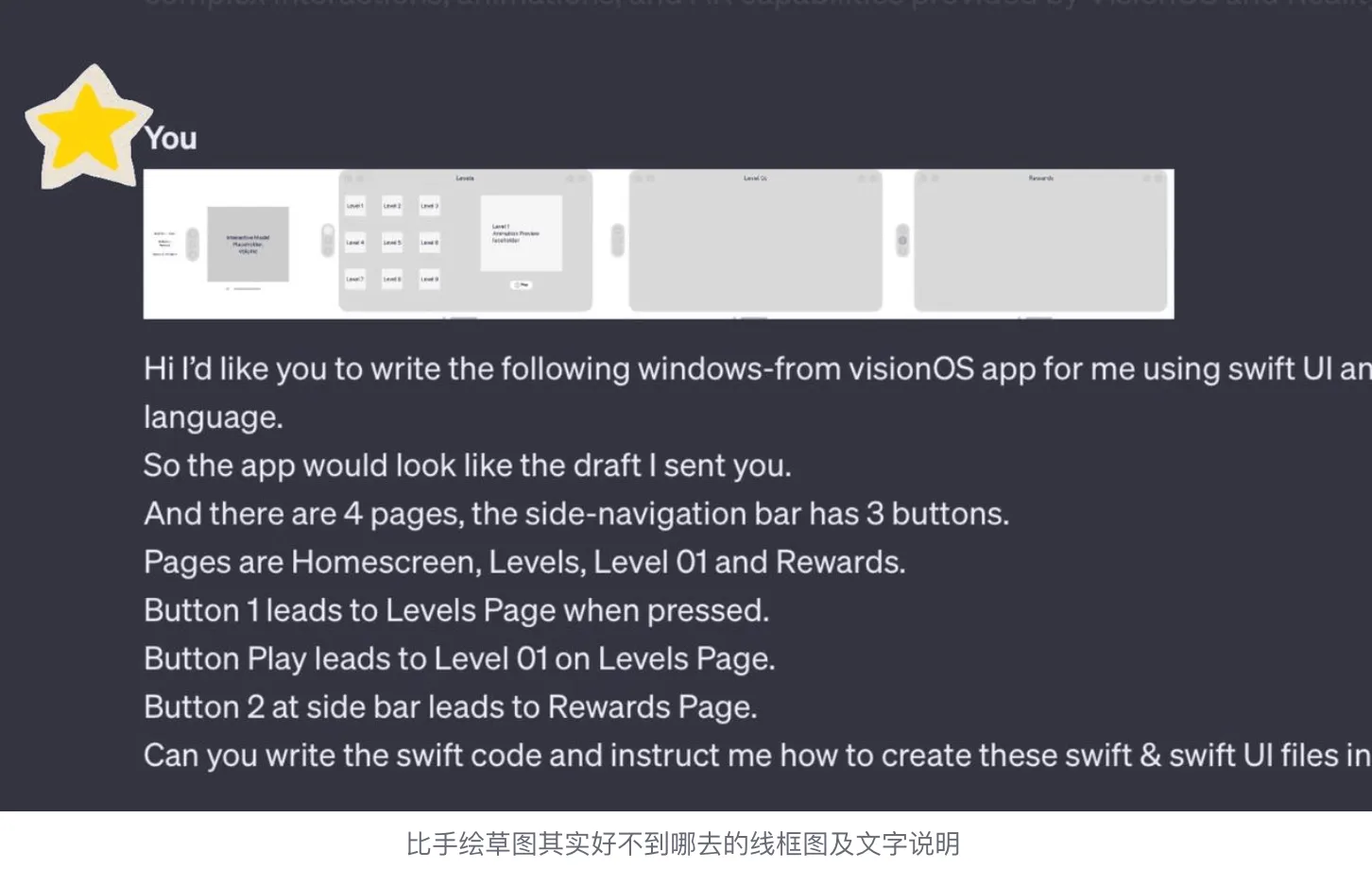

Once GPTs arrived, the author took the simple interface built with plain GPT-4, simplified it further, and used it to test individual assistants. When the author uploaded hand-drawn interface sketches, some assistants replied that they “could not provide code,” so the author gave up on those. To keep testing on track, and worried that the sketch was too “rough,” the author also quickly drew a set of wireframes to submit.

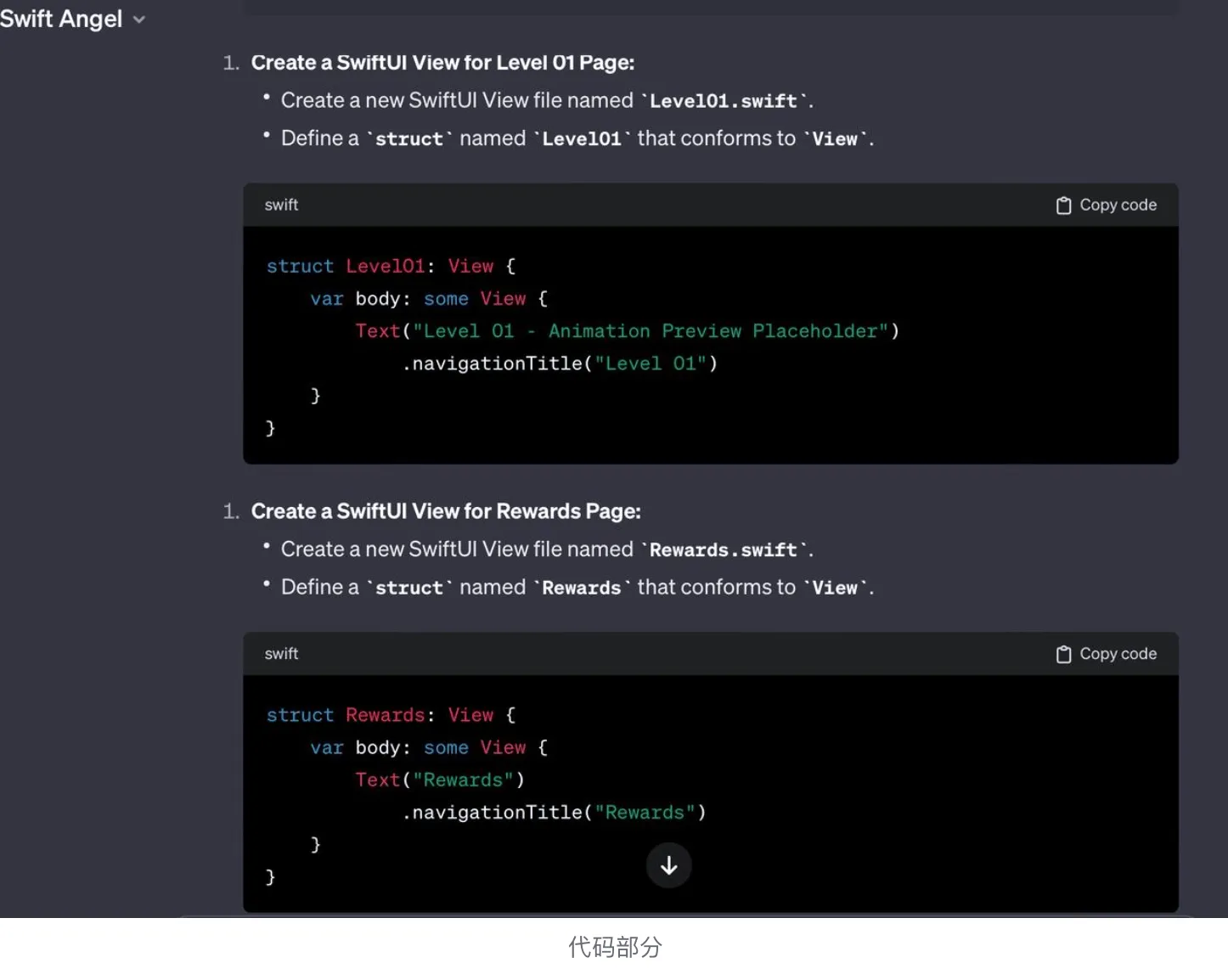

It turned out that a GPT called Swift Angel could understand the submitted wireframes (with a brief text explanation), produce Swift code, and even walk you through creating the project files (a true angel). When it misunderstands something or makes a mistake, you can ask Angel to revise the code (the interface from my first description did not fully match my expectations; the video and before/after images here only demonstrate what GPTs can do). Above all, being able to upload images makes communicating with GPTs/GPT far more efficient, since we no longer have to rely on words alone. This example is fairly simple; whether this assistant can handle more complex visionOS interfaces is for you to explore.

Do you have insights or recommendations from your own recent programming with GPTs/GPT? Feel free to leave a comment and chat with us (and pray for server stability)~

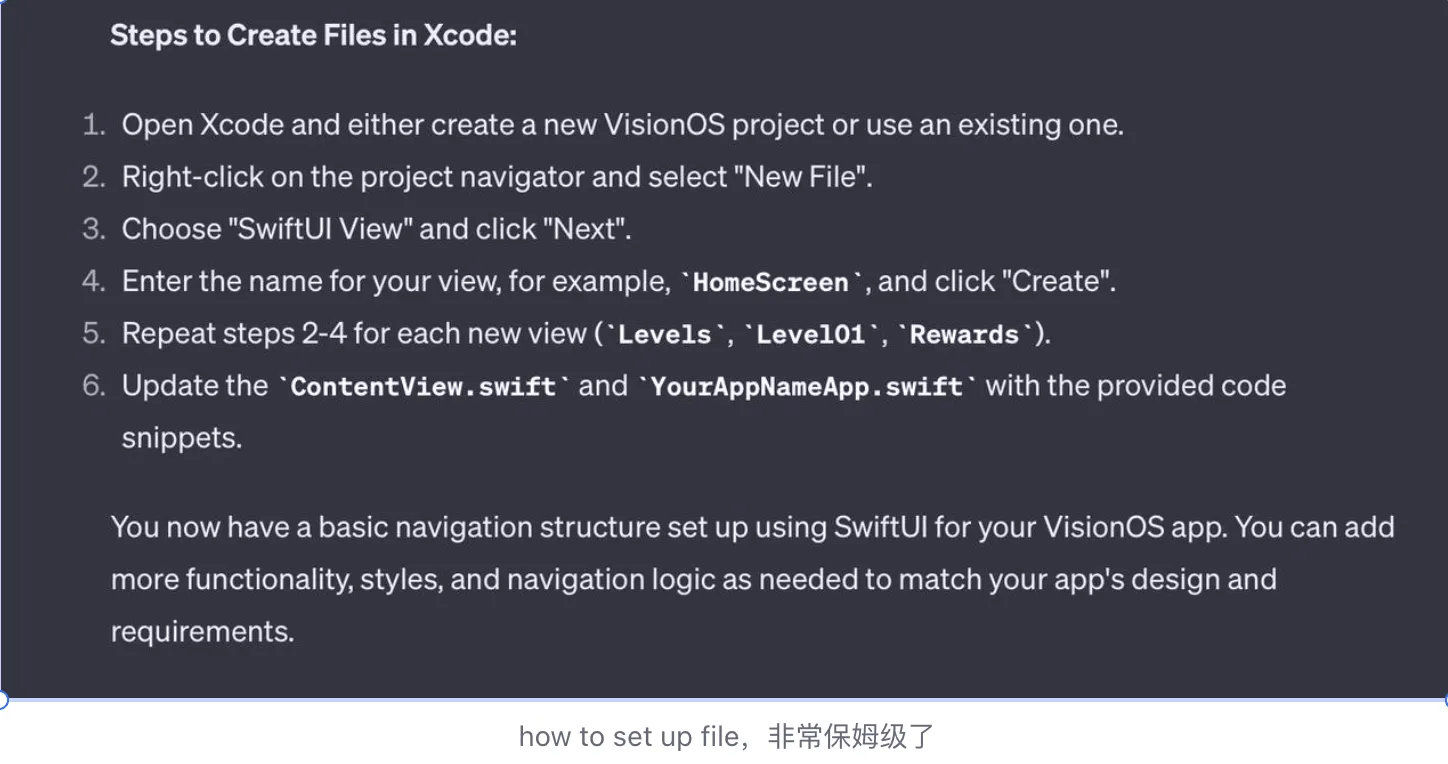

Some of the exchanges with Swift Angel are shown below:

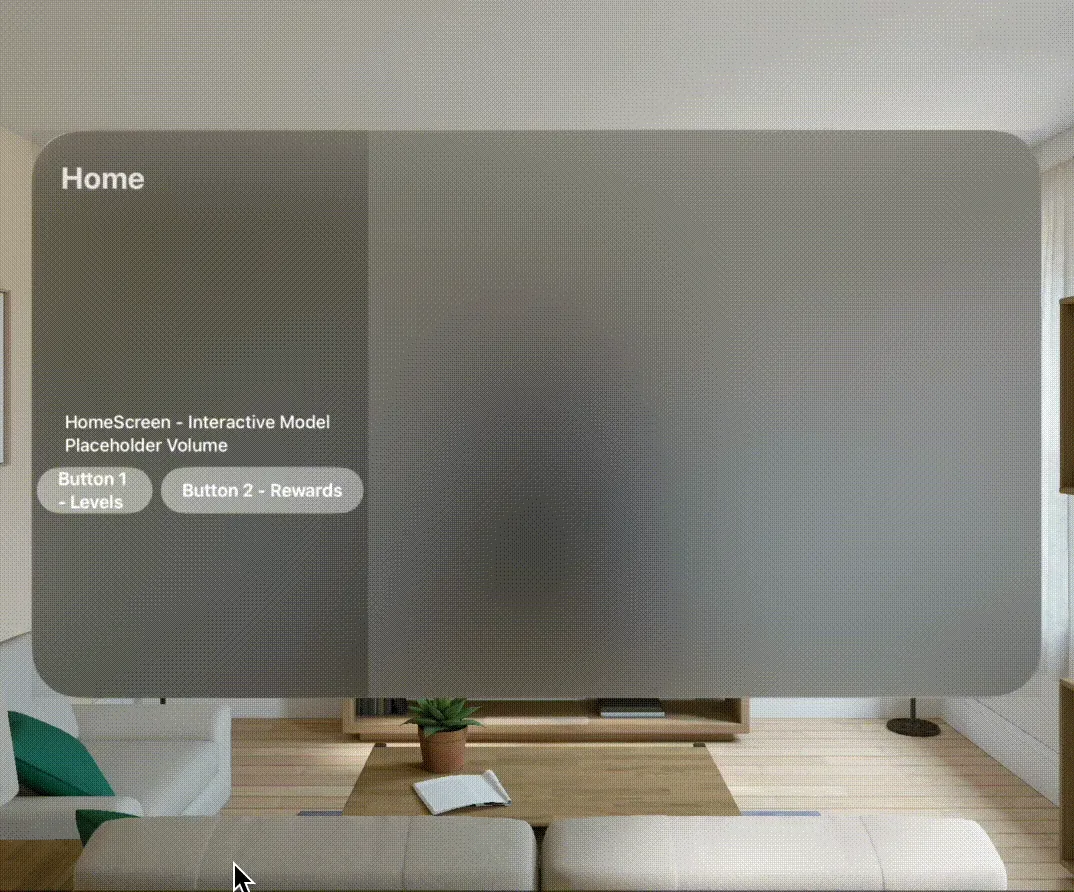

Here is the result implemented from the first description:

Article

Apple visionOS and iOS SwiftUI Development (Augmented Reality AR)

Keywords: visionOS, tutorial, SwiftUI

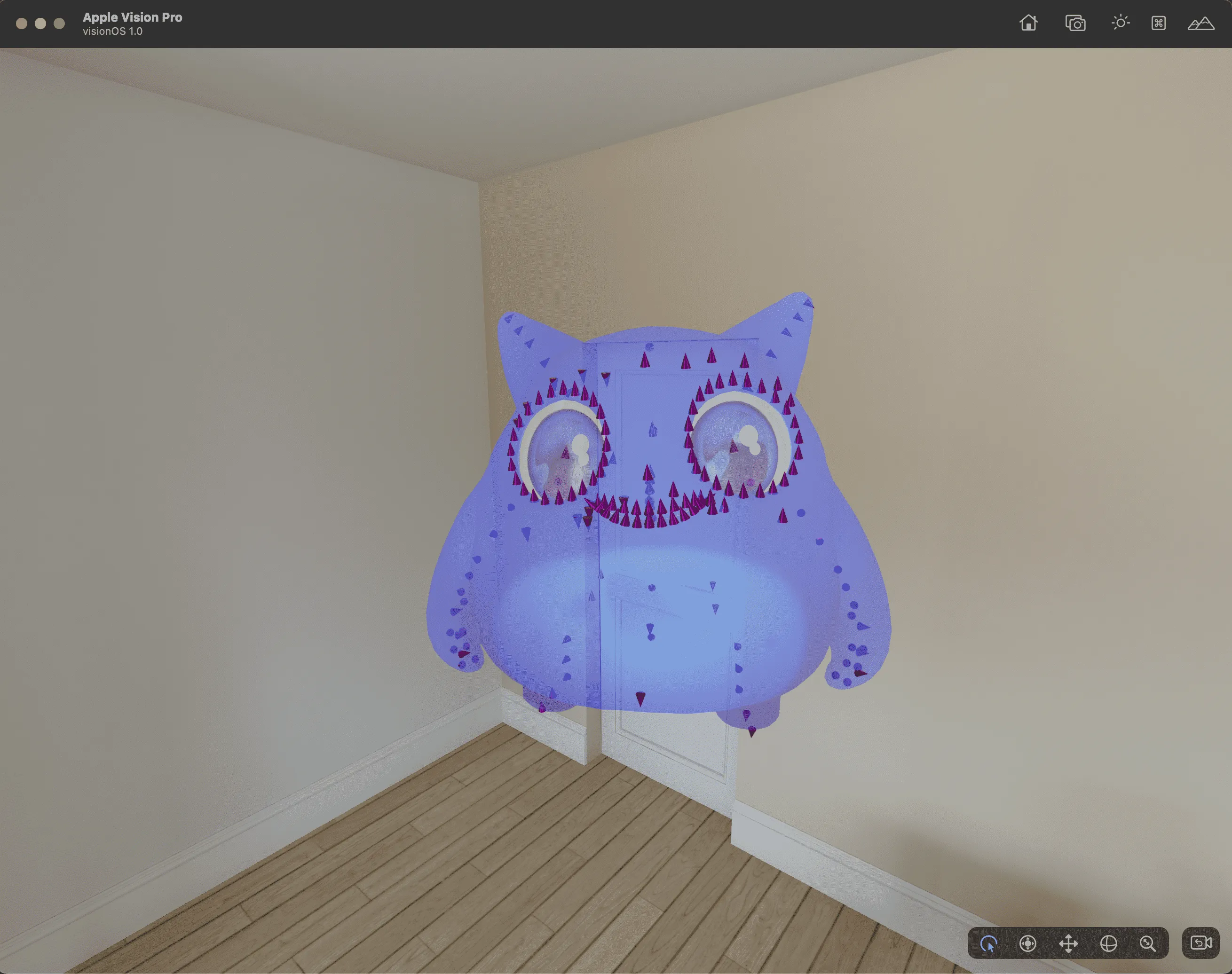

Apple visionOS and iOS SwiftUI Development (Augmented Reality AR) is a Chinese-language introductory tutorial series on visionOS and SwiftUI development. Building on the open-source visionOS_30Days project by a Japanese developer and adding their own ideas, the author creates demos along with explanations of the reasoning and guides to API usage.

Each article implements one small feature, with pictures and explanations, making the series well suited to beginners who are less comfortable reading English.

Code

Vision Pro Agora Sample App: Agora SDK’s Vision Pro Integration Demo

Keywords: visionOS, Sample Code

visionOS-Quickstart is a visionOS sample app that implements video calling with the Agora RTC SDK.

If you need to integrate video calling or voice conferencing features in visionOS, you can refer to this official demo from Agora.

Grape: A Swift Library for Force Simulation and Graphics Visualization

Keywords: visionOS, force simulation

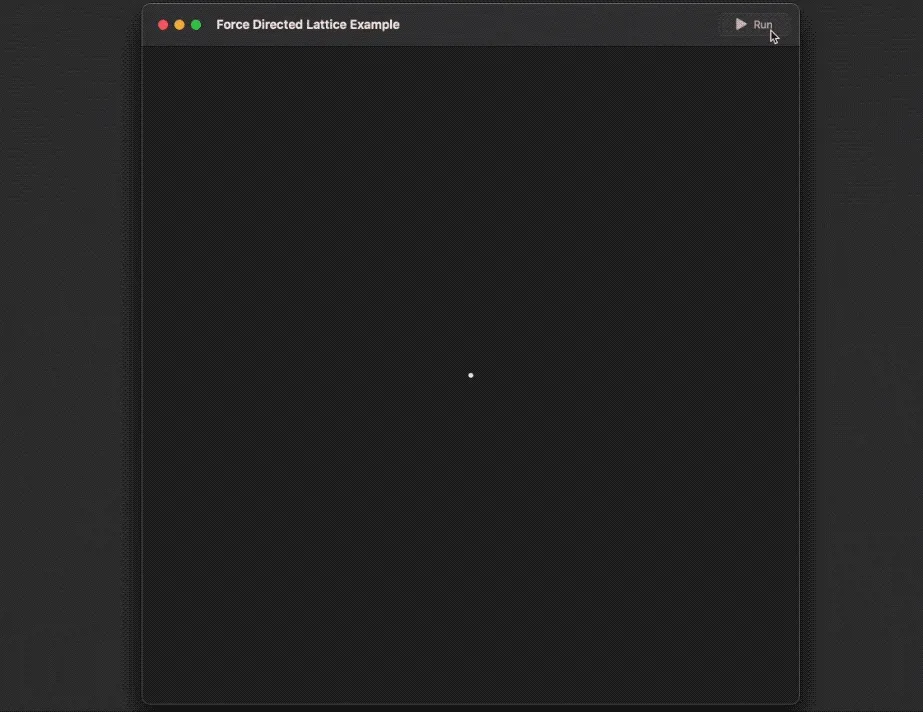

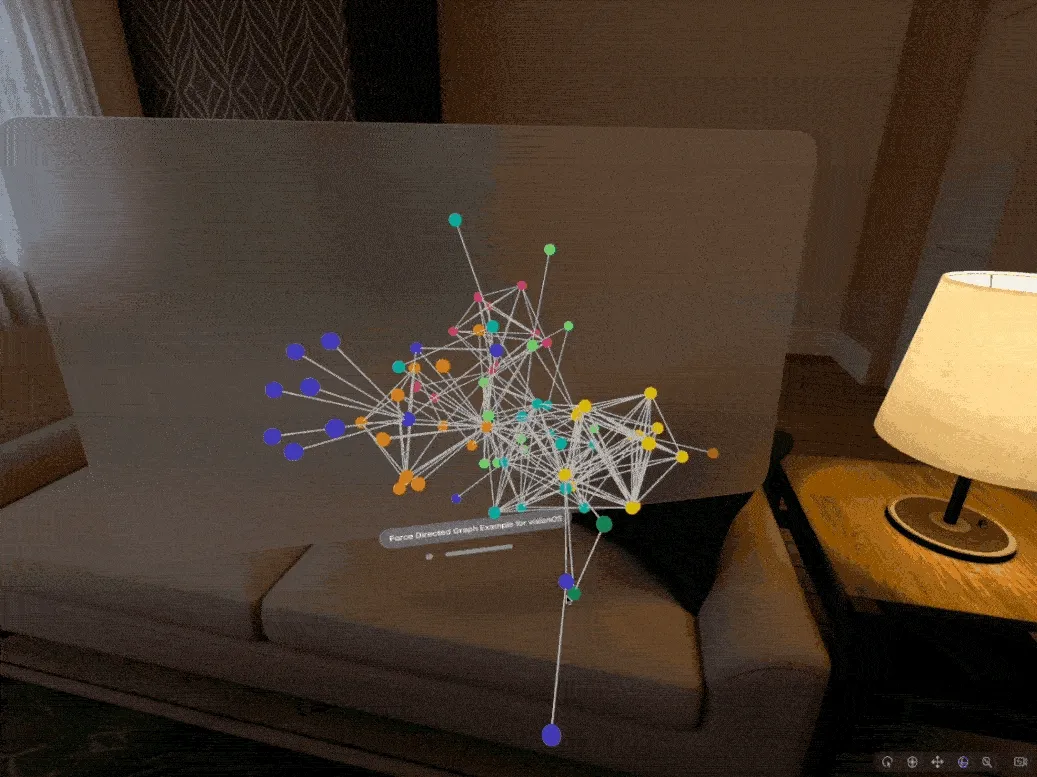

Grape is a Swift library for force simulation and graphics visualization. It can run force-directed graph and lattice simulations in both 2D and 3D, and it comes with a visionOS demo to learn from.
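For readers new to force-directed layouts, the sketch below shows the core loop in plain Python: every pair of nodes repels, linked nodes are pulled toward a target distance by a spring, and velocities are damped each step until the layout settles. It is not Grape’s API, which is in Swift; the parameter names and default values are assumptions chosen for illustration, and the O(n²) repulsion is only suitable for small graphs.

```python
import math
import random


def force_layout(n, edges, steps=300, link_len=1.0, repulsion=0.5,
                 dt=0.05, damping=0.6, seed=0):
    """Minimal 2D force-directed layout with damped velocities.

    n: number of nodes; edges: list of (i, j) index pairs.
    Returns a list of [x, y] positions.
    """
    rng = random.Random(seed)
    pos = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(n)]
    vel = [[0.0, 0.0] for _ in range(n)]

    def push(force, i, j, magnitude):
        # Apply `magnitude` along the unit vector from node j to node i
        # (positive pushes i away from j) and the opposite force to j.
        dx, dy = pos[i][0] - pos[j][0], pos[i][1] - pos[j][1]
        d = math.hypot(dx, dy) + 1e-9
        fx, fy = magnitude * dx / d, magnitude * dy / d
        force[i][0] += fx
        force[i][1] += fy
        force[j][0] -= fx
        force[j][1] -= fy

    for _ in range(steps):
        force = [[0.0, 0.0] for _ in range(n)]
        # Many-body repulsion: every pair pushes apart with strength ~ 1/d^2
        for i in range(n):
            for j in range(i + 1, n):
                d = math.dist(pos[i], pos[j]) + 1e-9
                push(force, i, j, repulsion / (d * d))
        # Link springs: pull linked nodes together when longer than link_len
        for i, j in edges:
            push(force, i, j, -(math.dist(pos[i], pos[j]) - link_len))
        # Accumulate forces into velocities, damp them, then move the nodes
        for i in range(n):
            for k in range(2):
                vel[i][k] = (vel[i][k] + force[i][k] * dt) * damping
                pos[i][k] += vel[i][k]
    return pos
```

With two linked nodes, the pair settles where the spring’s pull balances the repulsion, slightly farther apart than `link_len`.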

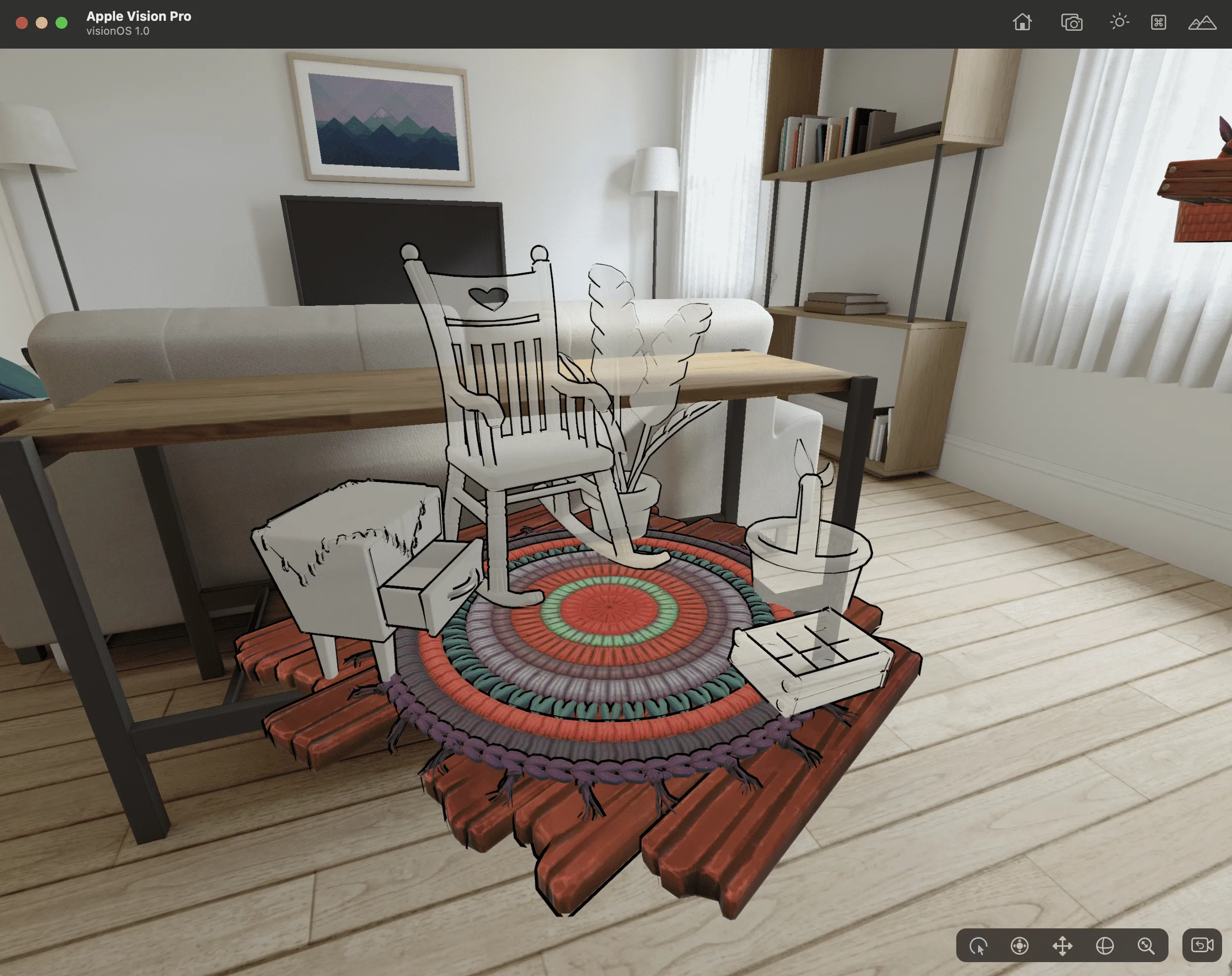

GoncharKit: A Toolkit Developed Specifically for RealityKit on visionOS

Keywords: RealityKit, visionOS

GoncharKit is a toolkit built specifically for RealityKit development on visionOS. It makes it easy to add visual effects to 3D models and currently offers three features: Outline Mesh Generation, DoubleSided materials, and Skeleton Visualization.

- Outline Mesh Generation: RealityKit on visionOS currently supports neither custom multi-pass rendering nor screen-space post-processing, so outlines cannot be produced by edge detection. To add outlines (external contour lines) to a model, a new mesh has to be generated by pushing its vertices outward along their normals.

This method is imperfect for objects with discontinuous normals, such as cubes, where the outline breaks apart at the discontinuities, but it is currently the only feasible way to add outlines on visionOS.

- DoubleSided materials: Because RealityKit and USDZ do not themselves support double-sided materials, the back of a flat object such as a leaf may not be displayed. To show the back side, new faces are generated by reversing the vertex index order, which produces a stable result.

- Skeleton Visualization: Adds markers at the joints of a skeleton, making skeletal animations easier to debug.
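The two mesh tricks described above can be sketched on plain vertex and index arrays. The Python below illustrates the common “inverted hull” outline (offset each vertex along its normal, then reverse the triangle winding so that, with back-face culling, only the far side of the shell is drawn and shows up as a rim around the model) and the double-sided trick (duplicate the faces with negated normals and reversed index order). The array layout is an assumption, and GoncharKit’s actual Swift implementation may differ. A cube’s corner vertices are split so they can carry different normals, so the offset moves the copies in different directions, which is why the outline cracks at hard edges.

```python
def outline_shell(positions, normals, indices, width):
    """Inverted-hull outline mesh.

    positions, normals: lists of (x, y, z); indices: flat list, 3 per triangle.
    Returns the offset positions and the triangle indices with reversed winding.
    """
    shell = [tuple(p[k] + width * n[k] for k in range(3))
             for p, n in zip(positions, normals)]
    flipped = []
    for a, b, c in zip(indices[0::3], indices[1::3], indices[2::3]):
        flipped += [a, c, b]  # swapping two indices reverses the winding
    return shell, flipped


def double_sided(positions, normals, indices):
    """Append a back-facing copy of every triangle.

    The copy reuses the positions, negates the normals (so lighting is correct
    on the back) and reverses the winding, so the back survives face culling.
    """
    n = len(positions)
    new_positions = list(positions) + list(positions)
    new_normals = list(normals) + [tuple(-c for c in nm) for nm in normals]
    back = []
    for a, b, c in zip(indices[0::3], indices[1::3], indices[2::3]):
        back += [a + n, c + n, b + n]
    return new_positions, new_normals, list(indices) + back
```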

SmallNews

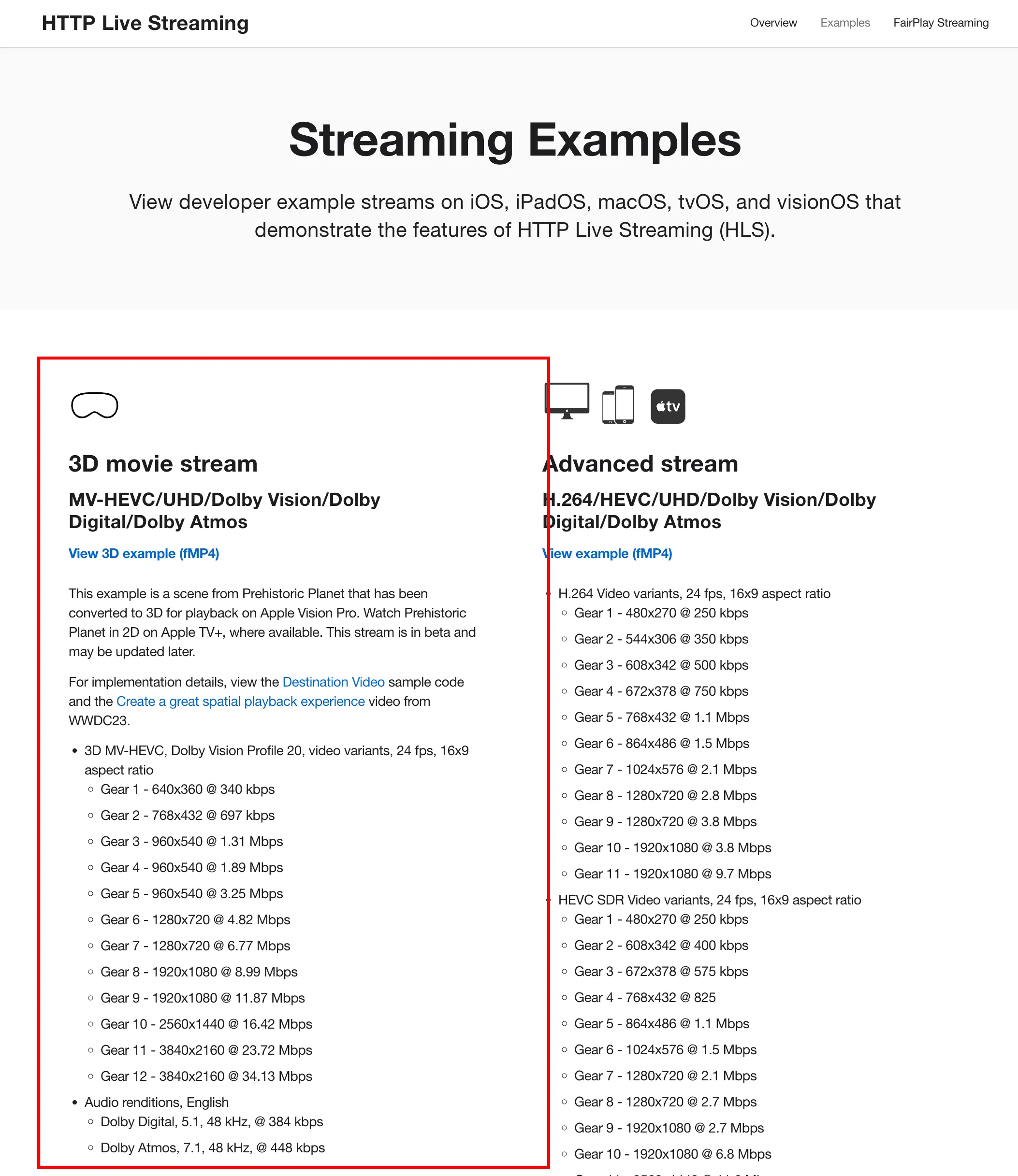

Apple Releases Spatial Video Examples

Keywords: visionOS, Spatial Video

On the Apple Developer Streaming Examples page, Apple has provided an example based on the 3D movie Prehistoric Earth. However, the video link plays only in Safari on a physical Apple Vision Pro; it does not play in the simulator or in browsers on a Mac (possibly because current browser versions do not support playback of this HLS format).

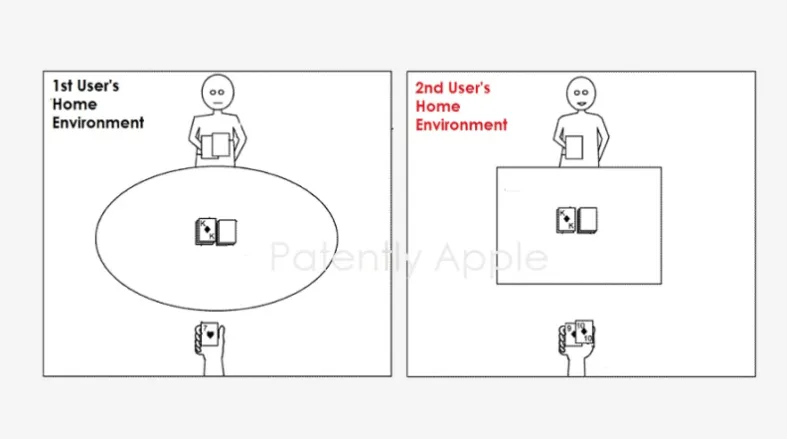

Apple’s new patent application: Cross-device multiplayer games in FaceTime, but with real objects (I really have printed playing cards in my hand!)

Keywords: Patent, FaceTime, Cross-device, XR Multiplayer Games

At this year’s WWDC and in later videos, Apple talked about creating SharePlay experiences in FaceTime on visionOS. A patent application recently revealed by PatentlyApple (Application No. 20230293998) suggests this experience could be taken further.

It goes beyond playing the same virtual game across devices and operating systems (iOS, iPadOS, visionOS) in FaceTime: the application describes using fiducial markers to bring real-world multiplayer games into FaceTime. For example, you wear Apple Vision Pro and play poker over FaceTime with a friend on an iPhone, both of you holding physical playing cards. Rather than simply showing the cards on video (which may not be legible), the patent proposes using holographic projection or a similar form to bring the cards in your hand into your friend’s space. Or, when your friend hands you a “real” item, the technology might generate a “real” projection in your hand, simulating the effect of actually passing it to you. This blurs the boundary between the real and the virtual, realizing the idea of extended reality and bringing people closer together.

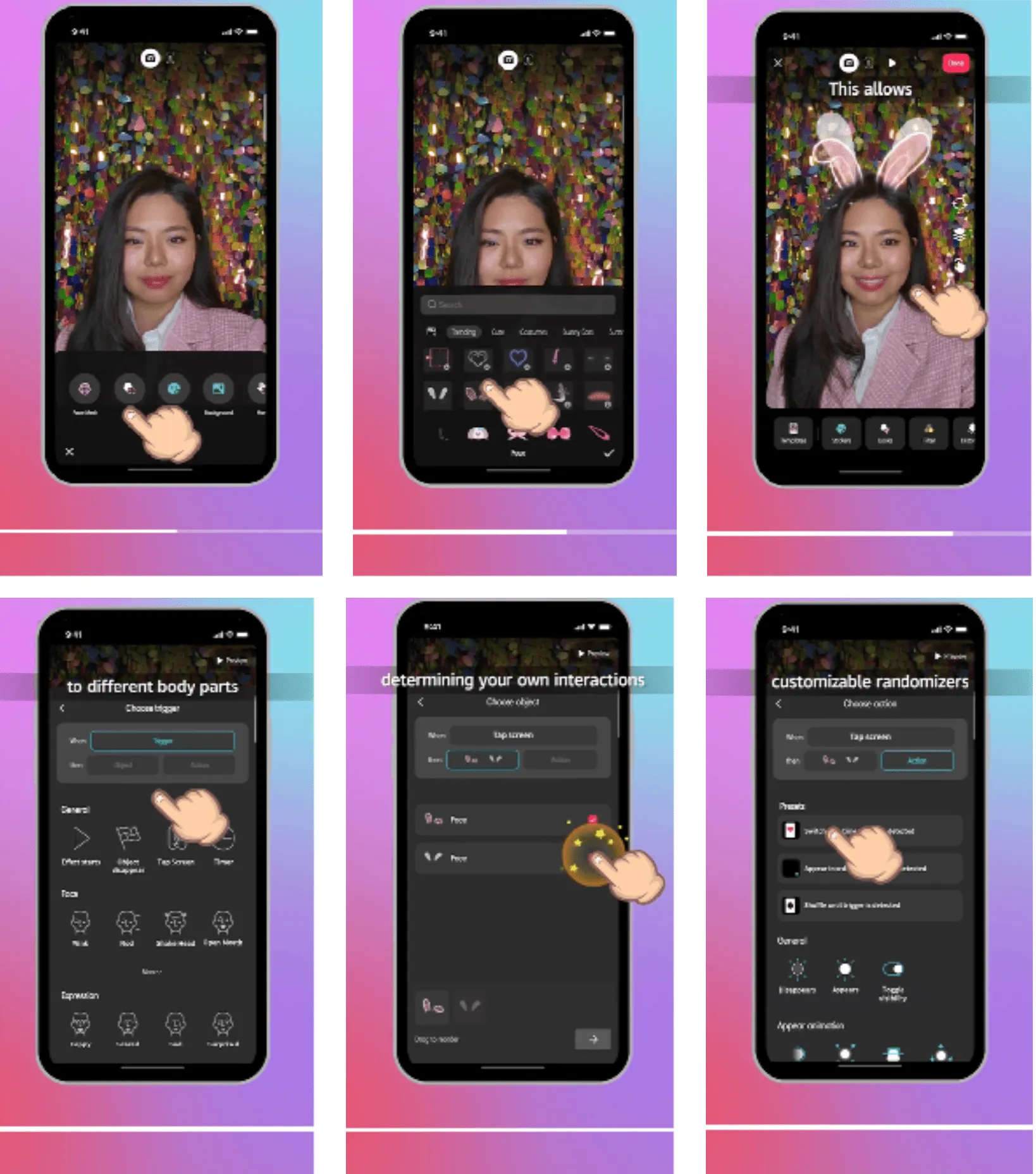

TikTok Adds AR Filter Editing to Mobile Version

Keywords: AR, TikTok, EffectHouse

After launching EffectHouse, its desktop AR creation software modeled on the AR camera app Snapchat, TikTok recently announced a feature that lets users create AR filters directly in the TikTok mobile app, with no need to download EffectHouse separately (though the desktop EffectHouse can still create richer effects). Users can combine more than 2,000 assets from the mobile AR filter library. TikTok previously set up a $6 million creator fund to encourage AR filter production, and it is currently revamping its latest creator program.

Note: For some reason, TikTok later took down the tutorial video.

As the screenshot of the video tutorial below shows, on mobile users select a FilterBase and a Trigger, much like a simplified version of the modules in desktop EffectHouse and Unity; they can then combine different 3D assets, Triggers, and tracking Anchors to build a filter. AR filters created on mobile are also eligible for the creator program’s benefits.

Microsoft: AR Plus AI for Industry, a Two-Pronged Boost to Efficiency!

Keywords: AR, AI, Copilot, Microsoft

Earlier this year, Microsoft introduced Copilot for office workers. Now it is bringing this AI assistant to its mixed reality application Dynamics 365 Guides, aimed at factory technicians, with the goal of helping them work better and spend less time managing and maintaining equipment. On-site workers no longer have to do everything themselves: they can talk to Copilot using natural language and gestures to complete daily tasks, and Copilot assists by displaying relevant technical documentation, service records, and other data sources on the device. This enables easier conversational interaction and the creation of 3D models, among other things. And as experienced employees retire, the assistant helps companies retain their knowledge. Copilot is currently in preview and will be released first on Microsoft’s HoloLens 2 headset, and later on mobile devices.

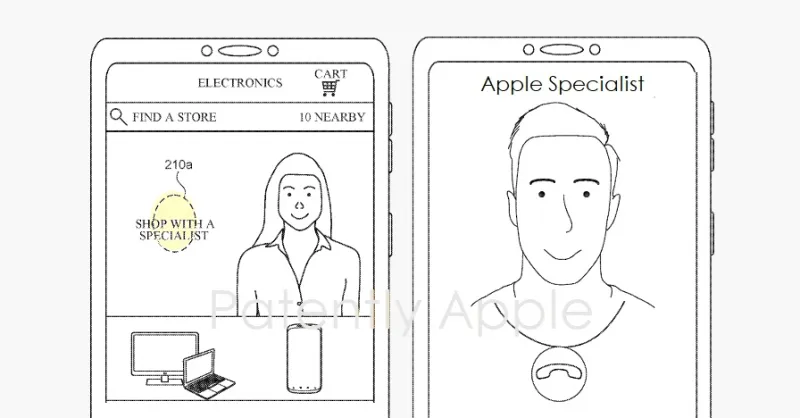

Apple’s New Patent: From Genius Bar to Your Living Room, Using AR to Promote New Product Purchases

Keywords: Patent, AR, Sales, Apple

Apple has undoubtedly been driving industry integration and technological progress, and marketing remains crucial for the company. A patent recently granted to Apple and reported by PatentlyApple (Patent No. 11816800, filed in 2021) suggests that Apple may use AR to sell its products in the future. Even if there is no Apple Store within 500 miles, a remote sales associate could appear in the customer’s living room as an avatar and show and demonstrate products in real time.

Apple also plans to combine AR-guided shopping with online chat, so customers can ask questions and get help while seeing products come to life in AR. It’s like having a personal shopping advisor who helps you make the best choice for your needs.

It appears that Apple is truly pushing the boundaries of AR technology. They are not only making it easier for customers to access this technology but also enhancing the overall shopping experience. I can’t wait to see how these features develop and improve in the future!

Writing at the End

Whether it’s quality information you’ve come across or great content you’ve written, you can contribute it via a GitHub Issue 😉.

Contributors to This Issue

| Contributor | Image |
|---|---|
| Onee |  |
| XanderXu |  |
| Puffinwalker.eth |  |


XReality.Zone
