XR World Weekly 008

Preface

Before we knew it, we had been writing XR World Weekly for almost two months. We have grown from stumbling beginners into editors who can put each issue together with ease. Thanks to the rapid growth of the XR world, we find plenty of fun things to share with you in every issue.

However, as the industry develops, we have found that our perspective may not keep up with its pace. Although we have worked hard to improve this, keeping up with a thriving industry is not easy.

So we decided to draw on everyone's strength. Whether you are a practitioner or a fan of the XR industry, if you come across interesting XR-related content in your daily information flow, you are welcome to contribute it to XR World Weekly. For accepted submissions, we will credit the contributor in the corresponding issue. Remember to fill in your social media link in the form~

You can submit articles through GitHub Issues.

We look forward to contributions from every friend, and to high-quality content that makes XR development easier and brings more friends into creating in the XR field. Without further ado, please enjoy issue 008 of XR World Weekly, carefully prepared for you~

Table of Contents

- BigNews

- With Reality Composer Pro, you can now record high-quality demo videos with Apple Vision Pro!

- Now you can experience 3D photos in the visionOS Simulator.

- Idea

- Spatial Interface: From Hand Interaction to Spatial Interaction.

- The visionOS Demos that can open your mind

- Video

- How do you develop on Tilt Five?

- Real and Ethereal: We Reconstructed His Room With 90 Million Light Points

- Article

- XR - An Awesome List for Understanding the XR World

- Where did the shadows go? Troubleshooting Object Shadow Rendering on Apple Vision Pro

- Code

- Apple Vision Pro UI Kit - Rapidly prototype visionOS interfaces in Unity.

BigNews

With Reality Composer Pro, you can now record high-quality demo videos with Apple Vision Pro!

Keywords: Reality Composer Pro, Apple Vision Pro, Spatial Video, Spatial Screenshot

On Apple Vision Pro, recording high-definition demo videos is not simple. Because of optimizations such as eye-tracking-based rendering, a recording of exactly what reaches the user's eyes would contain a lot of blurry content: areas outside the center of the user's gaze are rendered at lower resolution.
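To see why a direct capture looks blurry, here is a minimal sketch of gaze-dependent (foveated) resolution selection: the farther a point is from the gaze direction, the lower the resolution it is rendered at. The tier boundaries and scale factors are hypothetical; visionOS does not publish its values, and real foveated rendering happens on the GPU rather than per point.

```python
import math

# Hypothetical foveation tiers: (max angle from gaze in degrees, resolution scale).
# These numbers are illustrative assumptions, not Apple's actual values.
FOVEATION_TIERS = [
    (5.0, 1.0),     # foveal region: full resolution
    (15.0, 0.5),    # near periphery: half resolution
    (180.0, 0.25),  # far periphery: quarter resolution
]


def angle_from_gaze(gaze, point):
    """Angle in degrees between the gaze direction and the direction to a point.

    Both arguments are 3D vectors measured from the eye.
    """
    dot = sum(g * p for g, p in zip(gaze, point))
    norm = math.sqrt(sum(g * g for g in gaze)) * math.sqrt(sum(p * p for p in point))
    # Clamp to avoid math domain errors caused by floating-point rounding.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def resolution_scale(gaze, point):
    """Pick the resolution scale of the first tier whose angle covers the point."""
    angle = angle_from_gaze(gaze, point)
    for max_angle, scale in FOVEATION_TIERS:
        if angle <= max_angle:
            return scale
    return FOVEATION_TIERS[-1][1]
```

A screen recording captures this mixed-resolution output as-is, which is why a dedicated capture mode that relaxes these optimizations produces cleaner demo footage.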

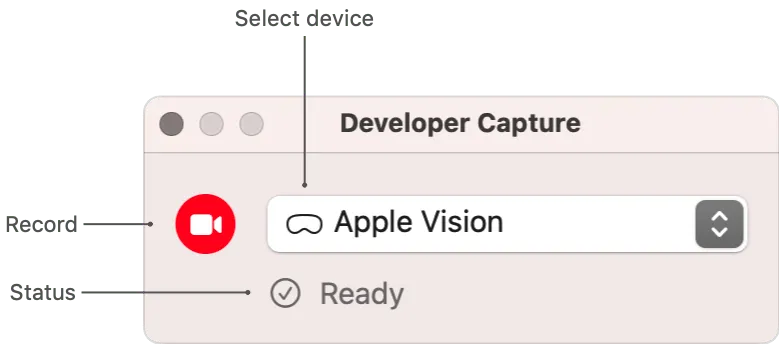

As visionOS keeps iterating, Apple's official website has gradually published richer developer documentation. In the recently published article Capturing video from Apple Vision Pro for 2D viewing, Apple details how to use Developer Capture in Reality Composer Pro to record high-quality demo videos.

While a demo video is being recorded, visionOS turns off some of these optimizations so that developers can capture high-quality footage. Note that because this feature is intended only for recording demo videos, a recording ends automatically once it exceeds 60 seconds.

Now you can experience 3D photos in the visionOS Simulator.

Keywords: visionOS, Simulator, Spatial Video, Spatial Photo

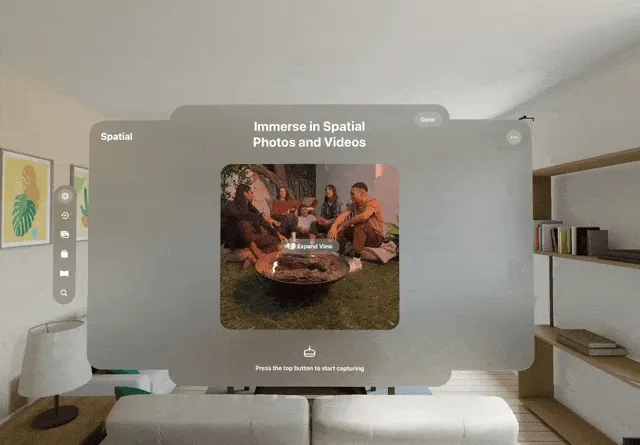

As visionOS keeps iterating, official apps are being updated alongside the developer documentation. In the latest visionOS beta 3, the system Photos app already gives a rough sense of what viewing spatial photos is like. X user M1 also mentioned this in a post.

Meanwhile, to help everyone better understand spatial videos and spatial photos, we have also written a dedicated article: What is spatial video on iPhone 15 Pro and Apple Vision Pro? A detailed explanation of how spatial video and spatial photos work.

Once you understand the principle behind spatial video, we believe you will appreciate how much effort went into the spatial video effect shown by Krishna Jagadish.🤗

Idea

Spatial Interface: From Hand Interaction to Spatial Interaction

Keywords: Spatial Interface, Apple Vision Pro, ATAP

Apple Vision Pro takes us into a new era of spatial computing. On this new three-dimensional platform, many of the interactions we are familiar with may be rewritten. Although for the foreseeable future most familiar apps will still run on Apple Vision Pro in two-dimensional form, new and creative three-dimensional interaction methods are worth planning for and thinking about early. Today I want to share the hand interaction designs and spatial interaction ideas that Google's ATAP (Advanced Technology & Projects) team explored a few years ago.

First: Hand Interaction

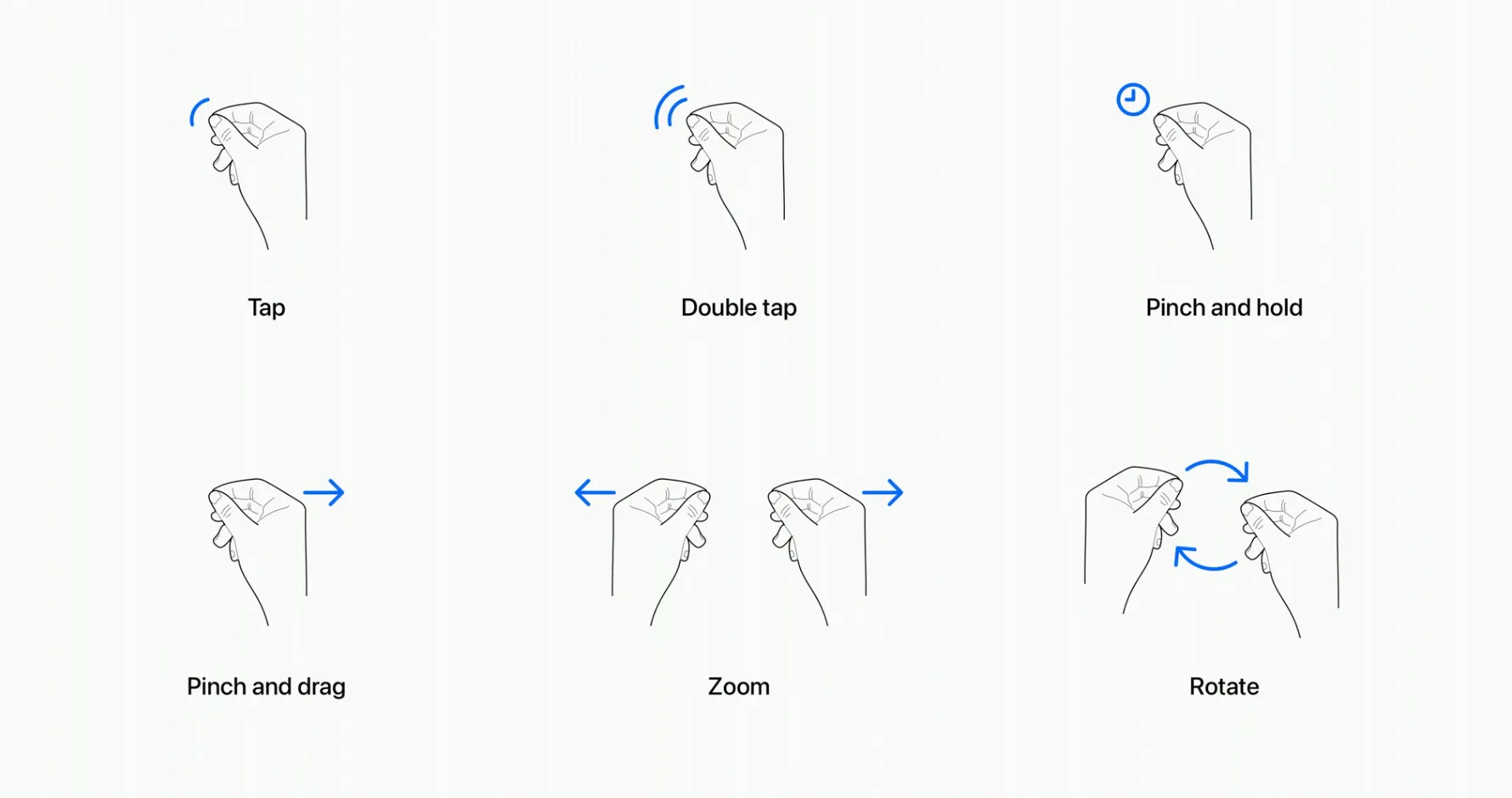

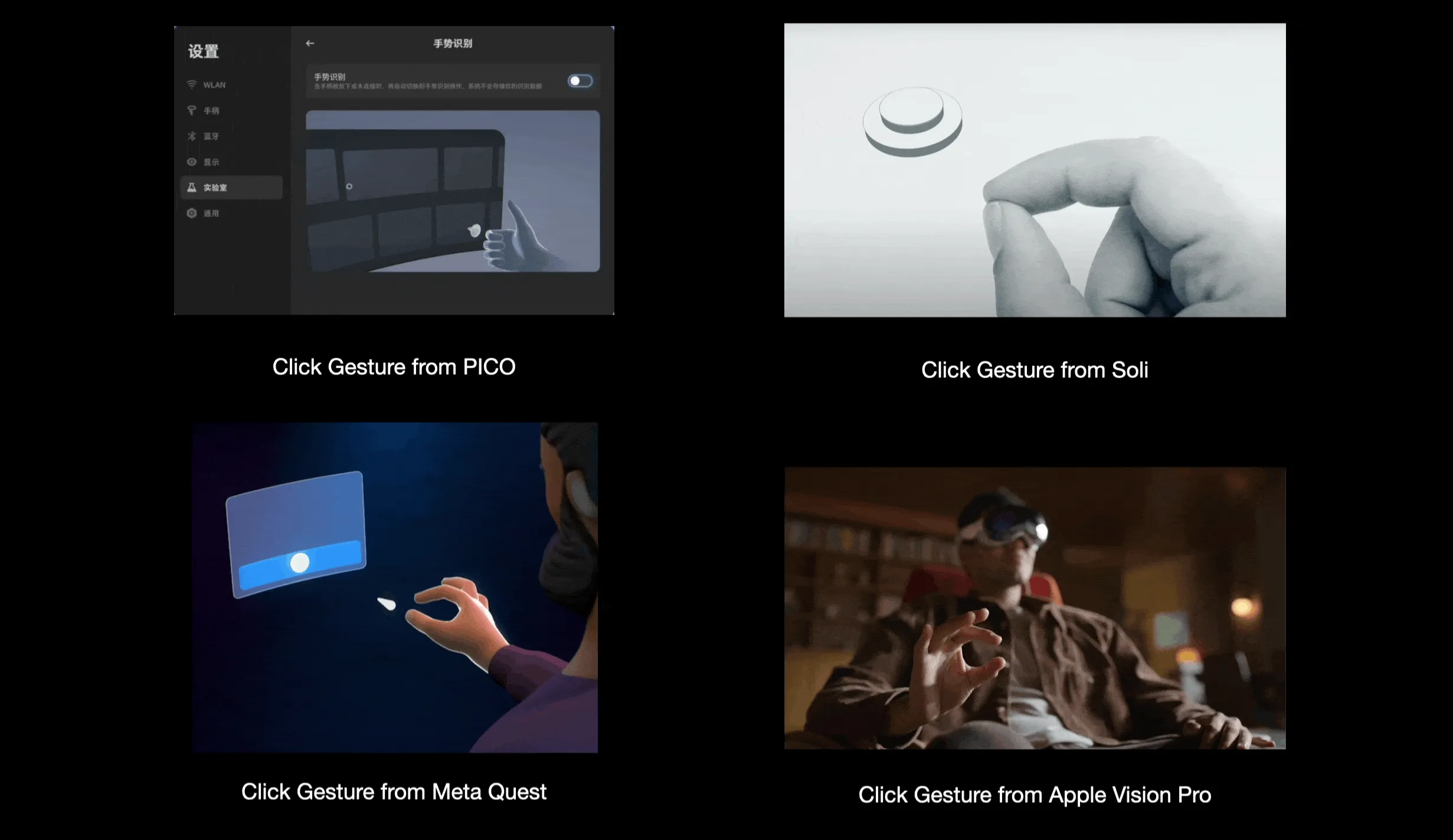

On Apple Vision Pro, interactions such as spatial taps, planar swipes, and rotations can be performed easily with eye movement and finger pinches, but some gestures require both hands to work together. One reason is the speed and accuracy of gesture recognition; another is users' existing habits.

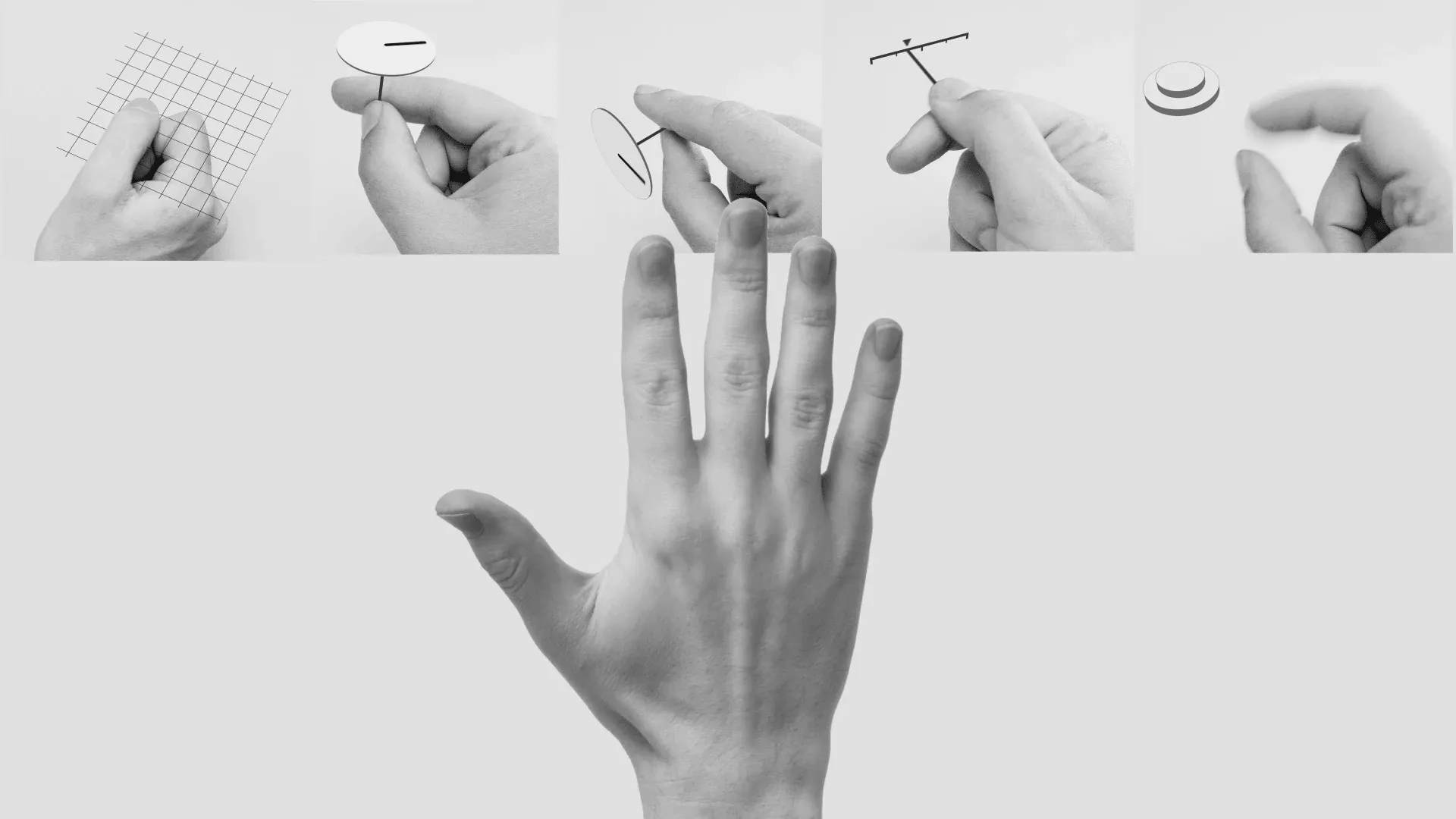

If we set aside these algorithmic constraints for a moment, could some complex gestures be performed with one hand? And are there other ideas for gesture interaction? The ATAP team explored some interesting gesture designs in the Soli project, including turning a dial, moving along coordinates, and, of course, the familiar button press. (One user on X shared an illustration of the hand to make the point that the hand is the only interaction medium we need.)

Beyond designing gestures that are easier for algorithms to recognize, or more intuitive for people, we also need to account for cultural differences. For example, on Meta's devices, Apple Vision Pro, and the Soli project mentioned above, confirmation is implemented by pinching the thumb and index finger together. Considering cultural differences across regions, you could instead implement it by tapping the thumb against the index finger, as PICO does.
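The thumb-index pinch is usually recognized from tracked fingertip positions. As a minimal sketch, assuming a hand tracker that reports 3D fingertip positions in meters, a pinch can be detected by thresholding the thumb-to-index distance, using two thresholds (hysteresis) so the state does not flicker near the boundary. The thresholds and the `PinchDetector` class are hypothetical; this is not how Apple, Meta, or PICO actually implement their recognizers.

```python
import math

# Hypothetical thresholds in meters; real systems tune these per device and user.
PINCH_START = 0.015  # fingertips closer than 1.5 cm: pinch begins
PINCH_END = 0.03     # fingertips farther than 3 cm: pinch ends


class PinchDetector:
    """Detects a thumb-index pinch from fingertip positions, with hysteresis."""

    def __init__(self):
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        """Feed one frame of fingertip positions; return whether a pinch is active."""
        distance = math.dist(thumb_tip, index_tip)
        if not self.pinching and distance < PINCH_START:
            self.pinching = True
        elif self.pinching and distance > PINCH_END:
            self.pinching = False
        # Between the two thresholds, the previous state is kept.
        return self.pinching
```

The gap between the start and end thresholds is the design choice that matters here: a single threshold would toggle rapidly when tracking noise hovers around it.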

Second: Spatial Interaction

Apple not only lets developers run existing iPad apps on Apple Vision Pro, but also encourages everyone to build new native visionOS apps. Here is a little inspiration for creating interesting apps in three-dimensional space.

Ivan Poupyrev, a member of the ATAP team, shared on X a spatial interaction his team designed. It reveals details of a garment step by step according to the distance between the user and the screen: Zone 1 shows the style, Zone 2 shows the specific material and price, and Zone 3 shows washing instructions. The background of this design is that in the United States, offline retail was growing faster than e-commerce. The original intention was to help physical stores connect shoppers with physical garments and to surface details that are usually overlooked (such as washing instructions). Using the user's distance in space to decide what content to present is a brand-new attempt at spatial interaction.

This spatial interaction does not rely on additional cameras, sensors, or other auxiliary devices. Although we do not know how it is implemented, it is inspiring: when it comes to spatial interaction, there is still a great deal left to explore.
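Setting aside how the distance is sensed (which, as noted, we don't know), the content logic itself is simple. Here is a minimal sketch, assuming a distance estimate in meters is already available and assuming Zone 1 is the farthest zone, as the step-by-step reveal suggests. The zone boundaries are hypothetical.

```python
# Hypothetical zone boundaries in meters, ordered from far to near.
# The actual distances used in ATAP's demo are not known.
ZONES = [
    (2.0, "Zone 1", "style"),
    (1.0, "Zone 2", "material and price"),
    (0.0, "Zone 3", "washing instructions"),
]


def content_for_distance(distance_m):
    """Return (zone, content) for the user's distance; nearer zones reveal finer detail."""
    for min_distance, zone, content in ZONES:
        if distance_m >= min_distance:
            return zone, content
    # Negative distances are invalid input; fall back to the nearest zone.
    return ZONES[-1][1], ZONES[-1][2]
```

In a real installation you would likely also add hysteresis around each boundary, for the same flicker reasons as with gesture thresholds.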

The visionOS Demos that can open your mind

Keywords: visionOS, Physics, Spatial Video, Ads

The arrival of Apple Vision Pro has opened up a new space for developers and creators to exercise their imagination. Sure enough, people from all walks of life on X have been showing off the interesting scenarios that spatial computing makes possible.

Although Twitter has been renamed X, Wenbo Tao still misses the original little blue bird, so he brought the classic blue bird back in the visionOS simulator.

https://twitter.com/tracy__henry/status/1700662889110278241

Beyond this, since spatial computing devices are inherently "infinite screens", Wenbo Tao also came up with an interesting card-based interface interaction.

https://twitter.com/tracy__henry/status/1697610761634484323?s=61&t=PbZXIQCK2Zv6aKBxslrPFA

Watching a movie alone can get a bit boring. To improve the viewing experience, Steven Howard came up with a way for characters from the show to watch along with you. For example, while you watch Rick and Morty, the mad scientist Rick suddenly pops out from the side of the video and watches the episode with you. (Steven Howard also kindly explained how it works in the original post: just use a video with a transparent background.)

https://x.com/stevnhoward/status/1697698434894139495?s=20
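A video with a transparent background carries an alpha value for each pixel, and the player blends each frame over whatever is behind it. As a minimal sketch of that blending, here is the standard "over" operator with straight (non-premultiplied) alpha, assuming RGBA components normalized to [0, 1]. On visionOS the system handles this on the GPU, and we don't know the exact format Steven Howard's demo used.

```python
def composite_over(fg, bg):
    """Blend one foreground pixel over an opaque background pixel.

    fg is (r, g, b, a) with straight alpha in [0, 1]; bg is an opaque (r, g, b).
    """
    r, g, b, a = fg
    return tuple(a * f + (1.0 - a) * c for f, c in zip((r, g, b), bg))


def composite_frame(fg_frame, bg_frame):
    """Apply composite_over to every pixel of two equally sized frames (lists of rows)."""
    return [
        [composite_over(f, c) for f, c in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(fg_frame, bg_frame)
    ]
```

Pixels with alpha 0 (the "transparent background") leave the room behind them untouched, which is what lets Rick appear to step out beside the screen.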

Building on this idea, he also explored a new way to present video ads on spatial computing devices. (For a moment, I couldn't tell whether this was a good idea or a bad one🤨)

https://x.com/stevnhoward/status/1699797701297410287?s=46&t=Gccgna5KEzyhzQW-I8cMqA

Video

How do you develop on Tilt Five?

Keywords: Tilt Five, Unity, Unreal

Tilt Five is an AR peripheral and game company founded in 2017. Unlike other AR manufacturers, it focuses on the highly specialized niche of tabletop games.

Tilt Five also has a distinctive design. It uses a special optical system to project the image onto the game board, which then reflects the image back to the user's eyes. This design lets users see the real world and the virtual world at the same time, without the virtual content being disrupted by the real world.

A device like this naturally needs active developer participation to bring more fun games to users. Currently, like PICO, Tilt Five supports three development approaches:

- C (similar to developing with the Android NDK)

- Unity

- Unreal

At present, however, Tilt Five development is only possible on Windows (because the glasses' driver only supports Windows); macOS support is expected in 2024. Dilmer Valecillos recently released a video showing how to develop for Tilt Five with Unity and Unreal.

In Unity, he built a simple demo of a 3D character moving around an indoor scene.

In Unreal, he built a simple demo that uses the controller to simulate physics effects.

Real and Ethereal: We Reconstructed His Room With 90 Million Light Points

Keywords: LiDAR, Memory, PointCloud

What is the value of XR technology? In our opinion, among its many values, the most indispensable one is expanding the way we see and remember the world.

In Mediastorm's latest video, Real and Ethereal: We Reconstructed His Room With 90 Million Light Points, the team worked with digital artist SOMEI 烧麦晟, using LiDAR, a technology common in the XR industry, to recreate SOMEI 烧麦晟's grandfather's room as a point cloud.

Although scanning rooms with LiDAR for modeling is nothing new, few people use it to tell a good story. This reminds us that even simple technology, used in the right place, can tell a good story.
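A point cloud is simply a large set of 3D points. As a minimal sketch of where such points come from, assuming a depth image (in meters) and pinhole camera intrinsics `fx`, `fy`, `cx`, `cy` are available, each valid depth pixel can be back-projected into camera space. A real scan like the one in the video would also merge many frames using the device pose and attach a color to each point; we don't know the exact pipeline Mediastorm used.

```python
def unproject_depth(depth, fx, fy, cx, cy):
    """Convert a depth map to camera-space 3D points using the pinhole model.

    depth is a list of rows of depth values in meters; 0 means no reading.
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid depth reading
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Repeating this for every frame of a walk-through, and transforming each frame's points into a shared world coordinate system, is how a room gradually accumulates millions of points.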

Article

XR - An Awesome List for Understanding the XR World

Keywords: XR, List, Big Picture

If you are new to XR, you have probably wondered: what does the XR world look like as a whole?

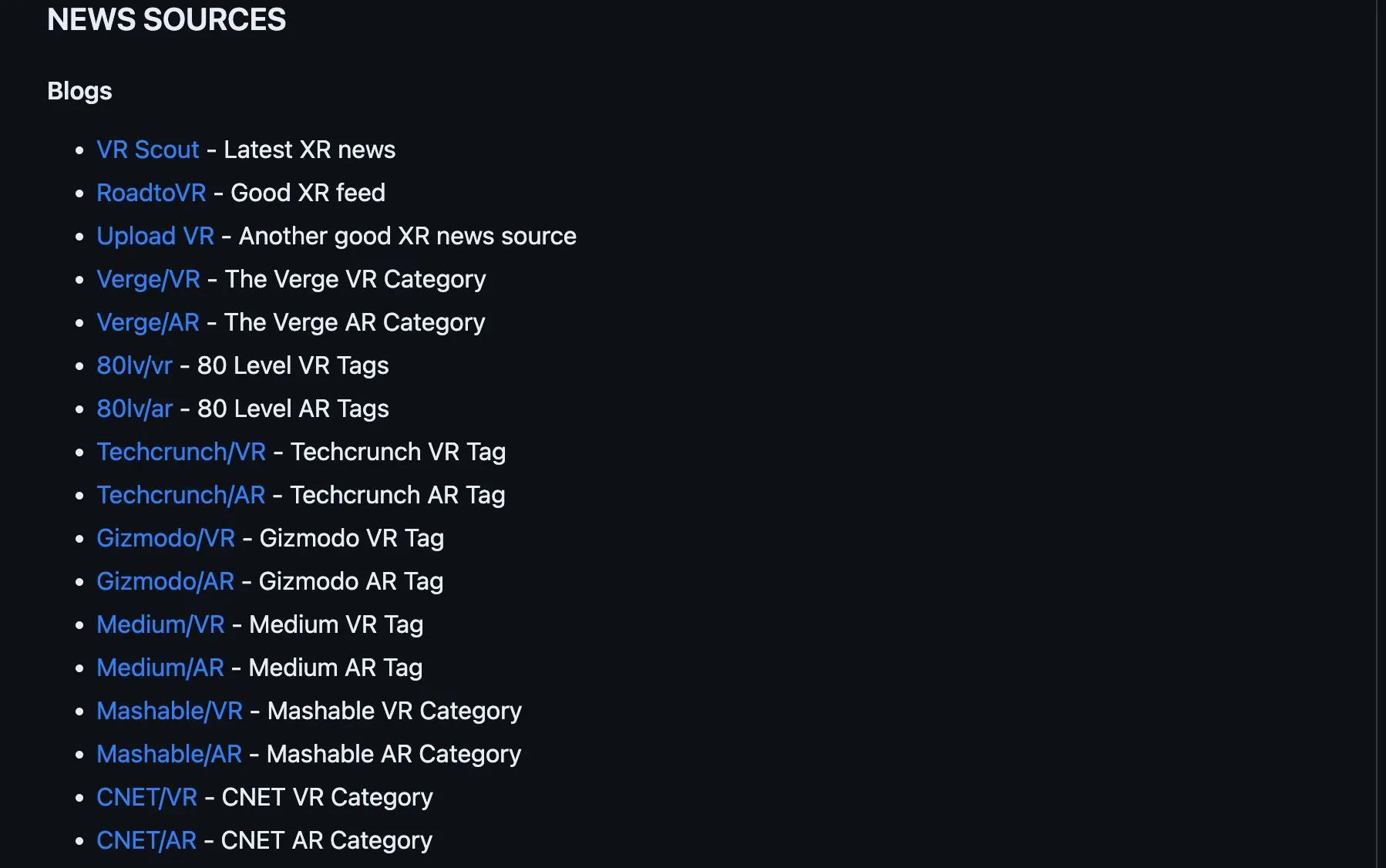

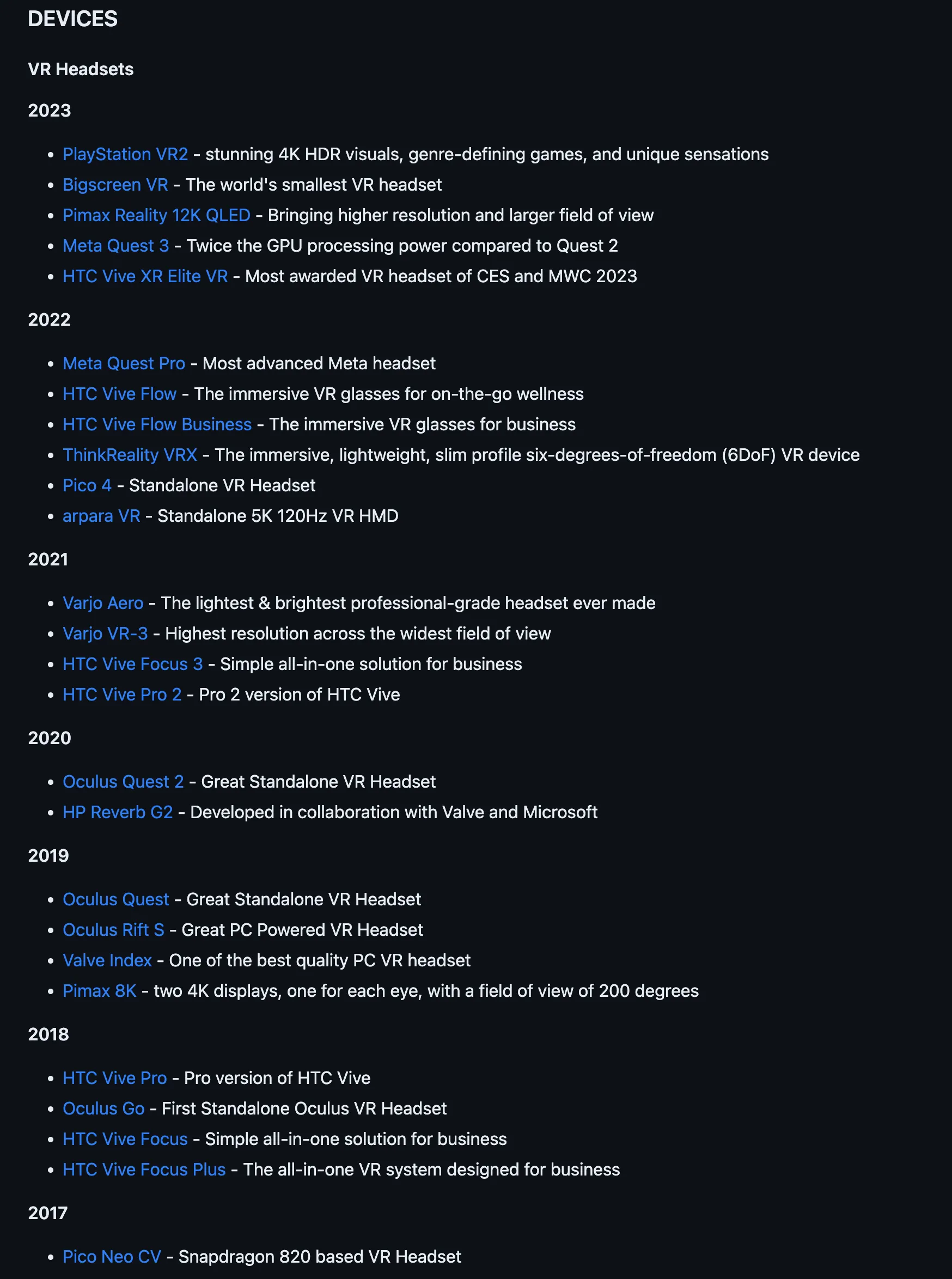

This is an extremely difficult question to answer, but fortunately there is now a list that offers a "panorama" of the field: A LIST CALLED XR. Give it a look.

The list starts with several introductory articles about XR. If you know nothing about XR, these articles are a good place to get up to speed.

Once you have a basic picture of the XR world, you can pick your favorite news sources to follow from the NEWS SOURCES section.

If you are unfamiliar with the various XR devices, the DEVICES section gives a rough overview of the devices in the XR world and when each appeared.

Where did the shadows go? Troubleshooting Object Shadow Rendering on Apple Vision Pro

Keywords: visionOS, ShadowComponent, Reality Composer Pro, ModelEntity

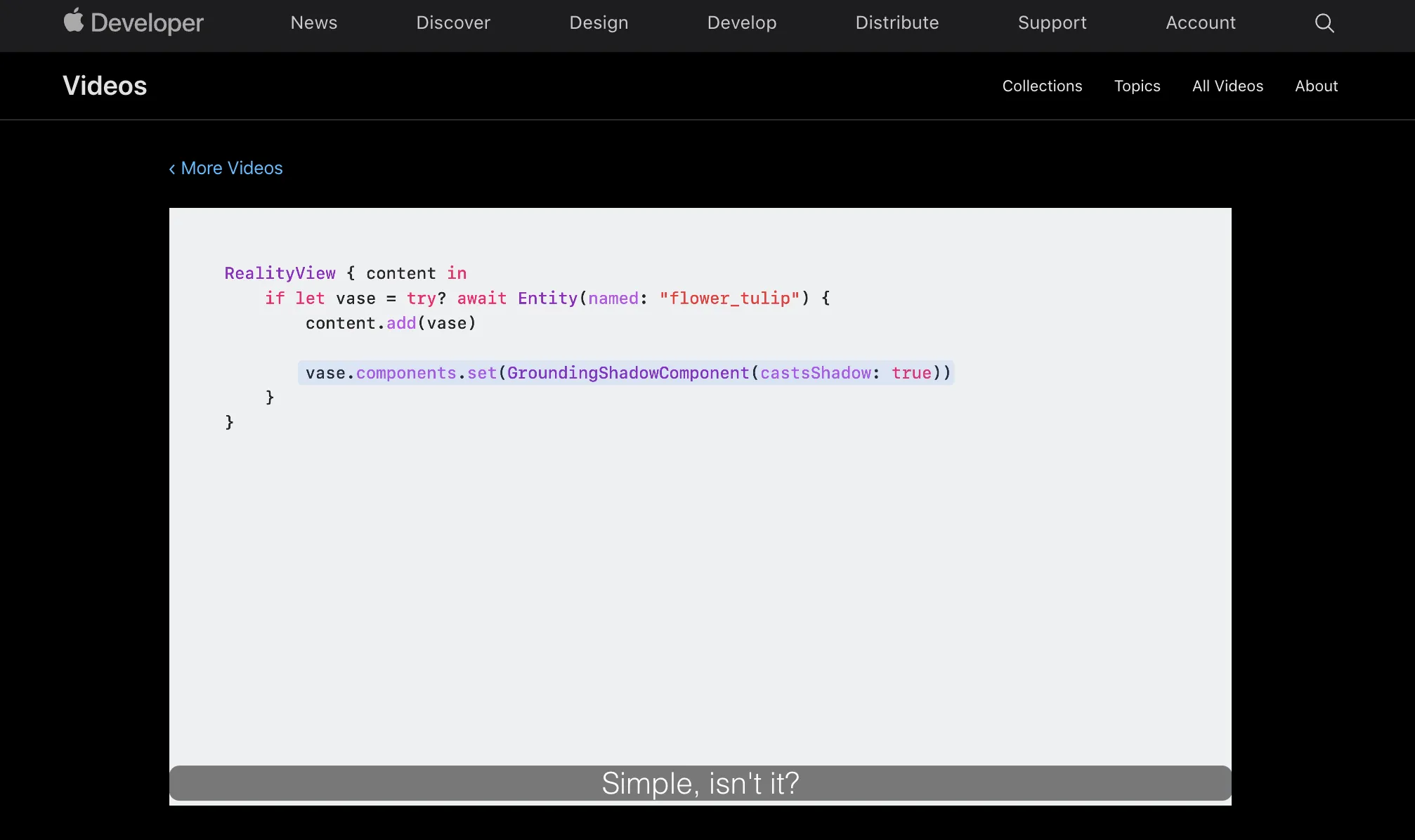

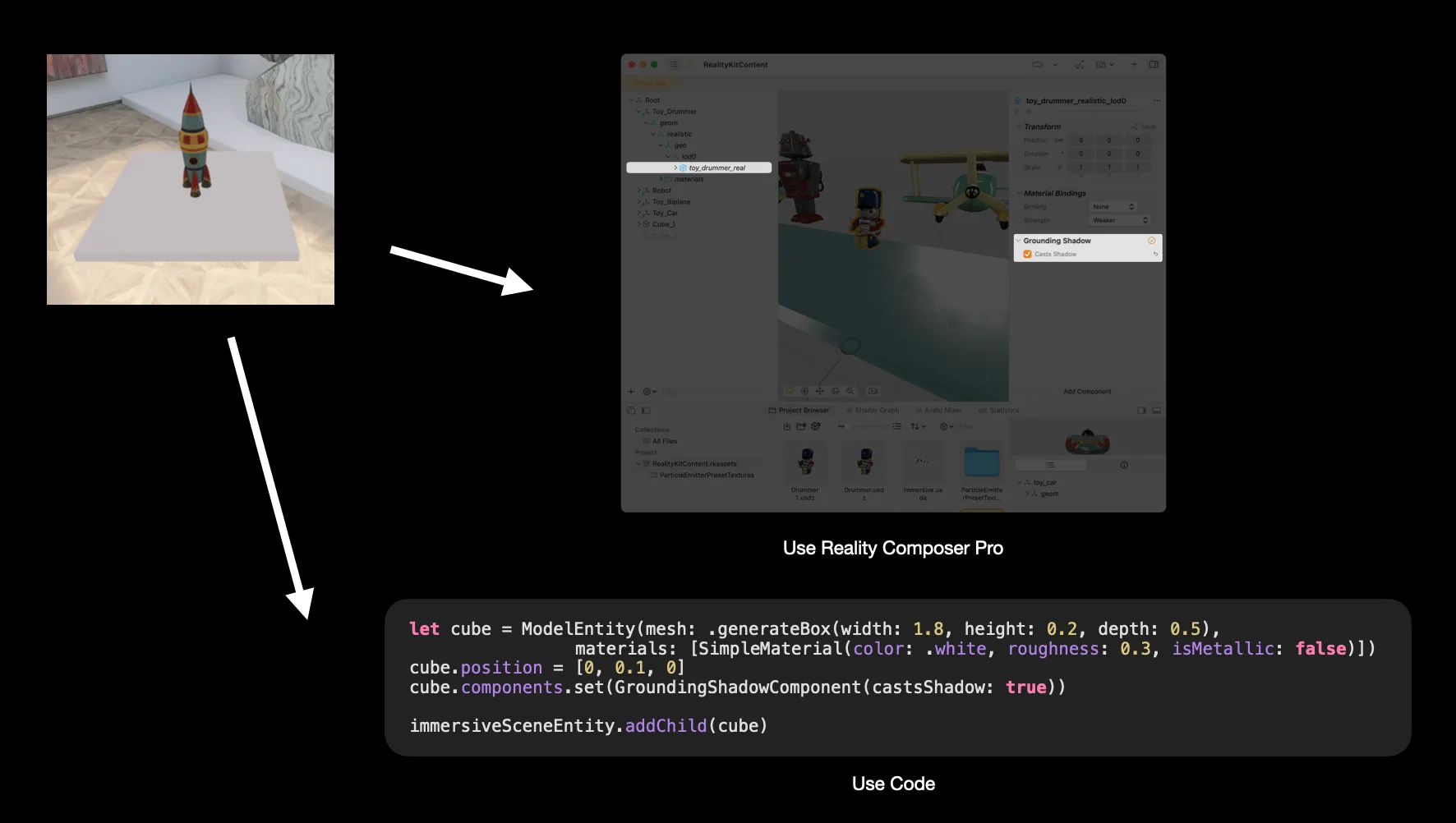

Shadows may be inconspicuous, but they are key to blending virtual objects seamlessly with the real world. If you have developed with ARKit, then with this year's WWDC videos it should be easy to add shadows to objects on Apple Vision Pro (really?).

In practice, Drewo Brich found that things are not that simple... If you want shadows where models occlude each other, in the current version you need to use GroundingShadowComponent. However, it only takes effect on a ModelEntity; setting it directly on a plain Entity does nothing. So how do you find the ModelEntity? There are two solutions:

- In Reality Composer Pro, find the ModelEntity (it has a special icon), add a Grounding Shadow component, and check its checkbox;

- Set it directly in code: find the ModelEntity programmatically and perform the same operation.
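The code route boils down to walking the entity hierarchy and attaching the shadow component only where a model is present. The sketch below is in Python with a hypothetical `Entity` class standing in for RealityKit's Entity and ModelEntity; in a real visionOS app this would be Swift, setting GroundingShadowComponent on each ModelEntity. Treat it as an illustration of the traversal, not as RealityKit code.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """Hypothetical stand-in for a RealityKit Entity; is_model marks a ModelEntity."""
    name: str
    is_model: bool = False
    children: list = field(default_factory=list)
    components: dict = field(default_factory=dict)


def enable_grounding_shadows(root):
    """Walk the hierarchy depth-first and add a grounding shadow to every model entity.

    Returns the names of the entities that were updated, in visiting order.
    """
    updated = []
    stack = [root]
    while stack:
        entity = stack.pop()
        if entity.is_model:
            # Only model entities get the component, mirroring the finding that
            # GroundingShadowComponent only takes effect on a ModelEntity.
            entity.components["GroundingShadow"] = {"castsShadow": True}
            updated.append(entity.name)
        # Push children in reverse so they are visited in their original order.
        stack.extend(reversed(entity.children))
    return updated
```

Scenes imported from Reality Composer Pro often wrap the actual model several levels deep inside plain entities, which is why a full traversal is safer than checking only the top-level entity.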

If you want the model to cast shadows in the simulator:

- In the current version (visionOS beta 3), shadows set in Reality Composer Pro have no effect;

- Setting them in code should work in theory, but the current simulator still has some issues, and the correct shadow is not visible from every position (so far, the correct shadow is known to appear near the stove in the Kitchen scene).

More information can be found on the Apple Developer Forums.

Code

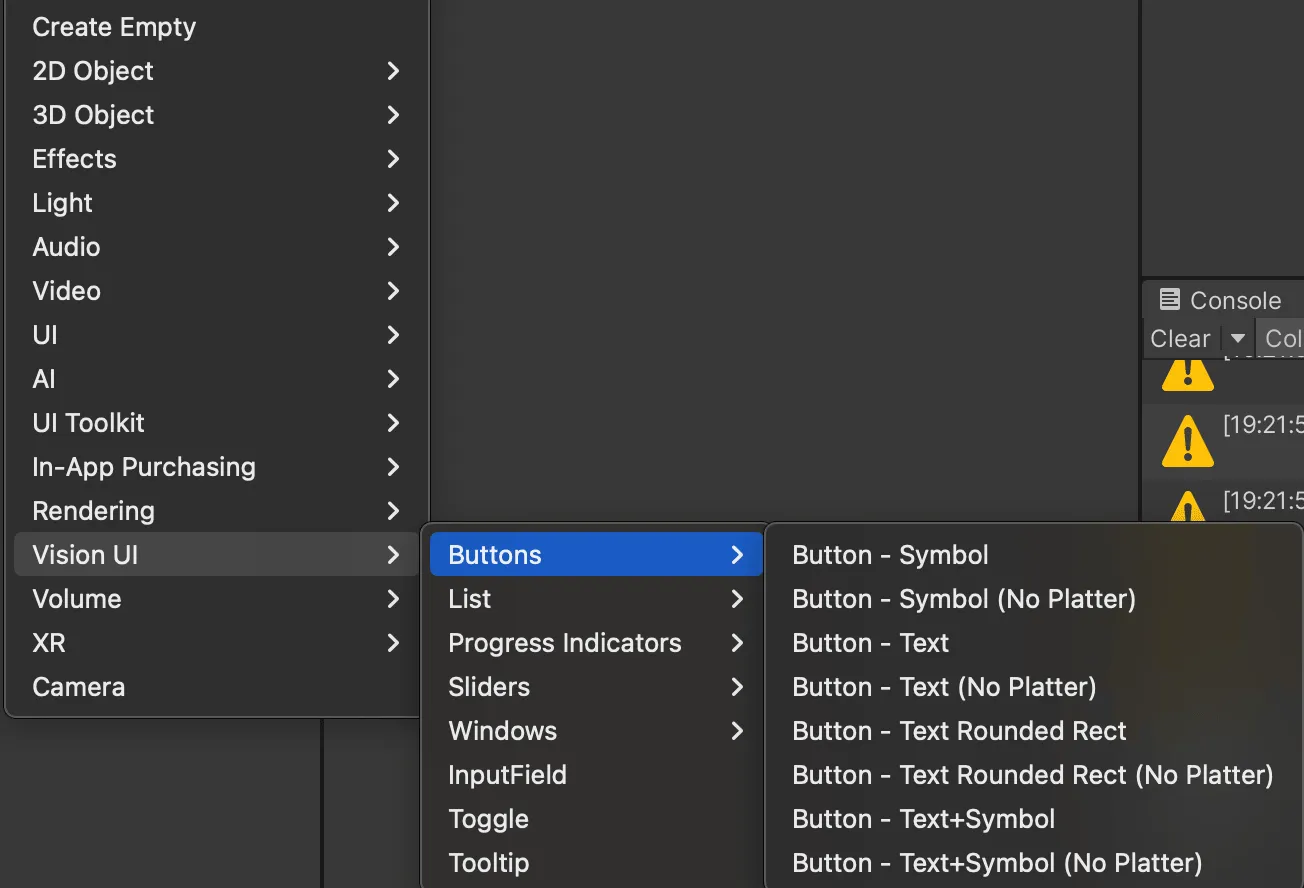

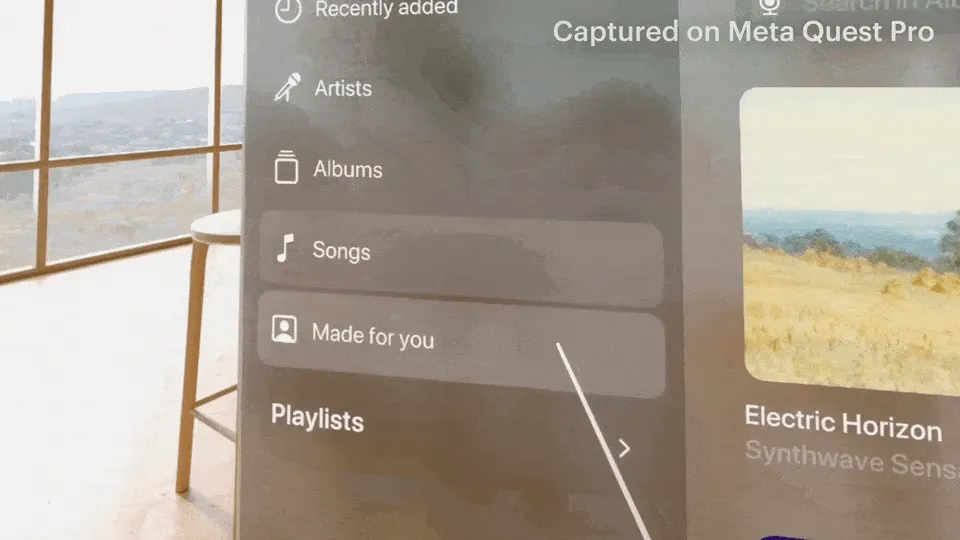

Apple Vision Pro UI Kit - Rapidly prototype visionOS interfaces in Unity.

Keywords: Unity, Apple Vision Pro, Design, Quest, PICO

If you are a product manager or designer who wants to quickly prototype a visionOS interface, until now your only option may have been the visionOS Figma template released by Apple. Now you have another choice: a design team called JetStyle recently released and open-sourced Apple Vision Pro UI Kit, a set of UI code that helps you quickly build visionOS-style 2D window interfaces in Unity.

Using it is simple: import it into your Unity project as a regular Unity package via its Git URL, and you can use the various UI components that JetStyle carefully recreated from the visionOS Figma design template.

Although Apple Vision Pro was announced more than three months ago, for most people its appeal is still limited to promotional videos. One reason is that 2D screens make it hard to convey the appeal of a spatial device.

However, if you have a Quest 2 or Quest Pro at hand, you can try the Apple Vision Pro UI Kit Demo directly.

If you only have a PICO headset, that's fine too. You can try the PICO installation package we have prepared to get a simple feel for 2D windows in visionOS.

XReality.Zone
