[Closed] What is OpenXR and how Qualcomm promotes OpenXR Technology

Qualcomm and Snapdragon Spaces

Qualcomm should be a familiar name to everyone. As the market-share leader in Android chips, Qualcomm has long paid close attention to the XR field. The chip inside the Quest headsets we all know comes from the Snapdragon XR series launched by Qualcomm.

Although its main business is chip manufacturing, Qualcomm’s ambitions do not stop there. To reach more end users in the XR field, Qualcomm has played to its natural advantage as a chip manufacturer: cross-device compatibility. In 2021, it launched the Snapdragon Spaces XR Development Platform. On this platform, any hardware product built on Qualcomm’s Snapdragon chips can further reduce its adaptation cost, because the manufacturer does not need to implement a complete OpenXR Runtime on its own. Upper-level application developers, in turn, can quickly build XR applications with the Snapdragon Spaces SDK.

We have just mentioned a new term: OpenXR. To explain what the Snapdragon Spaces SDK is and what it can achieve, we must first understand what OpenXR is.

What is OpenXR?

If we search on a search engine or directly seek help from ChatGPT, the answer we are likely to get is as follows:

OpenXR is an open standard for virtual reality (VR) and augmented reality (AR), designed by a working group managed by the Khronos Group. The Khronos Group announced OpenXR during GDC 2017 on February 27, 2017. On July 29, 2019, OpenXR 1.0 was released to the public by the Khronos Group at SIGGRAPH 2019.

Very good, after reading the explanation above, we may end up like the picture below:

Abstract concepts are always hard to grasp. A good way to understand something new is to first look at the problems that existed before it appeared. Let’s see what the world was like before OpenXR emerged.

Let’s take a look at the XR world before OpenXR appeared

Before OpenXR was announced in 2017, there were already many AR/VR products on the market, such as:

- HoloLens, released in 2016

- HTC Vive, released in 2016

- Oculus Rift, released in 2016

Although the products of various hardware manufacturers are different, the basic problems to be solved are actually similar. For example, everyone first needs to achieve 3DOF or 6DOF tracking of XR devices based on the sensors of the hardware devices; at the same time, for some hardware with controllers, real-time perception and tracking of the controllers are also required.

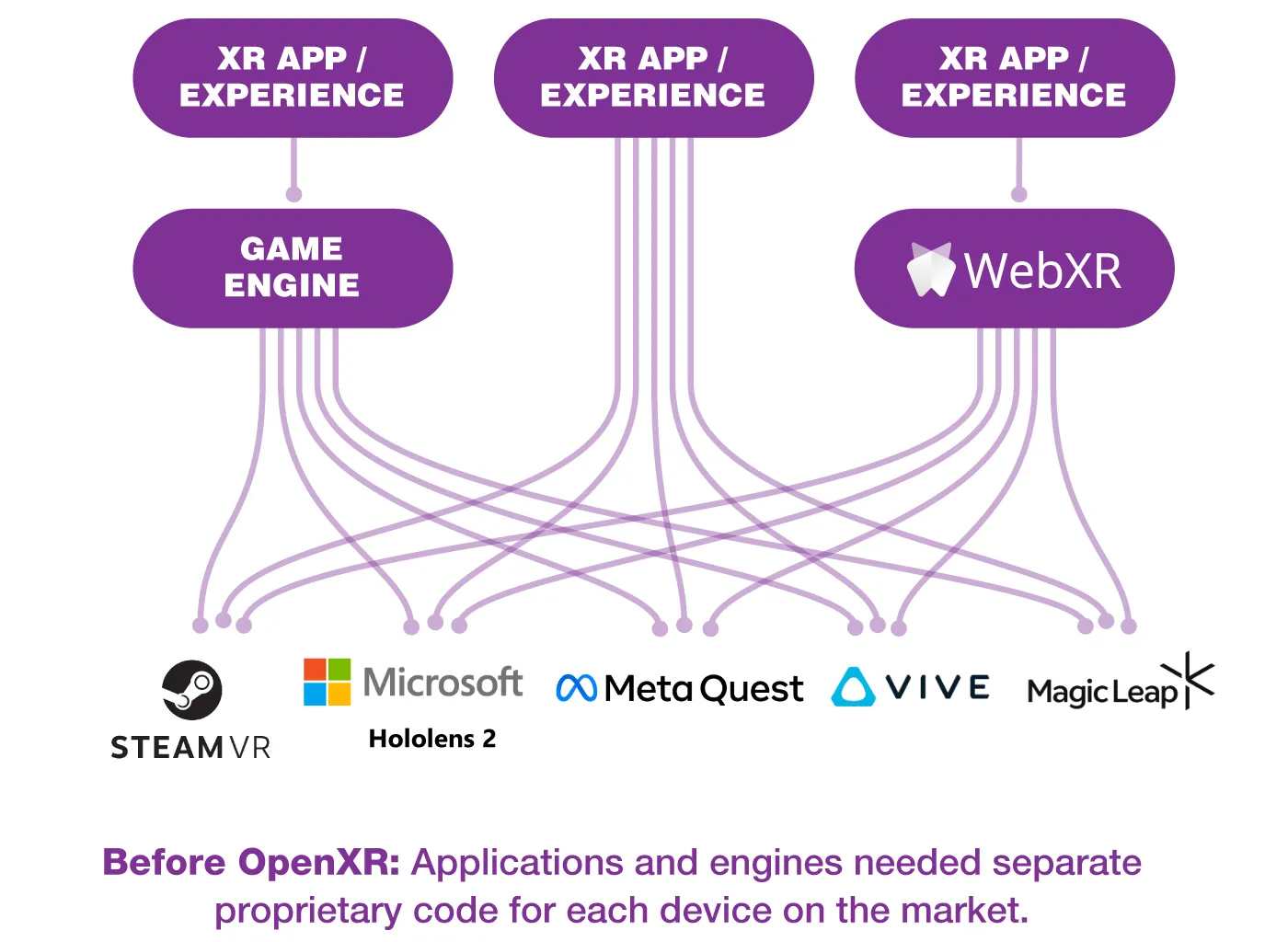

At the upper level, there are generally three types of developers who need to develop for these hardware products.

- Game engine developers: Game engine developers need to adapt their engines to new hardware products

- Application developers: Some application developers do not use a game engine and build directly on the hardware instead, so they also need to develop against the specific SDK of each device

- Web browser developers: With the emergence of WebVR (an early technology, released and promoted by Mozilla, that preceded WebXR), browser engines also need to be compatible with these XR hardware devices.

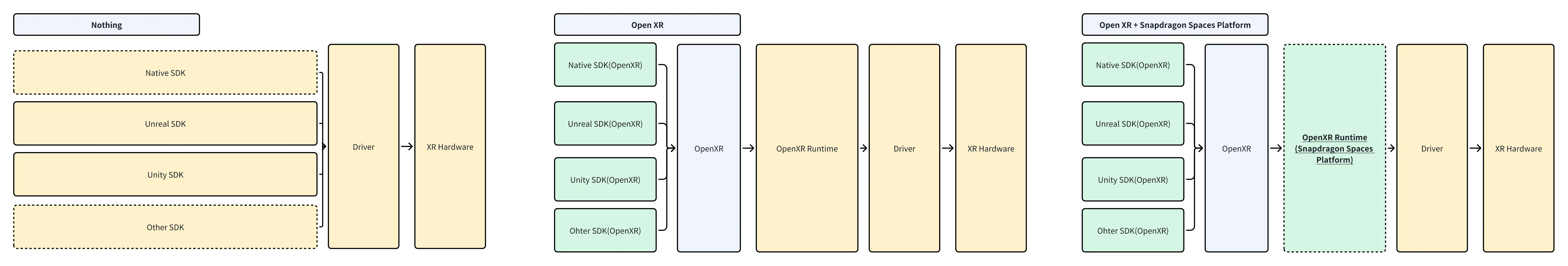

However, because the hardware differs, each company’s SDK exposes a completely different API for the same task. For upper-level engine developers, application developers, and browser developers, this brings great compatibility costs, resulting in the following situation:

Imagine you are the developer of a small “VR calculator” application and have just spent a month polishing it. You launch the app on SteamVR and then want to bring it to HoloLens, Quest, HTC Vive, and Magic Leap, only to find that each platform provides a completely different API, which means you have to start all over again. To launch on all of these platforms, you need to spend an additional four to five months learning and developing for each of them. How frustrating would that be?

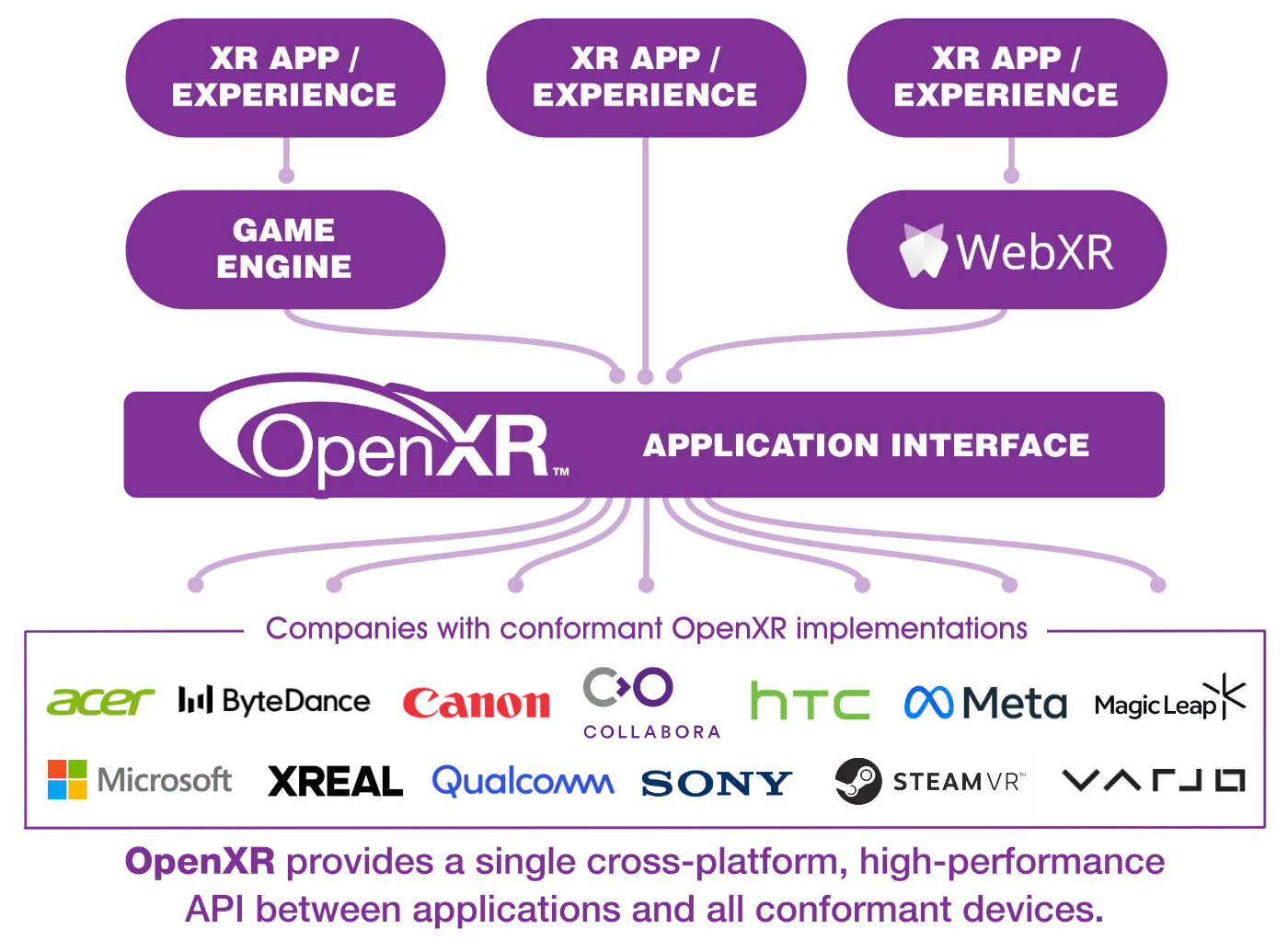

Therefore, to improve this situation, the Khronos Group announced OpenXR in 2017, hoping that a unified API would let upper-level engine developers, XR developers who work directly against the hardware, and browser developers who implement WebXR all face the same set of APIs and reduce their compatibility costs.

So, who is the Khronos Group that suddenly appeared?

Khronos Group and OpenXR

The Khronos Group was founded in 2000 by a group of leading companies in the computer graphics and multimedia industries. Its purpose is to promote the development of open standards to solve the problem of technological fragmentation in the industry. In other words, the Khronos Group was established precisely to “solve the problem of technological fragmentation”.

After the establishment of the Khronos Group, some public standards that we may now be familiar with were developed through the continuous efforts of the Khronos Group.

Among these standards, OpenGL and Vulkan are probably the two we know best. Before 2020, both iOS and Android supported the OpenGL standard, while Android has supported Vulkan, a more advanced graphics API standard, since 2016.

Tips

In fact, it is not uncommon for an industry to go through a period of chaos and then spontaneously form an organization that defines standards, which helps the industry move forward.

W3C maintains and publishes web standards to help front-end developers reduce the cost of compatibility across Chrome, Firefox, IE, and Opera.

More recently, AOUSD maintains and publishes the OpenUSD specification to facilitate compatibility between 3D content. (Our XR World Weekly 006 covers AOUSD news.)

So, at GDC 2017 (the Game Developers Conference, an annual event for game developers around the world), the Khronos Group announced its plan to release OpenXR. After two years of discussion and revision, it finally released OpenXR 1.0 at SIGGRAPH 2019 (Special Interest Group on Computer Graphics and Interactive Techniques, the annual event of the computer graphics industry).

In the design of OpenXR, the basic capabilities required by an AR/VR program, including controller tracking and view rendering, are abstracted into a series of APIs (complete documentation can be found in the OpenXR API Reference). These APIs are defined as C functions (the corresponding header files are in GitHub/OpenXR-SDK), and they all start with xr. For example, the following xrCreateInstance is an “entry function” used to create an OpenXR instance:

    // Provided by XR_VERSION_1_0
    XrResult xrCreateInstance(
        const XrInstanceCreateInfo* createInfo,
        XrInstance* instance);

Using this series of entry functions, upper-layer XR applications, or game engines and browser engines that support XR features can use C/C++ and other languages that can call C functions to build their own applications, similar to this:

    // Create an XrInstanceCreateInfo struct and initialize its type field
    // (a real application also fills in applicationInfo before the call)
    XrInstanceCreateInfo instanceCI{XR_TYPE_INSTANCE_CREATE_INFO};
    // Create the OpenXR instance
    OPENXR_CHECK(xrCreateInstance(&instanceCI, &m_xrInstance), "Failed to create Instance.");
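Note that OPENXR_CHECK in the snippet above is not one of the xr functions. Helpers like it are normally defined by the application or by sample code. As a minimal sketch of what such a helper might look like, the block below uses stand-in types so that it compiles without the OpenXR SDK. The names StandInXrResult, checkXrResult, SKETCH_OPENXR_CHECK, and fakeCreateInstance are all hypothetical. The only OpenXR convention it relies on is that negative result codes indicate failure.

```cpp
#include <cassert>
#include <iostream>
#include <string>

// Stand-in for the real XrResult from <openxr/openxr.h>, so this sketch
// compiles without the SDK. As in OpenXR, negative values mean failure.
enum StandInXrResult : int {
    STANDIN_SUCCESS = 0,
    STANDIN_ERROR_RUNTIME_FAILURE = -2,
};

// Hypothetical helper: returns true on success, logs the message on failure.
inline bool checkXrResult(StandInXrResult result, const std::string& message) {
    if (result < 0) {
        std::cerr << "OpenXR error " << static_cast<int>(result)
                  << ": " << message << '\n';
        return false;
    }
    return true;
}

// Hypothetical macro in the spirit of OPENXR_CHECK from the snippet above.
#define SKETCH_OPENXR_CHECK(call, message) checkXrResult((call), (message))

// Fake entry point standing in for xrCreateInstance. It "creates" an
// instance handle only when a runtime is available.
inline StandInXrResult fakeCreateInstance(bool runtimeAvailable, int* outHandle) {
    assert(outHandle != nullptr);
    if (!runtimeAvailable) {
        return STANDIN_ERROR_RUNTIME_FAILURE;
    }
    *outHandle = 1;
    return STANDIN_SUCCESS;
}
```

With the real headers, the same pattern would wrap the actual XrResult returned by calls such as xrCreateInstance, so that every call site reports its own failure message.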

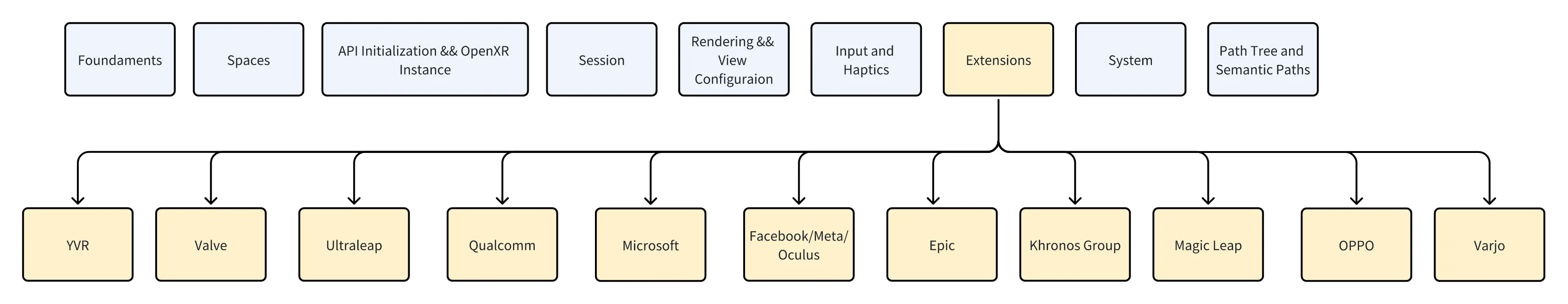

Of course, xrCreateInstance is only one representative of OpenXR’s many interfaces. According to the OpenXR Specification, OpenXR roughly divides all these APIs into:

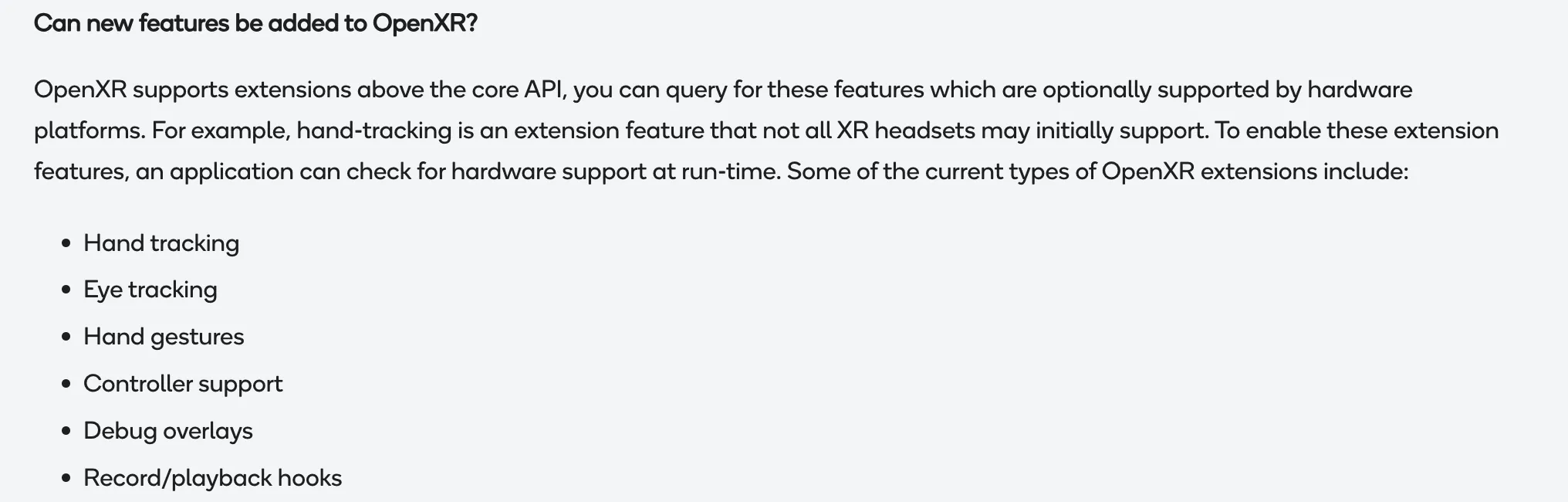

We won’t delve into the other API categories for now. The more interesting part is the Extension section. In OpenXR, an Extension is an API that hardware manufacturers can choose whether to implement. Members of the OpenXR working group can add new content to the registered extensions, and many XR companies in the industry are among them.
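Because extensions are optional, an application usually checks which ones the current runtime offers before asking for them. The block below is a minimal sketch of that selection step. The function name selectSupportedExtensions is hypothetical, and the list of supported names is passed in directly. In a real application that list would come from xrEnumerateInstanceExtensionProperties.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Returns the subset of `wanted` extension names that also appear in
// `supported`, keeping the order of `wanted`.
// `supported` stands in for what the runtime reports; in real code it would
// be filled from xrEnumerateInstanceExtensionProperties.
inline std::vector<std::string> selectSupportedExtensions(
    const std::vector<std::string>& supported,
    const std::vector<std::string>& wanted) {
    std::vector<std::string> enabled;
    for (const std::string& name : wanted) {
        assert(!name.empty());  // an extension name is never empty
        if (std::find(supported.begin(), supported.end(), name) != supported.end()) {
            enabled.push_back(name);
        }
    }
    return enabled;
}
```

For example, if the runtime reports XR_EXT_hand_tracking but not XR_EXT_eye_gaze_interaction, an application that wants both would enable only the first and fall back to controller input for the second.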

Tips

If you are interested in the specific process of building a complete XR application with OpenXR, you can visit openxr-tutorial.com, the official tutorial provided by the OpenXR working group, for hands-on experience with these APIs. We recommend reading the tutorial alongside the OpenXR Reference Guide and the OpenXR Style Guide.

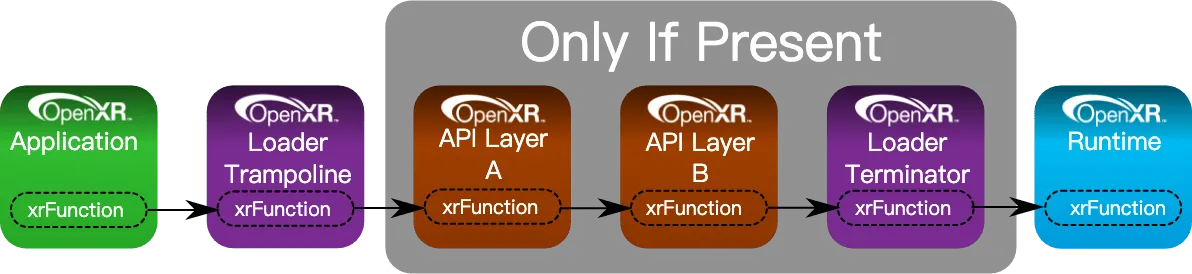

In the actual OpenXR workflow, a call passes through several stages. An application that uses OpenXR (such as Unity, Unreal, or a browser that supports WebXR) first calls the OpenXR functions through the OpenXR Loader, which finds the correct OpenXR implementation in the current system environment. The call then passes through any Layers defined by OpenXR. Layers are optional and resemble hook functions in programming languages or middleware in backend programs: they can intercept or modify a call before it reaches the level below. Finally, the call arrives at the concrete implementation provided by the OpenXR-conformant hardware, which is generally called the OpenXR Runtime.
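The loader, layer, and runtime chain described above can be modeled in a few lines. This is only a toy model of the idea, not the real loader. All names (CreateInstanceFn, makeRuntime, makeLayer, loadEntryPoint) are hypothetical, and the trace vector exists purely to show the order in which each stage sees the call.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// A simplified "create instance" entry point. The trace records which
// stage handled the call, in order.
using CreateInstanceFn =
    std::function<int(const std::string& appName, std::vector<std::string>& trace)>;

// The "runtime": the vendor implementation at the bottom of the chain.
inline CreateInstanceFn makeRuntime() {
    return [](const std::string&, std::vector<std::string>& trace) {
        trace.push_back("runtime");
        return 0;  // 0 stands in for a success code
    };
}

// A "layer": wraps the next function in the chain and can observe or
// modify the call before passing it down.
inline CreateInstanceFn makeLayer(const std::string& layerName, CreateInstanceFn next) {
    assert(next != nullptr);
    return [layerName, next](const std::string& appName, std::vector<std::string>& trace) {
        trace.push_back("layer:" + layerName);
        return next(appName, trace);
    };
}

// The "loader": locates the runtime, stacks the enabled layers on top of it,
// and hands the resulting entry point back to the application.
inline CreateInstanceFn loadEntryPoint(const std::vector<std::string>& enabledLayers) {
    CreateInstanceFn chain = makeRuntime();
    // Build from the bottom up so that the first enabled layer is called first.
    for (auto it = enabledLayers.rbegin(); it != enabledLayers.rend(); ++it) {
        chain = makeLayer(*it, chain);
    }
    return chain;
}
```

Calling the entry point returned by loadEntryPoint({"validation", "profiler"}) visits the validation layer, then the profiler layer, then the runtime. The application itself only ever sees a single function to call, which is why layers can be added or removed without changing application code.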

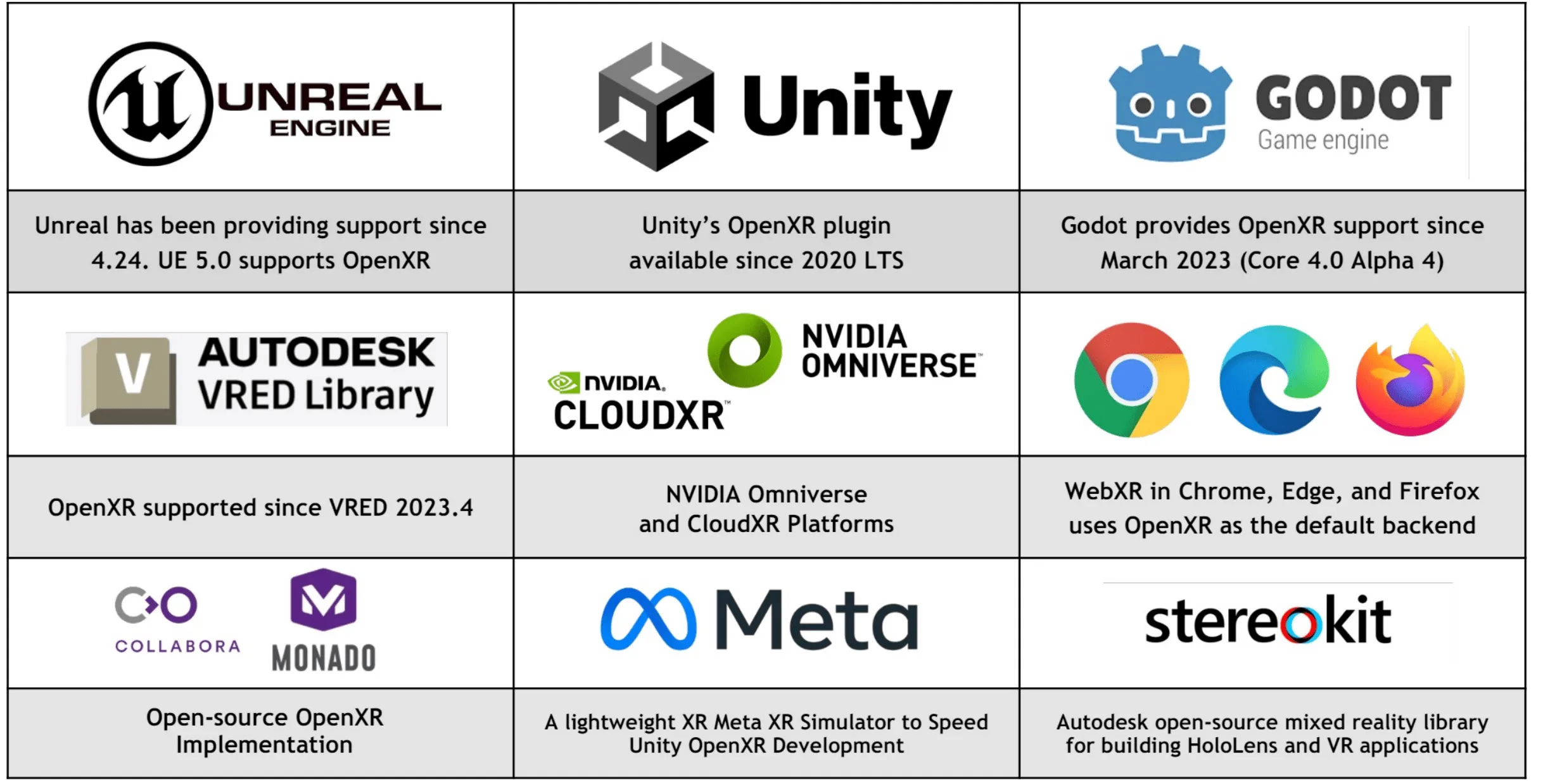

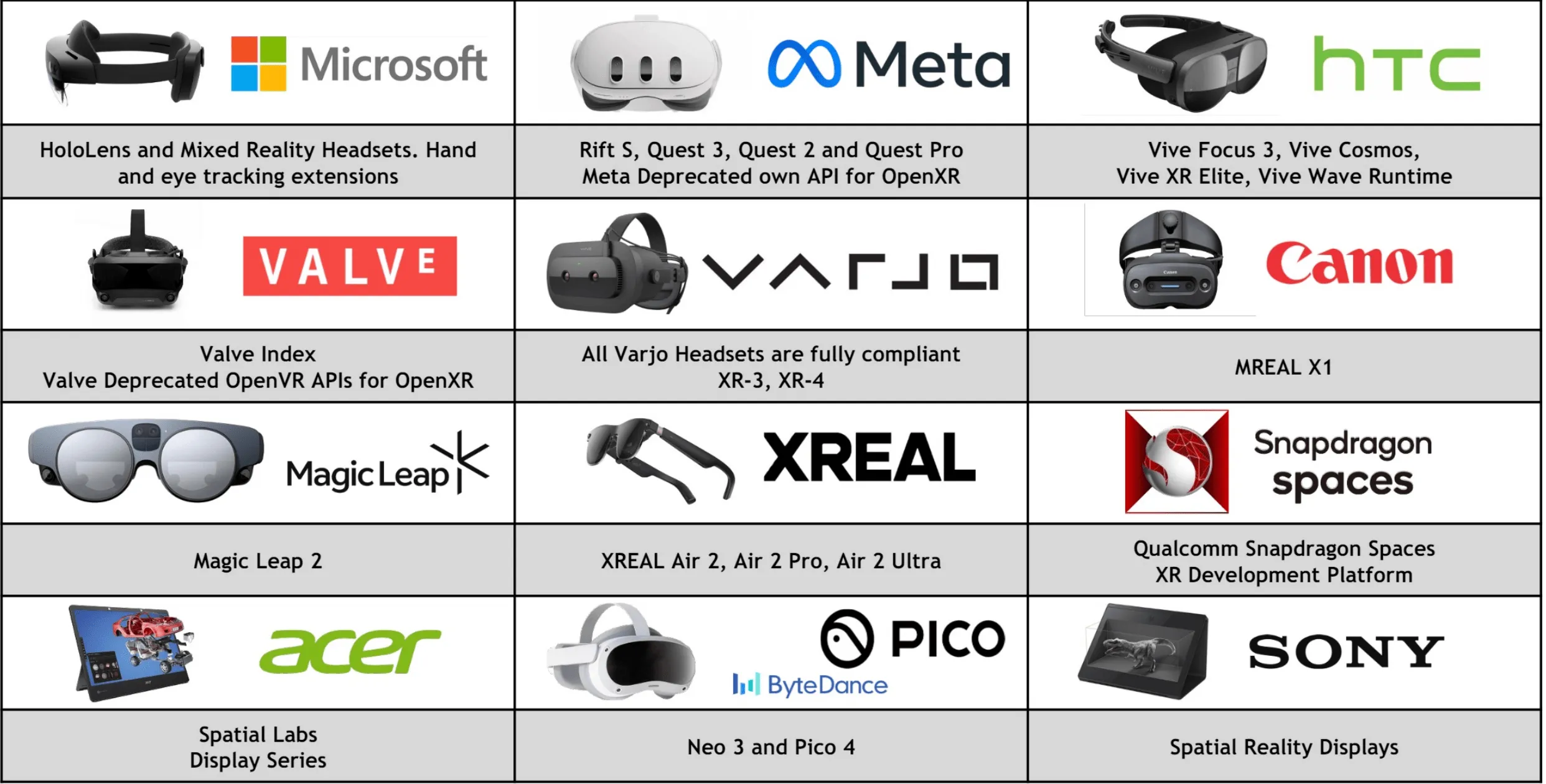

With the above explanations, I believe the image of OpenXR gradually becomes clear in our minds. As of now (2024), OpenXR has also received support from most companies in the industry.

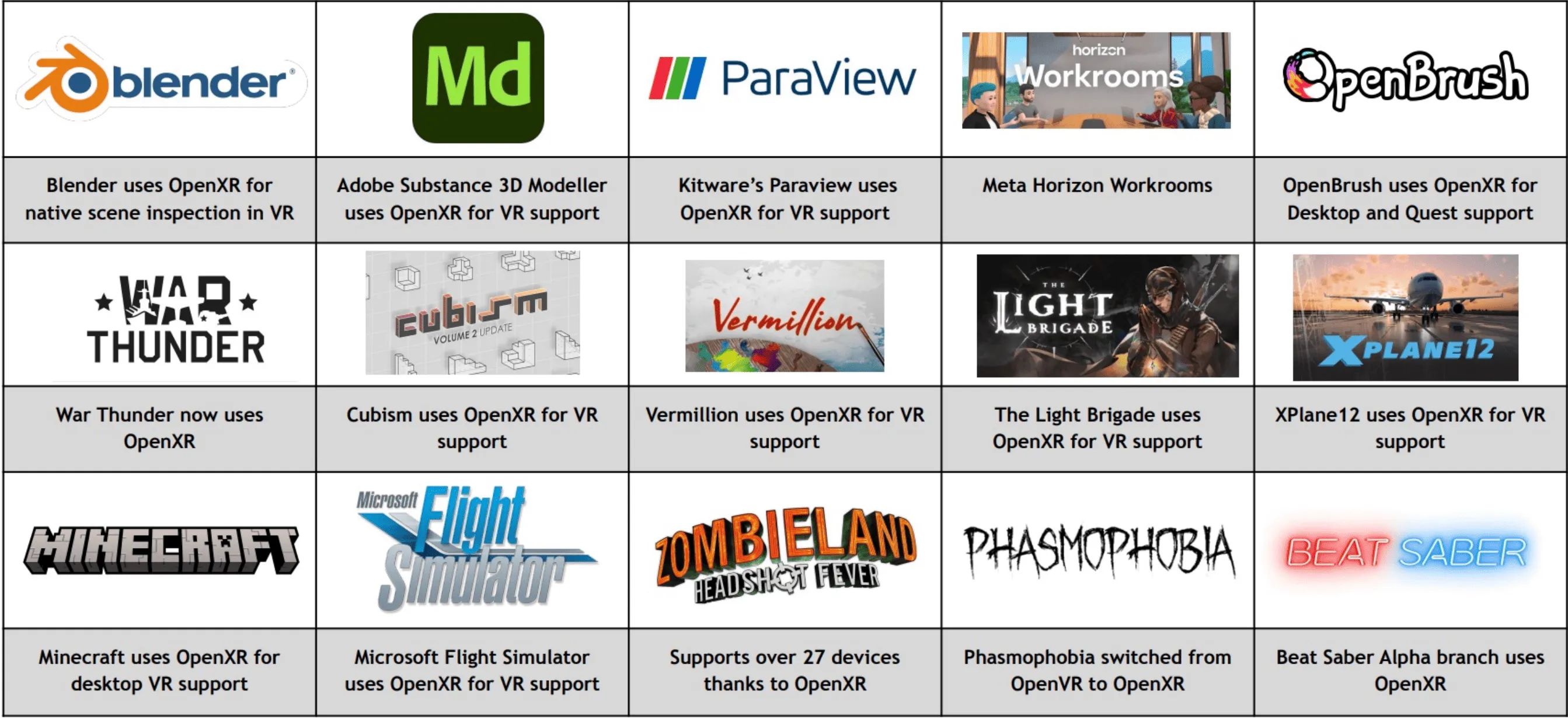

With the support of these companies, many upper-level applications have begun to adapt to the OpenXR interface, changing from directly connecting with hardware manufacturers’ APIs to connecting with OpenXR’s APIs. These products include some game engines, browsers, and SDKs.

And some professional software and games:

In turn, upper-layer applications that have adopted OpenXR can support the following OpenXR-compatible devices at relatively low cost:

Back to Snapdragon Spaces Platform

Among these device manufacturers, Qualcomm in the lower right corner is relatively unique. As a chip manufacturer, it does not produce an XR device itself but supplies chips to multiple XR manufacturers. Now we can understand the significance of Snapdragon Spaces mentioned at the beginning of the article: new hardware manufacturers can directly use these engines and tools, greatly reducing the cost of building an ecosystem around a new XR hardware product, as shown in the figure below.

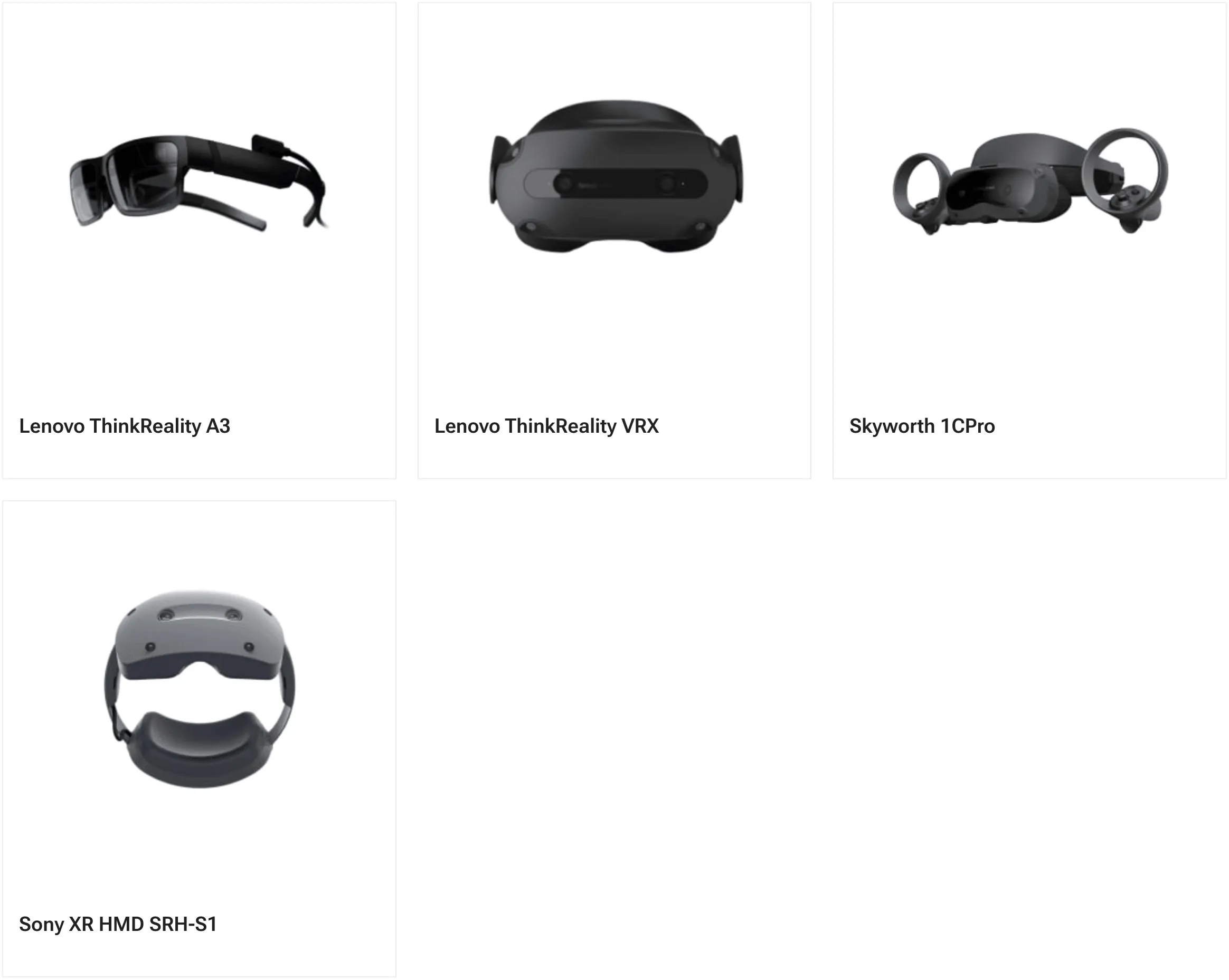

We can check the supported device list on the official website to see the devices currently supported by Snapdragon Spaces Platform:

Tips

Sony’s new XR headset, Sony XR HMD SRH-S1, which we introduced in XR World Weekly 016, already supports Qualcomm’s Snapdragon Spaces Platform.

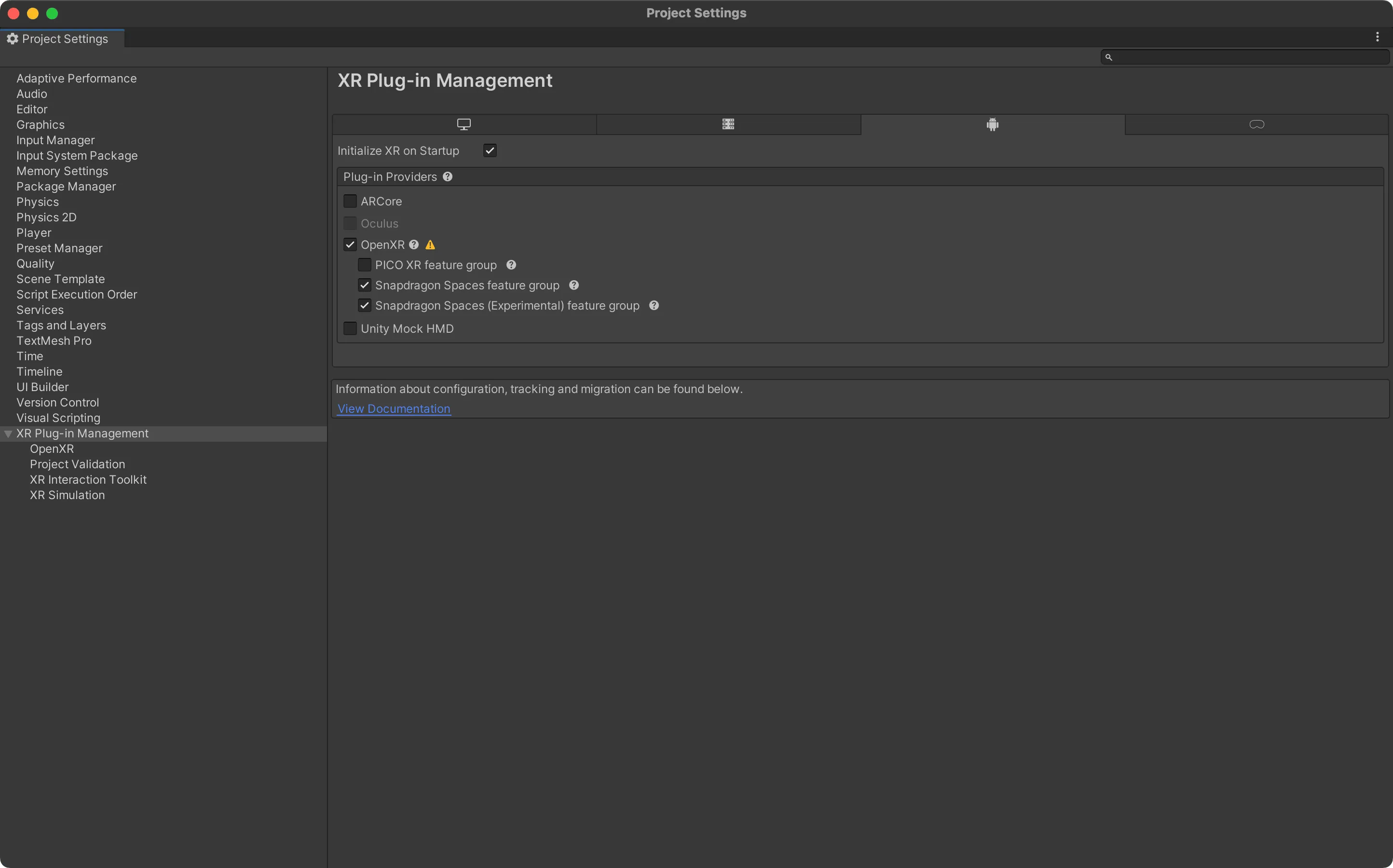

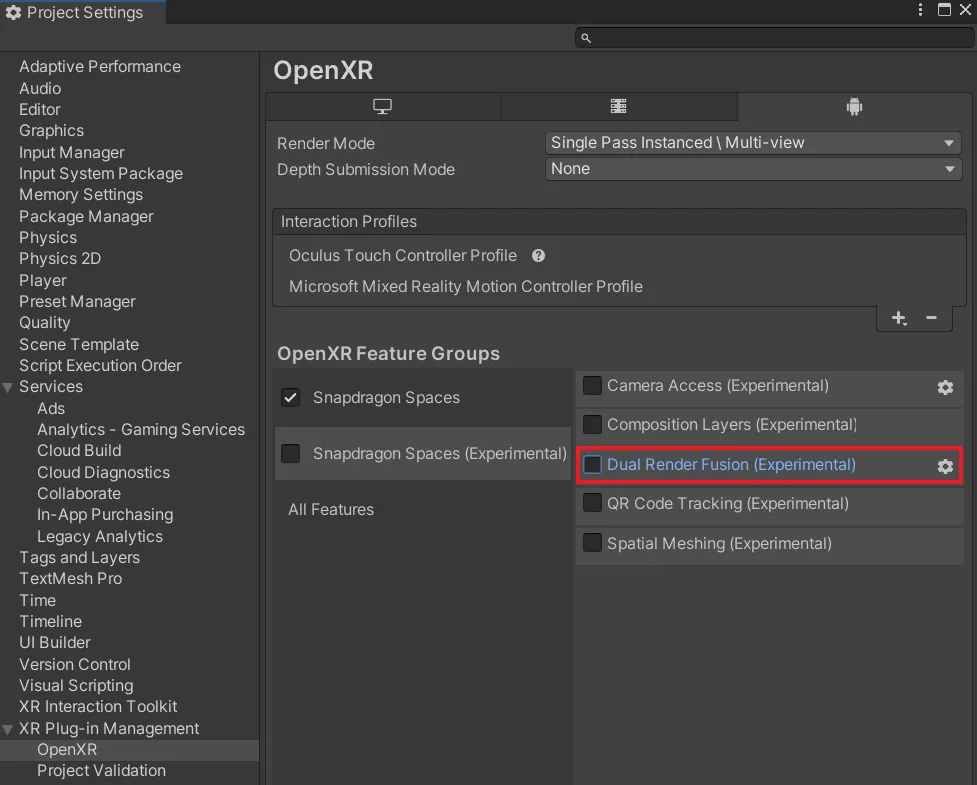

The adaptation cost falls not only for hardware manufacturers but also for upper-level application developers. As long as their games or applications are not deeply tied to the latest features of a particular device (that is, they care more about platform coverage), using the OpenXR-based Snapdragon Spaces SDK is straightforward. Taking Unity as an example, as long as we use each manufacturer’s OpenXR version of its SDK (usually paired automatically with Unity’s OpenXR Plugin), we can cover most functions on more platforms. For each platform, we only need to enable the corresponding Features under Player Settings -> OpenXR in Unity (for the specific steps to import and set up the Snapdragon Spaces SDK in Unity, refer to the official documentation):

For example, to enable the Dual Render Fusion function, we only need to check the Dual Render Fusion (Experimental) option under the Snapdragon Spaces (Experimental) OpenXR Feature Group in Unity’s OpenXR settings, and the vast majority of the project setup is done (for more detailed settings, please refer to the official documentation):

Tips

Dual Render Fusion allows an Android application to output images to both the phone screen and the connected AR glasses, so that users can use the phone as a controller for the AR glasses and view the final rendered XR image through the glasses, as shown in the figure below.

In addition, the Snapdragon Spaces SDK also provides more AR features, among which the relatively important ones are:

- Anchor: Anchors let developers track a fixed position in the real world, so that virtual objects in the application can be pinned to the real environment

- Image Tracking: Image tracking allows developers to identify images in the real world and track their location

- Hit Testing: Hit testing allows developers to detect whether there is a real plane or physical surface in a given direction

- Plane Detection: Plane detection allows developers to identify the position of planes in the real world along with some basic information about them

- HandTracking: First-person gesture recognition and hand tracking, along with fine-grained functions such as ControlBox, Controller/HandTrackingSwitch, Virtual Force Feedback (VFF), Snapping, and Hand Filtering

- QRCodeTracking: Provides QR code recognition and tracking in real space, allowing developers to build more spatial anchoring functions on top of QR codes and the information parsed from them

- Spatial Meshing: Provides a mesh of the real environment, allowing developers to obtain the specific shape of the real-world surroundings

- Camera Frame Access: Provides access to camera images, allowing developers to process them further

You may have noticed that not every XR device offers all of these features. Non-standard features such as hand tracking are therefore implemented through the OpenXR Extension mechanism we saw earlier.

The present and future of OpenXR

In the previous sections, we have explained what OpenXR is, as well as its specific forms and practical applications. Although the prospects are promising, it is still necessary to note that there is still a long way to go before the true “unification” of the XR industry.

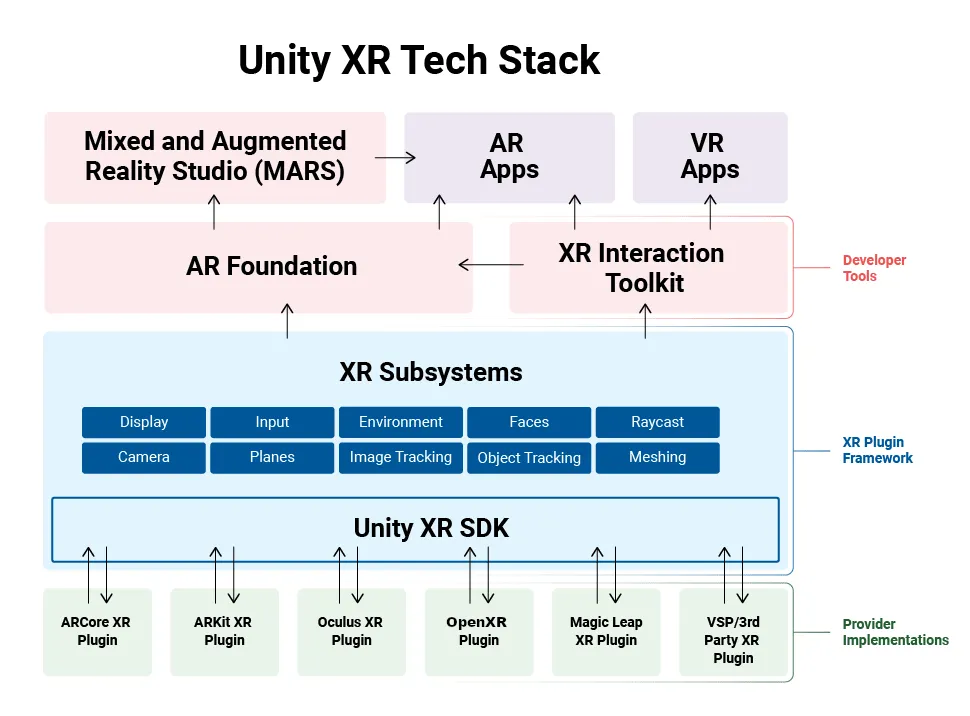

Currently, on mainstream XR development platforms (such as PICO and Meta), the OpenXR version of the SDK is not the first choice for application-layer developers. Taking Unity as an example, because of Unity’s cross-platform strategy and the engine’s influence in the industry, Unity’s own XR Interaction Toolkit and ARFoundation have become another de facto “OpenXR”. We can take a look at the architecture diagram of Unity XR below.

In this architecture, AR/VR application-layer developers work directly against ARFoundation and XR Interaction Toolkit, so most basic functions do not need to be rewritten unless hardware-specific features are used. For historical reasons, the underlying hardware manufacturers generally release plugins for the Unity XR Subsystem so that developers can target their platforms from Unity. From this perspective, switching to OpenXR makes little noticeable difference to application-layer developers.

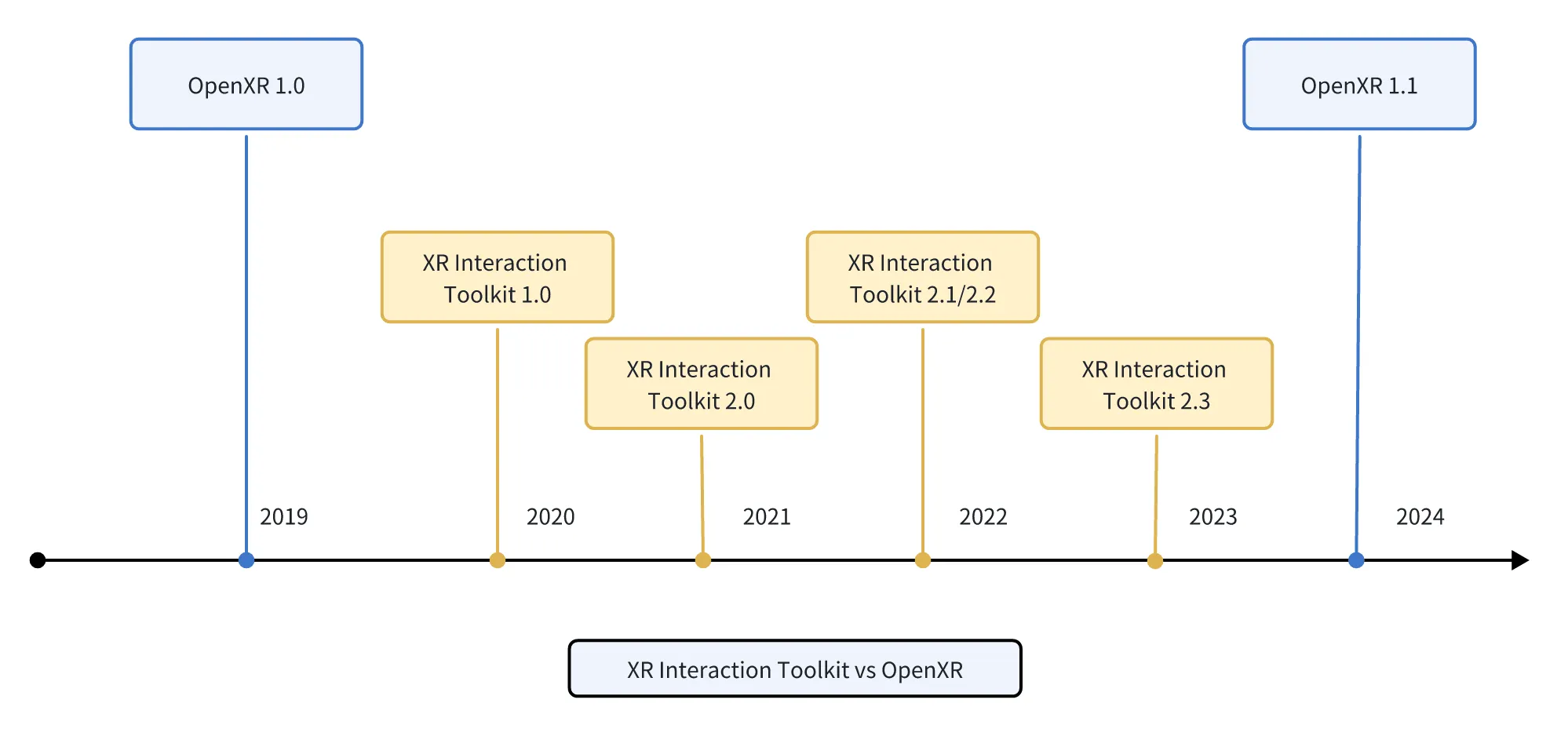

Moreover, since the OpenXR working group itself is an industry organization with many stakeholders, updating and iterating the protocol is definitely not as fast as commercial company SDKs. We can compare the version update speed of OpenXR and XR Interaction Toolkit.

For example, the PICO Unity Integration SDK, which is built on Unity XR Subsystems, supports PICO’s new features relatively quickly, so for application-layer developers it is still the better choice.

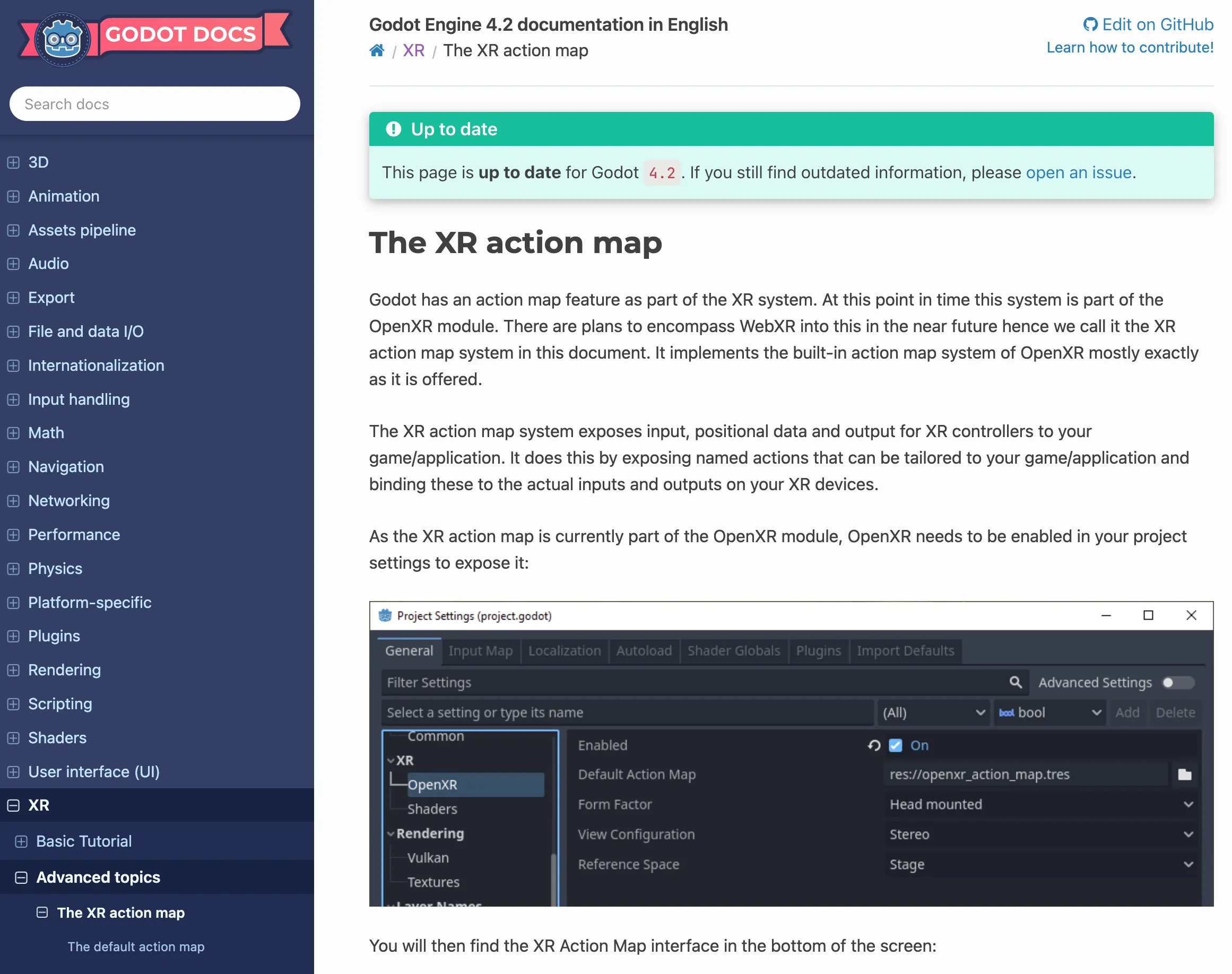

Does this mean that OpenXR is meaningless? No, things are not black and white. In the short term, application-layer developers may not feel the benefit of OpenXR very strongly, but for game engines that arrived later, OpenXR matters a great deal. Take Godot as an example: because it does not have the industry influence of Unity or Unreal, it cannot expect every hardware manufacturer to develop a dedicated SDK for it. By adopting OpenXR, Godot can quickly reach a solid “80 out of 100” in this field. A close look at Godot’s documentation shows that Godot does take the OpenXR route for XR:

Moreover, it is very common for standards like OpenXR to lag behind industry practice. For example, many browser features have not yet entered a formal W3C standard and are supported by only some browsers, such as the View Transitions API. As a rule, newly added non-standard features gradually make their way into the formal standard over time.

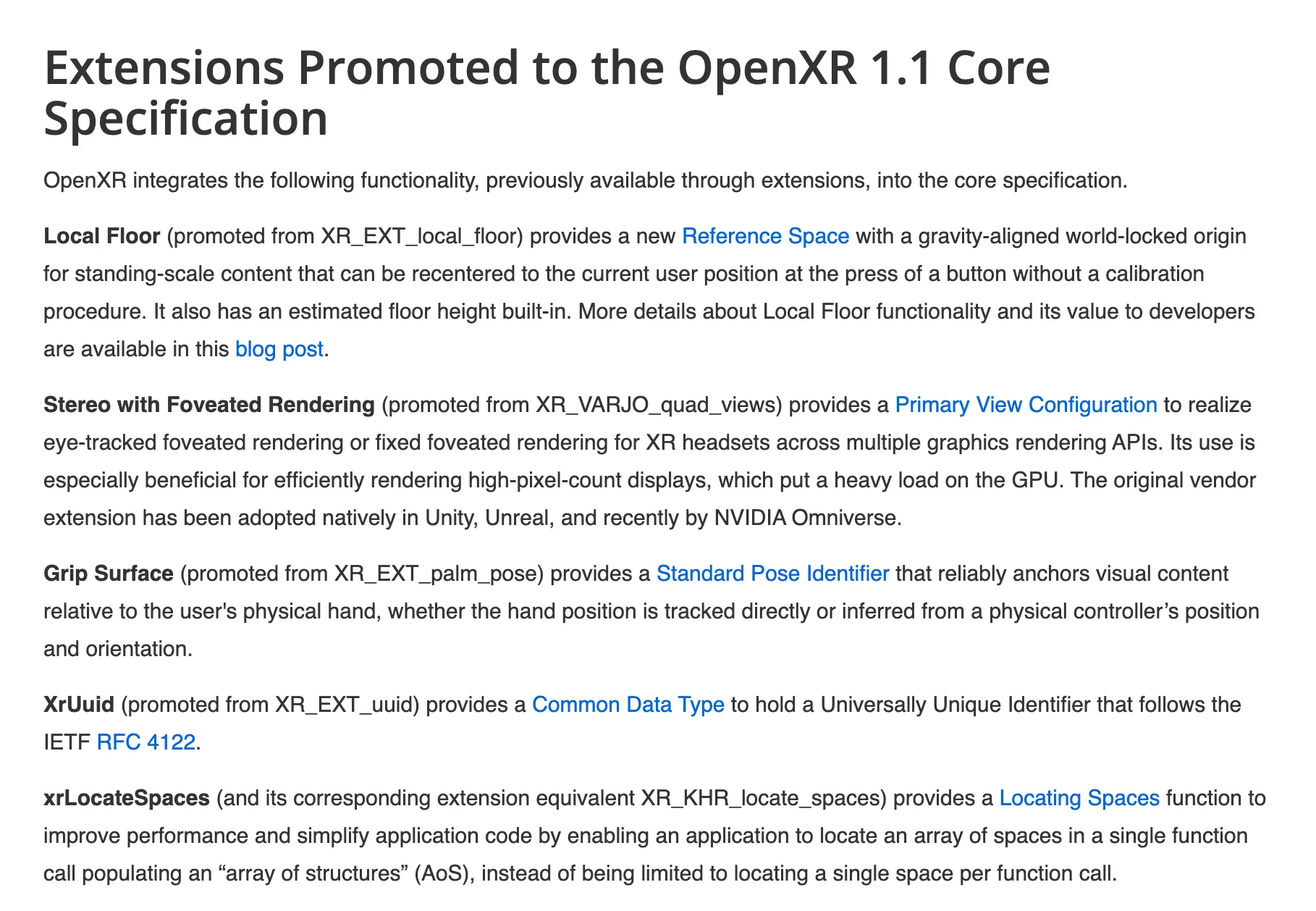

OpenXR is similar. In the 1.1 version released in 2024, OpenXR merged some APIs that previously existed in the extension part into the official API.
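In practice, this promotion means an application can decide at run time whether a feature is part of the core API or still has to be enabled as an extension, based on the API version in use. The sketch below mirrors the way OpenXR packs a version into 64 bits (the real macros are XR_MAKE_VERSION, XR_VERSION_MAJOR, and XR_VERSION_MINOR in the OpenXR headers). The helper names makeVersion, versionMajor, versionMinor, and needsLocalFloorExtension are hypothetical. It uses the local floor reference space, which began as the XR_EXT_local_floor extension and became core in 1.1, as the example feature.

```cpp
#include <cassert>
#include <cstdint>

// Packs a version the same way OpenXR does: major in the top 16 bits,
// minor in the next 16 bits, patch in the low 32 bits.
inline std::uint64_t makeVersion(std::uint64_t major, std::uint64_t minor, std::uint64_t patch) {
    assert(major <= 0xffffULL && minor <= 0xffffULL && patch <= 0xffffffffULL);
    return ((major & 0xffffULL) << 48) | ((minor & 0xffffULL) << 32) | (patch & 0xffffffffULL);
}

inline std::uint16_t versionMajor(std::uint64_t version) {
    return static_cast<std::uint16_t>((version >> 48) & 0xffffULL);
}

inline std::uint16_t versionMinor(std::uint64_t version) {
    return static_cast<std::uint16_t>((version >> 32) & 0xffffULL);
}

// Hypothetical helper: before 1.1 the local floor reference space has to be
// requested through its extension; from 1.1 onward it is part of the core API.
// Packed versions compare correctly as plain integers because the major
// number occupies the highest bits.
inline bool needsLocalFloorExtension(std::uint64_t apiVersion) {
    return apiVersion < makeVersion(1, 1, 0);
}
```

An application targeting both older and newer runtimes could use a check like this to decide whether to add the extension name to its instance creation request.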

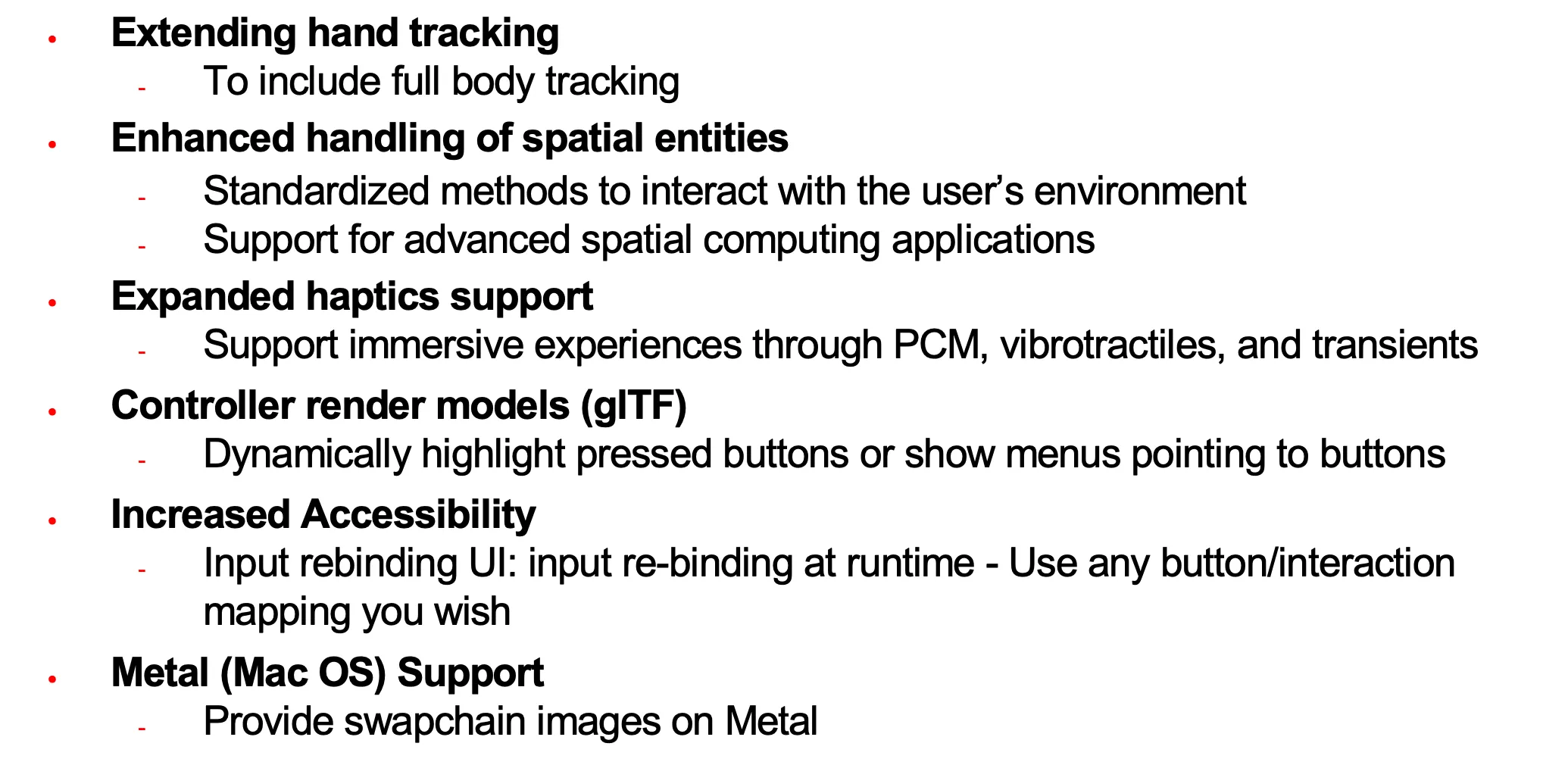

With the continuous development of the XR industry, more and more new XR features will be included in OpenXR’s future plans, such as:

Written at the end

As the old saying goes, what has long been united must divide, and what has long been divided must unite. The XR industry flourished around 2016 and then went through several low years. The corresponding technical standards have likewise moved from fragmentation to a loose unity. Fortunately, new things keep emerging in this industry to let more people experience the magic of XR. Whether it is the Apple Vision Pro that appeared in 2023 or the large-space narrative experiences that sprang up like mushrooms in 2024, these attempts confirm that the industry is still moving forward!

OpenXR is a very basic technical standard in this industry. Understanding it may not immediately help us create a product that reaches a mainstream audience, but it can change our perspective and let us view the development of the whole ecosystem from an industry standpoint. I hope this article has been helpful to you. If you want to learn more interesting or useful knowledge about XR, please follow XReality.Zone 😉 on various platforms. See you in the next article!

XRealityZone is a community of creators focused on the XR space, and our goal is to make XR development easier!

If you are familiar with English, you can find us in the following ways: Official Website(EN), X(twitter), Medium.

If you think this article is helpful for you, you are welcome to communicate with us via Email.

XReality.Zone
