XR World Weekly 007

Highlights

- Microsoft Mixed Reality Toolkit 3 (MRTK3) has moved to its own org on GitHub

- Rokid releases Rokid AR Studio suite

- The 3D world, maybe it doesn’t need to be built in one go

BigNews

Microsoft Mixed Reality Toolkit 3 (MRTK3) has moved to its own org on GitHub

Keywords: Microsoft, open source, MRTK3

Microsoft announced a major update for Mixed Reality Toolkit 3 (MRTK3). MRTK3 was originally created and maintained by Microsoft to support development of augmented reality apps for its HoloLens and other products. Now, Microsoft has moved it to its own independent organization on GitHub, making it a truly open source and cross-platform project.

As the third-generation MRTK for Unity, it builds on Unity’s XR SDK Management and XR Interaction Toolkit, providing a cross-platform input system, spatial interactions, and UI building blocks. It supports multiple platforms and lets developers prototype rapidly via live simulation.

MRTK3 supports a variety of XR devices including HoloLens 2, Meta Quest, Windows Mixed Reality, SteamVR, and Oculus Rift. It features an all-new interaction model, updated mixed reality design language, 3D UI system with Unity Canvas support, and more. Additionally, it offers some accessibility features like input assistance. Overall, MRTK3 has been rewritten and redesigned in areas like architecture, performance, and user interface to accelerate cross-platform mixed reality app development and present it to users in a professional, clean way.

Although the earlier news that Microsoft had disbanded the MRTK project team was disheartening for practitioners, it’s good to see that the story has a decent ending after all. Moving MRTK to its own GitHub organization ends Microsoft’s sole control over MRTK3. As an independent organization, MRTK3 can attract participation from more platforms and developers without being limited to Microsoft’s tech stack. Magic Leap, an important AR device player, has joined the steering committee, and Qualcomm Technologies has announced its return; both moves signal broader industry support for the project.

On the product roadmap, MRTK3 is slated for formal release this September. As an important toolkit for AR and VR development, MRTK3 has been eagerly anticipated. Its open source transition and cross-platform support will undoubtedly bring greater convenience to developers.

Microsoft announces it will stop supporting Visual Studio for Mac IDE in August 2024

Keywords: Microsoft, Unity, Visual Studio

Microsoft recently announced that it will stop supporting Visual Studio for Mac IDE on August 31, 2024.

Visual Studio is an important tool in the Unity project development workflow, so the retirement of its Mac version will have a major impact on macOS-based developers and their workflows.

Currently, Microsoft has provided the following alternative solutions:

- Use Visual Studio Code along with the new C# tooling and related extensions

The recently released C# tooling, .NET MAUI, and Unity extensions for VS Code are currently in preview and aim to improve VS Code support for .NET and C# developers. These extensions run natively across platforms, including macOS, and the experience will keep improving as they move from preview to general availability. (Newsletter 006 also covered the Unity extension for VS Code in depth.)

- Run the Windows version of Visual Studio IDE in a virtual machine on Mac

This option can cover a wide range of IDE needs like support for legacy Xamarin and F# projects, and remote development for iOS via the virtual machine.

- Run the Windows version of Visual Studio IDE in a cloud VM

Visual Studio remains the preferred tool for .NET/C# development. Using cloud-hosted VMs from Microsoft Dev Box, you can access full Visual Studio capabilities from Mac via web or native RDP clients, without needing to run VMs locally.

Although Microsoft has expressed commitment to continue supporting Mac developers, the Unity community can also take this opportunity to explore new cross-platform development solutions.

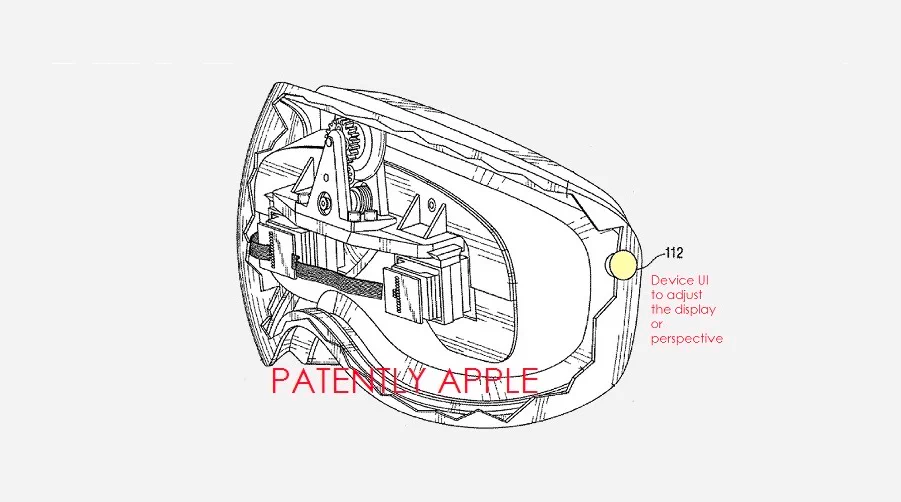

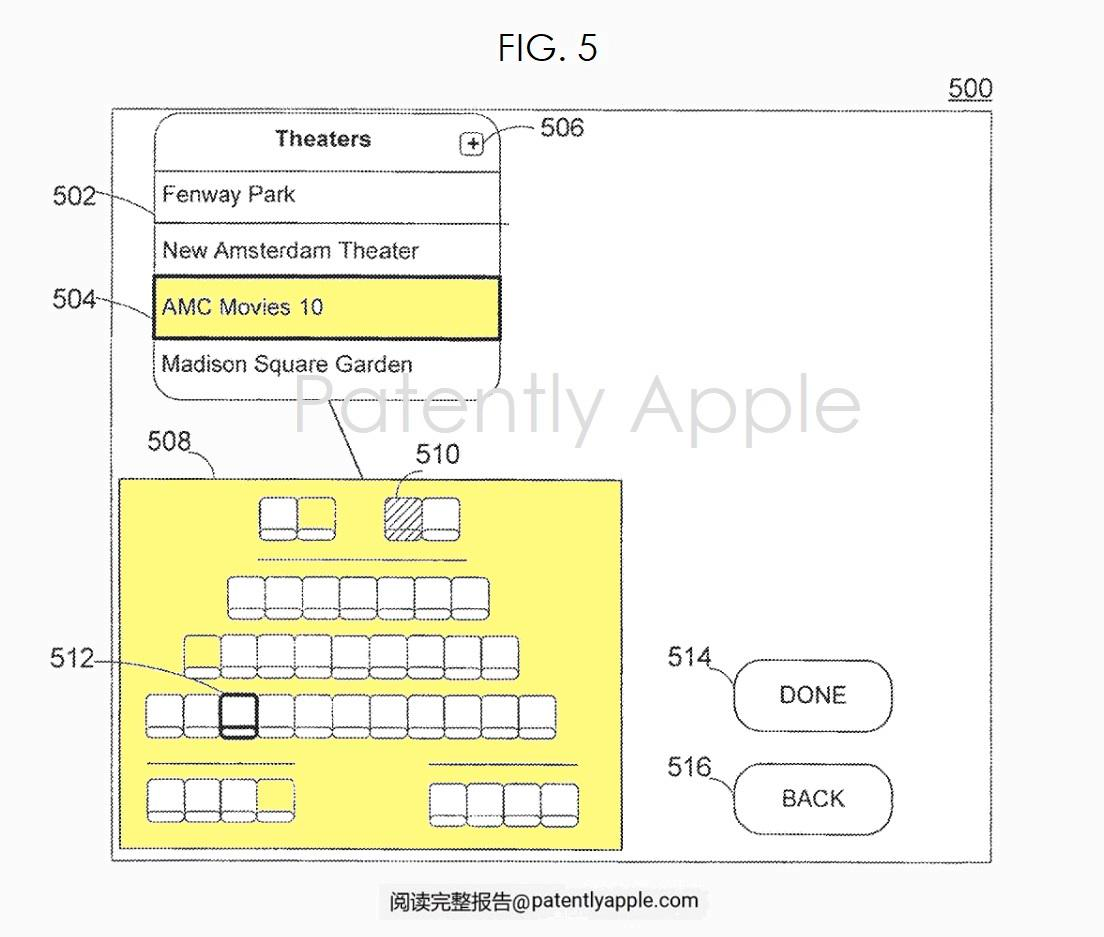

Apple granted a patent related to Apple Vision Pro that dates back to 2007

Keywords: Apple, patent, Apple Vision Pro

Apple was recently granted a patent on its first vision-related invention, dating back to 2007, the same year the first iPhone was launched. The patent covers a potential future Apple virtual reality headset design.

The original patent application dates back remarkably far, predating other VR headset makers such as Oculus by many years. The patent covers a personal display system capable of adjusting display media configurations. The system can detect user head movements and adjust the displayed images accordingly, an idea that later evolved into head and eye tracking technologies.

The patent also depicts an interface where users can select seats in a movie theater. Once a particular seat is chosen, the system modifies the displayed media image to simulate the view from that seat. Users can also opt to add new theaters for customization.

Additionally, the patent mentions scaling displayed images and adjusting audio effects based on user selections to create an immersive effect. For example, the system can simulate the head outline of another user in the theater, or a specific architectural structure of the theater. On audio, sound effects can be adapted based on the chosen seat to differentiate front vs. back row listening experiences.

It’s evident that Apple had considerable foresight into VR technologies, covering both immersive visuals and audio. Although it took a long time for the ideas to reach a product, the patent shows that Apple has been planning ahead in the VR space for many years.

Rokid releases Rokid AR Studio suite

Keywords: Rokid, AR

The Rokid Jungle launch event was held in Hangzhou on August 26. At the event, Rokid founder Misa released the Rokid AR Studio suite focused on spatial computing. The Rokid AR Studio suite contains the Rokid Max Pro glasses and the Rokid Station Pro hub.

On hardware, the Rokid Max Pro adds a camera for SLAM localization compared to the previous Rokid Max, making it Rokid’s first AR glasses with 6DoF spatial positioning. The Rokid Max Pro uses MicroOLED displays with 1920x1200 resolution per eye, peak luminance of 600 nits, 50 degree FOV and 120Hz refresh rate. The biggest highlight of the Rokid Max Pro is its ultra lightweight design at just 76g, allowing for prolonged wear.

The Rokid Station Pro serves as a detachable AR computing platform, powered by the Qualcomm Snapdragon XR2+ Gen 1 chip. The device can also act as a controller for the AR glasses with 3DoF tracking, and users can use its physical buttons for mouse-like interaction. The Rokid Station Pro is also equipped with a 48MP camera for taking photos. Misa explained that although capturing photos and videos directly through the AR glasses’ cameras is possible, Rokid believes this infringes on privacy because people are recorded without their consent. To shoot with the Rokid Station Pro, users have to physically pick up the device, much like using a phone camera, which makes the people around them aware that a photo is being taken.

On ecosystem, another highlight of the launch event was Misa elaborating on the previously announced collaboration with Google, making the Rokid Station the world’s first AR-enabled Android TV device certified by Google. This means Rokid Station users can enjoy immersive content from streaming platforms like YouTube and Disney+ in AR big screen mode.

At the event, Misa emphasized, “Rokid is essentially a systems software company hidden within beautiful hardware products.” One of Rokid’s distinct traits is its spatial computing system based on single-camera tracking. A single camera means lighter weight and lower cost, but achieving good precision is harder than with traditional multi-camera systems. To achieve SLAM with a single camera, Rokid introduced its proprietary YodaOS-Master operating system, which is significantly optimized for spatial computing compared with the previous YodaOS-XR. YodaOS-Master provides capabilities such as spatial localization, stereoscopic rendering, gesture recognition, and spatial audio.

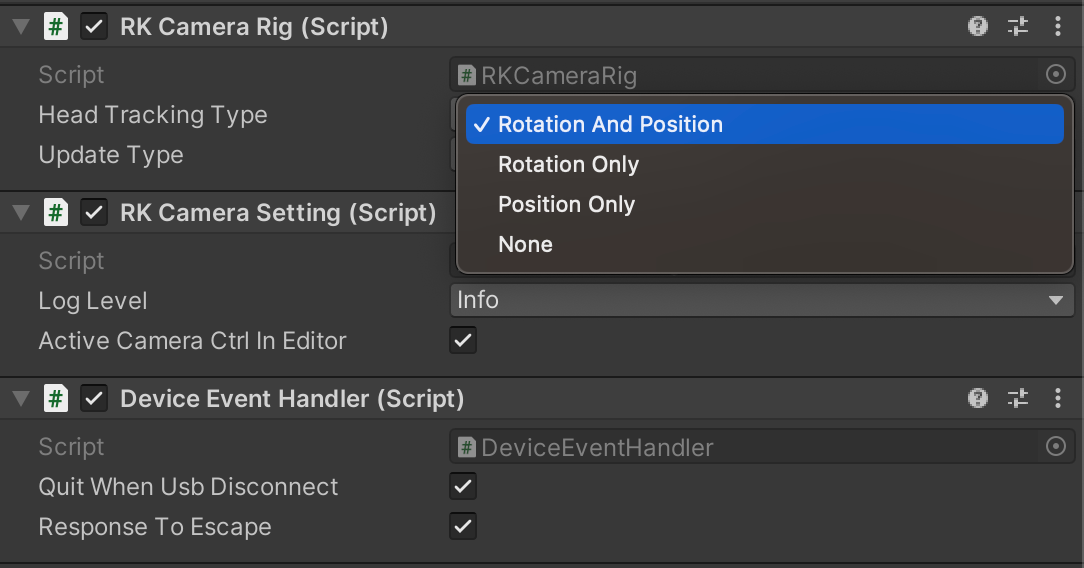

On development, Rokid launched the UXR2.0 SDK for Unity developers. We were among the first to download and try out the development workflow for Rokid’s AR devices.

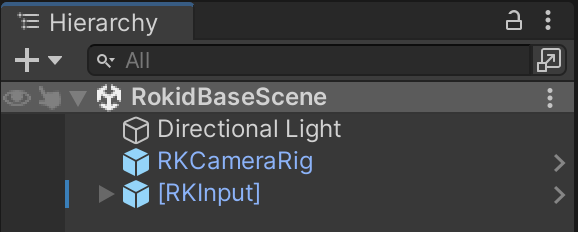

The first step to develop for Rokid in Unity is to achieve spatial localization of the camera. In ARFoundation, this is accomplished by adding an ARSession and XROrigin to the scene. With the UXR2.0 SDK, spatial localization can be achieved simply by dragging the RKCameraRig prefab into the scene.

On the RKCameraRig script, we can select 6DoF, 3DoF or 0DoF tracking via the HeadTrackingType option.

With spatial localization implemented, let’s look at the AR interaction piece. Rokid offers multimodal interactions including gestures (near and far), 3DoF raycast, controller, mouse, joystick, voice and head control. Users can dynamically switch between these modes within the same app.

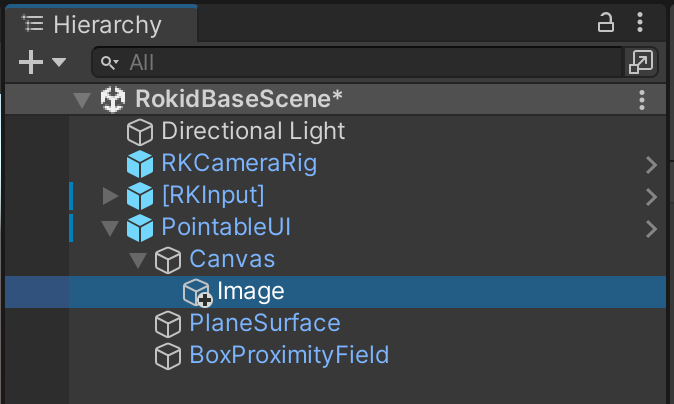

To implement the aforementioned multimodal interactions in Unity, the UXR2.0 SDK provides a dedicated prefab called [RKInput]. Each interaction modality is encapsulated into an interaction module, and all modules are managed uniformly by the InputModuleManager script on [RKInput].

For each interaction module, initialization must be completed before activation. In the InputModuleManager script, we can choose to initialize multiple modules at app launch and select one as the initially activated interaction module.

In addition to interaction methods, we also need some interactive objects to test the interaction effects. Here we’ll try a simple UI component interaction. The UXR2.0 SDK provides another prefab called PointableUI, which we drag into the scene.

To make the UI component interactable, we need to place it under the Canvas of PointableUI. We add an Image component.

To make this Image interactable, we create a script called ImageController and attach it to the Image.

```csharp
using UnityEngine;
using UnityEngine.UI;
using UnityEngine.EventSystems;

public class ImageController : MonoBehaviour, IPointerDownHandler, IPointerUpHandler
{
    // Turn the image blue when the fingers pinch (pointer down).
    public void OnPointerDown(PointerEventData eventData)
    {
        GetComponent<Image>().color = Color.blue;
    }

    // Turn the image back to white when the fingers release (pointer up).
    public void OnPointerUp(PointerEventData eventData)
    {
        GetComponent<Image>().color = Color.white;
    }
}
```

When we interact with the Image using far gesture raycast (pinch to select), the UXR2.0 SDK passes the interaction events through Unity’s event system to our script. Below is the interaction simulated in the Unity Editor.

From this small demo, we can see the UXR2.0 SDK is very friendly for Unity developers, enabling core capabilities via simple prefab drags. On interaction, the UXR2.0 SDK also adapts Unity’s native event system so developers can implement interactions using familiar code.
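Since the interaction events arrive through Unity’s event system, other standard handler interfaces should be usable in the same way. The following is a minimal sketch, not taken from the Rokid documentation: it assumes the SDK’s ray-based input modules also forward pointer enter and exit events, which we have not verified. `ImageHoverHighlighter` and its `hoverColor` field are hypothetical names chosen for illustration; the interfaces themselves are Unity’s own.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;
using UnityEngine.UI;

// Hypothetical helper: highlights an Image while a pointer ray hovers over it.
// Assumes the active input module raises pointer enter/exit events.
[RequireComponent(typeof(Image))]
public class ImageHoverHighlighter : MonoBehaviour, IPointerEnterHandler, IPointerExitHandler
{
    [SerializeField] private Color hoverColor = Color.yellow;

    private Image image;
    private Color idleColor;

    private void Awake()
    {
        // Cache the component and remember the color to restore on exit.
        image = GetComponent<Image>();
        idleColor = image.color;
    }

    public void OnPointerEnter(PointerEventData eventData)
    {
        image.color = hoverColor;
    }

    public void OnPointerExit(PointerEventData eventData)
    {
        image.color = idleColor;
    }
}
```

Like ImageController, the script is attached to an Image placed under the Canvas of PointableUI. Both scripts write to the same color property, so it is simplest to try them on separate images.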

Idea

The 3D world, maybe it doesn’t need to be built in one go

Keywords: visionOS, games, particle effects

Since the announcement of Apple Vision Pro, many developers have been thinking about how to convert their apps “directly” into a 3D world. In practice, this conversion does not need to happen all at once and can be a gradual process.

iOS/indie game developer James Swiney has made some great attempts in this direction. Before the launch of Apple Vision Pro, he had been working on a flat 2D pixel art game, which in theory has little to do with spatial computing. Through a series of ingenious ideas, he first combined the game’s extra RPG info with visionOS’s multi-window capabilities:

Next, he also used various particle effects to add simple immersion to the 2D pixel art game, such as this scene with drifting snowflakes:

And if we already have snow, adding some lightning shouldn’t be too over the top:

Putting together all these creative ideas, we get to see an end result that really makes one go Wow:

Beyond James Swiney’s work, a Japanese developer called ばいそん has made a “Beyond Flat” card game built on a similar philosophy:

This may hint that before having the capability to build complete 3D scenes, we could start the conversion process from small details in our 2D scenes. After all, Rome wasn’t built in a day, right?

Tool

guc: glTF to USD converter

Keywords: glTF, USDZ, MaterialX

guc is an open source glTF to USD format converter. Similar tools exist widely online, but each project has different targets and capabilities.

For example:

- gltf2usd: A proof of concept mainly supporting core glTF 2.0 features with minimal extension support.

- usd_from_gltf: Targeted primarily for AR Quick Look, which only supports a subset of glTF 2.0 features.

- Apple’s USDZ Tools: Apple’s official converter but not open source.

guc achieves better rendering by converting glTF materials almost losslessly to the MaterialX standard. It supports all glTF features except animation and skinning, and most extensions. Being open source (Apache 2.0) and feature-complete is guc’s biggest strength.

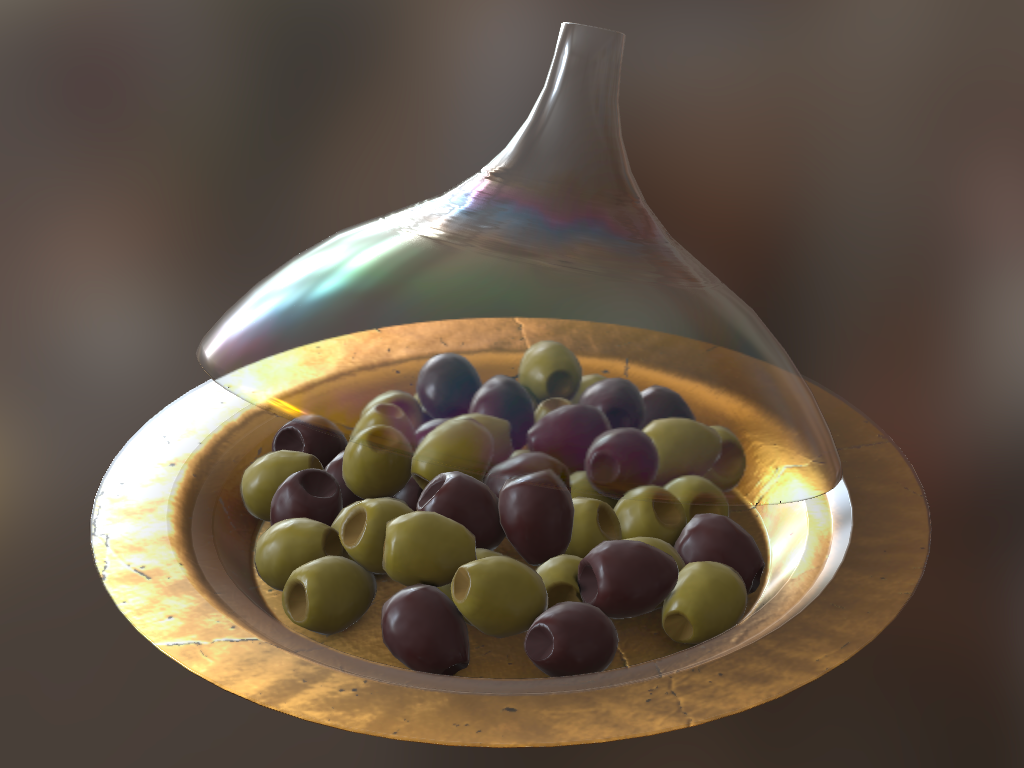

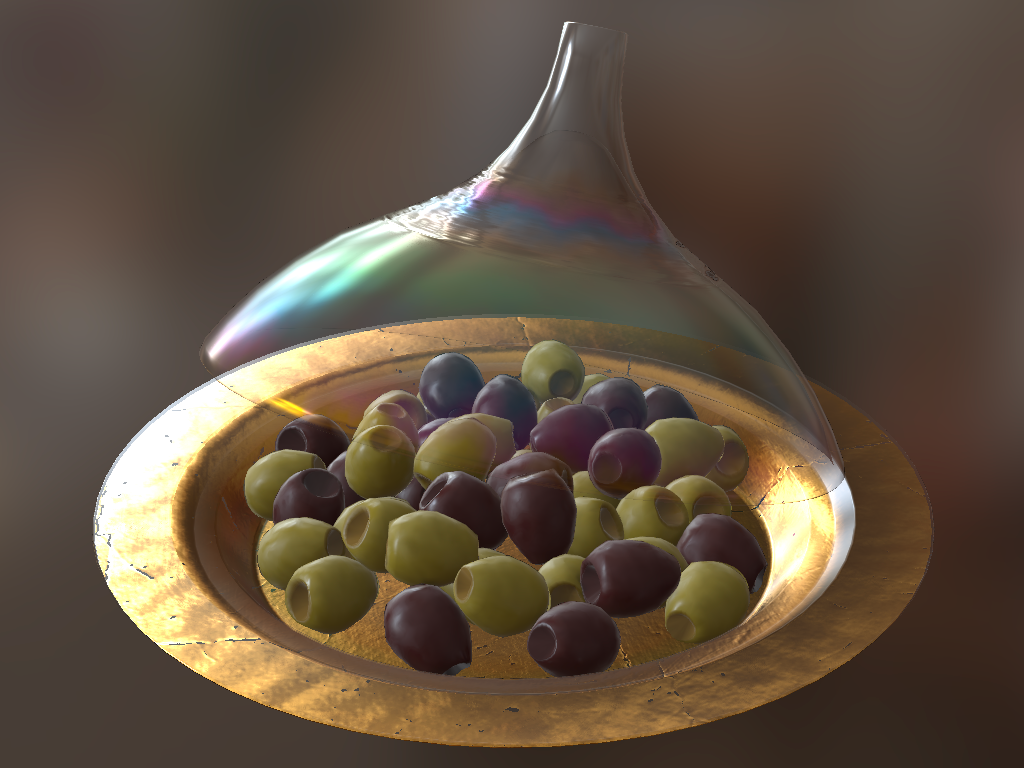

Below is a sample model, Iridescent Dish with Olives, comparing the USD model converted by guc with MaterialX, rendered in hdStorm, vs. the original glTF rendered in Khronos’ glTF Sample Viewer.

The project also provides a usd-assets repo containing USD models with various materials for testing.

Article

Apple shares developers’ experiences with Apple Vision Pro

Keywords: Apple, Apple Vision Pro

Apple set up Apple Vision Pro developer labs in Cupertino, London, Munich, Shanghai, Tokyo, and Singapore, inviting interested developers to sign up for a one-day event. At the labs, developers can test their apps, gain hands-on experience, and have their questions answered by Apple experts.

Recently, Apple shared feedback on its website from developers who attended the labs and tried out Vision Pro first-hand:

As CEO of Flexibits, the team behind successful apps like Fantastical and Cardhop, Michael Simmons has spent over a decade thinking about every aspect of his team’s work. But when he brought Fantastical to Apple’s Vision Pro lab in Cupertino this past summer and experienced it on a device for the first time, he felt something unexpected.

“It was like seeing Fantastical for the first time,” he said. “It felt like I was part of the app.”

Simmons said the lab provided not just a chance to get hands-on, but profoundly impacted how his team thinks about what a true spatial experience is. He said, “With Vision Pro and spatial computing, I really saw how to start building content for an infinite canvas. This will help us make even better apps.”

Code

Neuralangelo: NVIDIA open sources project code to convert regular videos to 3D structures

Keywords: 3D, metaverse, NVIDIA

Neuralangelo is a new AI model from researchers at NVIDIA and Johns Hopkins University that reconstructs 3D objects using neural networks. The latest research has been accepted to CVPR 2023. The paper and demos will be released in June, and the related code implementation is open sourced now.

What makes Neuralangelo special is its ability to accurately capture repeating texture patterns, homogeneous colors, and strong color variations.

By employing “instant neural graphics primitives”, the core of NVIDIA Instant NeRF, Neuralangelo can capture finer details.

The team’s approach relies on two key components:

(1) Numerical gradients to compute higher order derivatives for smoothing operations;

(2) Coarse-to-fine optimization over hash grids that control different levels of detail.

Even without depth supervision, Neuralangelo can effectively recover dense 3D surface structures from multi-view images, significantly outperforming previous methods for capturing detailed large-scale scenes from RGB video capture.
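To make the first component more concrete, here is a minimal sketch of a numerical (central-difference) gradient. It is an illustration only, not code from the Neuralangelo repository: `RippledSphereSdf` is a hypothetical stand-in for a learned signed distance function, and the step sizes are arbitrary. As we understand the approach, a larger step averages the surface over a wider neighbourhood and so has a smoothing effect, while a smaller step follows fine detail, which is why the step is reduced as optimization moves from coarse to fine.

```csharp
using System;

public static class NumericalGradientDemo
{
    // Hypothetical stand-in for a learned SDF: a unit sphere with a fine ripple along x.
    public static double RippledSphereSdf(double x, double y, double z)
    {
        return Math.Sqrt(x * x + y * y + z * z) - 1.0 + 0.02 * Math.Sin(40.0 * x);
    }

    // Central-difference estimate of the gradient of f at (x, y, z) with step size eps.
    public static double[] Gradient(Func<double, double, double, double> f,
                                    double x, double y, double z, double eps)
    {
        return new[]
        {
            (f(x + eps, y, z) - f(x - eps, y, z)) / (2.0 * eps),
            (f(x, y + eps, z) - f(x, y - eps, z)) / (2.0 * eps),
            (f(x, y, z + eps) - f(x, y, z - eps)) / (2.0 * eps),
        };
    }

    public static void Main()
    {
        // Shrinking the step lets the ripple show up more strongly in the x component.
        foreach (double eps in new[] { 0.5, 0.05, 0.005 })
        {
            double[] g = Gradient(RippledSphereSdf, 2.0, 0.0, 0.0, eps);
            Console.WriteLine($"eps={eps}: ({g[0]:F4}, {g[1]:F4}, {g[2]:F4})");
        }
    }
}
```

Running the loop prints one gradient estimate per step size, so the damping of the ripple at large steps can be compared directly against the small-step result.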

OctopusKit: A pure Swift 2D ECS architecture game engine

Keywords: Apple, Swift, ECS

OctopusKit is a pure Swift 2D game engine with ECS architecture, for iOS, macOS and tvOS. Rather than being a fully standalone engine, it wraps Apple frameworks:

- GameplayKit: To implement Entity-Component-System architecture for dynamic logic composition

- SpriteKit: For 2D graphics, physics and GPU shaders

- SwiftUI: To quickly design smooth, extensible HUDs with declarative syntax

- Metal: To achieve optimal performance

How is this 2D gaming framework relevant for XR? Apple’s visionOS relies on an ECS architecture (through RealityKit), and there are few complete examples online. OctopusKit demonstrates an ECS implementation and GameplayKit usage on Apple platforms, which makes it a good resource for beginners to learn from.

XR 基地
