XR World Weekly 003

Highlights

- Meta releases image segmentation model Segment Anything

- Make Cosmonious High accessible for blind and low vision users

- SkyboxLab — Generate stunning 360° skyboxes from text prompts

Big News

Meta releases image segmentation model Segment Anything

Keywords: image segmentation, promptable segmentation, vision transformer, generative AI, artificial intelligence

Meta recently released an image segmentation model called “Segment Anything” (the Segment Anything Model, or SAM). To some extent it can be seen as the GPT of image segmentation. Given a simple prompt, such as a click on a point or a box drawn around a region, it can accurately segment almost any object in an image, whether a person, a car, or a tree.

Technically, SAM has three parts. A Vision Transformer (ViT) image encoder computes an embedding of the image once. A prompt encoder handles points, boxes, and masks. A lightweight mask decoder combines the two to produce pixel-level masks. Because the expensive image embedding is computed only once, each new prompt can be answered quickly. When a prompt is ambiguous, the model returns several candidate masks, each with a confidence score. Meta trained SAM on SA-1B, a dataset of more than 1 billion masks on 11 million images.
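SAM can receive an ambiguous prompt, for example a single click on a shirt, which could mean either the shirt or the whole person. In that case it returns several candidate masks, each with a predicted quality (IoU) score. The sketch below covers only that output side, using small hand-made masks and invented scores. It shows how a binary pixel mask is represented, how intersection over union (IoU) compares two masks, and how to keep the highest-scoring candidate. It is not the `segment_anything` library API, and the helper names (`box_mask`, `iou`, `pick_best_mask`) are hypothetical.

```python
from typing import List, Tuple

# A binary mask: one boolean per pixel, True where the pixel belongs to the object.
Mask = List[List[bool]]


def box_mask(height: int, width: int, top: int, left: int, bottom: int, right: int) -> Mask:
    """Build a rectangular mask covering rows [top, bottom) and columns [left, right)."""
    return [[top <= r < bottom and left <= c < right for c in range(width)]
            for r in range(height)]


def iou(a: Mask, b: Mask) -> float:
    """Intersection over union of two same-sized masks (1.0 if both are empty)."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += pa and pb
            union += pa or pb
    return inter / union if union else 1.0


def pick_best_mask(candidates: List[Tuple[Mask, float]]) -> Mask:
    """Keep the candidate mask whose predicted score is highest."""
    return max(candidates, key=lambda candidate: candidate[1])[0]


# One click on a shirt: it could mean "the shirt" or "the whole person".
shirt = box_mask(4, 4, 1, 1, 3, 3)    # 2 x 2 = 4 pixels
person = box_mask(4, 4, 0, 0, 4, 3)   # 4 x 3 = 12 pixels
candidates = [(shirt, 0.71), (person, 0.93)]  # made-up scores for illustration

best = pick_best_mask(candidates)
print(best is person)                 # True
print(round(iou(shirt, person), 3))   # 0.333
```

Every pixel of the shirt lies inside the person mask, so the IoU is 4/12. A low IoU against a larger mask is exactly why a single click is ambiguous.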

SAM was also built with a “data engine” that keeps the model in the annotation loop. Annotators only need to click a few points on an object, SAM proposes the full mask, and the annotators then refine it. This greatly improves annotation efficiency while reducing annotators’ workload.

In addition, Segment Anything also has the following advantages:

- Can segment objects and image types it was not specifically trained on, without additional fine-tuning;

- Accepts multiple kinds of prompts (points, boxes, and masks), so the same model can segment different targets;

- Can handle complex image scenes such as medical images and urban street scenes;

- Supports model-in-the-loop annotation, helping users annotate data more efficiently;

- Was developed together with a “data engine” that makes large-scale data annotation more efficient.

Therefore, Segment Anything can not only improve the efficiency of data annotation, but also improve the accuracy of annotation and the utilization of data.

Meta also described some real-world use cases. For example, SAM could be used in AR glasses to identify everyday objects and send users reminders and instructions. It could also help farmers in agriculture, assist biologists in their research, support autonomous driving, and more.

You can try the demo online.

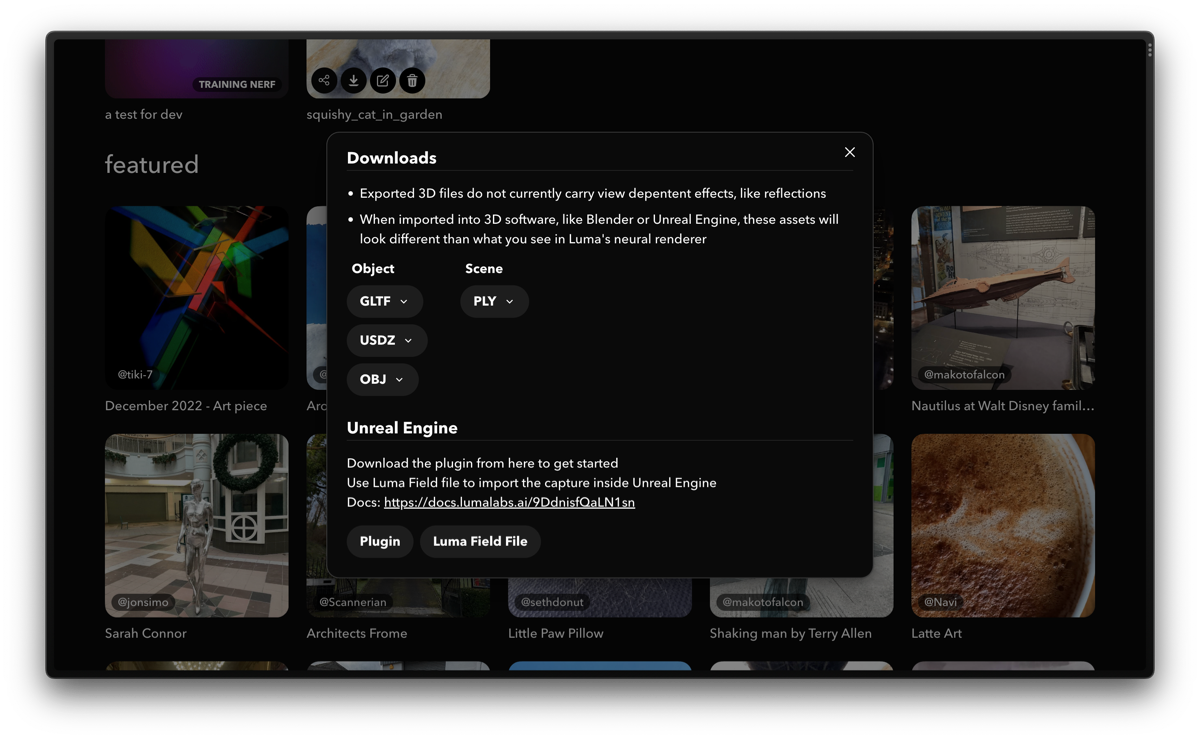

LumaAI releases Unreal plugin

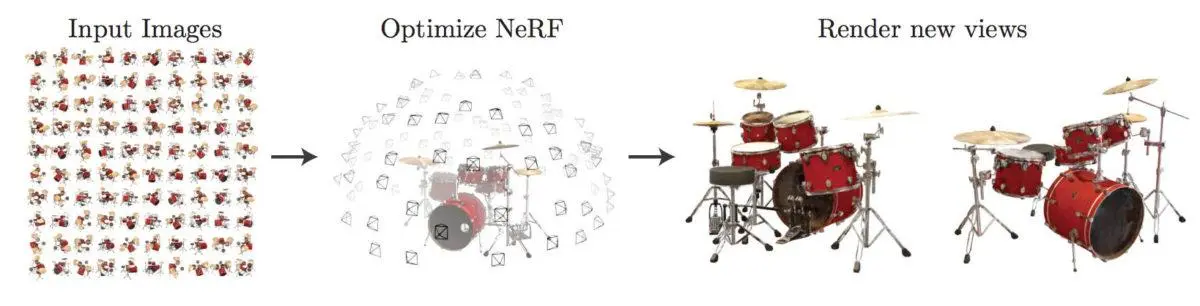

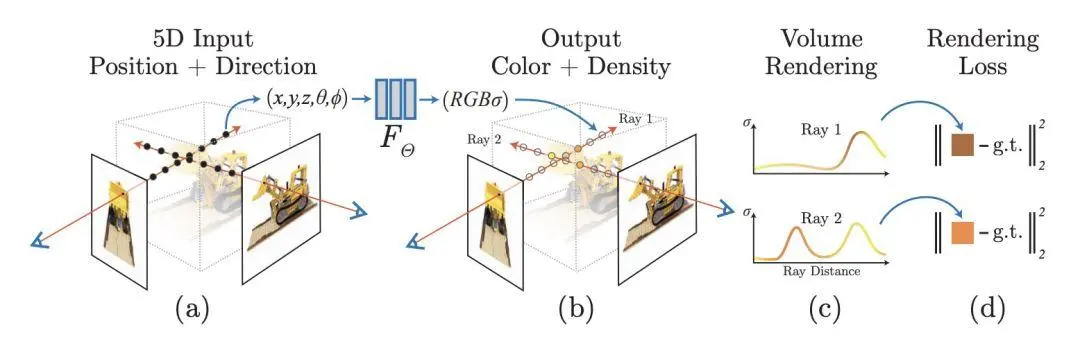

Keywords: game engine, photogrammetry, neural radiance fields, volumetric rendering

Recently, Luma AI released the first version of its Unreal Engine 5 plugin (Windows only). The plugin renders scenes with volumetric rendering running locally. This means a scene no longer has to be represented as a traditional 3D model built from meshes, geometry, and materials. According to user tests, an RTX 2060 graphics card with 6 GB of VRAM is enough to run it smoothly.

Luma is a mobile app that uses NeRF (Neural Radiance Fields) to reconstruct 3D objects or scenes. Put simply, it uses NeRF for photogrammetry. Its key features include friendly capture guidance, good reconstruction quality, and the ability to generate videos from reconstructed models. It also supports exporting to multiple formats: OBJ, glTF, USDZ, and PLY. With this UE5 plugin, Luma AI also supports exporting a Luma Field file and importing it into UE5 for direct volumetric rendering.

The process has 5 steps:

- Download the sample project and unzip it

- Double-click the .uproject file to open it in UE5

- Download your own Luma Field file (you need to capture the object or scene with the Luma app in advance)

- Drag the downloaded file into the Content Browser in Unreal Editor

- Wait a few seconds for an Unreal Blueprint to be generated from the NeRF file, then drag it into your level to use it

Luma has stated that plugins for other 3D engines and 3D software will be released once the UE5 plugin is mature enough, so Unity and Blender users will have to wait.

NeRF was first applied to novel view synthesis. Because it can represent 3D information implicitly and powerfully, it quickly expanded into 3D reconstruction. Compared with traditional photogrammetry algorithms, it can produce 3D models with fewer holes and higher surface quality.

NeRF does not store a mesh. It represents a scene implicitly, as a neural network that maps each 3D position and viewing direction to a color and a density, and images are produced by volume rendering along camera rays. To use a NeRF in 3D games or VR, it normally has to be converted into a traditional mesh model. With this UE5 plugin, no conversion is required, and volumetric rendering can be performed directly in UE5.

Another mobile 3D scanning app on the market is Epic Games’ RealityScan, Unreal Engine’s own scanning service, but it does not yet support exporting for volumetric rendering.
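The sketch below illustrates what “volume rendering directly” means. It applies the standard NeRF compositing rule to the samples along a single camera ray, C = Σᵢ Tᵢ (1 − exp(−σᵢ δᵢ)) cᵢ. Here σᵢ is the density at sample i, δᵢ is the spacing to the next sample, cᵢ is the sample's color, and Tᵢ is the light that survives the earlier samples. In a trained NeRF, the densities and colors would come from querying the network at each sample point. Here they are passed in by hand. This is the textbook formulation, not Luma's renderer, whose internals the announcement does not describe.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def render_ray(densities: Sequence[float], colors: Sequence[RGB],
               deltas: Sequence[float]) -> RGB:
    """Front-to-back volume rendering of the samples taken along one camera ray.

    densities[i] is the volume density at sample i, colors[i] its RGB color, and
    deltas[i] the distance to the next sample. Empty space contributes nothing,
    and anything behind an opaque sample is hidden.
    """
    transmittance = 1.0          # share of light from this depth that still reaches the camera
    out = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this ray segment
        weight = transmittance * alpha           # how much this sample shows up in the pixel
        for k in range(3):
            out[k] += weight * color[k]
        transmittance *= 1.0 - alpha             # light blocked by this segment
    return (out[0], out[1], out[2])


# Empty space, then a dense red surface, then a blue sample hidden behind it.
pixel = render_ray(
    densities=[0.0, 1000.0, 1000.0],
    colors=[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
    deltas=[0.1, 0.1, 0.1],
)
print(pixel)  # very close to (1.0, 0.0, 0.0)
```

Because a pixel is computed by marching through the field rather than by rasterizing triangles, a renderer like this needs no mesh. It also explains why volumetric rendering costs more GPU work per pixel than drawing a mesh.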

OpenXR Toolkit adds support for eye tracking on Meta Quest Pro

Keywords: Meta Quest Pro, eye tracking, OpenXR

Since March 2022, OpenXR Toolkit has supported eye-tracked foveated rendering on the Varjo Aero and Pimax headsets. It now adds support for the Quest Pro.

OpenXR Toolkit is a free, open-source project by Microsoft employee mbucchia. It brings capabilities that OpenXR-based PC VR apps do not support natively, such as image upscaling (NVIDIA NIS and AMD FSR), hand tracking, static and dynamic foveated rendering, and more.

Eye-tracked foveated rendering (ETFR) is an emerging technology. It renders only the small area the eyes are currently looking at in full resolution, and renders the rest at lower resolution. Because the periphery costs much less to shade, the freed-up GPU resources can go toward higher frame rates, higher rendering resolution, or higher graphics settings. Eye tracking can also make interaction in games feel more natural and help players become more immersed. In short, eye tracking is a very promising technology, and OpenXR Toolkit’s support will bring better gaming experiences to more players.

OpenXR is a royalty-free open standard from the Khronos Group. It simplifies AR/VR development by letting developers target a wide range of AR/VR devices through a single API. Khronos has been working to unify an XR ecosystem that was fragmented in the absence of a standard. Meta, Sony, Valve, Microsoft, HTC, NVIDIA, and AMD have all embraced the standard.
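The sketch below makes the performance argument concrete. It splits the screen into tiles, estimates each tile's angular distance from the gaze point, and shades distant tiles at a coarser rate (N × N pixels share one shading result). It then reports how much shading work remains compared with full resolution. The tile size, the two eccentricity thresholds, and the linear pixels-per-degree approximation are illustrative assumptions, not values from OpenXR Toolkit or any headset. Real implementations typically rely on GPU features such as variable rate shading.

```python
import math
from typing import Tuple


def eccentricity_deg(px: float, py: float, gaze: Tuple[float, float],
                     pixels_per_degree: float) -> float:
    """Approximate angular distance (degrees) between a pixel and the gaze point."""
    return math.hypot(px - gaze[0], py - gaze[1]) / pixels_per_degree


def shading_rate(ecc_deg: float, fovea_deg: float = 10.0, mid_deg: float = 25.0) -> int:
    """Return N for N x N coarse shading: 1 = full rate, 2 = half, 4 = quarter.

    The thresholds are illustrative defaults, not values from any real product.
    """
    if ecc_deg <= fovea_deg:
        return 1
    if ecc_deg <= mid_deg:
        return 2
    return 4


def relative_shading_cost(width: int, height: int, gaze: Tuple[float, float],
                          pixels_per_degree: float, tile: int = 16) -> float:
    """Fraction of full-resolution shading work left after foveation (1.0 = no savings)."""
    total, tiles = 0.0, 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            center_x, center_y = tx + tile / 2, ty + tile / 2
            rate = shading_rate(eccentricity_deg(center_x, center_y, gaze, pixels_per_degree))
            total += 1.0 / (rate * rate)   # an N x N rate shades 1 / N^2 of the pixels
            tiles += 1
    return total / tiles


cost = relative_shading_cost(2000, 2000, gaze=(1000, 1000), pixels_per_degree=20)
print(f"{cost:.0%} of full-rate shading work")
```

With eye tracking, `gaze` follows the user's eyes every frame. Fixed (static) foveated rendering would instead pin it to the lens center, so it has to keep a larger full-rate region to stay safe.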

NuEyes to Launch Next-Gen Smart Glasses for Medical and Dental Markets

Keywords: smart glasses, medical AR, medical VR

NuEyes is set to launch NuLoupes, smart glasses aimed at disrupting the surgical and dental loupes market and bringing convenience to medical professionals and patients.

For doctors, NuLoupes’ high-resolution variable digital magnification provides sub-millimeter depth perception, allowing doctors to better understand the environment they are looking at. In addition, NuLoupes also features real-time 3D stereoscopic imaging and a robust ecosystem of augmented reality apps and content tailored for clinical use. These capabilities enable doctors to more accurately diagnose and treat diseases, improving efficiency and precision of surgeries and treatments.

For patients, NuLoupes also provides a better treatment experience. For example, with NuLoupes doctors can use AR apps and content for narration or note-taking, object recognition, telehealth, and 3D stereoscopic live streaming, while viewing patient data and imaging within their field of view. These features help patients better understand their conditions and their doctors’ treatment plans.

https://youtu.be/9XrB7japRXc?si=zhxuuitzjdO0fk_w

In addition to smart glasses, virtual reality (VR) and augmented reality (AR) technologies also have many potential applications in healthcare. Medical students and interns can use VR for immersive simulation of surgeries and clinical practice to enhance their skills and confidence. Additionally, AR can provide real-time guidance and cues for doctors to facilitate diagnosis and treatment.

VR can help distract patients from pain and discomfort. Studies show VR can reduce pain after surgery and minimize the need for pain medication. VR can also help treat psychological conditions like anxiety, depression, and PTSD, offering immersive experiences that help patients learn coping and emotional regulation skills. AR can offer real-time feedback and guidance that helps patients perform correct movements and postures during rehabilitation.

VR and AR have enormous potential in healthcare. They help doctors improve diagnosis and treatment, and they help patients better manage diseases and recover.

Idea

Jim Henson’s The Storyteller: Taking the reading experience to the next level with AR

Keywords: AR, creativity, reading, concept video

https://youtu.be/LKH1lwbrUJE?si=rRRQx1pFE2aBZXd8

Electronic devices and physical books don’t have to be at odds. In the eyes of Emmy Award-winning studio Felix & Paul Studios, the two can even complement each other.

In this video, Felix & Paul Studios introduces how to use augmented reality (AR) technology to enhance the reading experience. By adding AR markers to printed materials, readers can use AR glasses to “see” these books in a different way. When readers open the book, the castles in the book can be presented as 3D models, accompanied by vivid animations and realistic sound effects, allowing readers to immerse themselves in the book’s scenes.

Building on this concept, AR glasses can enable even more realistic interactions. In the video, for example, when the reader reaches a scene full of seawater and tilts the book, the water appears to flow out of the pages. The realism is quite impressive.

Creating AR Scenes with ChatGPT

Keywords: ChatGPT, WebAR

Experimenting with ChatGPT and WebAR. I was able to build this AR scene by talking to a chatbot 🤩🤩. #chatgpt #openai #threejs #aframe #8thwall pic.twitter.com/F5f7SSI0Vl

— Stijn Spanhove (@stspanho) December 4, 2022

Recently, AI company OpenAI released a conversational model called ChatGPT. The model is remarkably versatile, and creators have run many interesting experiments with it. These include movie outlines, Blender Python scripts, “Choose Your Own Adventure” stories, and even a rap battle between fintech and banks. The experiments show off ChatGPT’s impressive capabilities.

Software engineer Stijn Spanhove has also run some fun experiments with ChatGPT in augmented reality (AR). He built an AR scene with WebAR simply by talking to ChatGPT. He had previously shown AI experiments in AR as well, using Stable Diffusion’s inpainting capability to generate artwork in a selected area.

Before Your Eyes — A Beautiful VR Narrative Art Showcase

Keywords: PlayStation VR2, VR games

“Before Your Eyes” is a game originally released on PC in 2021 and on mobile last year, and it now debuts on PlayStation VR2. Players take on the role of a child named Benny, reliving memories of his upbringing and family life and exploring his artistic talents. Players interact by blinking and by manipulating objects they see in memories, such as flipping pages, playing the piano, and drawing.

Blinking can also cause players to miss interaction opportunities. When a metronome icon appears in a memory, it signals that the next blink will jump to another scene, but you don’t know how far ahead. The small metronome sometimes shows up during dialogue too, so a blink can cut a memory short and make you miss part of the scene. While this is annoying, it also adds to the game’s dreamlike quality, much like real memories in which certain parts can’t be recalled.

This is an extremely compelling VR narrative that allows players to experience the emotions within the story first-hand.

Making Cosmonious High Accessible for Blind and Low Vision Users

Keywords: accessible gaming, vision, touch, blindness

Owlchemy Labs is committed to creating virtual reality games for everyone, and its Accessibility Statement pledges ongoing efforts in this area. The team has previously prioritized major accessibility work such as subtitles and physical and height accessibility. They encourage every developer to consider accessibility in their creations and to proactively ship accessible features at launch and in updates.

Their latest additions to Cosmonious High, including single-controller mode, seated mode, and subtitles, show their continued exploration of accessible gaming. In their view, a product that can only be played by people without disabilities is incomplete. They keep pushing new frontiers through research, testing, and collaboration so that the widest possible audience can play their games.

They emphasize that developing games for blind and low-vision players takes more than adding audio cues and options. Blindness is a spectrum, so fully accommodating blind and low-vision players also means considering touch and residual vision.

To achieve this, they added haptic feedback that helps players identify objects when selecting them, which is critical for solving puzzles and completing tasks. Players can feel an object’s shape as they select it.

They also implemented high-contrast object outlines. Outlining key objects makes them easier to see for players with residual vision, and haptic feedback on selection then helps players identify what they have picked.

VR Handheld Camera Simulator Made with UE5

Keywords: Unreal, photography simulation game

quick VR handheld cam tests today, feels like magic. suddenly it's not a game anymore #UE5 pic.twitter.com/Y1X5CIqLZH

— 🌿 (@lushfoil) March 15, 2023

Game developer Matt Newell recently shared new demos of his upcoming photography simulation game, Lushfoil. The core gameplay casts players as a travel photographer, and the game showcases meticulous digital recreations of real-world landmarks. This is not only visually pleasing, but also lets players learn about the background and cultural significance of the landmarks while exploring the world.

In this new demo, a brand-new VR handheld camera shows off the game’s realistic scenes in VR. According to Newell, the game is developed with Unreal Engine 5, which makes its visuals more intricate and realistic and immerses players further. The feature adds entertainment value and also lets players explore the game world more closely and discover more surprises.

Code

RealityUI

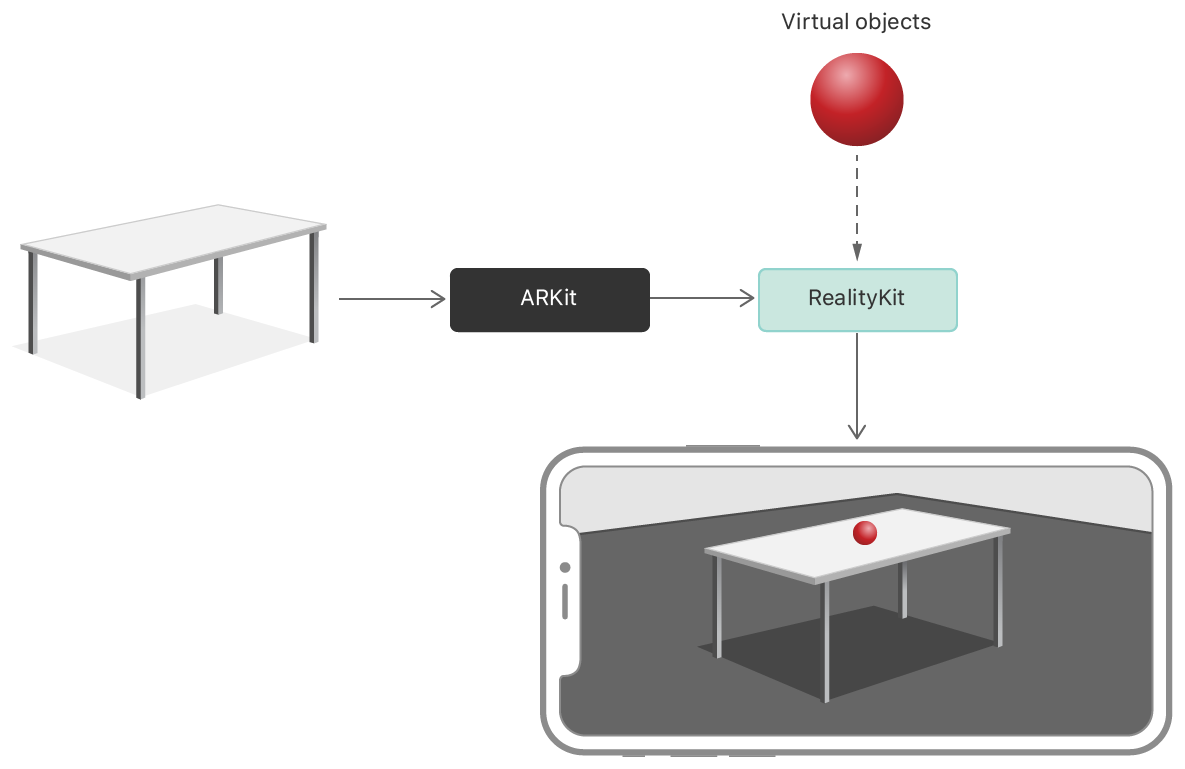

Keywords: Apple AR, RealityKit, iPhone

RealityUI is an open-source collection of AR interaction components built on Apple’s RealityKit framework. Its main goal is to make developing interactive AR apps easier and faster.

In 2019, Apple introduced RealityKit, a new Swift framework built from the ground up for AR development. It focuses on physically based rendering (PBR) of virtual objects and on accurately simulating their behavior in real environments, including physics, environment reflections, and ambient light estimation. Because it is designed specifically for rendering virtual elements in real settings, all of its capabilities serve to make AR experiences more realistic and immersive. Thanks to Swift, RealityKit also has a clean API that is simple to use, which makes life much easier for AR developers.

RealityUI takes RealityKit’s basic 3D geometries further by assembling them into controls that mimic familiar 2D iOS UI elements, such as switches, sliders, steppers, and buttons. RealityUI follows the Entity Component System (ECS) architecture and uses PBR rendering. It also comes with built-in gestures and animations, so developers can add interactivity to their AR apps more easily.
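In an Entity Component System, an entity is just an identifier. Data lives in components attached to it, and behavior lives in systems that process every entity with a given set of components. The Python sketch below shows that split for a toggle-switch control. It is written in Python only to illustrate the pattern. It is not RealityKit's `Entity`/`Component`/`System` API or RealityUI's code. The component names (`ToggleState`, `Tapped`) and the `World` class are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Tuple


@dataclass
class Transform:
    scale: float = 1.0


@dataclass
class ToggleState:      # hypothetical component: the state of a 3D on/off switch
    is_on: bool = False


@dataclass
class Tapped:           # hypothetical marker component, added when a tap gesture hits an entity
    pass


class World:
    """Entities are plain integer ids; each component type has its own table."""

    def __init__(self) -> None:
        self._next_id = 0
        self._tables: Dict[type, Dict[int, Any]] = {}

    def create_entity(self, *components: Any) -> int:
        entity = self._next_id
        self._next_id += 1
        for component in components:
            self.add(entity, component)
        return entity

    def add(self, entity: int, component: Any) -> None:
        self._tables.setdefault(type(component), {})[entity] = component

    def remove(self, entity: int, ctype: type) -> None:
        self._tables.get(ctype, {}).pop(entity, None)

    def get(self, entity: int, ctype: type) -> Optional[Any]:
        return self._tables.get(ctype, {}).get(entity)

    def query(self, *ctypes: type) -> List[Tuple[Any, ...]]:
        """(entity, component, ...) for every entity that has all of the given types."""
        tables = [self._tables.get(t, {}) for t in ctypes]
        if not tables:
            return []
        ids = set(tables[0]).intersection(*tables[1:])
        return [(entity, *(table[entity] for table in tables)) for entity in sorted(ids)]


def toggle_system(world: World) -> None:
    """System: flip every tapped switch and scale it up slightly as visual feedback."""
    for entity, state, transform, _ in world.query(ToggleState, Transform, Tapped):
        state.is_on = not state.is_on
        transform.scale = 1.1 if state.is_on else 1.0
        world.remove(entity, Tapped)   # the tap has been handled


world = World()
switch = world.create_entity(Transform(), ToggleState())
world.add(switch, Tapped())   # e.g. a gesture handler reports a tap
toggle_system(world)
print(world.get(switch, ToggleState))   # ToggleState(is_on=True)
```

The gesture code only attaches a marker component, and the system decides what a tap means. This decoupling is what lets an ECS-based UI library add new control types without changing how input is delivered.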

Unity Core ML Stable Diffusion Plugin

Keywords: game engine, generative AI

This plugin lets Unity run Stable Diffusion natively through Apple’s Core ML, so Unity can use the CPU, GPU, and Apple Neural Engine (ANE) of Apple silicon for local inference. No internet connection is needed, which avoids the privacy concerns of sending data to a server. Because it depends on Core ML, the plugin runs only on Apple devices. On Apple silicon Macs it works both in the Editor and in packaged builds, while on iPad Pro it supports packaged builds only.

Requirements:

- Unity 2023.1

- Apple Silicon Mac (editor/runtime support), macOS 13.1

- iPad Pro with Apple silicon (runtime support), iOS 16.2

Refer to the provided sample project Flipbook3 for usage details.

Tool

Bezel — Browser-based design tool for quick VR/AR demo output

Keywords: AR creation tool, Web AR service

Bezel is a browser-based design tool that helps designers quickly create VR/AR demos. With Bezel, designers can build highly interactive virtual and augmented reality experiences without any coding knowledge. Its intuitive UI makes it easy to add and manage 3D models and animations in a variety of file formats. It also provides rich asset libraries of textures, lighting, and audio to help designers realize their visions. For VR/AR designers and anyone interested in the field, Bezel is well worth trying. Some people have already published tutorials on combining Bezel with Blender to build quick demos.

MyWebAR — A no-code web service for creating AR projects

Keywords: AR creation tool, Web AR service

MyWebAR is an augmented reality service built on the web platform. Unlike earlier app-based solutions, it supports legacy devices and can even run on low-end laptops and Chromebooks. This makes MyWebAR a very cost-effective AR solution that gives more people access to AR experiences.

If you are a creator, blogger, or artist, you’ll find MyWebAR a great choice. You can use MyWebAR’s services for free to create AR projects, as long as they are for non-commercial use. Whether you want to build an AR exhibit or incorporate AR elements into your work, MyWebAR is a great tool for bringing your creative ideas to life.

SkyboxLab — Generate stunning 360° skyboxes from text prompts

Keywords: panoramic images, generative AI, skyboxes

SkyboxLab is an AI-powered panoramic image generation tool that lets you create a stunning virtual space within seconds. Simply enter a text prompt and select a style, and SkyboxLab generates a vast 360° panorama from it. Better still, these panoramas can be imported into other tools such as Unity, which can greatly boost your productivity.

SkyboxLab can also add new elements such as trees and buildings to the images to enrich a scene. You can also choose different weather, tones, and so on to convey different moods. These features are very practical and give you more creative freedom.

Compared with other panorama generation tools, SkyboxLab’s panoramas are highly realistic and detailed, making you feel transported to an actual place. SkyboxLab also offers a variety of styles, such as sci-fi, urban, and nature, to suit your needs.
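When a panorama is used as a skybox, the engine turns each view direction into a pixel of the image. The sketch below assumes SkyboxLab exports a standard equirectangular (2:1, latitude/longitude) image, the usual format for 360° skyboxes. That format assumption, the axis convention (+y up, +z at the image center), and the function names are ours. Engines differ in orientation, so a real import may need the image rotated or flipped.

```python
import math
from typing import Tuple


def direction_to_equirect_uv(x: float, y: float, z: float) -> Tuple[float, float]:
    """Map a view direction to (u, v) in [0, 1] on an equirectangular (2:1) panorama.

    Convention assumed here: +y is up and +z is "forward", which lands at the
    image center. u follows longitude (around the horizon), v follows latitude
    (0 at the zenith, 1 at the nadir).
    """
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    u = 0.5 + math.atan2(x, z) / (2.0 * math.pi)
    v = 0.5 - math.asin(max(-1.0, min(1.0, y))) / math.pi   # clamp guards rounding error
    return u, v


def uv_to_pixel(u: float, v: float, width: int, height: int) -> Tuple[int, int]:
    """Convert (u, v) to integer (column, row) pixel coordinates, clamped to the image."""
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


# Looking straight ahead samples the middle of a 4096 x 2048 panorama.
u, v = direction_to_equirect_uv(0.0, 0.0, 1.0)
print(uv_to_pixel(u, v, 4096, 2048))   # (2048, 1024)
```

Because longitude spans the full image width and latitude only half as much, the image must be twice as wide as it is tall. This is why 360° panoramas are usually delivered at a 2:1 aspect ratio.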

SkyboxLab is an excellent panoramic image generation tool that is easy to use, full-featured and produces realistic effects. If you need to create panoramas, try out SkyboxLab which will make your creative process smoother.

XR 基地
